This repository contains Terraform automation for deploying Polygon Supernet infrastructure on AWS.

Polygon Supernets is Polygon's solution to build and power dedicated app-chains. Supernets are powered by Polygon's cutting-edge EVM technology, industry-leading blockchain tools and premium end-to-end support.

To find out more about Polygon, visit the official website.

If you'd like to learn more about Polygon Supernets, how they work, and how you can use them for your project, check out the Polygon Supernets Documentation.

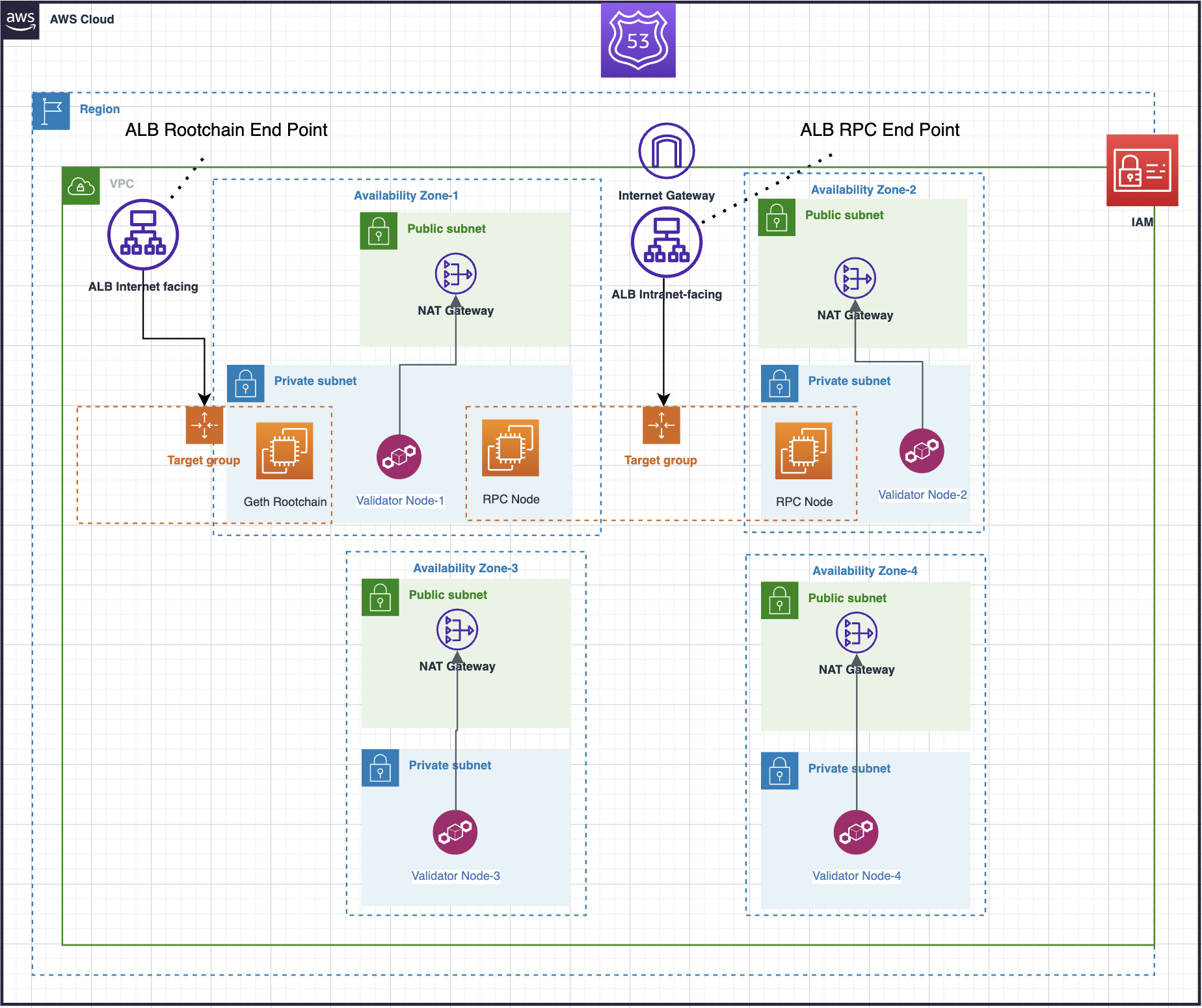

The following resources will be deployed using Terraform:

- Dedicated VPC

- 4 validator nodes (which are also bootnodes)

- 1 rootchain (L1) node running Geth

- Application Load Balancer used for exposing the JSON-RPC endpoint

To use this automation, you'll need the following tools installed:

| Name | Version |
|---|---|
| terraform | >= 1.4.4 |
| Session Manager Plugin | |
| aws cli | >= 2.11.0 |
| ansible | >= 2.14 |
| boto3 | >= 1.26 |
| botocore | >= 1.29 |
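Before starting, it can help to confirm that the tools in the table are installed. The following is a minimal sketch, not part of this repository: `version_ge` and `require_cmd` are hypothetical helper names, the version check assumes a `sort` that supports `-V` (GNU coreutils does), and `session-manager-plugin` is the binary installed by the AWS Session Manager Plugin.

```shell
#!/usr/bin/env bash
# Hypothetical preflight helpers (not shipped with this repository).

# version_ge A B: succeed if dotted version A >= version B.
# `sort -V` orders version strings naturally, so if B sorts first
# (or A and B are equal), A is at least B.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# require_cmd NAME: print "ok: NAME" if NAME is on PATH, else fail.
require_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "ok: $1"
  else
    echo "missing: $1" >&2
    return 1
  fi
}

# Example: report which CLI tools from the table are installed.
for tool in terraform aws ansible session-manager-plugin; do
  require_cmd "$tool" || true
done
```

For example, `version_ge 1.5.7 1.4.4` succeeds while `version_ge 1.3.9 1.4.4` fails; you would substitute the version printed by `terraform version` or `aws --version`. `sort -V` is used instead of a plain string comparison, which would wrongly rank `1.10.0` below `1.4.4`.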

- First, clone the project repository and change into its directory:

```shell
git clone git@github.com:maticnetwork/terraform-polygon-supernets.git
cd terraform-polygon-supernets
```

- Next, make sure your command line can execute AWS commands. To use AWS services, you must set up your AWS credentials. There are two ways to establish them: using the AWS CLI, or configuring them manually through your AWS console. For more information about setting up AWS credentials, see the AWS documentation.

You can use `aws configure`, `aws configure sso`, or set the appropriate values in `~/.aws/credentials` or `~/.aws/config`. Alternatively, you can set access keys directly as environment variables, as shown below:

```shell
export AWS_ACCESS_KEY_ID=AKIAIOSFODNN7EXAMPLE
export AWS_SECRET_ACCESS_KEY=wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY
export AWS_DEFAULT_REGION=us-west-2
```

Note: Ensure that the AWS user or role you're using has the permissions needed to create all resources defined in the `/modules` directory.

Before proceeding, verify that your AWS CLI is properly authenticated by running:

```shell
aws sts get-caller-identity
```

If the command runs successfully, you are ready to continue.
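The same check can be scripted so that later steps stop early when credentials are missing. This is a sketch under assumptions: `check_aws_auth` is a hypothetical helper, and it relies only on `aws sts get-caller-identity` with the CLI's standard `--query` and `--output` options.

```shell
#!/usr/bin/env bash
# check_aws_auth: fail fast if the AWS CLI cannot authenticate (hypothetical helper).
check_aws_auth() {
  local account
  # --query Account extracts the account ID; --output text drops the JSON quoting.
  if ! account="$(aws sts get-caller-identity --query Account --output text 2>/dev/null)"; then
    echo "AWS CLI is not authenticated; run 'aws configure' or export credentials." >&2
    return 1
  fi
  # AWS_DEFAULT_REGION is only reported if set in the environment.
  echo "Authenticated to AWS account ${account} (AWS_DEFAULT_REGION=${AWS_DEFAULT_REGION:-unset})"
}
```

You could call `check_aws_auth || exit 1` at the top of your own wrapper scripts before running Terraform.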

- A few environment variables should be set before provisioning the infrastructure. Customize `example.env` based on your requirements, then run the commands below to load it into your local environment:

```shell
set -a
source example.env
set +a
```

To verify that your environment variables have been set correctly, run `env | grep -i tf_var`. This displays the variables so you can confirm they match the values specified in `example.env`.

The full list of configurable variables is in `variables.tf`.
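The `set -a` / `set +a` pair matters because Terraform reads input variables from environment variables named `TF_VAR_<name>`, and those must be exported to reach the `terraform` process. The sketch below demonstrates the behavior with a throwaway file; `load_env_file` and `TF_VAR_example_var` are hypothetical names, not taken from `example.env`.

```shell
#!/usr/bin/env bash
# load_env_file FILE: source FILE with auto-export enabled (hypothetical helper).
load_env_file() {
  set -a      # every variable assigned from now on is exported
  . "$1"      # `.` is the POSIX spelling of `source`
  set +a      # turn auto-export back off
}

# Demonstration with a temporary file and a made-up variable name.
demo_env="$(mktemp)"
printf 'TF_VAR_example_var=devnet01\n' > "$demo_env"
load_env_file "$demo_env"
rm -f "$demo_env"

# A child process (like terraform) now inherits the value.
sh -c 'echo "child sees: $TF_VAR_example_var"'
```

Without `set -a`, sourcing a file of plain `NAME=value` lines would set the variables only in the current shell, and child processes such as Terraform would not see them.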

- Now run `terraform init` to download and install the provider plugins used to interact with AWS, and to initialize the backend that stores the state file:

```shell
terraform init
```

- Next, we're going to "plan" and "apply" using Terraform. While working iteratively on your Supernet, you'll typically make changes to the Terraform modules, preview the potential infrastructure modifications, and then apply the changes if they meet your expectations.

```shell
terraform plan
terraform apply
```
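When iterating, one option is to save the reviewed plan and apply exactly that file, so `apply` cannot pick up changes made after your review. A minimal sketch, assuming the hypothetical helper name `plan_and_apply` and an arbitrary plan file name; `terraform plan -out=FILE` and `terraform apply FILE` are standard Terraform CLI usage.

```shell
#!/usr/bin/env bash
# plan_and_apply [PLANFILE]: save a plan, ask for confirmation, apply that plan.
plan_and_apply() {
  local planfile="${1:-supernet.tfplan}" answer
  terraform plan -out="$planfile" || return 1
  printf 'Apply %s? [y/N] ' "$planfile" >&2
  read -r answer
  if [ "$answer" = "y" ]; then
    # A saved plan is applied without a second interactive prompt.
    terraform apply "$planfile"
  else
    echo "Skipped apply."
  fi
}
```

The prompt lives in the wrapper because applying a saved plan file skips Terraform's own confirmation step.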

- Applying the Terraform configuration generated a private key that grants you access to your VMs. To save this key, run the following commands:

```shell
terraform output pk_ansible > ~/devnet_private.key
chmod 600 ~/devnet_private.key
```
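A variant of these two commands creates the file with owner-only permissions from the start. This is a sketch, not the repository's method: `save_private_key` is a hypothetical helper, and it uses `terraform output -raw` (available since Terraform 0.15), which prints a string output without the quotes or `<<EOT` heredoc markers that plain `terraform output NAME` may add to a multi-line value. If your saved key file already contains a clean PEM block, the commands above are sufficient.

```shell
#!/usr/bin/env bash
# save_private_key [DEST]: write the pk_ansible output to DEST with mode 600.
save_private_key() {
  local dest="${1:-$HOME/devnet_private.key}"
  # umask 077 inside a subshell: the file is created as 0600 and the
  # caller's umask is left unchanged.
  ( umask 077 && terraform output -raw pk_ansible > "$dest" ) || return 1
  chmod 600 "$dest"   # also tightens permissions if DEST already existed
  # Print the permission string so you can confirm it (e.g. -rw-------).
  ls -l "$dest" | cut -c1-10
}
```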

By now, the necessary AWS infrastructure for operating a Supernet should be deployed. It's a good time to sign in to your AWS Console and examine the setup.

Next, we'll use Ansible to configure the VMs that we just deployed with Terraform.

- To begin, switch your working directory to the `ansible` folder:

```shell
cd ansible
```

- The Ansible playbooks require certain external collections. To install them, run:

```shell
ansible-galaxy install -r requirements.yml
```

- If you've changed your region, company name, or deployment name, remember to modify the `inventory/aws_ec2.yml` file accordingly:

```yaml
regions:
  - us-west-2
###
filters:
  tag:BaseDN: "<YOUR_DEPLOYMENT_NAME>.edge.<YOUR_COMPANY>.private"
```

The SSH configuration used by Ansible assumes that your default profile is configured for the same region where your VMs are deployed. Run `aws configure get region` and confirm that the default matches your inventory location.
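That comparison can be automated. The sketch below is illustrative only: `inventory_region` and `check_region` are hypothetical helpers, and the `awk` parsing is deliberately naive, assuming the first `- <region>` entry after `regions:` is the region you deploy to. `aws configure get region` is the same command mentioned above.

```shell
#!/usr/bin/env bash
# inventory_region FILE: print the first list entry under "regions:" (naive parse).
inventory_region() {
  awk '/^regions:/ { found = 1; next } found && /^[ \t]*-/ { print $2; exit }' "$1"
}

# check_region [FILE]: compare the inventory region with the CLI default region.
check_region() {
  local inv_region cli_region
  inv_region="$(inventory_region "${1:-inventory/aws_ec2.yml}")"
  cli_region="$(aws configure get region)"
  if [ "$inv_region" = "$cli_region" ]; then
    echo "Region OK: $cli_region"
  else
    echo "Mismatch: inventory=$inv_region, aws cli default=$cli_region" >&2
    return 1
  fi
}
```

For anything beyond a quick check, a real YAML parser (for example `yq`) would be more reliable than `awk`.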

- The `local-extra-vars.yml` file contains a number of values that are often adjusted during Supernet deployments. At the very least, make sure to update `clean_deploy_title` to match the deployment name you used. Other values, like `block_gas_limit` and `block_time`, significantly impact performance and should be set with care.

- To verify the availability of your instances, run the following command:

```shell
ansible-inventory --graph
```

This command lists the instances found by the inventory. At this stage, make sure your SSM and SSH configurations allow access to these instances by their instance IDs. To test SSM, run a command like the one below:

```shell
aws ssm start-session --target <instance-id>
```

An example instance ID is `i-0641e8ff5f2a31647`. When you run this command, you should get shell access to your VM.
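Instead of copying IDs from the inventory graph, you can look them up with the same `BaseDN` tag the inventory filters on. A sketch under assumptions: `BASE_DN` must be set to your own value (the default shown is the placeholder from `inventory/aws_ec2.yml`), `list_instance_ids` and `is_instance_id` are hypothetical helpers, and the ID check assumes the EC2 format of `i-` followed by 8 or 17 hexadecimal characters.

```shell
#!/usr/bin/env bash
# Placeholder: replace with the BaseDN tag value used by your deployment.
BASE_DN="${BASE_DN:-<YOUR_DEPLOYMENT_NAME>.edge.<YOUR_COMPANY>.private}"

# list_instance_ids: print the IDs of running instances carrying the BaseDN tag.
list_instance_ids() {
  aws ec2 describe-instances \
    --filters "Name=tag:BaseDN,Values=$BASE_DN" "Name=instance-state-name,Values=running" \
    --query 'Reservations[].Instances[].InstanceId' \
    --output text
}

# is_instance_id ID: succeed if ID looks like an EC2 instance ID.
is_instance_id() {
  printf '%s\n' "$1" | grep -Eq '^i-([0-9a-f]{8}|[0-9a-f]{17})$'
}
```

For example, `for id in $(list_instance_ids); do is_instance_id "$id" && echo "$id"; done` prints one ID per line, and any of them can be passed to `aws ssm start-session --target "$id"`.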

- Now that we've confirmed access to the VMs, verify that Ansible can reach the instances by running:

```shell
ansible --inventory inventory/aws_ec2.yml --extra-vars "@local-extra-vars.yml" all -m ping
```

- Run the Ansible playbook:

```shell
ansible-playbook --inventory inventory/aws_ec2.yml --extra-vars "@local-extra-vars.yml" site.yml
```

After the full playbook runs, you should have a functional Supernet. If you want to perform some tests, you can obtain your public RPC endpoint by running the following command from the repository root, where the Terraform configuration lives:

```shell
terraform output aws_lb_ext_domain
```

By default, we pre-fund the address `0x85da99c8a7c2c95964c8efd687e95e632fc533d6`, whose private key is `0x42b6e34dc21598a807dc19d7784c71b2a7a01f6480dc6f58258f78e539f1a1fa`. Using that private key and the RPC address from the previous step, you should be able to send transactions. The command below uses `cast` to send a test transaction:

```shell
cast send --legacy \
  --rpc-url https://ext-rpc-devnet13-edge-10623089.us-west-2.elb.amazonaws.com \
  --value 1 \
  --private-key 0x42b6e34dc21598a807dc19d7784c71b2a7a01f6480dc6f58258f78e539f1a1fa \
  0x9f34dCBECFC0BD4c1ee3d12e1Fb5DCF1A0b9BCcB
```
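You can also query the endpoint directly with `curl`, for example to confirm that the pre-funded account holds a balance. This is a sketch: `rpc_payload` and `rpc_call` are hypothetical helpers, `eth_getBalance` is a standard Ethereum JSON-RPC method, and setting `RPC_URL` from `terraform output -raw aws_lb_ext_domain` (run from the repository root) assumes that output is a bare hostname like the one in the `cast` example.

```shell
#!/usr/bin/env bash
# Placeholder; e.g. RPC_URL="https://$(terraform output -raw aws_lb_ext_domain)"
RPC_URL="${RPC_URL:-https://<aws_lb_ext_domain>}"

# rpc_payload METHOD PARAMS_JSON: build a JSON-RPC 2.0 request body.
rpc_payload() {
  printf '{"jsonrpc":"2.0","id":1,"method":"%s","params":%s}' "$1" "$2"
}

# rpc_call METHOD PARAMS_JSON: POST the request and print the raw JSON response.
rpc_call() {
  curl -s -X POST -H 'Content-Type: application/json' \
    --data "$(rpc_payload "$1" "$2")" "$RPC_URL"
}
```

For example, `rpc_call eth_getBalance '["0x85da99c8a7c2c95964c8efd687e95e632fc533d6","latest"]'` should return a response whose `result` field is the account balance in wei, hex-encoded.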

The command below uses `polycli` to run a simple load test. We don't need to specify a private key here because `0x42b6e34dc21598a807dc19d7784c71b2a7a01f6480dc6f58258f78e539f1a1fa` is the default load-test private key of polycli:

```shell
polycli loadtest --chain-id 1 --mode t --requests 10 \
  --verbosity 601 https://ext-rpc-devnet13-edge-10623089.us-west-2.elb.amazonaws.com
```
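The load test above passes `--chain-id 1`. If you want to confirm your Supernet's actual chain ID before running it, the standard `eth_chainId` JSON-RPC method returns it as a hex string. A minimal sketch: `rpc_result` and `hex_to_dec` are hypothetical helpers, the `sed` extraction assumes a flat single-line response (`jq` would be more robust), and `RPC_URL` is a placeholder for your endpoint.

```shell
#!/usr/bin/env bash
# rpc_result: extract the "result" string from a single-line JSON-RPC response.
rpc_result() {
  sed -n 's/.*"result":"\([^"]*\)".*/\1/p'
}

# hex_to_dec HEX: convert a 0x-prefixed hex string to decimal.
hex_to_dec() {
  printf '%d\n' "$1"
}

# Example (requires a reachable endpoint in RPC_URL):
# chain_hex="$(curl -s -X POST -H 'Content-Type: application/json' \
#   --data '{"jsonrpc":"2.0","id":1,"method":"eth_chainId","params":[]}' "$RPC_URL" | rpc_result)"
# echo "chain ID: $(hex_to_dec "$chain_hex")"
```

For example, a response of `{"jsonrpc":"2.0","id":1,"result":"0x64"}` yields `100`.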

To tear down the infrastructure, run the following command from the repository root:

```shell
terraform destroy
```
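If you prefer to review the deletions first, a sketch like the one below previews them with `terraform plan -destroy`, runs `terraform destroy` (which still asks for confirmation unless `-auto-approve` is given), and then removes the local key saved earlier. `teardown` is a hypothetical helper, and deleting the key is optional cleanup rather than a documented step.

```shell
#!/usr/bin/env bash
# teardown [KEYFILE]: preview, destroy, then delete the local SSH key (hypothetical helper).
teardown() {
  local key="${1:-$HOME/devnet_private.key}"
  terraform plan -destroy || return 1   # show what would be removed
  terraform destroy || return 1         # prompts for confirmation by default
  rm -f "$key"                          # the key is useless once the VMs are gone
}
```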

Take a look at Supernet Set Up

Take a look at Load Testing