The AWS Media2Cloud solution demonstrates a serverless ingest and analysis framework that quickly sets up a baseline workflow for placing video and image assets, and their associated metadata, under the management control of an AWS customer. The solution sets up the core building blocks that are common in an ingest and analysis strategy:

- Establish a storage policy that manages master materials as well as proxies generated by the ingest process.

- Provide a unique identifier (UUID) for each master video asset.

- Calculate and provide an MD5 checksum.

- Perform a technical metadata extract against the master asset.

- Build standardized proxies for use in a media asset management solution.

- Run the proxies through audio, video, and image analysis.

- Provide a serverless dashboard that allows a developer to set up and monitor the ingest and analysis process.
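As a rough illustration of the identifier and checksum steps listed above, the following Node.js sketch assigns a UUID and computes an MD5 checksum for an in-memory buffer. It is not the solution's implementation: `describeAsset` is a hypothetical helper name, and a real ingest process would likely hash large files as a stream rather than loading them into memory.

```javascript
const crypto = require('crypto');

// Hypothetical helper (not part of Media2Cloud): returns a fresh UUID and the
// hex-encoded MD5 checksum of the given buffer.
function describeAsset(buffer) {
  return {
    uuid: crypto.randomUUID(), // available in Node.js 14.17 and later
    md5: crypto.createHash('md5').update(buffer).digest('hex'),
  };
}

const asset = describeAsset(Buffer.from('hello'));
console.log(asset.md5); // 5d41402abc4b2a76b9719d911017c592
```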

For more information and a detailed deployment guide visit the Media2Cloud Solution at https://aws.amazon.com/answers/media-entertainment/media2cloud/.

- Clone the repository, then make the desired code changes

- Next, run the unit tests to make sure your customizations pass

cd ./deployment

bash ./run-unit-tests.sh

- Configure the bucket name of your target Amazon S3 distribution bucket. Note: you must create an S3 bucket that follows this naming convention:

MY_BUCKET_BASENAME-<aws-region>

where

- MY_BUCKET_BASENAME is the basename of your bucket

- <aws-region> is where you are testing the customized solution.

For instance, create a bucket named my-media2cloud-eu-west-1, where your bucket basename is my-media2cloud.

Also, the templates and packages stored in this bucket should be publicly accessible.

- Now build the distributable:

bash ./build-s3-dist.sh --bucket MY_BUCKET_BASENAME

- Deploy the distributable to an Amazon S3 bucket in your account. Note: you must have the AWS Command Line Interface installed.

bash ./deploy-s3-dist.sh --bucket MY_BUCKET_BASENAME --region eu-west-1

- Get the link of the media2cloud-deploy.template uploaded to your Amazon S3 bucket.

- Deploy the Media2Cloud Solution to your account by launching a new AWS CloudFormation stack using the link of the media2cloud-deploy.template.
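The bucket naming convention and the template link used in the steps above can be sketched in a few lines of Node.js. This is only an illustration: `regionalBucket` and `templateUrl` are hypothetical helpers, and the object key passed to `templateUrl` is a placeholder, so substitute the key that deploy-s3-dist.sh actually uploads the template to.

```javascript
// Regional bucket name following the MY_BUCKET_BASENAME-<aws-region> convention.
function regionalBucket(basename, region) {
  return `${basename}-${region}`;
}

// Virtual-hosted-style S3 URL for an object in the regional bucket.
// `key` is an assumption supplied by the caller, not a path defined here.
function templateUrl(basename, region, key) {
  const bucket = regionalBucket(basename, region);
  const encodedKey = key.split('/').map(encodeURIComponent).join('/');
  return `https://${bucket}.s3.${region}.amazonaws.com/${encodedKey}`;
}

console.log(regionalBucket('my-media2cloud', 'eu-west-1'));
// my-media2cloud-eu-west-1
```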

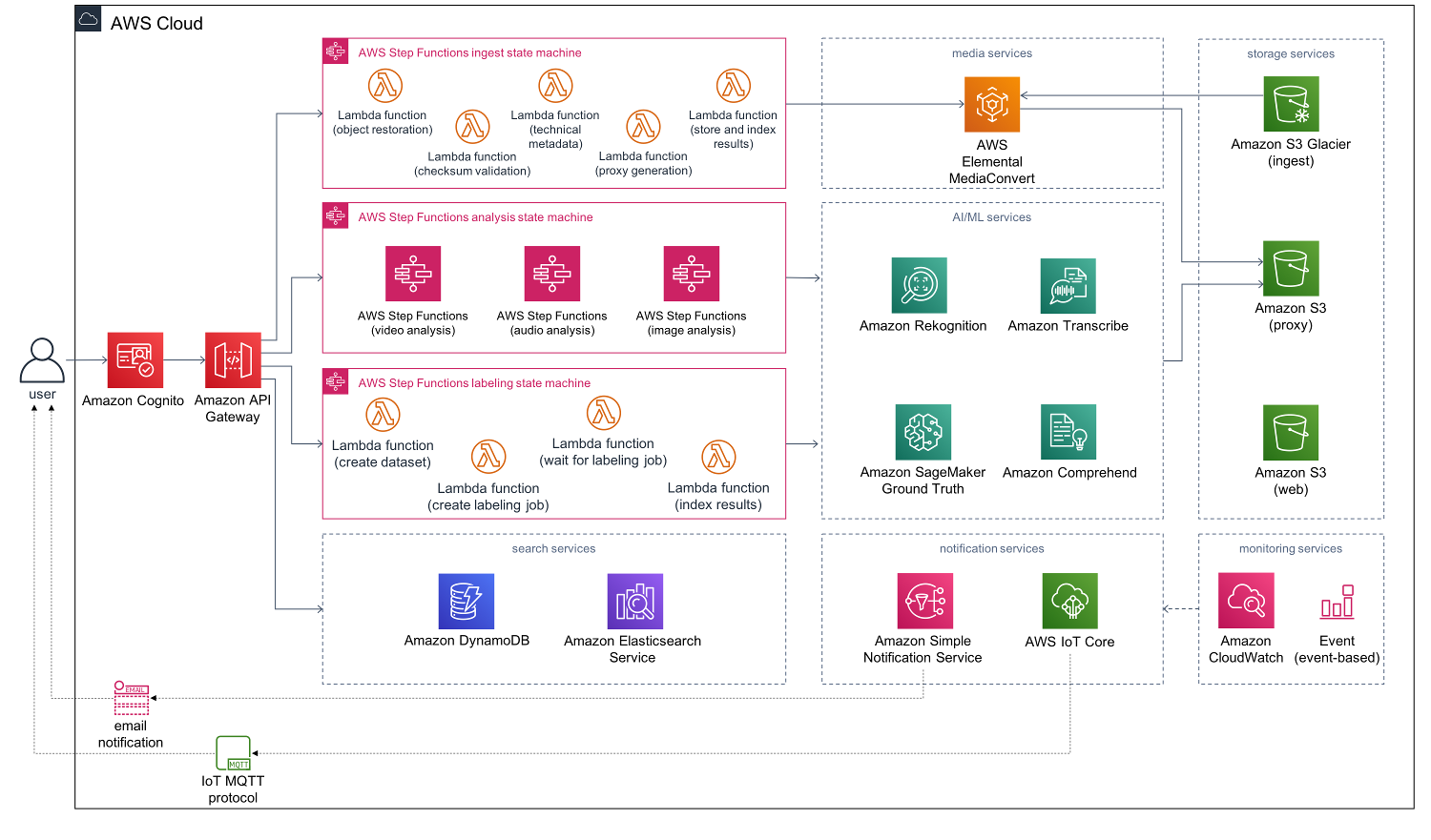

The Media2Cloud Solution consists of a demo web portal; ingest, audio, video, and image analysis layers; a labeling job; a search and storage layer; and an API layer.

- The demo web portal uses the jQuery framework and interacts with Amazon S3, Amazon API Gateway, AWS IoT Core, and Amazon Cognito.

- The ingest orchestration layer is an AWS Step Functions state machine that restores the uploaded file, computes its checksum, extracts EXIF and MediaInfo metadata, and creates proxy and thumbnail images.

- The analysis orchestration layers (audio, video, image) are AWS Step Functions state machines that coordinate metadata extraction from Amazon AI services.

- The search and storage layer uses Amazon Elasticsearch Service to index all metadata extracted from the ingest and analysis states and to provide search capability.

- The API layer provides simple APIs (assets, analysis, and search) to process HTTP requests from the web client.

| Path | Description |
|---|---|
| deployment/ | cloudformation templates and shell scripts |
| deployment/common.sh | shell script used by others |
| deployment/build-s3-dist.sh | shell script to build the solution |
| deployment/deploy-s3-dist.sh | shell script to deploy the solution |
| deployment/run-unit-tests.sh | shell script to run unit tests |
| deployment/media2cloud-deploy.yaml | solution CloudFormation main deployment template |
| deployment/media2cloud-api-stack.yaml | solution CloudFormation template for deploying API services |
| deployment/media2cloud-bucket-stack.yaml | solution CloudFormation template for deploying ingest and proxy buckets |
| deployment/media2cloud-groundtruth-stack.yaml | solution CloudFormation template for deploying AWS SageMaker Ground Truth resources |
| deployment/media2cloud-search-engine-stack.yaml | solution CloudFormation template for deploying AWS Elasticsearch search engine |
| deployment/media2cloud-state-machine-stack.yaml | solution CloudFormation template for deploying ingest, audio, video, image analysis state machines |
| deployment/media2cloud-webapp-stack.yaml | solution CloudFormation template for deploying webapp and Amazon CloudFront distribution |
| source/ | source code folder |
| source/analysis-monitor/ | microservice for monitoring image, audio, video state machines |
| source/api/ | microservice to handle API requests |
| source/audio-analysis/ | microservice for audio analysis state machine |
| source/audio-analysis/lib/comprehend/ | implementation for extracting keyphrases, entity, moderation via Amazon Comprehend service |
| source/audio-analysis/lib/transcribe/ | implementation for managing speech-to-text via Amazon Transcribe service |
| source/custom-resources/ | AWS CloudFormation custom resource for aiding the deployment of the solution |
| source/document-analysis/ | microservice for document analysis (Amazon Textract service). Currently disabled |
| source/error-handler/ | microservice for handling state machine errors via Amazon CloudWatch Events service |
| source/gt-labeling/ | microservice for labeling job state machine |
| source/image-analysis/ | microservice for image analysis state machine |
| source/ingest/ | microservice for ingest state machine |
| source/ingest/lib/checksum/ | implementation of MD5 checksum |
| source/ingest/lib/image-process/ | implementation of extracting exif and creating proxy image |
| source/ingest/lib/indexer/ | implementation of indexing technical metadata to AWS Elasticsearch engine |
| source/ingest/lib/s3restore/ | implementation of restoring s3 object from GLACIER or DEEP ARCHIVE storage classes |
| source/ingest/lib/transcode/ | implementation of creating video proxy via AWS Elemental MediaConvert service |
| source/s3event/ | microservice for s3event to auto-ingest asset on s3:objectCreated event. Currently disabled |
| source/video-analysis/ | microservice for video analysis state machine |
| source/webapp/ | web application package |
| source/webapp/public/ | static html and css files |
| source/webapp/src/ | webapp source folder |
| source/webapp/src/third_party/ | webapp 3rd party libraries |
| source/webapp/src/third_party/amazon-cognito-identity-bundle | Amazon Cognito library for handling web app authentication |
| source/webapp/src/third_party/aws-iot-sdk-browser-bundle/ | AWS IoT Core for subscribing IoT message topic via MQTT protocol |
| source/webapp/src/third_party/cropper-bundle/ | JS cropper for image cropping UI |
| source/webapp/src/third_party/mime-bundle/ | MIME library for type detection |
| source/webapp/src/third_party/spark-md5-bundle/ | SPARK library for computing checksum on client side |
| source/webapp/src/videojs-bundle/ | VideoJS library for video preview |
| source/layers/ | AWS Lambda layers |
| source/layers/aws-sdk-layer/ | latest version of AWS SDK for NodeJS |
| source/layers/core-lib/ | core library shared among state machines |
| source/fixity-lib/ | wrapper layer for SPARK and RUSHA libraries for MD5 and SHA1 checksums |
| source/image-process-lib/ | wrapper layer for exifinfo and Jimp libraries for image analysis |
| source/mediainfo/ | wrapper layer for mediainfo library for extracting media information |
| source/samples/ | sample codes |

Check out the mini workshop where you can learn how to use the RESTful APIs exposed by Media2Cloud solution, automate the ingest process, and automate the analysis process.

By default, Media2Cloud CloudFormation template creates three buckets for you: an ingest bucket that stores the uploaded files; a proxy bucket that stores proxy files, thumbnails, and analysis results; and a web bucket that stores the static assets for the web interface.

You can customize the CloudFormation template to use your own buckets instead of having the solution create them.

- Open deployment/media2cloud-bucket-stack.yaml with your text editor.

- Under the Mappings section, find the UserDefined group.

Mappings:
  ...
  UserDefined:
    Bucket:
      Ingest: ""
      Proxy: ""

- Set the Ingest key to the bucket name you would like to be your source bucket.

Ingest: "my-ingest-bucket"

- Optionally, set the Proxy key to the name of the bucket where you would like to store the proxies and analysis results generated by the Media2Cloud solution.

Proxy: "my-proxy-bucket"

- Save the YAML template, then build and deploy the customized solution.

- You will also need to make sure the buckets allow the web client to access the proxy files by setting the correct Cross-Origin Resource Sharing (CORS) configuration as follows:

<?xml version="1.0" encoding="UTF-8"?>

<CORSConfiguration xmlns="http://s3.amazonaws.com/doc/2006-03-01/">

<CORSRule>

<AllowedOrigin>https://<cf-id>.cloudfront.net</AllowedOrigin>

<AllowedMethod>GET</AllowedMethod>

<AllowedMethod>PUT</AllowedMethod>

<AllowedMethod>POST</AllowedMethod>

<AllowedMethod>HEAD</AllowedMethod>

<AllowedMethod>DELETE</AllowedMethod>

<MaxAgeSeconds>3000</MaxAgeSeconds>

<ExposeHeader>Content-Length</ExposeHeader>

<ExposeHeader>ETag</ExposeHeader>

<ExposeHeader>x-amz-meta-uuid</ExposeHeader>

<ExposeHeader>x-amz-meta-md5</ExposeHeader>

<AllowedHeader>*</AllowedHeader>

</CORSRule>

</CORSConfiguration>

where <cf-id> identifies the Amazon CloudFront distribution that the solution creates to host the Media2Cloud web interface.
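If you prefer to apply the same CORS rule programmatically, the sketch below builds the equivalent parameter object for the S3 PutBucketCors API. `buildCorsParams` is a hypothetical helper, and the bucket name and CloudFront domain shown are placeholders; the rule values mirror the XML configuration above.

```javascript
// Hypothetical helper: builds PutBucketCors parameters that mirror the XML rule.
function buildCorsParams(bucket, cloudFrontDomain) {
  return {
    Bucket: bucket,
    CORSConfiguration: {
      CORSRules: [{
        AllowedOrigins: [`https://${cloudFrontDomain}`],
        AllowedMethods: ['GET', 'PUT', 'POST', 'HEAD', 'DELETE'],
        AllowedHeaders: ['*'],
        ExposeHeaders: ['Content-Length', 'ETag', 'x-amz-meta-uuid', 'x-amz-meta-md5'],
        MaxAgeSeconds: 3000,
      }],
    },
  };
}

// Placeholder values; replace them with your proxy bucket and CloudFront domain.
const params = buildCorsParams('my-proxy-bucket', 'd111111abcdef8.cloudfront.net');
console.log(JSON.stringify(params, null, 2));

// With the AWS SDK for JavaScript v2 installed, the object could be applied as:
//   const AWS = require('aws-sdk');
//   await new AWS.S3().putBucketCors(params).promise();
```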

--

The solution comes with sample code, execution-summary.js, that helps you understand how many resources a specified ingest or analysis process consumes, including the Lambda executions of the different states and the number of state transitions. The code also writes the summary to a CSV file in your current folder.

IMPORTANT: the sample code only estimates the resources being used by a specific state machine. It doesn't include other resources such as DynamoDB Read/Write unit, AI/ML processes, S3 GET/PUT/SELECT requests, MediaConvert, SNS publish, and IoT publish.
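To give a feel for this kind of estimate, here is a minimal sketch that tallies state transitions and Lambda invocations from a list of Step Functions history events. It is not the code in execution-summary.js: `summarize` and `toCsv` are hypothetical helpers, the event list is hand-written sample data, and counting every `*StateEntered` event as one transition is a simplifying assumption.

```javascript
// Tally events shaped like those returned by GetExecutionHistory,
// where each event carries a `type` string.
function summarize(events) {
  const summary = { stateTransitions: 0, lambdaExecutions: 0 };
  for (const { type } of events) {
    if (type.endsWith('StateEntered')) summary.stateTransitions += 1;
    if (type === 'LambdaFunctionScheduled') summary.lambdaExecutions += 1;
  }
  return summary;
}

// Render the summary as simple two-column CSV text.
function toCsv(summary) {
  const rows = Object.entries(summary).map(([metric, count]) => `${metric},${count}`);
  return ['metric,count', ...rows].join('\n');
}

// Hand-written sample history for illustration only.
const sampleEvents = [
  { type: 'ExecutionStarted' },
  { type: 'TaskStateEntered' },
  { type: 'LambdaFunctionScheduled' },
  { type: 'LambdaFunctionSucceeded' },
  { type: 'TaskStateExited' },
  { type: 'ChoiceStateEntered' },
  { type: 'ChoiceStateExited' },
  { type: 'ExecutionSucceeded' },
];

console.log(toCsv(summarize(sampleEvents)));
```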

To run the sample code, cd to ./source/samples directory and run the following command:

npm install

export AWS_REGION=<aws-region>

node execution-summary.js --arn <execution-arn>

where

<aws-region> is the AWS Region where you created the solution, e.g., us-east-1.

<execution-arn> is the ARN of the execution, which you can find in the AWS Step Functions console:

- Go to the AWS Step Functions console, select State machines, and click on the ingest, analysis, video-analysis, audio-analysis, or image-analysis state machine. The naming convention of the state machines is SO0050-<stack-name>-ingest, SO0050-<stack-name>-analysis, and so forth, where <stack-name> is the name you specified when you created the solution.

- Click on the SO0050-<stack-name>-ingest state machine; you should see a list of executions.

- Select one of the executions and you will find the Execution ARN.

- Use the Execution ARN for the command-line option --arn, as in the example below:

npm install

export AWS_REGION=eu-west-1

node execution-summary.js --arn arn:aws:states:eu-west-1:1234:execution:SO0050-m2c-ingest:1234-abcd
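Before passing an ARN to the script, it can help to check that it has the expected shape. The sketch below splits a Step Functions execution ARN into its parts; `parseExecutionArn` is a hypothetical helper, not part of the solution, and it assumes a standard execution ARN with eight colon-separated fields.

```javascript
// Hypothetical helper: splits an ARN of the form
// arn:aws:states:<region>:<account>:execution:<state-machine>:<execution-name>
function parseExecutionArn(arn) {
  const parts = arn.split(':');
  if (parts.length !== 8 || parts[2] !== 'states' || parts[5] !== 'execution') {
    throw new Error(`not a Step Functions execution ARN: ${arn}`);
  }
  const [, , , region, accountId, , stateMachine, execution] = parts;
  return { region, accountId, stateMachine, execution };
}

const parsed = parseExecutionArn(
  'arn:aws:states:eu-west-1:1234:execution:SO0050-m2c-ingest:1234-abcd'
);
console.log(parsed.region);       // eu-west-1
console.log(parsed.stateMachine); // SO0050-m2c-ingest
```

The parsed region can also be compared against AWS_REGION to catch a mismatch before running the script.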

Copyright 2019 Amazon.com, Inc. and its affiliates. All Rights Reserved.

SPDX-License-Identifier: LicenseRef-.amazon.com.-AmznSL-1.0

Licensed under the Amazon Software License (the "License"). You may not use this file except in compliance with the License. A copy of the License is located at

or in the "license" file accompanying this file. This file is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.