Jacopo Bonato, Marco Cotogni, Luigi Sabetta

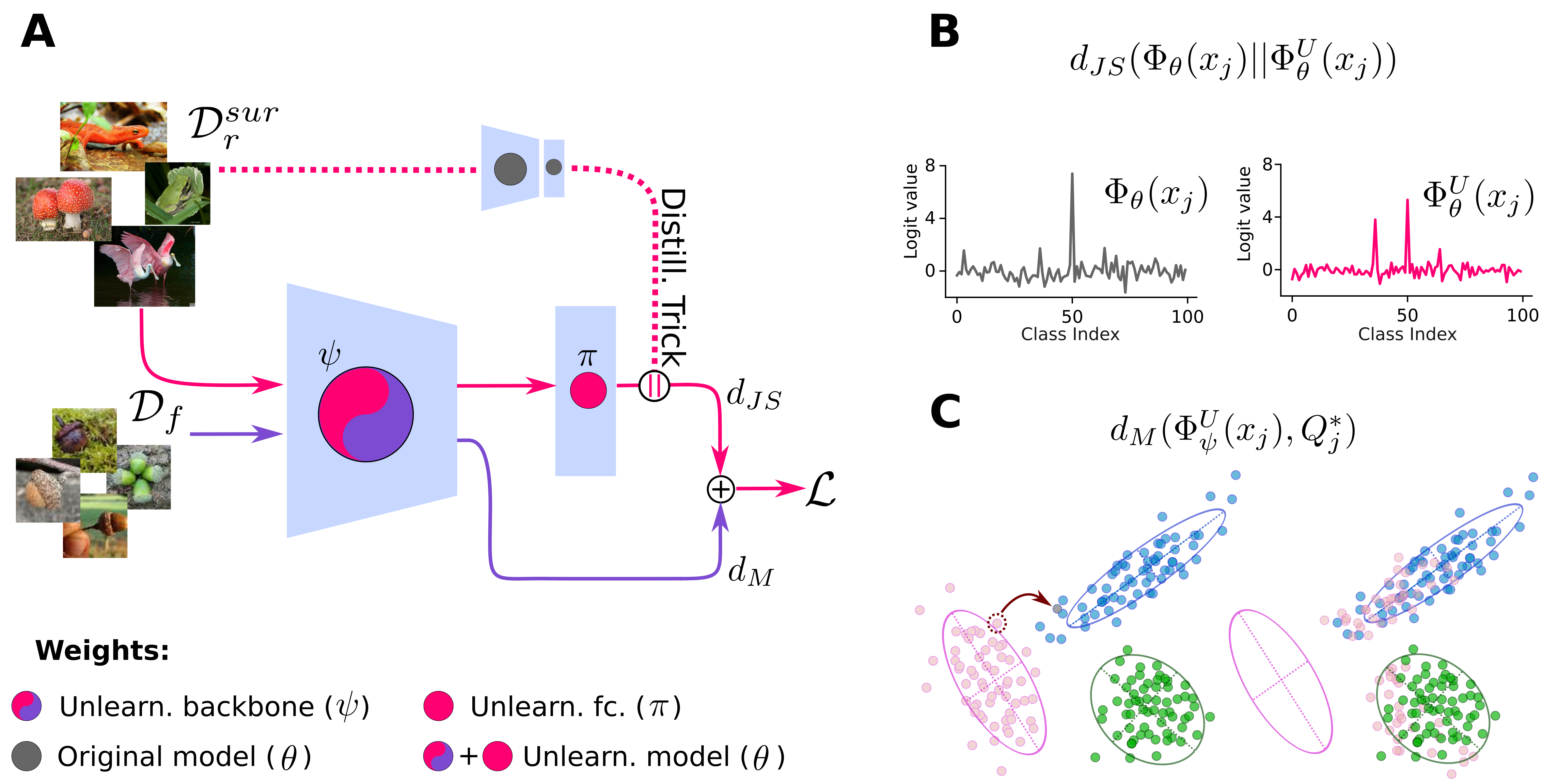

SCAR (Selective-distillation for Class and Architecture-agnostic unleaRning) is a novel, model-agnostic unlearning algorithm. It combines metric learning and knowledge distillation to efficiently remove targeted information from a model without relying on a retain set. Using the Mahalanobis distance, SCAR shifts the feature vectors of the instances to forget toward the distributions of samples from other classes, which makes metric-learning-based unlearning effective. In addition, SCAR preserves model accuracy by distilling knowledge from the original model using out-of-distribution images.
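To make the two ingredients above more concrete, here is a minimal NumPy sketch written under simplifying assumptions. It is not SCAR's implementation. It estimates per-class feature means with a shared (pooled) covariance, picks the closest other class by Mahalanobis distance as a hypothetical target toward which a forget sample's feature could be pushed, and computes a standard temperature-scaled distillation loss (KL divergence between softened teacher and student outputs, scaled by T^2). The helper names, the pooled covariance, the `eps` regularizer, and the nearest-class target rule are all assumptions. The repository's `--gamma1`, `--gamma2` and `--delta` options are not modeled here.

```python
import numpy as np


def class_stats(features, labels, eps=1e-3):
    """Per-class means and the inverse of a pooled (shared) covariance.

    Assumption: one covariance shared by all classes, regularized with
    eps * I so that it is always invertible.
    """
    classes = np.unique(labels)
    means = {c: features[labels == c].mean(axis=0) for c in classes}
    centered = np.concatenate([features[labels == c] - means[c] for c in classes])
    cov = centered.T @ centered / len(centered)
    cov_inv = np.linalg.inv(cov + eps * np.eye(features.shape[1]))
    return means, cov_inv


def mahalanobis(x, mean, cov_inv):
    """Mahalanobis distance sqrt((x - mu)^T Sigma^-1 (x - mu))."""
    d = x - mean
    return float(np.sqrt(d @ cov_inv @ d))


def closest_other_class(x, true_label, means, cov_inv):
    """Hypothetical target rule: the nearest class other than the sample's own."""
    dists = {c: mahalanobis(x, m, cov_inv) for c, m in means.items() if c != true_label}
    return min(dists, key=dists.get)


def softmax(z, T=1.0):
    z = z / T
    z = z - z.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def distillation_loss(student_logits, teacher_logits, T=1.0):
    """Mean KL(teacher || student) on temperature-softened outputs, times T^2."""
    p_t = softmax(teacher_logits, T)
    log_p_t = np.log(p_t + 1e-12)
    log_p_s = np.log(softmax(student_logits, T) + 1e-12)
    kl = (p_t * (log_p_t - log_p_s)).sum(axis=-1)
    return float(kl.mean() * T * T)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Toy 4-D "features" for 3 well-separated classes (synthetic data).
    feats = np.concatenate([rng.normal(loc=3.0 * c, size=(50, 4)) for c in range(3)])
    labels = np.repeat(np.arange(3), 50)
    means, cov_inv = class_stats(feats, labels)
    print("target class for a class-0 sample:", closest_other_class(feats[0], 0, means, cov_inv))
    logits = rng.normal(size=(8, 3))
    print("KD loss, identical logits:", distillation_loss(logits, logits, T=2.0))
```

A shared covariance keeps the estimate stable when some classes have few samples. Per-class covariances are a common alternative when enough data is available.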

Key contributions of this work include:

- SCAR, which achieves competitive unlearning performance without retain data;
- a unique self-forget mechanism in class removal scenarios;
- comprehensive analyses demonstrating its efficacy across different datasets and architectures;
- experimental evidence that SCAR performs comparably to or better than traditional unlearning methods and state-of-the-art techniques that do not use a retain set.

# Clone the repository

git clone https://github.com/jbonato1/SCAR

# Navigate to the project directory

cd SCAR

# Installation with Docker

- Step 1: build the Docker image from the Dockerfile:

docker build -f Dockerfile -t scar:1.0 .

- Step 2: run the image, mounting your dataset folder and your SCAR folder:

docker run -it --gpus all --ipc=host -v "/path_to_dataset_folder":/root/data -v "/path_to_scar_folder":/scar scar:1.0 /bin/bash

# Install locally

pip install -r requirements.txt

- Step 1:

Run the following to train the original model (choose the dataset in opts):

python3 training_original.py

- Step 2:

Run the following to sample the forget set for the HR scenario:

python3 sample_fgt_samples.py

- Step 3:

Run the following to train the retrained model (choose the dataset in opts; use --mode CR for class removal and --mode HR for homogeneous removal):

python3 training_oracle.py
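In machine unlearning, the retrained (oracle) model is typically trained from scratch on the training data with the forget set removed, which makes it the reference that the unlearned model is compared against. The NumPy sketch below shows how such a retain set could be derived for the two scenarios. The function name and its arguments are hypothetical, and training_oracle.py may build its data differently.

```python
import numpy as np


def retain_indices(labels, mode, forget_class=None, forget_idx=None):
    """Hypothetical helper: indices a retrained (oracle) model would train on.

    CR: drop every sample whose label equals `forget_class`.
    HR: drop the precomputed `forget_idx` (e.g. produced by a sampling script).
    """
    labels = np.asarray(labels)
    all_idx = np.arange(len(labels))
    if mode == "CR":
        return all_idx[labels != forget_class]
    if mode == "HR":
        # setdiff1d returns the sorted, unique indices not in forget_idx.
        return np.setdiff1d(all_idx, np.asarray(forget_idx))
    raise ValueError(f"unknown mode: {mode}")


if __name__ == "__main__":
    labels = np.repeat(np.arange(3), 4)  # toy labels: 3 classes x 4 samples
    print(retain_indices(labels, "CR", forget_class=1).tolist())  # [0, 1, 2, 3, 8, 9, 10, 11]
    print(retain_indices(labels, "HR", forget_idx=[0, 5, 11]).tolist())
```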

If you already have the original and retrained models, you can skip the steps above.

If you plan to run the HR scenario, you must execute the script sample_fgt_samples.py before launching any unlearning method, to generate the indices of the samples to be forgotten.
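As a rough illustration of what such index generation could look like, the hypothetical sketch below draws the same number of indices from every class without replacement, using a fixed seed so that runs are reproducible. The per-class stratification, the count, and the seed are assumptions, not the documented behavior of sample_fgt_samples.py.

```python
import numpy as np


def sample_forget_indices(labels, per_class, seed=0):
    """Hypothetical HR-style sampler: `per_class` indices from every class.

    Sampling is without replacement and seeded, so the same forget set is
    produced on every run.
    """
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    picked = [
        rng.choice(np.flatnonzero(labels == c), size=per_class, replace=False)
        for c in np.unique(labels)
    ]
    return np.sort(np.concatenate(picked))


if __name__ == "__main__":
    # Toy labels standing in for a training set: 10 classes x 100 samples.
    labels = np.repeat(np.arange(10), 100)
    forget_idx = sample_forget_indices(labels, per_class=5, seed=42)
    print(len(forget_idx))  # 50
```

The resulting array could be saved with `np.save` and reloaded by every unlearning method, so that all methods forget exactly the same samples.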

If you plan to run "SCAR" or "SCAR_self", you first need to obtain the surrogate dataset. To do so, sample 10,000 images from ImageNet, COCO, or any other dataset and run the script src/organize_surrogate_dataset.py. The script organizes the surrogate dataset into folders, each containing a chunk of the dataset, so that subsets of different sizes can be selected. We suggest using ImageNet for the surrogate dataset; the list of sampled images is provided in "surrogate_ImageNet.txt".
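The chunk layout can be pictured with the standard-library sketch below. It is a hypothetical illustration, not the contents of src/organize_surrogate_dataset.py: it samples `n_samples` image paths with a fixed seed and assigns them to consecutive folders of `chunk_size` images (the `chunk_000`-style folder names are an assumption), so that a surrogate subset of k times `chunk_size` images can be formed by using the first k folders. It also assumes that image file names are unique across source folders.

```python
import random
import shutil
from pathlib import Path


def plan_chunks(image_paths, n_samples=10000, chunk_size=1000, seed=0):
    """Sample n_samples paths and split them into consecutive chunks.

    Returns {folder_name: [paths]}; the folder names are hypothetical.
    """
    rng = random.Random(seed)
    sampled = rng.sample(sorted(image_paths), n_samples)
    return {
        f"chunk_{i // chunk_size:03d}": sampled[i:i + chunk_size]
        for i in range(0, len(sampled), chunk_size)
    }


def copy_chunks(plan, out_dir):
    """Copy each planned chunk into its own sub-folder of out_dir."""
    for folder, paths in plan.items():
        target = Path(out_dir) / folder
        target.mkdir(parents=True, exist_ok=True)
        for p in paths:
            # Assumes file names are unique, otherwise copies would overwrite.
            shutil.copy2(p, target / Path(p).name)


if __name__ == "__main__":
    # Fake paths for illustration; no files are read or copied here.
    fake = [f"imagenet/img_{i:05d}.JPEG" for i in range(20000)]
    plan = plan_chunks(fake, n_samples=10000, chunk_size=1000)
    print(len(plan), len(plan["chunk_000"]))  # 10 1000
```

Separating the plan from the copy step makes the sampling easy to check and reproduce before touching the file system.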

To reproduce the experiments:

python src/main.py --run_name <run_name> --dataset <dataset> --mode <mode> --cuda <cuda> --load_unlearned_model --save_model --save_df --push_results --run_original --run_unlearn --run_rt_model --num_workers <num_workers> --method <method> --model <model> --bsize <bsize> --wd <wd> --momentum <momentum> --lr <lr> --epochs <epochs> --scheduler <scheduler> --temperature <temperature> --lambda_1 <lambda_1> --lambda_2 <lambda_2> --delta <delta> --gamma1 <gamma1> --gamma2 <gamma2>

Configuration Options:

--run_name: Name of the run (default: "test").

--dataset: Dataset for the experiment (default: "cifar100").

--mode: Scenario for the experiment (default: "CR").

--cuda: Select zero-indexed CUDA device. Use -1 to run on CPU (default: 0).

--load_unlearned_model: Load a pre-trained unlearned model.

--save_model: Save the trained model.

--save_df: Save the experiment results as a DataFrame.

--push_results: Push the results to Google Docs.

--run_original: Run the original model.

--run_unlearn: Run the unlearned model.

--run_rt_model: Run the retrained model.

--num_workers: Number of workers for data loading (default: 4).

--method: Method for unlearning (default: "SCAR").

--model: Model architecture (default: 'resnet18').

--bsize: Batch size (default: 1024).

--wd: Weight decay (default: 0.0).

--momentum: Momentum for SGD optimizer (default: 0.9).

--lr: Learning rate (default: 0.0005).

--epochs: Number of epochs (default: 30).

--scheduler: Learning rate scheduler milestones (default: [25, 40]).

--temperature: Temperature for the unlearning algorithm (default: 1).

--lambda_1: Lambda 1 hyperparameter (default: 1).

--lambda_2: Lambda 2 hyperparameter (default: 5).

--gamma1: Mahalanobis gamma1 parameter.

--gamma2: Mahalanobis gamma2 parameter.

--delta: Tukey transformation delta.

All hyperparameters are reported in the Supplementary Material of the paper.
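For reference, the options above can be collected into an argparse parser. This is a hypothetical reconstruction from the documented names and defaults, not the repository's actual parser: the types, `nargs="+"` for --scheduler, and the `None` placeholders for --gamma1, --gamma2 and --delta (whose defaults are not stated in this README) are assumptions.

```python
import argparse


def build_parser():
    """Hypothetical parser mirroring the options documented in this README."""
    p = argparse.ArgumentParser(description="SCAR experiments (sketch)")
    p.add_argument("--run_name", type=str, default="test")
    p.add_argument("--dataset", type=str, default="cifar100")
    p.add_argument("--mode", type=str, default="CR", help="CR or HR")
    p.add_argument("--cuda", type=int, default=0, help="CUDA device index; -1 for CPU")
    # Boolean switches: passing the flag sets it to True.
    for flag in ("--load_unlearned_model", "--save_model", "--save_df",
                 "--push_results", "--run_original", "--run_unlearn", "--run_rt_model"):
        p.add_argument(flag, action="store_true")
    p.add_argument("--num_workers", type=int, default=4)
    p.add_argument("--method", type=str, default="SCAR")
    p.add_argument("--model", type=str, default="resnet18")
    p.add_argument("--bsize", type=int, default=1024)
    p.add_argument("--wd", type=float, default=0.0)
    p.add_argument("--momentum", type=float, default=0.9)
    p.add_argument("--lr", type=float, default=0.0005)
    p.add_argument("--epochs", type=int, default=30)
    p.add_argument("--scheduler", type=int, nargs="+", default=[25, 40])
    p.add_argument("--temperature", type=float, default=1.0)
    p.add_argument("--lambda_1", type=float, default=1.0)
    p.add_argument("--lambda_2", type=float, default=5.0)
    # Defaults for these three are not documented; None is a placeholder.
    p.add_argument("--gamma1", type=float, default=None)
    p.add_argument("--gamma2", type=float, default=None)
    p.add_argument("--delta", type=float, default=None)
    return p


if __name__ == "__main__":
    args = build_parser().parse_args(
        "--dataset cifar10 --mode CR --run_unlearn --delta .5 --gamma1 3 --gamma2 3".split()
    )
    print(args.dataset, args.run_unlearn, args.delta)  # cifar10 True 0.5
```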

Example: CIFAR-10 in the CR scenario

python3 main_def.py --run_name cifar10_CR --dataset cifar10 --mode CR --cuda 0 --save_model --save_df --run_unlearn --num_workers 4 --method SCAR --model resnet18 --bsize 1024 --lr 0.0005 --epochs 30 --temperature 1 --lambda_1 1 --lambda_2 5 --delta .5 --gamma1 3 --gamma2 3

If you find this work useful, please consider citing it:

TBD

This repository is based on: