You can find the details of the model and results here.

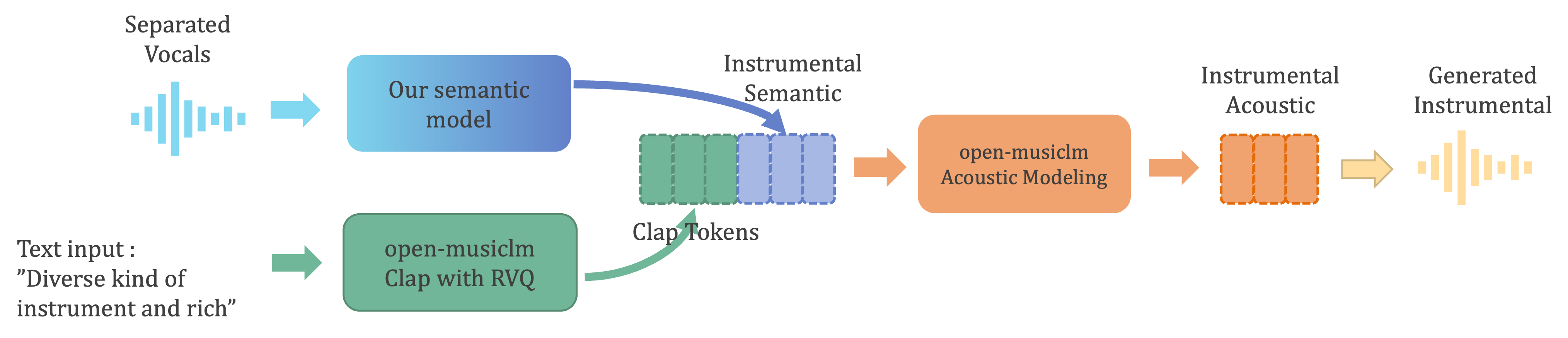

This is an unofficial implementation of "SingSong: Generating musical accompaniments from singing" from Google Research. Demos and more details can be found here. We use two models in this implementation of SingSong:

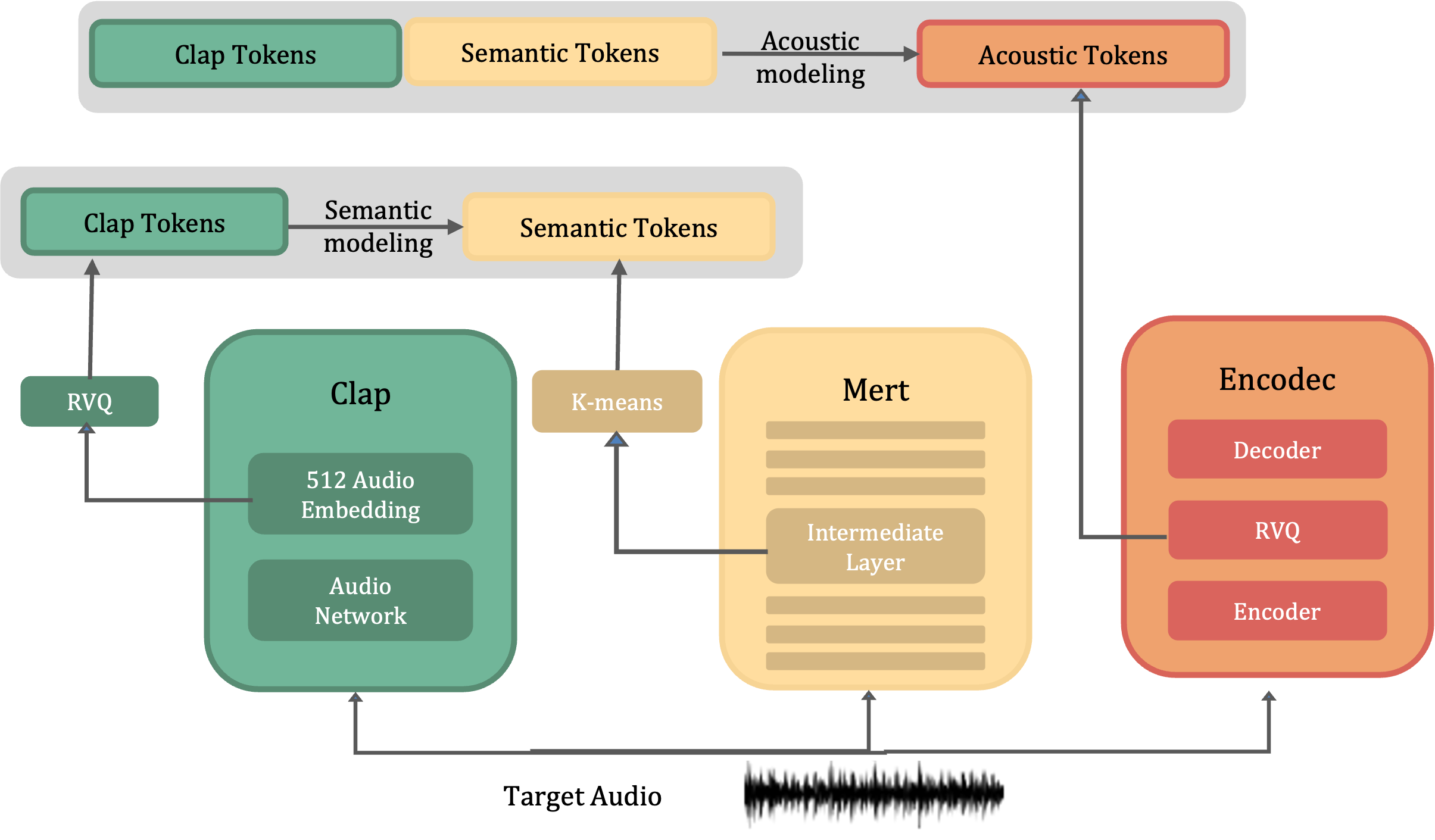

- Open-musiclm: An unofficial implementation of the MusicLM paper, complete with pretrained weights. We use it without modification.

- Open-musiclm: Download the experimental checkpoints kmeans_10s_no_fusion.joblib, clap.rvq.950_no_fusion.pt, fine.transformer.24000.pt, semantic.transformer.14000.pt, and coarse.transformer.18000.pt here. Refer to open-musiclm for more information. Also download 630k-audioset-best.pt from here and place it at ./open_musiclm/laion_clap/630k-audioset-best.pt.

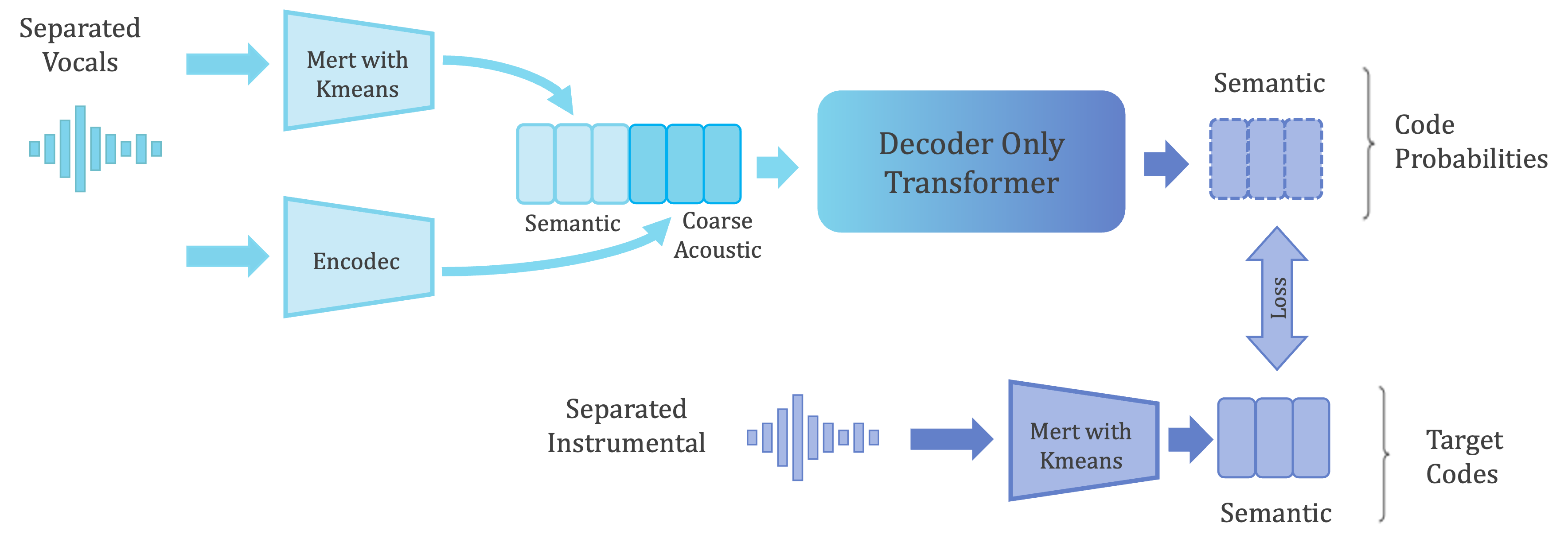

- Our semcoarsetosem model: Download real_semcoarsetosem.transformer.5170.pt here.
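Before running the notebooks, it may help to confirm that every checkpoint listed above is actually on disk. The sketch below uses only the Python standard library. The file names come from the download instructions; CHECKPOINT_DIR is a hypothetical location (only the LAION-CLAP path is specified here), so point it at wherever you saved the other downloads.

```python
from pathlib import Path

# Hypothetical directory holding the downloaded open-musiclm and
# semcoarsetosem checkpoints; change this to match your setup.
CHECKPOINT_DIR = Path("./checkpoints")

# File names taken from the download instructions.
REQUIRED_FILES = [
    CHECKPOINT_DIR / "kmeans_10s_no_fusion.joblib",
    CHECKPOINT_DIR / "clap.rvq.950_no_fusion.pt",
    CHECKPOINT_DIR / "fine.transformer.24000.pt",
    CHECKPOINT_DIR / "semantic.transformer.14000.pt",
    CHECKPOINT_DIR / "coarse.transformer.18000.pt",
    CHECKPOINT_DIR / "real_semcoarsetosem.transformer.5170.pt",
    # The LAION-CLAP checkpoint is expected at this exact path.
    Path("./open_musiclm/laion_clap/630k-audioset-best.pt"),
]


def missing_checkpoints(paths):
    """Return the paths that do not exist as regular files."""
    return [p for p in paths if not p.is_file()]


if __name__ == "__main__":
    missing = missing_checkpoints(REQUIRED_FILES)
    if missing:
        print("Missing checkpoints:")
        for p in missing:
            print(f"  {p}")
    else:
        print("All checkpoints found.")
```

Whatever directory you choose here should match the paths you set inside the notebooks.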

To run inference, update all file paths accordingly, then run inference.ipynb.

To train, update all file paths accordingly, then run train.ipynb.
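If you prefer running a notebook from the command line instead of opening it in Jupyter, one option is `jupyter nbconvert --to notebook --execute --inplace`. The sketch below assumes Jupyter (with nbconvert) is installed and on your PATH; the helper names nbconvert_command and run_notebook are hypothetical, and you still need to edit the file paths inside the notebook first.

```python
import shutil
import subprocess


def nbconvert_command(notebook):
    """Build a jupyter nbconvert command that executes a notebook in place."""
    return ["jupyter", "nbconvert", "--to", "notebook", "--execute", "--inplace", notebook]


def run_notebook(notebook):
    """Execute the notebook headlessly; raises CalledProcessError if a cell fails."""
    if shutil.which("jupyter") is None:
        raise RuntimeError("jupyter is not on PATH; install Jupyter first")
    subprocess.run(nbconvert_command(notebook), check=True)
```

For example, call run_notebook("inference.ipynb") or run_notebook("train.ipynb"). Because `--inplace` overwrites the notebook with its executed outputs, keep a copy if you want a clean version.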