A simple Spark standalone cluster for your testing environment. A Spark development environment that is just one `docker-compose up` away.

Docker Compose will create the following containers:

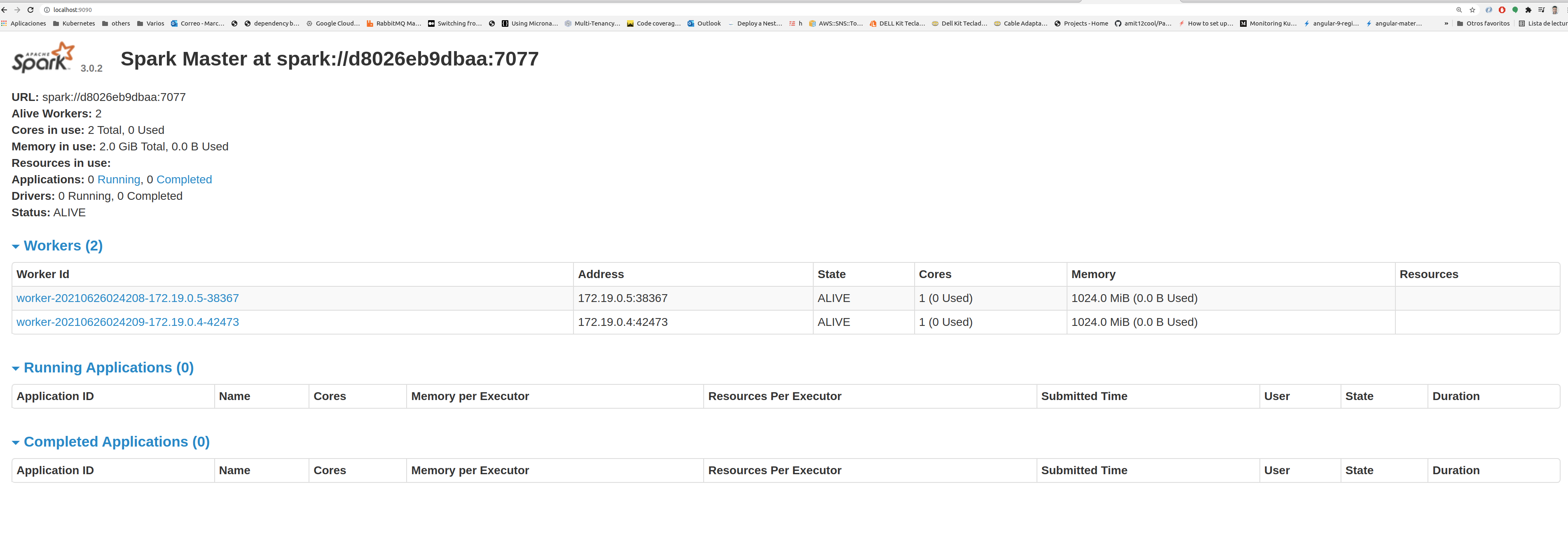

| Container | Exposed ports |
|---|---|
| spark-master | 9090 7077 |
| spark-worker-1 | 9091 |
| spark-worker-2 | 9092 |

The following steps will get your Spark cluster's containers running. You will need:

- Docker installed
- Docker Compose installed
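
A quick way to confirm both prerequisites before building is sketched below. The `require_cmd` helper name is hypothetical and not part of this repository; it only checks that a command is on your `PATH`.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if the named command is found on PATH.
require_cmd() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "found: $1"
    else
        echo "missing: $1" >&2
        return 1
    fi
}

# Usage (commented out so sourcing this file has no side effects):
# require_cmd docker && require_cmd docker-compose
```

If either check reports `missing`, install that tool before continuing.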

Build the image:

    docker build -t spark:3.1.2 .

The final step to create your test cluster is to run the compose file:

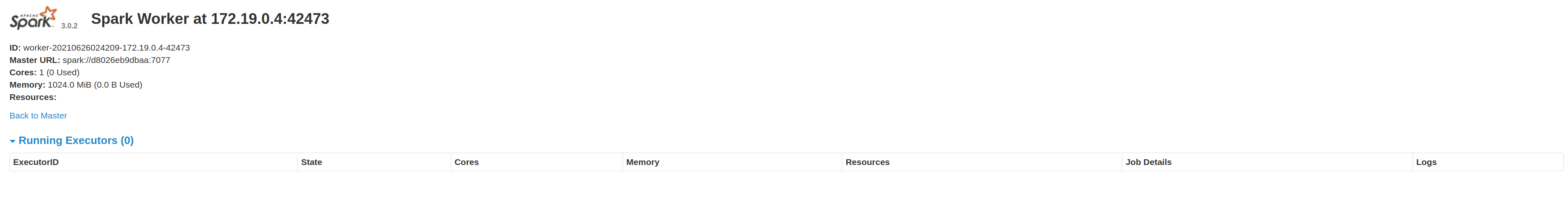

    docker-compose up -d

Then validate your cluster by accessing the Spark UI at each worker and master URL.
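
If you prefer to check the UIs from a terminal, here is a minimal sketch. It assumes the UI ports from the container table (9090, 9091, 9092) are published on `localhost`; the `ui_urls` and `check_uis` helper names are hypothetical.

```shell
#!/bin/sh
# Web UI ports from the container table: master 9090, workers 9091 and 9092.
# Assumption: the ports are published on the given host (default: localhost).
ui_urls() {
    host="${1:-localhost}"
    for port in 9090 9091 9092; do
        echo "http://${host}:${port}"
    done
}

# Hypothetical helper: report whether each UI answers an HTTP request.
check_uis() {
    for url in $(ui_urls "${1:-localhost}"); do
        if curl -fsS -o /dev/null --max-time 5 "$url"; then
            echo "up:   $url"
        else
            echo "down: $url"
        fi
    done
}
```

Calling `check_uis` after `docker-compose up -d` prints one line per UI. A `down` line usually means the container is still starting or the port mapping differs from the table.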

This cluster ships with two workers and one Spark master, each with its own resource allocation (basically RAM and CPU cores).

- The default CPU core allocation for each Spark worker is 1 core.
- The default RAM for each Spark worker is 1024 MB.
- The default RAM allocation for Spark executors is 256 MB.
- The default RAM allocation for the Spark driver is 128 MB.
- If you wish to modify these allocations, just edit the env/spark-worker.sh file.
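
For orientation, here is a sketch of how those defaults could be expressed as environment variables. The variable names are the standard Spark ones, but the layout is an assumption and not a copy of the shipped env/spark-worker.sh, so compare it against the actual file before editing.

```shell
# Sketch only: assumed layout, not the shipped env/spark-worker.sh.
# Values mirror the defaults listed above.
SPARK_WORKER_CORES=1        # CPU cores per worker
SPARK_WORKER_MEMORY=1024m   # RAM per worker
SPARK_EXECUTOR_MEMORY=256m  # RAM per executor
SPARK_DRIVER_MEMORY=128m    # RAM for the driver
export SPARK_WORKER_CORES SPARK_WORKER_MEMORY SPARK_EXECUTOR_MEMORY SPARK_DRIVER_MEMORY
```

After changing a value, the containers most likely need to be recreated (for example with `docker-compose up -d`) before the new allocation takes effect.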

To make running apps easier, I've shipped two volume mounts, described in the following table:

| Host Mount | Container Mount | Purpose |
|---|---|---|
| apps | /opt/spark-apps | Makes your app's jars available on all workers and the master |

This is basically a dummy DFS created from Docker volumes... (maybe not...)

Example: if your container ID is c0, try the following commands:

    docker exec -it c0 /bin/bash
    ./bin/spark-shell --master spark://spark-master:7077
    ./bin/pyspark --master spark://spark-master:7077
    ./bin/run-example --master spark://spark-master:7077 SparkPi 1000
    ./bin/spark-submit --master spark://spark-master:7077 examples/src/main/python/pi.py 1000
    ./bin/spark-submit --master spark://spark-master:7077 --class SimpleApp /opt/spark-apps/fuzzy/fuzzylogic/target/scala-2.12/fuzzylogic_2.12-0.1.0-SNAPSHOT.jar
    ./bin/spark-submit --master spark://spark-master:7077 --class simpleFuzzy /opt/spark-apps/fuzzy2/fuzzylogic/target/scala-2.12/fuzzylogic_v2_2.12-0.1.0-SNAPSHOT.jar
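
Since every submit follows the same pattern, a small wrapper can save typing. This is only a sketch: the `submit_cmd` helper is hypothetical, and it assumes your jar sits under the `apps` mount so it is visible at /opt/spark-apps inside the containers.

```shell
#!/bin/sh
# Master URL of the standalone cluster (spark-master on port 7077).
MASTER_URL="spark://spark-master:7077"

# Hypothetical helper: print the spark-submit command for a jar under /opt/spark-apps.
# $1 = main class, $2 = jar path relative to the apps mount
submit_cmd() {
    echo "./bin/spark-submit --master ${MASTER_URL} --class $1 /opt/spark-apps/$2"
}
```

For example, `submit_cmd SimpleApp demo/app.jar` prints a command you can paste into the container shell opened with `docker exec -it c0 /bin/bash`. The `demo/app.jar` path is a placeholder.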