Welcome to Colosseum, a successor of AirSim

Colosseum is a simulator for robotic and autonomous systems, built on Unreal Engine (an experimental Unity release is also available). It is open-source and cross-platform, and it supports software-in-the-loop simulation with popular flight controllers such as PX4 and ArduPilot, as well as hardware-in-the-loop simulation with PX4, for physically and visually realistic simulations. It is developed as an Unreal plugin that can be dropped into any Unreal environment. The experimental Unity release is likewise packaged as a plugin.

This is a fork of the AirSim repository, which Microsoft decided to shut down in July 2022. This fork serves as a waypoint toward building a new and better simulation platform. The creator and maintainer of this fork is Codex Laboratories LLC (our website is here). Colosseum is one of the underlying simulation systems that we use in our product, the SWARM Simulation Platform. This platform exists to provide pre-built tools and low-code/no-code autonomy solutions. Please feel free to check this platform out and reach out if interested.

We have decided to create a Slack to better allow for community engagement. Join here: Colosseum Slack

This section will contain a list of the current features that the community and Codex Labs are working on to support and build.

Click here to view our current development goals!

If you want to be a part of the official development team, attend meetings, etc., please use the Slack channel (link above) and let Tyler Fedrizzi know!

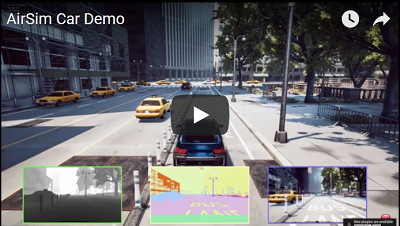

Check out the quick 1.5 minute demo

Quadrotor UAVs in Colosseum

Cars in Colosseum

For more details, see the use precompiled binaries document.

View our detailed documentation on all aspects of Colosseum.

If you have a remote control (RC) as shown below, you can manually control the drone in the simulator. For cars, you can use the arrow keys to drive manually.

Colosseum exposes APIs so you can interact with the vehicle in the simulation programmatically. You can use these APIs to retrieve images, get state, control the vehicle and so on. The APIs are exposed through RPC and are accessible from a variety of languages, including C++, Python, C# and Java.

These APIs are also available as part of a separate, independent cross-platform library, so you can deploy them on a companion computer on your vehicle. This way you can write and test your code in the simulator, and later execute it on the real vehicles. Transfer learning and related research is one of our focus areas.
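As a rough illustration of the programmatic control described above, here is a minimal Python sketch that takes off, flies a square and reads back the vehicle state. It assumes the Python client is still importable as `airsim` (the package name inherited from AirSim) and that a multirotor simulation is already running. `square_waypoints` and `fly_square` are hypothetical helper names used only for this example.

```python
from typing import List, Tuple


def square_waypoints(side: float, altitude: float) -> List[Tuple[float, float, float]]:
    """Corners of a square in NED coordinates (z is negative above the start point)."""
    z = -abs(altitude)
    return [(side, 0.0, z), (side, side, z), (0.0, side, z), (0.0, 0.0, z)]


def fly_square(client, side: float = 10.0, altitude: float = 5.0, speed: float = 3.0):
    """Fly one square with any client that offers the multirotor API calls used here."""
    client.confirmConnection()
    client.enableApiControl(True)
    client.armDisarm(True)
    client.takeoffAsync().join()
    for x, y, z in square_waypoints(side, altitude):
        # Each *Async call returns a future; join() blocks until the move finishes.
        client.moveToPositionAsync(x, y, z, speed).join()
    state = client.getMultirotorState()
    client.landAsync().join()
    client.armDisarm(False)
    client.enableApiControl(False)
    return state


def main():
    # Assumption: the client package is installed under the name `airsim`.
    import airsim

    client = airsim.MultirotorClient()
    state = fly_square(client)
    print(state.kinematics_estimated.position)
```

Call `main()` with the simulator running. Because `fly_square` only depends on the methods it calls, the same function can be exercised against a stub client in unit tests before touching the simulator.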

Note that you can use the SimMode setting to specify the default vehicle, or the new ComputerVision mode, so you don't get prompted each time you start Colosseum.
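A small sketch of generating such a settings file from Python may help here. It assumes the AirSim-style `settings.json` layout with the `SettingsVersion` and `SimMode` keys and the mode names `Multirotor`, `Car` and `ComputerVision`. The file location is not stated above, so the helper takes the path as an argument; `render_settings` and `write_settings` are hypothetical names.

```python
import json
from pathlib import Path

# Assumption: these are the mode names accepted by the SimMode setting.
VALID_SIM_MODES = ("Multirotor", "Car", "ComputerVision")


def render_settings(sim_mode: str) -> str:
    """Return the JSON text of a minimal settings file that fixes the SimMode."""
    if sim_mode not in VALID_SIM_MODES:
        raise ValueError(f"unknown SimMode: {sim_mode!r}")
    settings = {"SettingsVersion": 1.2, "SimMode": sim_mode}
    return json.dumps(settings, indent=2)


def write_settings(path, sim_mode: str) -> Path:
    """Write the settings to `path`, creating parent directories if needed."""
    target = Path(path)
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(render_settings(sim_mode), encoding="utf-8")
    return target
```

For example, `write_settings(some_path, "ComputerVision")` would produce a file that starts the simulator in Computer Vision mode, where `some_path` is wherever your installation reads its settings from.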

There are two ways you can generate training data from Colosseum for deep learning. The easiest way is to simply press the record button in the lower right corner. This will start writing pose and images for each frame. The data logging code is pretty simple and you can modify it to your heart's content.

A better way to generate training data exactly the way you want is by accessing the APIs. This allows you to be in full control of how, what, where and when you want to log data.
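The API-driven approach could look roughly like the following sketch, which logs one pose row and a set of images per frame. It assumes the `airsim` Python package name and the `simGetVehiclePose`, `simGetImages` and `ImageRequest` calls inherited from AirSim. The file names and the CSV layout are illustrative choices, not the format used by the built-in recorder.

```python
import csv
from pathlib import Path

POSE_FIELDS = ["frame", "x", "y", "z", "qw", "qx", "qy", "qz"]


def pose_row(frame, pose):
    """Flatten a pose (position plus orientation quaternion) into one CSV row."""
    p, q = pose.position, pose.orientation
    return [frame, p.x_val, p.y_val, p.z_val, q.w_val, q.x_val, q.y_val, q.z_val]


def record_frames(client, requests, out_dir, count):
    """Save `count` frames: compressed images as PNG files and poses in poses.csv."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    with open(out / "poses.csv", "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(POSE_FIELDS)
        for frame in range(count):
            pose = client.simGetVehiclePose()
            responses = client.simGetImages(requests)
            for index, response in enumerate(responses):
                # With compress=True the payload is already PNG-encoded bytes.
                image_path = out / f"frame{frame:05d}_req{index}.png"
                image_path.write_bytes(bytes(response.image_data_uint8))
            writer.writerow(pose_row(frame, pose))


def main():
    # Assumption: the client package is installed under the name `airsim`.
    import airsim

    client = airsim.MultirotorClient()
    client.confirmConnection()
    # Camera "0", scene image, integer pixels, PNG-compressed.
    requests = [airsim.ImageRequest("0", airsim.ImageType.Scene, False, True)]
    record_frames(client, requests, "recording", count=10)
```

Since the loop is ordinary Python, you decide when a frame is captured, for instance only after the vehicle has moved a certain distance, or only when a label of interest is in view.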

Yet another way to use Colosseum is the so-called "Computer Vision" mode. In this mode, you don't have vehicles or physics. You can use the keyboard to move around the scene, or use APIs to position available cameras in any arbitrary pose, and collect images such as depth, disparity, surface normals or object segmentation.
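To make the camera-positioning idea concrete, the sketch below computes a ring of viewpoints around a point of interest and, in `main()`, moves the camera to each one and requests depth and segmentation images. It assumes the simulator is running in Computer Vision mode and that the `airsim` Python package with `VehicleClient`, `simSetVehiclePose`, `to_quaternion` and `ImageRequest` is available. `orbit_poses` is a hypothetical helper.

```python
import math


def orbit_poses(center_xy, radius, height, count):
    """Return (x, y, z, yaw) tuples on a circle, each one facing the center.

    Coordinates are NED, so a positive height maps to a negative z.
    """
    cx, cy = center_xy
    poses = []
    for i in range(count):
        angle = 2.0 * math.pi * i / count
        x = cx + radius * math.cos(angle)
        y = cy + radius * math.sin(angle)
        yaw = math.atan2(cy - y, cx - x)  # heading from the camera to the center
        poses.append((x, y, -abs(height), yaw))
    return poses


def main():
    # Assumption: the client package is installed under the name `airsim`.
    import airsim

    client = airsim.VehicleClient()
    client.confirmConnection()
    requests = [
        airsim.ImageRequest("0", airsim.ImageType.DepthPlanar, True, False),
        airsim.ImageRequest("0", airsim.ImageType.Segmentation, False, False),
    ]
    for x, y, z, yaw in orbit_poses((0.0, 0.0), radius=10.0, height=2.0, count=8):
        pose = airsim.Pose(airsim.Vector3r(x, y, z), airsim.to_quaternion(0.0, 0.0, yaw))
        client.simSetVehiclePose(pose, True)  # True: ignore collisions while teleporting
        responses = client.simGetImages(requests)
        print(len(responses), "images captured at", (x, y, z))
```

Keeping the pose generation separate from the API calls makes it easy to swap the circular orbit for a grid, a random sampler, or poses loaded from a file.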

Press F10 to see various options available for weather effects. You can also control the weather using APIs. Press F1 to see other options available.
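Controlling the weather through the APIs might look like the sketch below. It assumes the `simEnableWeather` and `simSetWeatherParameter` calls and the `WeatherParameter` enum from the AirSim Python client, with intensities in the range 0 to 1. `apply_weather` is a hypothetical helper.

```python
def apply_weather(client, levels):
    """Enable weather effects and set each parameter to an intensity in [0, 1].

    `levels` maps weather parameters (for example airsim.WeatherParameter.Rain)
    to intensities. All values are validated before any call is made.
    """
    for param, value in levels.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"intensity for {param!r} must be within [0, 1], got {value}")
    client.simEnableWeather(True)
    for param, value in levels.items():
        client.simSetWeatherParameter(param, value)


def main():
    # Assumption: the client package is installed under the name `airsim`.
    import airsim

    client = airsim.VehicleClient()
    client.confirmConnection()
    apply_weather(
        client,
        {
            airsim.WeatherParameter.Rain: 0.5,
            airsim.WeatherParameter.Fog: 0.2,
        },
    )
```

Validating first means a bad value does not leave the scene with only some of the requested effects applied.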

- Video - Setting up Colosseum with Pixhawk Tutorial by Chris Lovett

- Video - Using Colosseum with Pixhawk Tutorial by Chris Lovett

- Video - Using off-the-shelf environments with Colosseum by Jim Piavis

- Webinar - Harnessing high-fidelity simulation for autonomous systems by Sai Vemprala

- Reinforcement Learning with Colosseum by Ashish Kapoor

- The Autonomous Driving Cookbook by Microsoft Deep Learning and Robotics Garage Chapter

- Using TensorFlow for simple collision avoidance by Simon Levy and WLU team

More technical details are available in the Colosseum paper (FSR 2017 Conference). Please cite this as:

    @inproceedings{airsim2017fsr,
      author = {Shital Shah and Debadeepta Dey and Chris Lovett and Ashish Kapoor},
      title = {AirSim: High-Fidelity Visual and Physical Simulation for Autonomous Vehicles},
      year = {2017},
      booktitle = {Field and Service Robotics},
      eprint = {arXiv:1705.05065},
      url = {https://arxiv.org/abs/1705.05065}
    }

Please take a look at open issues if you are looking for areas to contribute to.

We are maintaining a list of a few projects, people and groups that we are aware of. If you would like to be featured in this list please make a request here.

Join our GitHub Discussions group to stay up to date or ask any questions.

We also have a Colosseum group on Facebook.

- Cinematographic Camera

- ROS2 wrapper

- API to list all assets

- movetoGPS API

- Optical flow camera

- simSetKinematics API

- Dynamically set object textures from existing UE material or texture PNG

- Ability to spawn/destroy lights and control light parameters

- Support for multiple drones in Unity

- Control manual camera speed through the keyboard

For a complete list of changes, view our Changelog.

If you run into problems, check the FAQ and feel free to post issues in the Colosseum repository.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact [email protected] with any additional questions or comments.

This project is released under the MIT License. Please review the License file for more details.