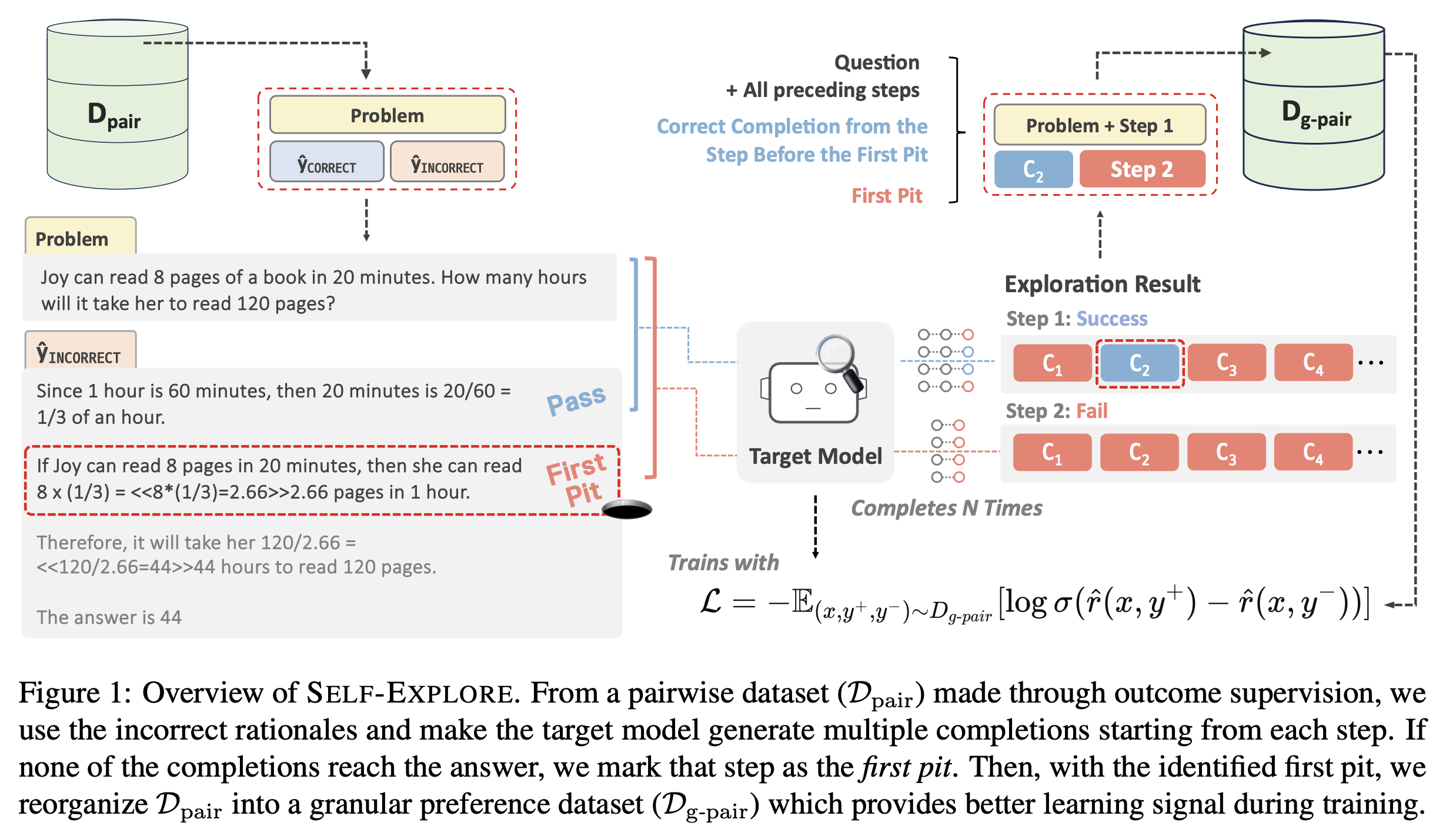

Self-Explore to Avoid the Pit!

Improving the Reasoning Capabilities of Language Models with Fine-grained Rewards

This is the official GitHub repository for Self-Explore.

Paper Link: https://arxiv.org/abs/2404.10346

Run `pip install -r requirements.txt`.

All experiments were carried out on 4 × NVIDIA A100 80GB GPUs with CUDA 12.0.

In the `data` directory, you will find the train and test files for GSM8K and MATH.

Run supervised fine-tuning (SFT, or FT for short) to obtain the base generator.

The script for this is `scripts/{task}/sft/run_ft.sh`; set `data_path` to the train file.

Fill in the necessary file and model paths, then run `sh scripts/{task}/sft/run_ft.sh` from the main directory.

Next, use the SFT model to generate N samples per problem.

To do this, go to the `gen` directory and run `sh gen_rft_data.sh`.

The script assumes 4 GPUs and generates the predictions in parallel across them.
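For intuition, parallel generation of this kind can be pictured as splitting the problem list into one contiguous shard per GPU and concatenating the per-shard outputs afterwards. The sketch below is a hypothetical illustration under that assumption only; `shard` and `merge` are illustrative helpers, not functions from `gen_rft_data.sh`, and the script may partition the data differently.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def shard(items: Sequence[T], num_shards: int) -> List[List[T]]:
    """Split items into num_shards contiguous chunks whose sizes differ by at most one."""
    if num_shards <= 0:
        raise ValueError("num_shards must be positive")
    base, extra = divmod(len(items), num_shards)
    shards: List[List[T]] = []
    start = 0
    for i in range(num_shards):
        # The first `extra` shards take one additional item each.
        size = base + (1 if i < extra else 0)
        shards.append(list(items[start:start + size]))
        start += size
    return shards


def merge(shards: List[List[T]]) -> List[T]:
    """Concatenate per-shard outputs back into the original problem order."""
    return [item for part in shards for item in part]


if __name__ == "__main__":
    problems = [f"problem-{i}" for i in range(10)]
    parts = shard(problems, 4)  # one shard per GPU (4 GPUs assumed)
    for gpu_id, part in enumerate(parts):
        # Hypothetically, each worker would run with CUDA_VISIBLE_DEVICES=<gpu_id>.
        print(f"GPU {gpu_id}: {len(part)} problems")
    assert merge(parts) == problems
```

Contiguous shards keep the merge step trivial: concatenating the outputs in GPU order restores the original problem order.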

Once `gen_rft_data.sh` completes, you will find an RFT training file and a DPO training file.
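Conceptually, both files can be derived by labeling each of the N samples according to whether its final answer matches the reference. The sketch below assumes exact-match answer checking, keeps each distinct correct solution as an RFT example, and pairs each incorrect solution with a correct one as an outcome-level DPO pair. The function name, the field names (`question`, `response`, `chosen`, `rejected`), and the pairing rule are hypothetical and may not match the files the script actually writes.

```python
import itertools
from typing import Dict, List, Tuple


def build_rft_and_dpo(
    question: str,
    samples: List[Tuple[str, str]],  # (solution_text, predicted_final_answer)
    gold_answer: str,
) -> Tuple[List[Dict[str, str]], List[Dict[str, str]]]:
    """Hypothetical sketch: split N sampled solutions into RFT examples and DPO pairs."""
    correct: List[str] = []
    incorrect: List[str] = []
    for text, pred in samples:
        # Assumption: correctness is exact match of the final answer string.
        if pred.strip() == gold_answer.strip():
            correct.append(text)
        else:
            incorrect.append(text)

    # Order-preserving deduplication of solution texts.
    unique_correct = list(dict.fromkeys(correct))
    unique_incorrect = list(dict.fromkeys(incorrect))

    # RFT data: every distinct correct solution becomes a training example.
    rft = [{"question": question, "response": t} for t in unique_correct]

    # Outcome-level DPO data: pair each incorrect solution with a correct one,
    # cycling through the correct solutions if there are fewer of them.
    dpo: List[Dict[str, str]] = []
    if unique_correct:
        for chosen, rejected in zip(itertools.cycle(unique_correct), unique_incorrect):
            dpo.append({"question": question, "chosen": chosen, "rejected": rejected})
    return rft, dpo
```

Problems with no correct sample contribute nothing to either file in this sketch, since there is no solution to fine-tune on or to use as the chosen response.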

Run RFT (rejection sampling fine-tuning) to obtain the RFT model, which serves as both the explorer and the reference model for DPO training.

The script for this is `scripts/{task}/sft/run_rft.sh`.

Fill in the necessary file and model paths, then run `sh scripts/{task}/sft/run_rft.sh` from the main directory.

To find the first pit, let the RFT model explore from each step of every rejected sample.

To do this, run `gen_step_explore.sh` in the `gen` directory, setting `data_path` to the DPO file generated earlier.

This produces a file whose name ends in `gpair_{k}.jsonl`, which is your fine-grained pairwise training data.
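One plausible reading of this step: the first pit is the first step of a rejected sample from which none of the explorer's k completions reaches the correct answer. The steps before it become shared context, the rest of the rejected sample (from the pit onward) becomes the rejected response, and a correct completion sampled from the preceding prefix becomes the chosen response. The sketch below encodes that reading under stated assumptions: `explore` is a hypothetical stand-in for sampling k completions from the RFT model, `is_correct` is a hypothetical answer checker, the explorer is also run from the question alone to seed a chosen response, and `k` is assumed to correspond to the `{k}` in `gpair_{k}.jsonl`. It is not the code in `gen_step_explore.sh`.

```python
from typing import Callable, Dict, List, Optional

# (question, prefix_steps, k) -> k sampled completions; stands in for the RFT explorer.
Explorer = Callable[[str, List[str], int], List[str]]


def find_first_pit_pair(
    question: str,
    rejected_steps: List[str],
    explore: Explorer,
    is_correct: Callable[[str], bool],
    k: int = 4,
) -> Optional[Dict[str, str]]:
    """Hypothetical sketch: locate the first pit and build one fine-grained pair."""
    # Seed: correct completions from the question alone (empty prefix).
    prev_correct = [c for c in explore(question, [], k) if is_correct(c)]
    if not prev_correct:
        return None  # nothing correct to use as the chosen response

    n = len(rejected_steps)
    for t in range(1, n + 1):
        if t == n:
            # The complete rejected sample is already known to be wrong.
            curr_correct: List[str] = []
        else:
            prefix = rejected_steps[:t]
            curr_correct = [c for c in explore(question, prefix, k) if is_correct(c)]
        if not curr_correct:
            # Step index t-1 is the first pit: no exploration through it succeeds.
            return {
                "prompt": "\n".join([question] + rejected_steps[: t - 1]),
                "chosen": prev_correct[0],  # correct continuation of the same prefix
                "rejected": "\n".join(rejected_steps[t - 1:]),
            }
        prev_correct = curr_correct
    return None  # empty rejected sample
```

Because the chosen completion is sampled from the same prefix that the rejected continuation starts from, the resulting pair differs only after the pit, which is what makes the label step-level rather than outcome-level.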

Any preference learning objective can be applied here; in our work, we chose DPO (Direct Preference Optimization).

For this, refer to `scripts/{task}/dpo/run_dpo.sh`.

- To train with outcome-supervision labels, set the training data to the DPO file generated by `gen_rft_data.sh`.

- To train with step-level fine-grained labels (ours), set the training data to the `gpair` file generated by `gen_step_explore.sh`.
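For reference, DPO scores each pair by how much more the policy prefers the chosen response over the rejected one, measured relative to the reference model (here, the RFT model), and applies a logistic loss to that margin: the loss is -log σ(β · [(log π(chosen) − log π_ref(chosen)) − (log π(rejected) − log π_ref(rejected))]). The minimal per-pair sketch below works on precomputed sequence log-probabilities; `dpo_loss` is an illustrative name and `beta = 0.1` is an illustrative default, not a value taken from `run_dpo.sh`.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """DPO loss for one pair, from summed log-probabilities of each full response."""
    # Log-ratio of policy to reference for each response (implicit rewards, up to beta).
    chosen_ratio = policy_chosen_logp - ref_chosen_logp
    rejected_ratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_ratio - rejected_ratio)
    # -log(sigmoid(margin)) = softplus(-margin), computed in a numerically stable way.
    x = -margin
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))
```

When the policy and the reference agree (margin 0) the loss is log 2; it falls toward 0 as the policy shifts probability toward the chosen response relative to the reference, and grows roughly linearly when it shifts toward the rejected one.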

The evaluation script for each task is in the `eval/{task}` directory.
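As a rough illustration of how GSM8K-style accuracy can be scored, the sketch below extracts the number after the final `####` (the format of GSM8K reference answers), falls back to the last number in the text, and reports exact-match accuracy in percent. It is a hypothetical helper, not the repository's evaluation code (which is borrowed from OVM and DeepSeek-Math); MATH answers are LaTeX expressions, typically inside `\boxed{}`, and need more careful normalization.

```python
import math
import re
from typing import List, Optional

# A number such as "-3", "1,200" or "2.5".
_NUMBER = re.compile(r"-?\d[\d,]*(?:\.\d+)?")


def extract_answer(text: str) -> Optional[str]:
    """Return the number after the last '####', else the last number in the text."""
    if "####" in text:
        found = _NUMBER.findall(text.rsplit("####", 1)[1])
        return found[0].replace(",", "") if found else None
    found = _NUMBER.findall(text)
    return found[-1].replace(",", "") if found else None


def accuracy(predictions: List[str], references: List[str]) -> float:
    """Exact-match accuracy (in %) of extracted numeric answers."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        return 0.0
    hits = 0
    for pred, ref in zip(predictions, references):
        p, r = extract_answer(pred), extract_answer(ref)
        # Compare numerically so that "7" and "7.0" count as the same answer.
        if p is not None and r is not None and math.isclose(float(p), float(r)):
            hits += 1
    return 100.0 * hits / len(references)
```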

We release our best DeepSeek-Math checkpoints, one trained on GSM8K and one on MATH, on Hugging Face.

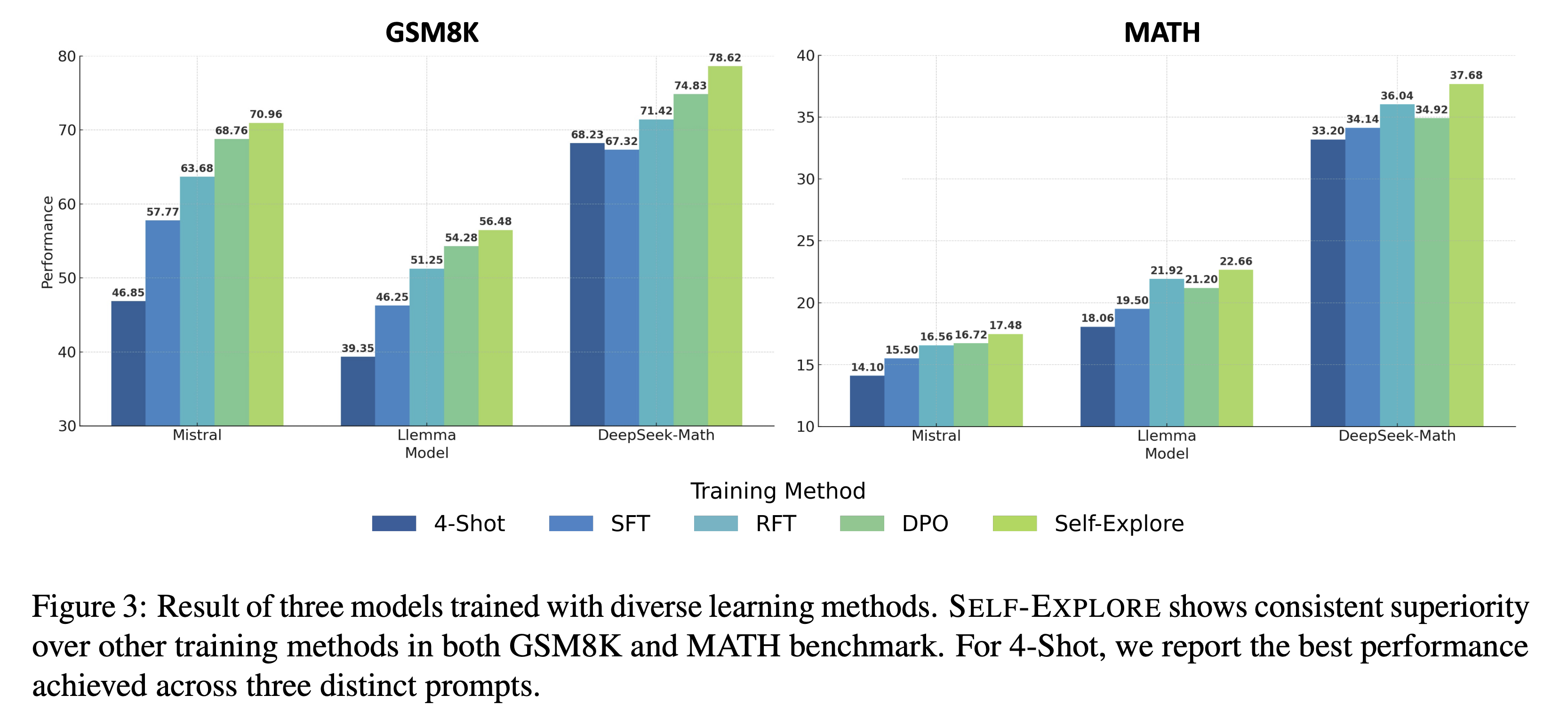

| Model | Accuracy (%) | Download |
|---|---|---|
| DeepSeek_Math_Self_Explore_GSM8K | 78.62 | 🤗 HuggingFace |
| DeepSeek_Math_Self_Explore_MATH | 37.68 | 🤗 HuggingFace |

Our evaluation code is borrowed from:

- GSM8K: OVM

- MATH: DeepSeek-Math

@misc{hwang2024selfexplore,
  title={Self-Explore to Avoid the Pit: Improving the Reasoning Capabilities of Language Models with Fine-grained Rewards},
  author={Hyeonbin Hwang and Doyoung Kim and Seungone Kim and Seonghyeon Ye and Minjoon Seo},
  year={2024},
  eprint={2404.10346},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}