graph TD;

FL[Fromal_Logic] --> A[Automata];

MDP[MDP_Data] --> ST[System_Transition];

A & ST --> BA[Buchi_Automata];

BA --> RL_R[RL_Reward];

RL_R --> RL_Agent;

RL_Agent --> BA;

- Formal Logic

Lexical Definition

Parser

- Formal Logic → Automata

a. LOMAP

https://github.com/wasserfeder/lomap

LOMAP uses Spot and ltl2dstar as its bases.

Both Spot and ltl2dstar support Spin.

b. Classes of LOMAP

graph TD;

M[Model] --> At[Automaton];

At --> Robin;

At --> Buchi;

At --> Fsa;

M --> Mk[Markov];

M --> Ts;

c. Graph Representation : networkx

- Node

- Edge : {prev_node, next_node, attribute:’could be any hashable objects’}

d.Spin format

Grammer

- ltl ::= opd | (ltl) | ltl binop ltl | unop ltl

opd

- true, false

op

- finally <> F

- globally [] G

- next X

- until U

- not !

- and &&

- or ||

- implication →

- equivalence ↔

e.Spot

f. ltl2dstar

- Buchi Automata

- RL

Finite state predict automata is a fsa with computable edges.

{states, transitions, guards, initial states, final states}

classDiagram

class Predicate{

+actionable() bool

+evaluate(classState): PredicateEvaluationResult

}

class PredicateEvaluationResult{

result: float

+and()

+or()

+invert()

+get-result(): float

}

FSA <|-- FSPA

FSPA : + PREDICATE-DICT

FSPA : + computes-guard(classState) float

FSPA <.. classState

FSPA <.. PredicateEvaluationResult

FSPA <.. Predicate

- Algorithm

PPO will the default algorithm

# environment initilization

# actor and critic model initlization

# while loop

# RUN simulation until finished

# compute V(s), remenber R

# update v

# Advantage = R + V(S_t-1) - V(S)

# gradient = log(pi(a|theta))AdvantageSometimes, Deep Q learning can also be deployed

Experience Replay - reduce vairence

Update Tearget network after C-steps - reduce varince

Double DQN(Q network for chossing actions, target network for evaluatiing) — avoide false positive

- MDP

MDP {S, A, Sigma, R, T}

R and T are unknonwn initially.

FSPA —> R

T is learned through experience

product automaton: MDP and FSPA

How to get the product automaton

Template midlevel fspa

deterministic automaton

Actor-critic method–proximal policy gradient (37) as our RL algorithm.

curriculum training

-

Reward

-

FSPA guied MDP

-

Implementation on GYM

flowchart LR

a --> b --> a

flowchart LR;

s0["s0(a)"] --> R0((R)) -->|0.9| s1["s1(b)"]

R0 -->|0.1|s0

s1 --> R1((R)) -->|1| s1

s1 --> L1((L)) -->|0.9| s0

L1 -->|0.1| s1

s0 --> L0((L)) -->|1| s0

flowchart LR;

q0((q0)) --> q0

q0 -->|a| q1((q1))

q1 --> q1

q1 --> |b|q2((q2))

q2 --> q2

flowchart LR;

q0s0[q0, s0] --> R0((R)) --> q1s0[q1, s0]

R0 --> q1s1[q1, s1]

q0s0 --> L0((L)) --> q1s0

q1s1 --> L1((L)) --> q2s0[q2, s0]

q1s1 --> R1((R)) --> q2s1[q2, s1]

L1 --> q2s1

q1s0 --> R3((R)) --> q1s1

R3 --> q1s0

q1s0 --> L3((L)) --> q1s0

Algorithm

----------------------------------------------------------------------

**********A gym wrapper for computing ltl reward

Input: PFSA, MDP State:********** S**********, Next MPD State:********** NS, **********FSA Q:********** q

**********Next FSA State:********** nq = fsa.nextQ(s, q)

**********Get all Out Edges:********** edges = fsa.outedge(nq)

**********Compute the smallest predicate:********** p = samllest(edges, NS)

**********Reward = p

return********** Reward

flowchart LR;

MDPS[MDP State] --> NN[MLP]-->action1

NN --> action2

FSA[FSA state] --> NN

- Grid World, Fully Observable, Dense Reward: Distance to target ,Discrite Control

- Grid World, Partially Observable, Sparse Reward: 0, 1, finished or not, Discrete Control

- Continues World, PPO

- MiniGrid

- pybullt manipulation

- meta-world (mojo-co based environment) This environment is for Multi-task RL and Meta-Learning

- The reward will escalate near 0;

- State space expanded by the number of fspa states. A simple fspa can have 10 or more states. The total fspa guided MDP’s state number is multiplied by the factor of 10 or more.

- TLTL’s dense rewards are hard to converge to the “real goal”. The agent gets more reward staying near the Predicate boundary, where has the highest robustness, than going to the next fspa state. Once the fspa runs into the next state, the robustness will drop dramatically as the target state changes.

We test our algorithm on a simple 8*8 minigrid world. The reward is composed of four parts:

💡 ********Note: we do not use TLTL robustness here as rewards here.********reward = 0

next_p = self.fspa.get_next_state_from_mdp_state(self.current_p)

"Reward I: Encourage FSPA state transition"

if next_p != self.current_p:

reward = 5

terminated = (next_p in self.fspa.final)

"Reward II: Complete the whole mission"

if terminated:

# print(terminated,"next p", next_p, self.env.agent_pos, reward)

reward = 50

#reward = reward - 0.9 * (self.step_count/self.max_steps)

"Reward III: Punish transition to trap states"

if next_p in self.fspa.trap:

truncated = True

if truncated:

reward = -50

"Reward IV: Energy for steps "

reward = reward - 10*(self.step_count/self.max_steps)

self.current_p = next_penv = TltlWrapper(env, tltl="F p1 & F p2 & F p3 & (! p1 U p2) & (! p2 U p3)",

predicates={'p1': PositionPredicate(True, [3, 3]), 'p2': PositionPredicate(True, [5, 5]),

'p3': PositionPredicate(True, [2, 2])})- reset: randomly initialize the fspa state and mdp state

-

F p1 & F p2 & (! p1 U p2)

-

F p1 & F p2 & F p3 & (! p1 U p2) & (! p2 U p3)

- Reward fspa

- Test Result

Always begin from a fixed state.

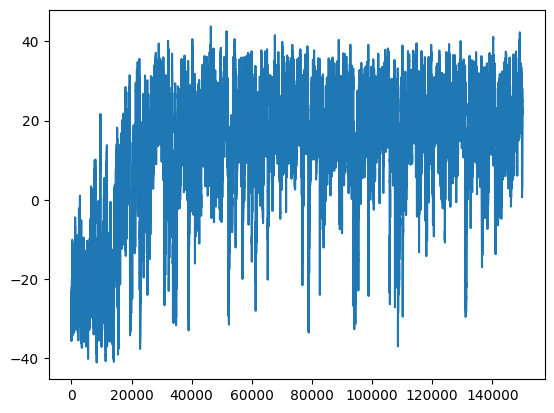

- Reward During Training

-

A formal methods approach to interpret-able reinforcement learning for robotic planning

A formal methods approach to interpretable reinforcement learning.pdf

-

Temporal Logic Guided Safe Reinforcement Learning Using Control Barrier Functions

Temporal Logic Guided Safe Reinforcement Learning Using Control Barrier functions.pdf