This is the official source code for PVN3D: A Deep Point-wise 3D Keypoints Voting Network for 6DoF Pose Estimation, CVPR 2020. (PDF, Video_bilibili, Video_youtube).

We optimized and applied PVN3D to the robotic manipulation contest OCRTOC (IROS 2020: Open Cloud Robot Table Organization Challenge) and won 2nd place! The model was trained on synthetic data generated by a rendering engine plus only a few frames (about 100) of real data, yet it generalizes to real scenes during inference, demonstrating its capability for cross-domain generalization.

- The following setup is for PyTorch 1.0.1. For PyTorch 1.5 and CUDA 10, switch to the `pytorch-1.5` branch.

- Install CUDA 9.0.

- Set up the Python environment from `requirement.txt`:

`pip3 install -r requirement.txt`

- Install tkinter:

`sudo apt install python3-tk`

- Install python-pcl.

- Install PointNet++ (adapted from Pointnet2_PyTorch):

`python3 setup.py build_ext`

- LineMOD: Download the preprocessed LineMOD dataset from here (adapted from DenseFusion). Unzip it and link the unzipped `Linemod_preprocessed/` to `pvn3d/datasets/linemod/Linemod_preprocessed`:

`ln -s path_to_unzipped_Linemod_preprocessed pvn3d/datasets/linemod/`

- YCB-Video: Download the YCB-Video Dataset from PoseCNN. Unzip it and link the unzipped `YCB_Video_Dataset` to `pvn3d/datasets/ycb/YCB_Video_Dataset`:

`ln -s path_to_unzipped_YCB_Video_Dataset pvn3d/datasets/ycb/`
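If you prefer to script the linking step, the sketch below does the same thing as the `ln -s` commands above using only Python's standard library, and refuses to overwrite an existing path that points somewhere else. The helper name `link_dataset` is hypothetical; the target paths come from the instructions above.

```python
import os
from pathlib import Path


def link_dataset(unzipped_dir: str, link_path: str) -> Path:
    """Create a symlink at link_path pointing to unzipped_dir (like `ln -s`).

    Hypothetical helper: raises if the source directory is missing, or if
    link_path already exists and is not a symlink to the same directory.
    """
    src = Path(unzipped_dir).resolve()
    dst = Path(link_path)
    if not src.is_dir():
        raise FileNotFoundError(f"dataset directory not found: {src}")
    if dst.is_symlink():
        # An existing link is accepted only if it already points at src.
        if dst.resolve() == src:
            return dst
        raise FileExistsError(f"{dst} already links to {dst.resolve()}")
    if dst.exists():
        raise FileExistsError(f"{dst} exists and is not a symlink")
    dst.parent.mkdir(parents=True, exist_ok=True)
    os.symlink(src, dst, target_is_directory=True)
    return dst
```

For example, `link_dataset("path_to_unzipped_YCB_Video_Dataset", "pvn3d/datasets/ycb/YCB_Video_Dataset")` mirrors the YCB-Video command; calling it twice is harmless because an existing correct link is simply returned.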

- First, generate synthetic data for each object using the scripts from raster triangle.

- Train the model for the target object. Taking the object `ape` as an example:

`cd pvn3d`

`python3 -m train.train_linemod_pvn3d --cls ape`

The trained checkpoints are stored in `train_log/linemod/checkpoints/{cls}/`, i.e. `train_log/linemod/checkpoints/ape/` in this example.

- Start evaluation with the following commands (from `eval_linemod.sh`):

`cls='ape'`

`tst_mdl=train_log/linemod/checkpoints/${cls}/${cls}_pvn3d_best.pth.tar`

`python3 -m train.train_linemod_pvn3d -checkpoint $tst_mdl -eval_net --test --cls $cls`

You can evaluate a different checkpoint by changing `tst_mdl` to the path of your target model.

- We provide our pre-trained models for each object at the onedrive link or the baiduyun link (access code (提取码): 8kmp). Download them and move them to their corresponding folders. For example, move `ape_pvn3d_best.pth.tar` to `train_log/linemod/checkpoints/ape/`. Then set `tst_mdl=train_log/linemod/checkpoints/ape/ape_pvn3d_best.pth.tar` for testing.
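Because every LineMOD object gets its own model, it can help to generate the per-object commands programmatically. The sketch below only rebuilds the argument lists shown above (`--cls`, `-checkpoint`, `-eval_net`, `--test`) and the checkpoint pattern `train_log/linemod/checkpoints/{cls}/{cls}_pvn3d_best.pth.tar`. The class list is an assumption (the 13 LineMOD objects commonly used for evaluation), and the helper names are hypothetical; check the names against what the repository's code expects before running anything.

```python
from __future__ import annotations

import subprocess
from pathlib import Path

# Assumed class names: the 13 LineMOD objects commonly used for evaluation.
LINEMOD_CLASSES = [
    "ape", "benchvise", "cam", "can", "cat", "driller", "duck",
    "eggbox", "glue", "holepuncher", "iron", "lamp", "phone",
]


def best_checkpoint(cls: str) -> str:
    """Checkpoint path pattern used in eval_linemod.sh (relative to pvn3d/)."""
    return f"train_log/linemod/checkpoints/{cls}/{cls}_pvn3d_best.pth.tar"


def train_cmd(cls: str) -> list[str]:
    return ["python3", "-m", "train.train_linemod_pvn3d", "--cls", cls]


def eval_cmd(cls: str, checkpoint: str | None = None) -> list[str]:
    tst_mdl = checkpoint or best_checkpoint(cls)
    return ["python3", "-m", "train.train_linemod_pvn3d",
            "-checkpoint", tst_mdl, "-eval_net", "--test", "--cls", cls]


def run_all(dry_run: bool = True, cwd: str = "pvn3d") -> None:
    """Evaluate every class whose best checkpoint exists under cwd."""
    for cls in LINEMOD_CLASSES:
        ckpt = Path(cwd) / best_checkpoint(cls)
        if not ckpt.is_file():
            print(f"skip {cls}: no checkpoint at {ckpt}")
            continue
        cmd = eval_cmd(cls)
        print(" ".join(cmd))
        if not dry_run:
            # check=True stops at the first failing evaluation.
            subprocess.run(cmd, cwd=cwd, check=True)
```

Calling `run_all()` only prints the commands it would run; pass `dry_run=False` to execute them.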

- After training your models or downloading the pre-trained models, you can start the demo with the following commands (from `demo_linemod.sh`):

`cls='ape'`

`tst_mdl=train_log/linemod/checkpoints/${cls}/${cls}_pvn3d_best.pth.tar`

`python3 -m demo -dataset linemod -checkpoint $tst_mdl -cls $cls`

The visualization results will be stored in `train_log/linemod/eval_results/{cls}/pose_vis`.

- Preprocess the validation set to speed up training:

`cd pvn3d`

`python3 -m datasets.ycb.preprocess_testset`

- Start training on the YCB-Video dataset by:

`python3 -m train.train_ycb_pvn3d`

The trained model checkpoints are stored in `train_log/ycb/checkpoints/`.

- Start evaluation with the following commands (from `eval_ycb.sh`):

`tst_mdl=train_log/ycb/checkpoints/pvn3d_best.pth.tar`

`python3 -m train.train_ycb_pvn3d -checkpoint $tst_mdl -eval_net --test`

You can evaluate a different checkpoint by changing `tst_mdl` to the path of your target model.

- We provide our pre-trained models at the onedrive link or the baiduyun link (access code (提取码): h2i5). Download the YCB pre-trained model, move it to `train_log/ycb/checkpoints/`, and modify `tst_mdl` for testing.

- After training your model or downloading the pre-trained model, you can start the demo with the following commands (from `demo_ycb.sh`):

`tst_mdl=train_log/ycb/checkpoints/pvn3d_best.pth.tar`

`python3 -m demo -checkpoint $tst_mdl -dataset ycb`

The visualization results will be stored in `train_log/ycb/eval_results/pose_vis`.
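The YCB-Video steps above run in a fixed order from inside `pvn3d/`. A minimal driver, assuming you want to chain them from Python rather than typing them by hand, might look like this. The `STEPS` table and `run_pipeline` function are illustrative names; the commands and checkpoint path are copied from the instructions above.

```python
from __future__ import annotations

import subprocess

TST_MDL = "train_log/ycb/checkpoints/pvn3d_best.pth.tar"

# (name, argv) pairs copied from the YCB-Video instructions; run from pvn3d/.
STEPS = [
    ("preprocess", ["python3", "-m", "datasets.ycb.preprocess_testset"]),
    ("train", ["python3", "-m", "train.train_ycb_pvn3d"]),
    ("eval", ["python3", "-m", "train.train_ycb_pvn3d",
              "-checkpoint", TST_MDL, "-eval_net", "--test"]),
    ("demo", ["python3", "-m", "demo", "-checkpoint", TST_MDL,
              "-dataset", "ycb"]),
]


def run_pipeline(only: list[str] | None = None, dry_run: bool = True,
                 cwd: str = "pvn3d") -> list[list[str]]:
    """Run (or just print) the selected steps in order; return their argv lists."""
    selected = [(name, cmd) for name, cmd in STEPS if only is None or name in only]
    for name, cmd in selected:
        print(f"[{name}] {' '.join(cmd)}")
        if not dry_run:
            # check=True aborts the pipeline as soon as one step fails.
            subprocess.run(cmd, cwd=cwd, check=True)
    return [cmd for _, cmd in selected]
```

For instance, `run_pipeline(only=["eval", "demo"], dry_run=False)` evaluates and visualizes a downloaded model without retraining.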

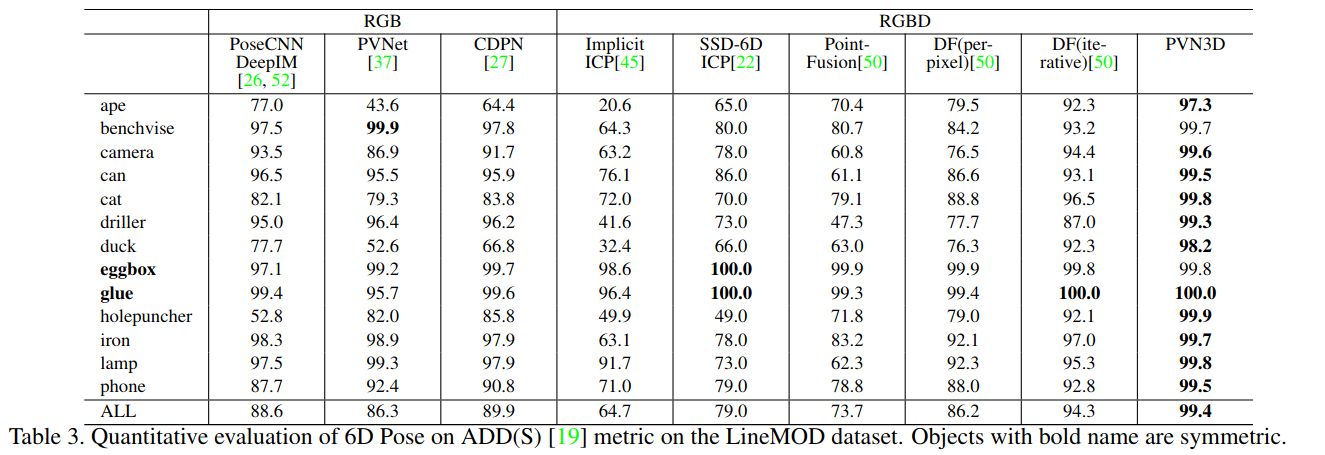

- Evaluation result on the LineMOD dataset:

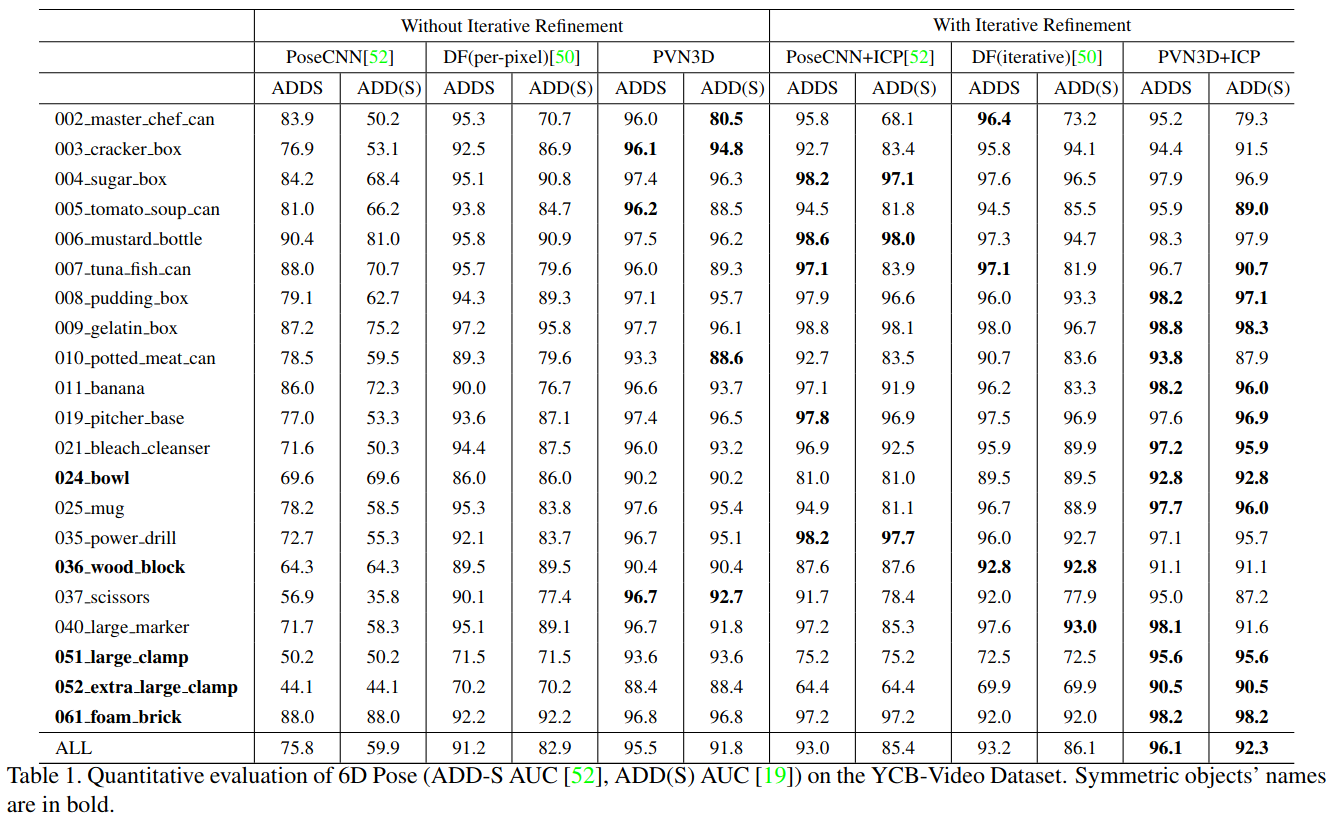

- Evaluation result on the YCB-Video dataset:

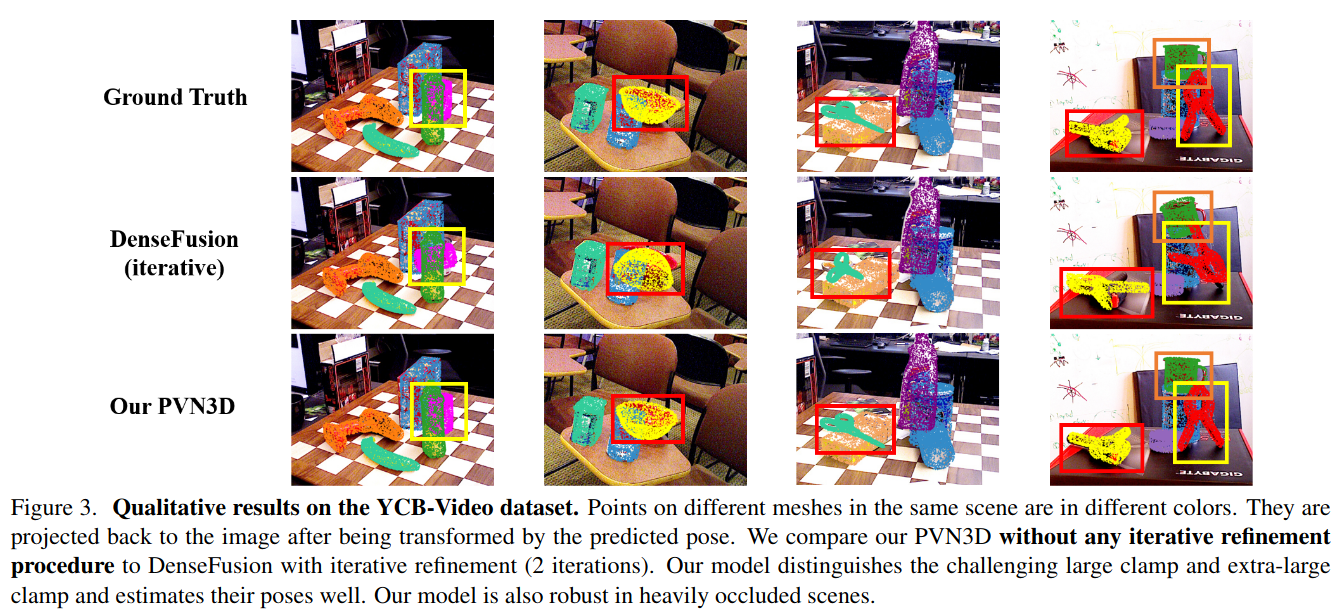

- Visualization of some predicted poses on the YCB-Video dataset:

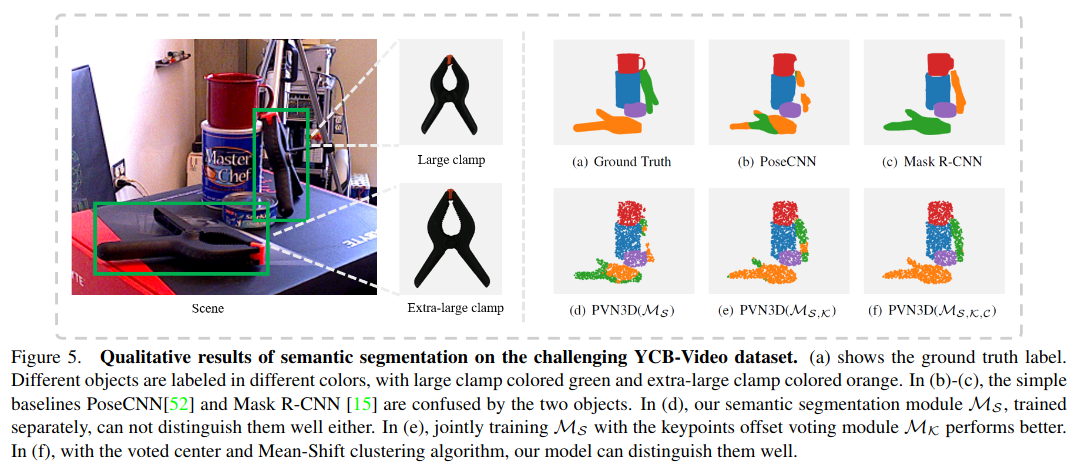

- Joint training for distinguishing objects with similar appearance but different sizes:

- Compile the FPS scripts (adapted from clean-pvnet):

`cd lib/utils/dataset_tools/fps/`

`python3 setup.py build_ext --inplace`
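FPS here is farthest point sampling, which PVN3D uses to pick well-spread 3D keypoints on each object model. The compiled extension is what the repository actually calls; the NumPy sketch below is only a slow reference version of the same greedy idea, handy for sanity-checking keypoints on small point sets. The function name `farthest_point_sampling` and the optional `seed` point (for example an object center) are assumptions for illustration.

```python
from __future__ import annotations

import numpy as np


def farthest_point_sampling(points: np.ndarray, k: int,
                            seed: np.ndarray | None = None) -> np.ndarray:
    """Greedily pick k rows of `points` (N x 3) that are mutually far apart.

    If `seed` is given (e.g. an object center), distances are initialized from
    it and the seed itself is not returned; otherwise row 0 is picked first.
    """
    points = np.asarray(points, dtype=np.float64)
    n = points.shape[0]
    if not 0 < k <= n:
        raise ValueError("k must be in [1, N]")
    chosen: list[int] = []
    if seed is None:
        chosen.append(0)
        ref = points[0]
    else:
        ref = np.asarray(seed, dtype=np.float64)
    # min_dist[i] = distance from point i to its nearest selected point (or seed).
    min_dist = np.linalg.norm(points - ref, axis=1)
    while len(chosen) < k:
        idx = int(np.argmax(min_dist))
        chosen.append(idx)
        min_dist = np.minimum(min_dist, np.linalg.norm(points - points[idx], axis=1))
    return points[chosen]
```

Each iteration costs O(N), so selecting k keypoints is O(kN), which is why a compiled version is preferable for dense object models.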

- Generate information about the objects in your dataset, e.g. radius, 3D keypoints, etc., with the `gen_obj_info.py` script:

`cd ../`

`python3 gen_obj_info.py --help`
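`gen_obj_info.py` is the authoritative tool here (run it with `--help` to see its options). To make the quantities concrete, here is a NumPy sketch of one common way to derive them from model vertices: the center as the midpoint of the axis-aligned bounding box, and the radius as the largest vertex distance from that center. These definitions and the function `object_info` are assumptions for illustration and may differ from what the script computes; whatever you use, keep the units consistent with the rest of your data.

```python
from __future__ import annotations

import numpy as np


def object_info(vertices: np.ndarray) -> dict:
    """Bounding-box center, radius, and extent of an N x 3 vertex array.

    Assumed definitions: center = midpoint of the axis-aligned bounding box,
    radius = max distance from any vertex to that center.
    """
    v = np.asarray(vertices, dtype=np.float64)
    if v.ndim != 2 or v.shape[1] != 3:
        raise ValueError("expected an N x 3 array of vertices")
    lo, hi = v.min(axis=0), v.max(axis=0)
    center = (lo + hi) / 2.0
    radius = float(np.linalg.norm(v - center, axis=1).max())
    return {"center": center, "radius": radius, "extent": hi - lo}
```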

- Modify the info of your new dataset in `PVN3D/pvn3d/common.py`.

- Write your dataset preprocessing script following `PVN3D/pvn3d/datasets/ycb/ycb_dataset.py` (for multiple objects per scene) or `PVN3D/pvn3d/datasets/linemod/linemod_dataset.py` (for a single object per scene). Note that you should properly modify or call the functions that get your model info, such as 3D keypoints, center points, and radius.

- (Important!) Visualize and check whether you processed the data properly, e.g. the projected keypoints and center point, the semantic label of each point, etc.
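The visual check above requires projecting 3D points into the image. A minimal pinhole-camera sketch follows, assuming the ground-truth pose is given as a rotation `R` (3x3) and translation `t` (3,) that map object coordinates to camera coordinates, and `K` is the 3x3 intrinsic matrix. These names are illustrative, not the repository's API.

```python
import numpy as np


def project_points(pts_obj, R, t, K) -> np.ndarray:
    """Project N x 3 object-frame points to N x 2 pixel coordinates (u, v)."""
    pts_obj = np.asarray(pts_obj, dtype=np.float64)
    # Object frame -> camera frame: x_cam = R @ x_obj + t, applied row-wise.
    pts_cam = pts_obj @ np.asarray(R, dtype=np.float64).T + np.asarray(t, dtype=np.float64).reshape(1, 3)
    if np.any(pts_cam[:, 2] <= 0):
        raise ValueError("all points must lie in front of the camera (z > 0)")
    # Camera frame -> homogeneous pixels, then divide by depth.
    uvw = pts_cam @ np.asarray(K, dtype=np.float64).T
    return uvw[:, :2] / uvw[:, 2:3]
```

Drawing the returned pixels on the RGB image (for example with OpenCV's `cv2.circle`) should place the keypoints and center on the object; if they drift, the pose, intrinsics, or units are likely inconsistent.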

- For inference, make sure that you properly load the 3D keypoints, center point, and radius of your objects, expressed in the object coordinate system, in `PVN3D/pvn3d/lib/utils/pvn3d_eval_utils.py`.
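The object-frame keypoints matter because, at inference, the network predicts the same keypoints in the camera frame, and the pose is recovered by least-squares fitting between the two point sets. The sketch below is a standard SVD-based rigid fit (Kabsch style, no scaling) written with NumPy; it illustrates the idea and is not the code in `pvn3d_eval_utils.py`. The function name `fit_pose` is hypothetical.

```python
import numpy as np


def fit_pose(kps_obj, kps_cam):
    """Least-squares R, t such that kps_cam is approximately kps_obj @ R.T + t.

    Both inputs are matching N x 3 arrays with N >= 3 non-collinear points.
    """
    A = np.asarray(kps_obj, dtype=np.float64)
    B = np.asarray(kps_cam, dtype=np.float64)
    if A.shape != B.shape or A.ndim != 2 or A.shape[1] != 3 or A.shape[0] < 3:
        raise ValueError("need matching N x 3 arrays with N >= 3")
    ca, cb = A.mean(axis=0), B.mean(axis=0)
    H = (A - ca).T @ (B - cb)  # 3 x 3 cross-covariance of centered points
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = cb - R @ ca
    return R, t
```

If the object-frame keypoints are loaded in the wrong units or frame, this fit still returns a rotation, just a wrong one, which is why the loading step above deserves care.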

Please cite PVN3D if you use this repository in your publications:

@InProceedings{He_2020_CVPR,

author = {He, Yisheng and Sun, Wei and Huang, Haibin and Liu, Jianran and Fan, Haoqiang and Sun, Jian},

title = {PVN3D: A Deep Point-Wise 3D Keypoints Voting Network for 6DoF Pose Estimation},

booktitle = {IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2020}

}