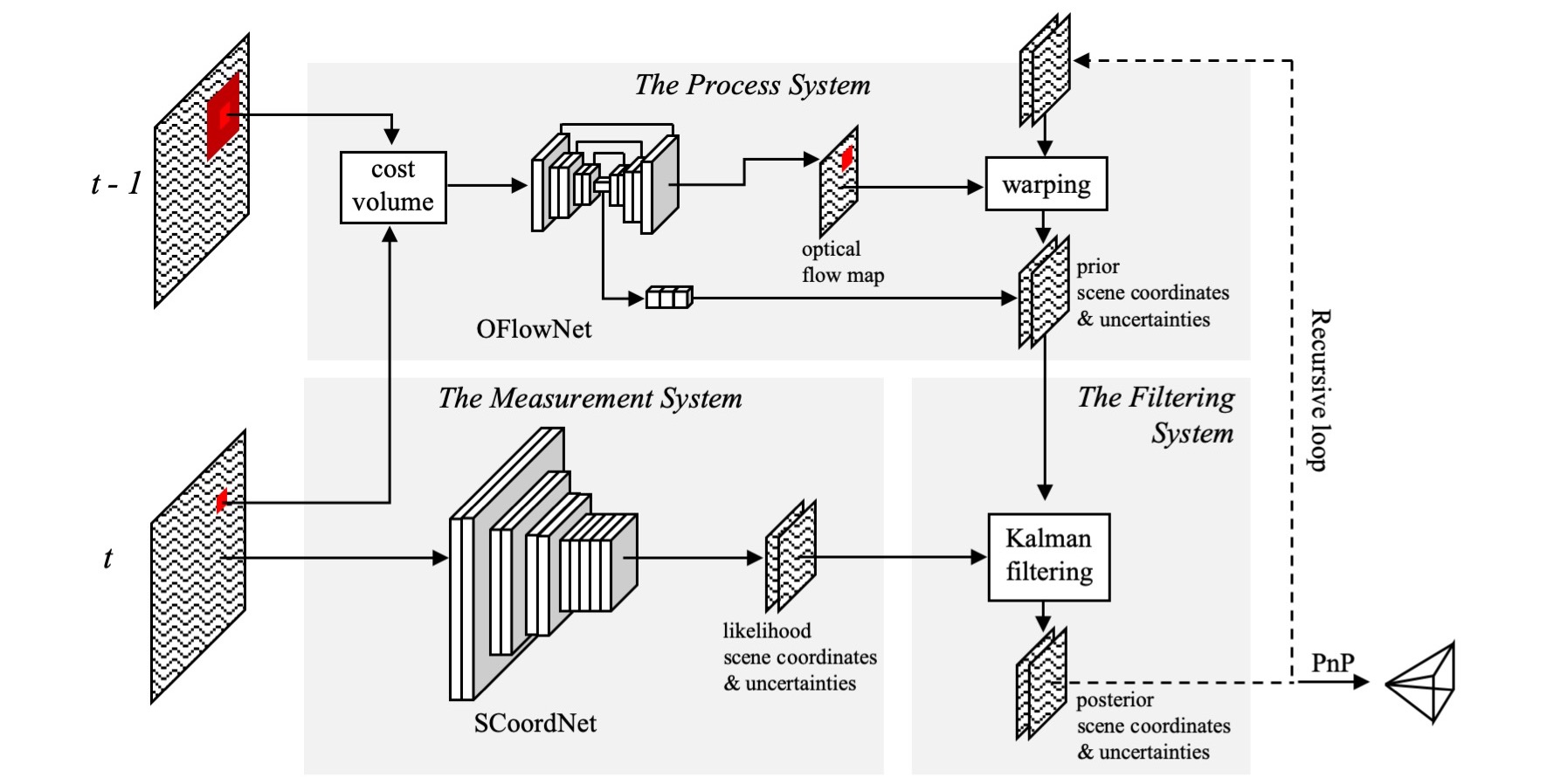

This is a study project on Bayesian state estimation in a deep learning framework. KFNet estimates scene coordinates (i.e., point clouds) with a measurement system and a process system, and uses a Kalman filter formulation to refine the estimated point clouds. This repository is adapted from the main KFNet codebase.
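
To make the filtering idea concrete, here is a minimal sketch of a textbook Kalman measurement update applied independently at each pixel: the process system's predicted coordinate is the prior, and the measurement system's coordinate is the observation. It assumes each scene coordinate carries a single isotropic variance (one scalar shared by x, y and z); the function names are hypothetical and this is not KFNet's actual implementation.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def kalman_update(prior: Vec3, prior_var: float,
                  meas: Vec3, meas_var: float) -> Tuple[Vec3, float]:
    """Fuse one pixel's predicted (process) and measured scene coordinate.

    Assumption: isotropic Gaussian per pixel, i.e. one scalar variance
    shared by the x, y, z components (an illustrative simplification).
    """
    # Kalman gain: how much to trust the measurement relative to the prior.
    gain = prior_var / (prior_var + meas_var)
    # Posterior mean moves from the prior toward the measurement by the gain.
    fused = tuple(p + gain * (m - p) for p, m in zip(prior, meas))
    # Posterior variance is never larger than either input variance.
    fused_var = (1.0 - gain) * prior_var
    return fused, fused_var


def filter_frame(priors: List[Vec3], prior_vars: List[float],
                 meas: List[Vec3], meas_vars: List[float]):
    """Apply the per-pixel update to a whole (flattened) coordinate map."""
    return [kalman_update(p, pv, m, mv)
            for p, pv, m, mv in zip(priors, prior_vars, meas, meas_vars)]
```

With a prior variance of 0.04 and a measurement variance of 0.01, the gain is 0.8, so the fused coordinate lands 80% of the way toward the measurement and its variance drops to 0.008.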

You can either follow the evaluation procedure below to predict the point clouds, or download the point clouds we have already obtained here and skip to the visualization step.

The original paper, as well as our presentation video and slides, can be found here.

The codebase requires CUDA 9.0, cuDNN 7, and TensorFlow 1.10 (GPU). Since these are not the latest versions, we have built a Docker container that bundles all of the required dependencies. To use it, Docker must be set up on your system; the installation procedure is explained below.

If Docker is not already installed, follow this tutorial or run the following commands to install docker.io; otherwise, skip to the next step.

```bash
sudo apt update
sudo apt install docker.io
sudo systemctl start docker
sudo systemctl enable docker
```

Follow the steps here to install the NVIDIA Container Toolkit. If the console output shows your GPU, continue to the next steps.

Download our Docker image, then load it:

```bash
docker load < kfnetv1.tar.gz
```

Check that the image is present by running:

```bash
docker image ls
```

Then start a container from the image:

```bash
docker run -it --rm --gpus all -v $(pwd):/workspace/ gokulkfnet/cuda9-cudnn7-tf1.10-gpu:v1 bash
```

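Note: `-it` runs the container interactively with a terminal attached, `--rm` automatically deletes the container once you exit its shell, `--gpus all` exposes all GPUs to the container, and `-v $(pwd):/workspace/` mounts the current directory at `/workspace/` inside the container.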

Inside the container, go to the workspace folder:

```bash
cd ../workspace
```

- Download the models from here and place them in the `./models` folder (see also the main repository).
- Download the prepared labels and images as described here and place them in the `./inputs` folder.

To predict scene coordinates, run:

```bash
python KFNet/eval.py --input_folder <input folder> --output_folder <output folder> --model_folder ./models/KFNet/<scene> --scene <scene>
```

Example:

```bash
python KFNet/eval.py --input_folder ./input --output_folder ./output/fire --model_folder ./models/KFNet/fire --scene fire
```

Note: this will save the process, measured and filtered scene coordinates.
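
If you have models for several scenes, the single-scene command above can be looped over. The sketch below only assumes the folder layout from the example (one model folder per scene under `./models/KFNet/`); the scene list and the helper names are hypothetical, so adjust them to the models you actually downloaded. It prints the commands by default and only executes them when `dry_run=False`.

```python
import shlex
import subprocess
from typing import List

# Hypothetical scene list (7-Scenes names); keep only scenes you have models for.
SCENES = ["chess", "fire", "heads", "office", "pumpkin", "redkitchen", "stairs"]


def kfnet_eval_command(scene: str, input_folder: str = "./input",
                       output_root: str = "./output") -> List[str]:
    """Build the KFNet/eval.py argument list for one scene."""
    return [
        "python", "KFNet/eval.py",
        "--input_folder", input_folder,
        "--output_folder", "{}/{}".format(output_root, scene),
        "--model_folder", "./models/KFNet/{}".format(scene),
        "--scene", scene,
    ]


def run_all(scenes: List[str], dry_run: bool = True) -> None:
    """Print (and optionally run) the evaluation command for each scene."""
    for scene in scenes:
        cmd = kfnet_eval_command(scene)
        print(" ".join(shlex.quote(arg) for arg in cmd))
        if not dry_run:
            # check=True raises CalledProcessError on the first failing scene.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_all(SCENES)
```

Passing the arguments as a list (rather than one shell string) avoids quoting problems with folder names that contain spaces.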

To predict optical flow, run:

```bash
python OFlowNet/eval.py --input_folder <input folder> --output_folder <output folder> --model_folder ./models/OFlowNet --scene <scene>
```

Example:

```bash
python OFlowNet/eval.py --input_folder ./input --output_folder ./output/fire --model_folder ./models/OFlowNet --scene fire
```
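
Optical flow is commonly inspected with an HSV color coding: flow direction becomes hue and flow magnitude becomes brightness. The sketch below shows that mapping for a small flow field stored as nested lists of `(dx, dy)` pairs. It illustrates the general technique and is not necessarily what `OpticalFlowVisualization.ipynb` does; the function names are hypothetical.

```python
import colorsys
import math
from typing import List, Tuple

Flow = List[List[Tuple[float, float]]]
RGB = Tuple[int, int, int]


def flow_to_rgb(dx: float, dy: float, max_mag: float) -> RGB:
    """Map one flow vector to an 8-bit RGB color."""
    # Direction (radians from atan2, in [-pi, pi]) mapped to hue in [0, 1].
    hue = (math.atan2(dy, dx) + math.pi) / (2.0 * math.pi)
    # Magnitude normalized by the largest vector in the field -> brightness.
    value = min(math.hypot(dx, dy) / max_mag, 1.0) if max_mag > 0 else 0.0
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, value)
    return int(round(r * 255)), int(round(g * 255)), int(round(b * 255))


def flow_field_to_rgb(flow: Flow) -> List[List[RGB]]:
    """Color a whole flow field, normalizing by its maximum magnitude."""
    max_mag = max(math.hypot(dx, dy) for row in flow for dx, dy in row)
    return [[flow_to_rgb(dx, dy, max_mag) for dx, dy in row] for row in flow]
```

Static pixels come out black, and pixels moving in opposite directions get complementary hues.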

The Jupyter notebooks we use for visualization are written in Python 3.7 and require Open3D (for point cloud visualization) and OpenCV. You can install these dependencies by running:

```bash
pip install -r requirements.txt
```

With all dependencies installed:

- open `PointCloudVisualization.ipynb` to visualize the point clouds;
- open `OpticalFlowVisualization.ipynb` to visualize the optical flow.

We modified the thresholding mechanism used to reject outliers in the point clouds. By default, KFNet rejects outliers with a static threshold on the uncertainty maps; we rewrote this function to determine the threshold automatically from the uncertainty maps.

We also modified the evaluation script to output the process, measurement, and filtered states (point clouds).
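
Our exact auto-thresholding rule is not spelled out here. As a hedged illustration of the idea, the sketch below applies Otsu's method, a standard automatic threshold, to the per-pixel uncertainty values and keeps only points whose uncertainty falls below the chosen cut. Otsu's method is a stand-in chosen for this example, and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def otsu_threshold(values: Sequence[float], bins: int = 64) -> float:
    """Return the cut that maximizes between-class variance (Otsu's method)."""
    if not values:
        raise ValueError("empty uncertainty map")
    if bins < 2:
        raise ValueError("need at least two histogram bins")
    lo, hi = min(values), max(values)
    if hi == lo:
        return math.inf  # constant map: nothing to separate, keep everything
    width = (hi - lo) / bins
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1
    centers = [lo + (i + 0.5) * width for i in range(bins)]
    total = len(values)
    sum_all = sum(h * c for h, c in zip(hist, centers))

    best_cut, best_score = hi, -1.0
    w0, sum0 = 0, 0.0
    for i in range(bins - 1):  # candidate cut between bin i and bin i + 1
        w0 += hist[i]
        sum0 += hist[i] * centers[i]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        mu0, mu1 = sum0 / w0, (sum_all - sum0) / w1
        score = w0 * w1 * (mu0 - mu1) ** 2  # proportional to between-class variance
        if score > best_score:
            best_cut, best_score = lo + (i + 1) * width, score
    return best_cut


def reject_outliers(points: Sequence[T], uncertainties: Sequence[float],
                    bins: int = 64) -> Tuple[List[T], float]:
    """Keep points whose uncertainty lies below the automatic threshold."""
    cut = otsu_threshold(uncertainties, bins)
    kept = [p for p, u in zip(points, uncertainties) if u < cut]
    return kept, cut
```

Unlike a fixed threshold, the cut adapts to each frame's uncertainty distribution, which is the motivation for auto-thresholding.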

With the point clouds estimated, we can solve for the camera poses using the Perspective-n-Point (PnP) method with RANSAC, followed by refinement through nonlinear optimization that minimizes the reprojection error. This step has different dependencies, packaged in a separate Docker container based on Ubuntu 20.04, which we provide here. Follow the steps below to obtain the pose estimates.

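- Download the files from the link above and unzip them.
- Load the Docker image, check that it is present, and start a container:

  ```bash
  docker load < PnP_Docker.tar
  docker image ls
  docker run -it --rm --gpus all -v $(pwd):/workspace/ ubuntu/ubuntu:20.04 bash
  ```

- Then unzip the code and enter its folder: `unzip PnP.zip && cd PnP`
- Run `unzip PnP_Files.zip`.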

- Place `pnp-input` and `pnp-new` in the main repository.

- Enter the folder: `cd pnp-new`

To get the camera poses, run:

```bash
python main.py <path_to_output_file_list> <output_folder> --gt <path_to_ground_truth_pose_list> --thread <num_threads>
```

Example:

```bash
python main.py ../pnp-input/fire/cord_list.txt ./pnp-output/ --gt ../pnp-input/fire/gt_pose_list.txt --thread 32
```
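
The quantity that both the RANSAC step and the nonlinear refinement work with is the reprojection error: the pixel distance between where a predicted 3D scene coordinate projects under a candidate pose and the pixel it was predicted for. The sketch below computes it for a pinhole camera with intrinsics (fx, fy, cx, cy) and no lens distortion, and counts RANSAC-style inliers under a pixel threshold. The camera model, the threshold value, and the helper names are assumptions for illustration, not the internals of `main.py`.

```python
import math
from typing import Sequence, Tuple

Vec3 = Sequence[float]
Mat3 = Sequence[Sequence[float]]
Intrinsics = Tuple[float, float, float, float]  # fx, fy, cx, cy (assumed pinhole)


def reprojection_error(X: Vec3, uv: Sequence[float], R: Mat3, t: Vec3,
                       K: Intrinsics) -> float:
    """Pixel distance between the projection of world point X and pixel uv."""
    fx, fy, cx, cy = K
    # World -> camera coordinates: Xc = R X + t
    xc = [sum(R[r][c] * X[c] for c in range(3)) + t[r] for r in range(3)]
    if xc[2] <= 0:
        return math.inf  # behind the camera: can never count as an inlier
    u = fx * xc[0] / xc[2] + cx
    v = fy * xc[1] / xc[2] + cy
    return math.hypot(u - uv[0], v - uv[1])


def count_inliers(points3d, pixels, R: Mat3, t: Vec3, K: Intrinsics,
                  threshold_px: float = 8.0) -> int:
    """Number of 2D-3D correspondences a pose hypothesis explains.

    threshold_px is a hypothetical default; tune it for your data.
    """
    return sum(1 for X, uv in zip(points3d, pixels)
               if reprojection_error(X, uv, R, t, K) < threshold_px)
```

RANSAC keeps the pose hypothesis with the most inliers; the refinement then adjusts that pose to reduce the reprojection error over those inliers.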

Presentation Slides: https://drive.google.com/file/d/17f3e22c-c8zTfKAxjqHQ3oCDh7V22oQg/view?usp=sharing