The tremendous recent advances in generative artificial intelligence techniques have led to significant successes and promise in a wide range of applications, from conversational agents and textual content generation to voice and visual synthesis. Amid the rise of generative AI and its increasingly widespread adoption, there has been growing concern over its use for malicious purposes. In the realm of visual content synthesis, key areas of concern have been image forgery (e.g., generation of images containing or derived from copyrighted content) and data poisoning (i.e., generation of adversarially contaminated images). Motivated to address these concerns and encourage responsible generative AI, we introduce the DeepfakeArt Challenge, a large-scale challenge benchmark dataset designed specifically to aid in the building of machine learning algorithms for generative AI art forgery and data poisoning detection. The dataset comprises over 32,000 records across a variety of generative forgery and data poisoning techniques; each entry consists of a pair of images that are either forgeries / adversarially contaminated or not. Each of the generated images in the DeepfakeArt Challenge benchmark dataset has been quality checked in a comprehensive manner. The DeepfakeArt Challenge is a core part of GenAI4Good, a global open source initiative for accelerating machine learning for promoting responsible creation and deployment of generative AI for good.

The generative forgery and data poisoning methods leveraged in the DeepfakeArt Challenge benchmark dataset include:

- Inpainting

- Style Transfer

- Adversarial data poisoning

- Cutmix

Team Members:

- Hossein Aboutalebi

- Dayou Mao

- Carol Xu

- Alexander Wong

Partners:

- Vision and Image Processing Research Group, University of Waterloo

The DeepfakeArt Challenge benchmark dataset is available here

The DeepfakeArt Challenge paper is available here

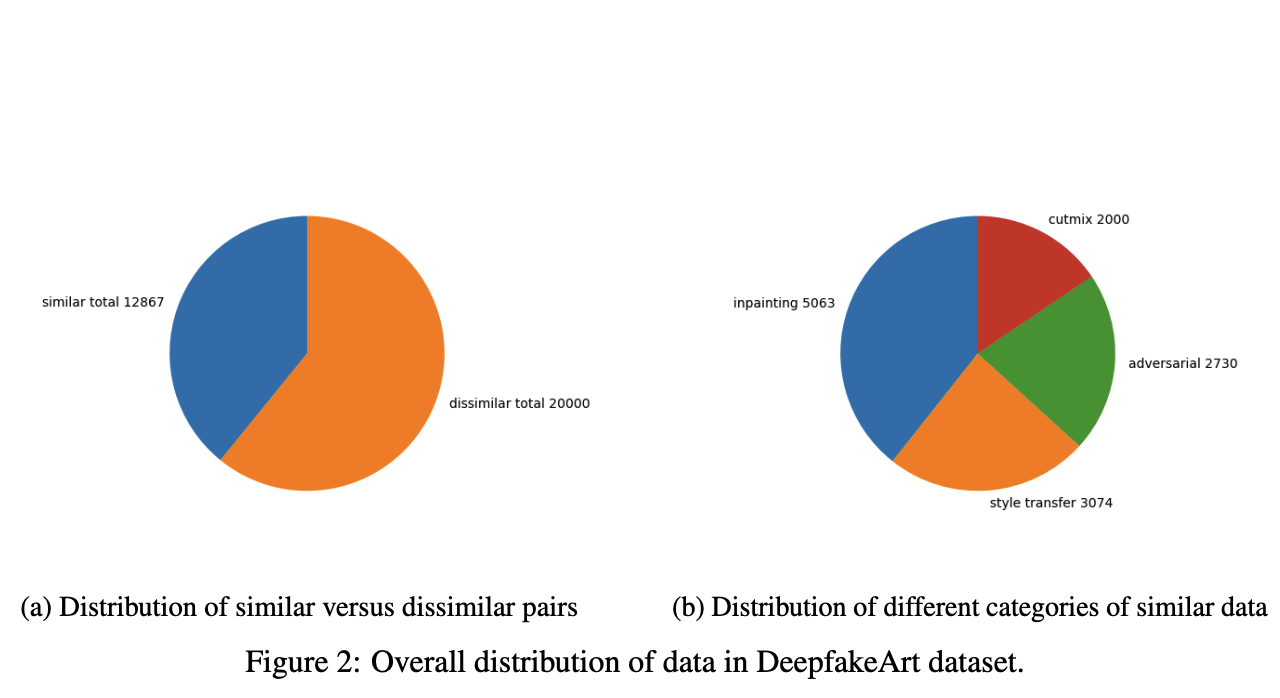

The DeepfakeArt Challenge benchmark dataset encompasses over 32,000 records, covering a wide spectrum of generative forgery and data poisoning techniques. Each record is a pair of images that is either a forgery / adversarially contaminated pair or not. Fig. 2 (a) depicts the overall distribution of data, differentiating between forgery / adversarially contaminated records and untainted ones. The distribution of data across the various generative forgery and data poisoning techniques is shown in Fig. 2 (b). Notably, as presented in Fig. 2 (a), the dataset contains almost twice as many dissimilar pairs as similar pairs; because roughly two-thirds of the dataset consists of dissimilar pairs, identifying similar pairs is substantially more challenging.

Generated forgery images via inpainting. The source dataset for the inpainting category is WikiArt (ref). Source images are sampled randomly from the dataset and used to generate forgery images. Each record in this category consists of three images:

- source image: The source image from which the forgery image is created

- inpainting image: The inpainting image generated by the Stable Diffusion 2 model (ref)

- masking image: A black-and-white image in which the white regions indicate the parts of the source image that are inpainted by Stable Diffusion 2 to generate the inpainting image

The prompt used for the generation of the inpainting image is: "generate a painting compatible with the rest of the image"

This category consists of more than 5,063 records. Between 40% and 60% of each original image is masked. One of the following masking schemes is applied at random:

- side masking: where the top side, bottom side, right side or left side of the source image is masked

- diagonal masking: where the upper right, upper left, lower right, or lower left diagonal side of the source image is masked

- random masking: where randomly selected parts of the source image are masked
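The three masking schemes above can be sketched roughly as follows. This is a minimal illustration, not the authors' generation code: the function names, the quantile-based diagonal cut, and the block-wise random masking with a hypothetical `block` size are all assumptions, chosen only so that each mask covers approximately 40% to 60% of the image, with white (255) marking the region to be inpainted.

```python
import numpy as np


def side_mask(h, w, frac, side):
    """Mask a band covering roughly `frac` of the image along one side."""
    mask = np.zeros((h, w), dtype=bool)
    if side == "top":
        mask[: int(round(h * float(frac))), :] = True
    elif side == "bottom":
        mask[h - int(round(h * float(frac))):, :] = True
    elif side == "left":
        mask[:, : int(round(w * float(frac)))] = True
    elif side == "right":
        mask[:, w - int(round(w * float(frac))):] = True
    else:
        raise ValueError(f"unknown side: {side}")
    return mask


def diagonal_mask(h, w, frac, corner):
    """Mask roughly `frac` of the image, cut diagonally from one corner."""
    if corner not in ("upper_left", "upper_right", "lower_left", "lower_right"):
        raise ValueError(f"unknown corner: {corner}")
    ys = (np.arange(h) + 0.5) / h
    xs = (np.arange(w) + 0.5) / w
    if corner.startswith("lower"):
        ys = ys[::-1]
    if corner.endswith("right"):
        xs = xs[::-1]
    # The score is smallest at the chosen corner and grows along the diagonal.
    score = ys[:, None] + xs[None, :]
    return score <= np.quantile(score, frac)


def random_mask(h, w, frac, rng, block=8):
    """Mask randomly chosen square blocks (assumed scheme, hypothetical block size)."""
    gh, gw = -(-h // block), -(-w // block)  # ceiling division
    n = gh * gw
    grid = np.zeros(n, dtype=bool)
    grid[rng.choice(n, size=int(round(float(frac) * n)), replace=False)] = True
    grid = grid.reshape(gh, gw)
    full = np.repeat(np.repeat(grid, block, axis=0), block, axis=1)
    return full[:h, :w]


def make_mask(h, w, rng):
    """Pick a scheme at random and return a uint8 mask (255 = inpaint here)."""
    frac = rng.uniform(0.4, 0.6)
    scheme = str(rng.choice(["side", "diagonal", "random"]))
    if scheme == "side":
        side = str(rng.choice(["top", "bottom", "left", "right"]))
        mask = side_mask(h, w, frac, side)
    elif scheme == "diagonal":
        corner = str(rng.choice(
            ["upper_left", "upper_right", "lower_left", "lower_right"]))
        mask = diagonal_mask(h, w, frac, corner)
    else:
        mask = random_mask(h, w, frac, rng)
    return mask.astype(np.uint8) * 255
```

A mask for a 512x512 source image could then be drawn with `make_mask(512, 512, np.random.default_rng(0))` and passed, together with the source image and the prompt, to an inpainting pipeline.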

The code for the data generation in this category can be found here

Generated forgery images via style transfer. The source dataset for the style transfer category is WikiArt (ref). Each record in this category consists of the following:

- source image: The source image from which the forgery image is created

- style transferred image: The style transferred image generated by ControlNet (ref)

- edge image: This edge image is created using Canny edge detection

- prompt: one of four prompts used for style transfer

We selected 200 images from each sub-directory of the WikiArt dataset, except for Action_painting and Analytical_Cubism, which contain only 98 and 110 images, respectively. Generation was guided by Canny edge maps and text prompts. We used four distinct prompts, one per target style:

- "a high-quality, detailed, realistic image"

- "a high-quality, detailed, cartoon style drawing"

- "a high-quality, detailed, oil painting"

- "a high-quality, detailed, pencil drawing"

Each prompt was used for a quarter of the images from each sub-directory.
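One way to give each prompt a quarter of the images in a sub-directory is a simple round-robin assignment, sketched below. The source does not say how images were matched to prompts, so the round-robin order and the `assign_prompts` helper are assumptions for illustration only.

```python
PROMPTS = [
    "a high-quality, detailed, realistic image",
    "a high-quality, detailed, cartoon style drawing",
    "a high-quality, detailed, oil painting",
    "a high-quality, detailed, pencil drawing",
]


def assign_prompts(image_names):
    """Map each image name to a prompt, cycling through the four prompts.

    Assumed scheme: image i gets prompt i mod 4, so each prompt covers
    a quarter of the images (up to rounding for sizes not divisible by 4).
    """
    return {
        name: PROMPTS[i % len(PROMPTS)]
        for i, name in enumerate(image_names)
    }
```

For a sub-directory of 200 images this gives 50 images per prompt; for the smaller sub-directories (98 and 110 images) the split is as even as the size allows.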

This category consists of more than 3,074 records.

The code for the data generation of this category can be found here

Images in this category are adversarially data poisoned images generated using the RobustBench (ref) and torchattacks (ref) libraries on the WikiArt dataset. Here we used a ResNet50 model trained on ImageNet against:

For each source image, we report the results of three attacks. The images are also center cropped to make detection harder.
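The center cropping step can be sketched as below. The crop size is not stated in the source, so `crop_h` and `crop_w` are hypothetical parameters, and the helper is an illustration rather than the code used to build the dataset.

```python
import numpy as np


def center_crop(image, crop_h, crop_w):
    """Return the central crop_h x crop_w window of an H x W (x C) array."""
    h, w = image.shape[:2]
    if crop_h > h or crop_w > w:
        raise ValueError("crop size must not exceed the image size")
    top = (h - crop_h) // 2
    left = (w - crop_w) // 2
    return image[top:top + crop_h, left:left + crop_w]
```

Applying the same crop to the source image and to the adversarial image keeps the two images of a record aligned.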

Each record in this category consists of two images:

- source image: The source image from which the adversarially data poisoned image is created

- adv_image: The adversarially data poisoned image

This category consists of more than 2,730 records.

The code for this category can be found (here).

This method was originally proposed in the paper "Diffusion Art or Digital Forgery? Investigating Data Replication in Diffusion Models" (ref).

In this section, images were generated using the "Cutmix" technique, which involves selecting a square patch of pixels from a source image and overlaying it onto a target image. Both the source and target images were randomly chosen from the WikiArt dataset. The patch size, source image extraction location, and target image overlay location were all determined randomly. This section contains 2,000 records.
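The Cutmix procedure described above can be sketched with NumPy as follows. The `cutmix` helper, its return value, and the uniform sampling of patch size and locations are assumptions made for illustration; the source only states that these quantities were chosen randomly.

```python
import numpy as np


def cutmix(source, target, rng):
    """Paste a random square patch of `source` onto a copy of `target`.

    Both inputs are H x W x C arrays with the same number of channels.
    Returns the mixed image and the sampled (side, sy, sx, ty, tx).
    """
    sh, sw = source.shape[:2]
    th, tw = target.shape[:2]
    # The patch must fit inside both images.
    side = int(rng.integers(1, min(sh, sw, th, tw) + 1))
    # Top-left corner of the patch in the source image.
    sy = int(rng.integers(0, sh - side + 1))
    sx = int(rng.integers(0, sw - side + 1))
    # Top-left corner of the overlay location in the target image.
    ty = int(rng.integers(0, th - side + 1))
    tx = int(rng.integers(0, tw - side + 1))
    mixed = target.copy()
    mixed[ty:ty + side, tx:tx + side] = source[sy:sy + side, sx:sx + side]
    return mixed, (side, sy, sx, ty, tx)
```

The returned tuple records where the patch came from and where it was placed, which is useful if a localization label is wanted alongside the image pair.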

The code for this section can be found (here).

Aboutalebi, Hossein, Daniel Mao, Carol Xu, and Alexander Wong. "DeepfakeArt Challenge: A Benchmark Dataset for Generative AI Art Forgery and Data Poisoning Detection." arXiv preprint arXiv:2306.01272 (2023)