General linear and non-linear optimization methods in Python.

pip install -> pip install --upgrade https://github.com/gusmaogabriels/optinpy/zipball/master

- Linear Programming

- Non-linear Optimization

- Numerical Differentiation

- Equivalent tableau (

.tableau) -> under development - Nodes objetcs (

.node) - Arcs objects (

.arc)

- Big-M (

.bigm)

**Example**

```python

### BIG-M ###

n3 = optinpy.graph() # Create an graph object

bs = zip(range(1,6),[10,4,0,-6,-8]) # Set node net production (+) or consumption (-)

for b in bs:

n3.add_node(*b) # Add node to graph and assign them a net production (+) or consumption (-)

connections = [[1,2,1],[1,3,8],[1,4,1],[2,3,2],[3,4,1],[3,5,4],[4,5,12],[5,2,7]] # Define arcs [from,to,cost]

for c in connections:

n3.add_connection(*c) # Add arcs (connections) to the graph

optinpy.bigm(n3,20) # MCF problem via Big-M

```

-

Dijkstra's (

.dijkstra) -

Ford-Bellman-Moore's (

.fmb)Example

### Example 1 ### n = optinpy.graph() # Create an graph object connections = [[1,2,2],[1,3,4],[1,4,5],[2,4,2],[3,4,1]] for c in connections: n.add_connection(*c) # Define arcs [from,to,cost] rot,ds = optinpy.sp.fmb(n,1,verbose=True) # Shortest-path via Ford-Belman-Moore rot,ds = optinpy.sp.dijkstra(n,1,verbose=True) # Shortest-path via Dijkstra ### Example 2 ### https://www.youtube.com/watch?v=obWXjtg0L64 n = optinpy.graph() # Create an graph object connections = [[1,2,10],[1,6,8],[2,4,2],[3,2,1],[4,3,-2],[5,2,-4],\ [5,4,-1],[6,5,1]] # Define arcs [from,to,cost] for c in connections: n.add_connection(*c) # Add arcs (connections) to the graph rot,ds = optinpy.sp.fmb(n,1,verbose=True) # Shortest-path via Ford-Belman-Moore ### Example 3 ### https://www.youtube.com/watch?v=gdmfOwyQlcI n = optinpy.graph() # Create an graph object connections = [['A','B',4],['A','E',7],['A','C',3],['B','C',6],['B','D',5],['C','D',11],\ ['C','E',8],['D','E',2],['D','F',2],['D','G',10],['E','G',5]] # Define arcs [from,to,cost] for c in connections: n.add_connection(*c) # Add arcs (connections) to the graph rot,ds = optinpy.sp.dijkstra(n,'A',verbose=True) # Shortest-path via Dijkstra

-

Prim's (

.prim) -

Kruskal's alike (see commit notes) (

.kruskal) -

Borůvka's (

.boruvka)Example

n = optinpy.graph() # Create an graph object connections = [['A','B',7],['A','D',5],['B','C',8],['B','D',9],['B','E',7],['C','E',5],\ ['D','E',15],['D','F',6],['E','F',8],['E','G',9],['F','G',11]] # Define arcs [from,to,cost] for c in connections: n.add_connection(*c) # Add arcs (connections) to the graph n2 = optinpy.graph() connections = [['A','B',13],['A','C',6],['B','C',7],['B','D',1],['C','E',8],['C','D',14],\ ['C','H',20],['D','E',9],['D','F',3],['E','F',2],['E','J',18],['G','H',15],\ ['G','I',5],['G','J',19],['G','K',10],['H','J',17],['I','K',11],['J','K',16],\ ['J','L',4],['K','L',12]] # Define arcs [from,to,cost] for c in connections: n2.add_connection(*c) # Add arcs (connections) to the graph # Assessing n2 print("Prims's") arcs1 = optinpy.mst.prim(n2,'A',verbose=True) # Minimum spanning-tree via Prim print("Kruskal's") arcs2 = optinpy.mst.kruskal(n2,verbose=True) # Minimum spanning-tree via Kruskal print("Boruvka's") arcs3 = optinpy.mst.boruvka(n2,verbose=True) # Minimum spanning-tree via Boruvka

BEING FIXED: AFTER ADDITION OF LB/UB DEFINITION FOR x, GLITCHES CAME ABOUT... IT SHOULD BE FIXED ASAP

-

Base Class Constructor (

S = optinpy.simplex(A,b,c,lb,ub))Define a linear optimization problem S:

S = optinpy.simplex(A,b,c,lb,ub)to find the minimum value of c×x (default) subject to A×x ≤ b, where A is a n×m matrix holding the constraints coefficients, b ∈ Rn and c ∈ Rm is the objective function cofficients, lb and ub are the lower and upper bound values in Rn for x, respectively. -

Primal Step (

S.primal())Given S a Simplex object,

S.primal()carries out a primal pivotting as of a primal feasible x, including in the basis set the basis of greatest improvement of the Objective Functionl, while still respecting the primal feasibilityA×x ≤ b, then determining which basis should leave the basis set. -

Dual Simplex (

S.dual())Given S a Simplex object,

S.dual()carries out a dual pivotting as of a dual feasible x, so that there is first defined which basis should leave the basis set and the which one should enter while keeping the dual feasibility.

Unidimensional minimizers that seek improving an objective function along a given descent direction, d, i.e. f(x) parametrized as f(α) = (x0+α×d). Backtracking and 2nd-3rd order Interpolation methods enforde that the ensuing value satisfies Armijo's condition: f(x0+α×d) < f(x0)+c∇f(x0)'d, where c is an arbitrary threshold, c ∈ (0,1). Unimodality and Golden-section are exploitation-based methods that successively probe and slice the domain determined by d, excluding, as of a quasi-convexity assumption on the α-domain, regions for which the function's value should be worse off.

Finds an α value that satisfies Armijo's condition with successive value decrement by a factor ρ.

Finds an α value that satisfies Armijo's condition by successively minimizing a 2nd- or 3rd-order f(α) polynomial interpolated from the latest f-α pairs.

Successive α-domain subsectioning by uniformly random probing.

Successive α-domain subsectioning following the golden-ratio.

A dictionary object that holds the method/algorithm's set-up for the fminunc, fmincon, fminnlcon functions as well as for linesearch and numerical differentiation algorithms.

Standard parameters

{'fminunc': # fminunc algorithm definition

{'method': 'newton', # 'gradient', 'newton', `'modified-newton', 'fletcher-reeves' or 'quasi-newton'

'params':

{'gradient':

{'max_iter':1e3},

'newton':

{'max_iter':1e3},

'modified-newton':

{'sigma' : 1, 'max_iter':1e3}, # sigma is the lower bound for the modified Hessian eigenvalue

'conjugate-gradient':

{'max_iter':1e3},

'fletcher-reeves':

{'max_iter':1e3},

'quasi-newton':

{'max_iter':1e3,'hessian_update':'davidon-fletcher-powell'} # hessian_update is either dfp or BFGS

}},\

'fmincon':

{'method':'newton','params':\

{'projected-gradient':

{'max_iter':1e3,'threshold':1e-6},\

}},\

'fminnlcon':

{'method':'penalty','params':\

{'log-barrier':

{'max_iter':1e3,'threshold':1e-6},\

'barrier':

{'max_iter':1e3,'threshold':1e-6},\

'penalty':

{'max_iter':1e3,'threshold':1e-6},\

}},

'jacobian': # jacobian algorithm definition

{'algorithm':'central','epsilon':sqrt(eps)}, # algorithm = 'central', 'forward', 'backward'; epsilon = perturbation

'hessian':

{'algorithm':'central','epsilon':sqrt(eps),'initial':None}, # algorithm = 'central', 'forward', 'backward'; epsilon = perturbation, 'inital' = initial hessian with (size of x)-by-(size of x) or None for identity.

'linesearch':

{'method':'backtracking', # 'backtracking', 'interp23, 'unimodality' or 'golden-section'

'params':

{'backtracking':

{'alpha':1,'rho':0.5,'c':1e-4,'max_iter':1e3}, # alpha = initial step scale; rho = step reduction factor; c = Armijo's parameter

'interp23':

{'alpha':1,'alpha_min':0.1,'rho':0.5,'c':1e-4,'max_iter':1e3}, # alpha_min = minimum ultimate alpha below which 3rd order interpolation ensues

'unimodality':

{'b':1,'threshold':1e-4,'max_iter':1e3}, # b = initial step scale in derivatives domain; threshold: variation threshold

'golden_ratio':

{'b':1,'threshold':1e-4,'max_iter':1e3}

}

}

} fminunc evokes the so far implemented unconstrained non-linear optimization algorithms given the parameters set.

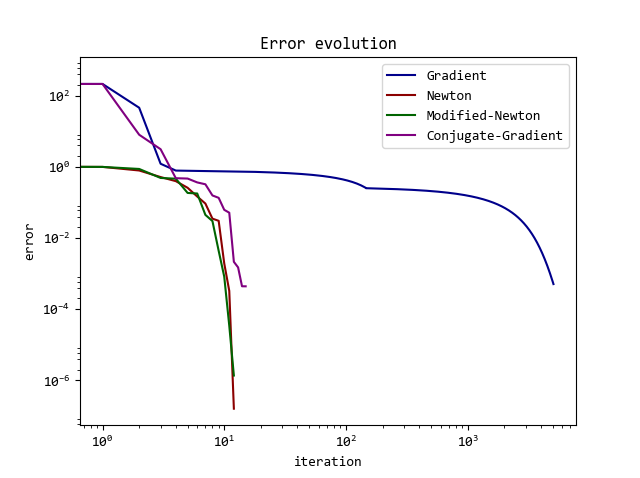

Gradient vs. Newton's Method, Modified-Newton (somewhere in between weighted by σ parameter), and Conjugate Gradient starting @ (2,2)*

Log-scale error evolution

* Line-search method: 'interp23' with alpha = 1, rho = 0.5, alpha_min = 0.1, c = 0.1 (Wolfe's condition); gradient and Hessian calculation from central algorithms and 10-6 perturbation epsilon. max_iter = 103

-

The gradient algorithm minimizes f(x) by first-order approximation: f(x) = f(x0) + ∇f(x0)'Δx, with descent direction, d, given by:

d = -∇f(x0).

The ultimate iteration step is given by the minimization of f(α) = f(x0) - α∇f(x0) with a lineserach substep.

-

Newton's method minimizes f(x) as of a second order approximation of the function f(x) = f(x0) + ∇f(x0)'Δx + Δx'H(x0)Δx, where H(x0) is the Hessian matrix. The descent direction is given by:

d = -H(x0)-1*∇f(x0).

-

The modified-Newton's algorithm handles the inversion of H(x0) by enforcing positive eigenvalues so that Cholesky's decomposition can be used to solve H(x0)*d = -∇f(x0) as a system of lower-triangular matrices:

H(x) = L*L'

L*y = -∇f(x0)

L'*d = y.

-

The conjugate gradient algorithm builds a set of Hessian-orthogonal (Q-orthogonal) directions as of Gram-Schmidt Q-orthogonalization so that descent directions, d, are Q-orthogonal and preceding gradients are orthogonal: dk+1 = pk+1 - ∑ki=0(pk+1'Qdi/di'Q**di)di, where p is a linear independent set. Replacing pi by -∇f(xi), leads us to:

dk+1 = -∇f(xk+1) + ∇f(xk+1)'H(xk+1)dk/dk'H(xk+1)dk

-

Quasi-Newton methods are in between gradient and Newton's method, with successive approximations of the Hessian and its inverse matrix.

for the following updates, consider:

qk = ∇f(xk+1) - ∇f(xk)

pk = αkdk

-

Rank-2 correction of the inverse Hessian as of a sum of 2 rank-1 matrices.

H-1k+1 = H-1k + pk(pk)'/(pk)'qk - H-1kqk(qk)'H-1k/(qk)'H-1kqk

-

Also a Rank-2 correction of the inverse Hessian as of a sum of 2 rank-1 matrices.

H-1k+1 = H-1k + (1+(qk)'H-1kqk/(qk)'pk)pk(pk)'/(pk)'qk - (pk(qk)'H-1k+H-1kqk(pk)')/(qk)'pk

fmincon extends fminunc functionality for cases in which linear constraints are in play.

Projected-gradient algorithm lifting off from the axis center @ (0,0) to a feasible starting point by Simplex with -∇f(x0) cost

Constraints:

2x1 - 2 ≤ -1 (black)

5x1 + 3x2 ≤ 0 (red)

2x1 + x2 ≤ 3 (blue)

x1 - 2x2 ≤ 2 (green)

* Line-search method: 'interp23' with alpha = 1, rho = 0.6, alpha_min = 0.1, c = 10-3 (Wolfe's condition); gradient and Hessian calculation from central algorithms and eps0.5 perturbation epsilon, where eps stands for the smallest float64 number suchs that 1.0 + eps != 0. max_iter = 103

-

The projected-gradient algorithm minimizes f(x) by first-order approximation: f(x) = f(x0) + ∇f(x0)'Δx, with descent direction, d under inequalities constraints A<=b and equalities constraints Aeq=beq given by:

d = -P∇f(xk).

where P is the orthogonal projection matrix on the nullspace of the subspace defined by the active constraints.

P is given by I - Ak'(AkAk')-1Ak and I is the identity matrix.

Ak is n-by-m consists of the union of the set of active inequality constraints and the equality constrints set.

The ultimate iteration step is given by the minimization of f(α) = f(x0) - αd(xk) with a lineserach substep.

If d is less than a given arbitrary tolerance, the Lagrangian multipliers are computed to ckeck whether the Karush-Kuhn-Tucker (KKT) conditions are satisfied. If any inequality constraint lagragian multiplier is less than zero, the constraint is relaxed, the respective line in Ak is removed and a the iterative process proceeds with Ak+1. Otherwise the solution satisfies the KKT conditions and the point is said to be optimal.

The lagrangian multipliers are given by -(AkAk')-1Ak∇f(xk).

An initial feasible point is attained by minimizing a Simplex from an initial arbitrary with cost function as the gradient at that point. As the Simplex package is being reestructured, for now, the Scipy package linprog is being used to solve the Simplex.

Example: f(x) = ||x||²-2x1-3x4, begining @ (0,0,0,0) to a feasible starting point by Simplex with -∇f(x0) cost, under constraints:

2x1 + x2 + x3 + 4x4 = 7

x1 + x2 + 2x3 + x4 = 6The initial feasible point, is found by minimizing -∇f @ (0,0,0,0) = -(-2, 34/3, 0, -7/3) under the constraints, which leads to the initial feasible point x0 = (0, 17/3, 0, 1/3).

Since the are no inequality constraints, the set of active constraints reamins the same of the iterative process, and so does the orthogonal projection matrix onto the active set nullspace, P.

By using the 2nd-3rd order interpolation method for the linesearch, the optimal solution, x* = (1.123, 0.6507, 1.829, 0.5685) is attained with only one iteration, f(x*) = 1.401.* Line-search method: 'interp23' with alpha = 1, rho = 0.6, alpha_min = 0.1, c = 10-3 (Wolfe's condition); gradient and Hessian calculation from central algorithms and eps0.5 perturbation epsilon, where eps stands for the smallest float64 number suchs that 1.0 + eps != 0. max_iter = 103

fminnlcon makes use of fminunc routines within linearly and/or non-linearly constrained optimization domains. In essence, it all boils down to modifying the objective function to include the constraints weighted by a increasing or decreasing factor so that, when convergence is achieved, the constraint parcel of the objective function tends to zero. The increase in the c parameter, which weights the constraints, is given by a beta factor.

Since such approach relies on succesive unconstrained optimization and the solution, depending on the algorithm, must always lie within or outside the feasible set, the increase/decrease (beta factor) in the constraints weight should be done in a way that on one hand it is not too litle, so that the iterative process would take forever, and, on the other hand, it cannot be too large for then a succeeding solution might hop to the other side of the feasibility frontier, when constraint weighting function become numerically inconsistent.

The unconstrained optimization method that is meployed within inner optimization steps is the one defined in .nonlinear.params. The converge phase will depend on the suitability of the unconstrained optimization method both to the plain objective function, f(x), and the its combination with the weighted constraint parcel, P(x) or B(x).

Newton's Method might lead to singular Hessian matrices close to the feasible set boundaries; therefore, it is strongly advised for one, if willing to use a second-order unconstrained optimization method, to opt for Modified-Newton's instead.

-

The penalty algorithm starts from from an initially infeasible point with a function of the following shape:

f(x) + c×P(x), where c is a scalar and P(x) is a function that maps from ℝm (m restrictions) to ℝ, such that,

- P(x) ≥ 0 for x ∈ ℝn

- P(x) = 0 for x ∈ S, where S is the feasible set

- As c → inf, P(x) → 0

As default, P(x) = ∑ max({0,gi(x)}) for i = 1, 2, 3, ... p, where gi is the ith constraint.

-

Barrier algorithms must start from an initialy feasible point with and, generally, the weighted constrain mapping function leads to the following minimization form:

f(x) + (1/c)×B(x), where c is a scalar and B(x) is a function that maps from ℝm (m restrictions) to ℝ, such that,

- B(x) ≥ 0 for x ∈ ℝn

- B(x) = 0 for x ∈ S, where S is the feasible set

- As 1/c → 0, B(x) → inf

As standard, B(x) = -∑(1/gi(x)) for i = 1, 2, 3, ... p, where gi is the ith constraint.

One must beware that the unconstrained method along with the line-search algorithm to be used with the barrier method must be carefully chosen in order not to overshoot to the infeasible region. This will probably happen for the gradient algorithm in case the calculated or estimated gradients are not normalized within each iteration.

-

Following the general shape of barrier algorithms, the log-barrier algorithm must as well start from an initialy feasible point. The only difference is in the shape of B.

As default, B(x) = -∑(ln(-gi(x))) for i = 1, 2, 3, ... p, where gi is the ith constraint.

Example: f(x) = (x1-2)² + 2(x2-4)² + 3(x3-4)², under the constraint: ||x||² ≤ 1.

Optimal point @ x* = (0.1547,0.5744,0.8038), f(x*) = 57.5212

Barrier and Log-barrier methods beginning from the feasible point x0 = (0.1,0.1,0.1) and, the Penalty method from the infeasible point (1.4,1.4,1.4), using the Modified-Newton method with minimum eigenvalue set at 1.0 and 'backtracking' linesearch method, with alpha = 1, rho = 0.6, c = 10-4 (Wolfe's condition). The initial value for the nonlineraly-constrained method c parameters: c = 10-3 (constraint weight) and beta = 1.1 (weight increment, i.e. 10% per iteration) and threshold of 10-4.

As regards the graphs below, the L2-norm of residual evolution should no be compared between barrier and penalty methods for the starting point are must be different between methods (feasible and infeasible, respectively). In addition, variations in the defined unconstrained optimization method may lead to different optimization paths and, hence, to variations in the L2-norm of residual shape.

L2-norm of residuals as function of the c parameter

L2-norm of residuals (left) and P(x) or B(x) (right) vs. iteration

Numerical routines to estimate the Jacobian and Hessian matrices of a function at a given point, x0, as of small perturbations along it. As default, the perturbation ε is taken as the square root of the machine precision for a floating point type, eps, such that: 1.0 + eps != 0, and the algorithm for both Jacobian and Hessian is the central algorithm.

-

Typically, the first derivatives of a function or array of functions may be estimated by three different first-order approximation formulas/algorithms:

-

Central:

∇xjfi = (fi(xj,0+ε)-fi(xj,0-ε))/2ε

-

Forward:

∇xjfi = (fi(xj,0+ε)-fi(xj,0))/ε

-

Backward:

∇xjfi = (fi(xj,0)-fi(xj,0-ε))/ε

-

-

The following alogirthms are used for the estimation of the second derivatives for a function from a reference point x0:

-

Central:

On-diagonal terms: 𝛿²f/𝛿xj² = (-f(xj,0+2ε)+16f(xj,0+ε)-30f(xj,0)+16f(xj,0-ε)-f(xj,0-2ε))/12ε²

Off-diagonal terms: 𝛿²f/𝛿xj𝛿xk = (f(xj,0+ε,xk,0+ε)-f(xj,0+ε,xk,0-ε)-f(xj,0-ε,xk,0+ε)+f(xj,0-ε,xk,0-2))/4ε²

-

Forward:

On-diagonal terms: 𝛿²f/𝛿xj² = (f(xj,0+2ε)-2f(xj,0+ε)+f(xj,0))/ε²

Off-diagonal terms: 𝛿²f/𝛿xj𝛿xk = (f(xj,0+ε,xk,0+ε)-f(xj,0+ε,xk,0)-f(xj,0,xk,0+ε)+f(xj,0,xk,0))/ε²

-

Backward:

On-diagonal terms: 𝛿²f/𝛿xj² = (f(xj,0-2ε)-2f(xj,0-ε)+f(xj,0))/ε²

Off-diagonal terms: 𝛿²f/𝛿xj𝛿xk = (f(xj,0-ε,xk,0-ε)-f(xj,0-ε,xk,0)-f(xj,0,xk,0-ε)+f(xj,0,xk,0))/ε²

-

The University of Campinas, UNICAMP

- IA897 - Introdução à Otimização Matemática - Introduction to Optimization

- IA881 - Otimização Linear - Linear Programming

- IA543 - Otimização Não Linear - Nonlinear Optimization (Prof. Takaaki)

Copyright © 2016 - Gabriel Sabença Gusmão