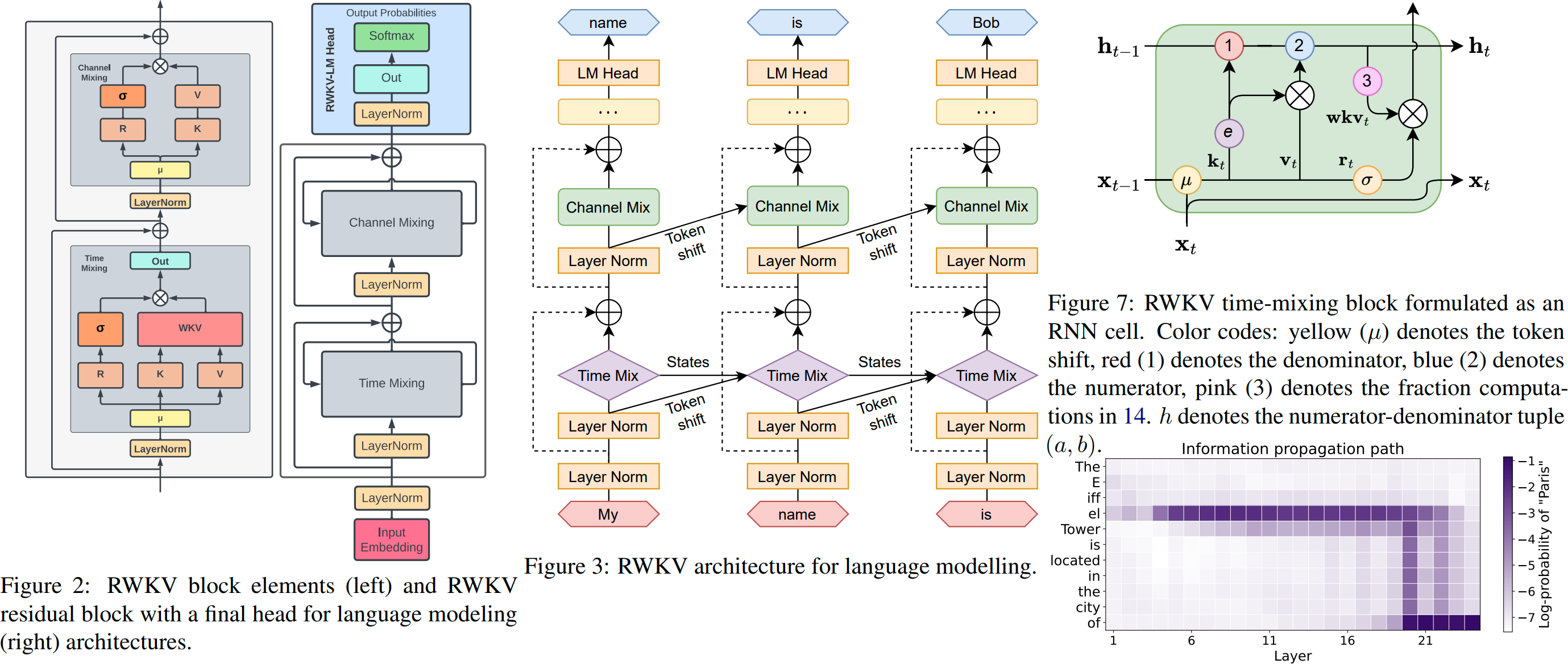

RWKV (pronounced "RwaKuv") is an RNN with GPT-level LLM performance that can also be trained directly like a GPT transformer (parallelizable).

It combines the best of RNNs and transformers: great performance, fast inference, fast training, low VRAM use, "infinite" ctxlen, and free sentence embeddings. Moreover, it is 100% attention-free.

Good

- Lower resource usage (VRAM, CPU, GPU, etc.) during inference and training.

- 10x to 100x lower compute requirements compared to transformers with large context sizes.

- Scales linearly to any context length (transformers scale quadratically).

- Performs just as well in terms of answer quality and capability.

- RWKV models are generally better trained in other languages (e.g. Chinese, Japanese, etc.) than most existing OSS models.
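To make the linear versus quadratic scaling point concrete, here is a toy cost model in Python. It only counts token-level operations: one state update per token for a recurrent model, and one token pair per attended position for causal attention. The function names are made up for illustration, and the counts ignore per-step constants, so the ratios show the growth trend rather than predict real-world speedups.

```python
def recurrent_steps(n_tokens: int) -> int:
    """One state update per token, so the count grows linearly."""
    return n_tokens


def causal_attention_pairs(n_tokens: int) -> int:
    """Each token attends to itself and every earlier token: n(n+1)/2 pairs."""
    return n_tokens * (n_tokens + 1) // 2


for n in (1_000, 10_000, 100_000):
    ratio = causal_attention_pairs(n) / recurrent_steps(n)
    print(f"{n:>7} tokens: attention counts ~{ratio:,.0f}x more token-level operations")
```

Doubling the context length doubles the recurrent count but roughly quadruples the attention count, which is why the gap widens as contexts get longer.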

Bad

- Is sensitive to prompt formatting; you may need to change how you prompt the model.

- Is weaker at tasks that require lookback, so order your prompt accordingly. For example, instead of saying "For the document above do X", which requires a lookback, say "For the document below do X".
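The prompt-ordering advice above can be sketched as a small helper that always places the instruction before the document, so the model knows the task before it reads the text. `build_prompt` and the `Document:` / `Response:` labels are assumptions made for this example, not an official RWKV prompt template; since the model is sensitive to formatting, adapt the layout to whatever the model you use was tuned on.

```python
def build_prompt(instruction: str, document: str) -> str:
    """Put the instruction first so no lookback over the document is needed."""
    return (
        f"{instruction.strip()}\n\n"
        "Document:\n"
        f"{document.strip()}\n\n"
        "Response:"
    )


prompt = build_prompt(
    "For the document below, list the three main points.",
    "RWKV is an RNN that can be trained in parallel like a transformer.",
)
print(prompt)
```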

- RWKV - The model architecture itself, code found at https://github.com/BlinkDL/RWKV-LM

- RWKV World - New base model that is being trained on a larger, more diverse mix of datasets, which includes samples from over 100 languages. Partially instruction trained.

- Raven - Official finetuned version of the base model, with instruction training

- Base model / Pile Plus Model - The RWKV base model is currently trained on "The Pile" with an additional mix of other datasets. This model is not instruction trained.

- For the majority of use cases, you should be using the pretrained, finetuned 7B World model.

- On a case-by-case basis, you may find the older (smaller dataset) but larger Raven model to be better in certain specific benchmarks. When the 14B World model is ready, it is expected to replace the Raven model in all use cases.

- If you want to finetune a model for a very specific use case, without any existing instruction tuning, you may find the Pile model more useful (rare; in most use cases it's better to finetune the World or Raven model).