- Installing `kafka` in Ubuntu 18.04 x64

$ tar xvzf kafka_2.12-2.3.0.tgz

$ mv ~/Downloads/kafka_2.12-2.3.0 ~/Documents/_applications/

- Add these settings to `.bashrc` for personal preference

export APPLICATIONS_HOME="${HOME}/Documents/_applications"

export KAFKA_HOME="${APPLICATIONS_HOME}/kafka_2.12-2.3.0"

export KAFKA_BIN="${KAFKA_HOME}/bin"

export KAFKA_CONFIG="${KAFKA_HOME}/config"

alias kafka-restart='sudo systemctl restart confluentKafka'

alias kafka-start='sudo systemctl start confluentKafka'

alias kafka-stop='sudo systemctl stop confluentKafka'

alias kafka-status='sudo systemctl status confluentKafka'

alias kafka-enable='sudo systemctl enable confluentKafka'

alias kafka-disable='sudo systemctl disable confluentKafka'

- Configure to start `kafka` automatically with the server
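The `kafka.service` unit file copied in this step is not shown in these notes. As a rough sketch only, a helper like the one below could generate one. The function name `write_kafka_unit`, the unit contents, and the dependency on a unit named `zookeeper.service` are all assumptions for illustration, not the file actually used here. Note also that the aliases above target a unit named `confluentKafka`, so the file name would need to match whichever unit you manage.

```shell
#!/bin/sh
# Sketch only: the unit contents are an assumption, not the file used in this guide.
# Usage: write_kafka_unit KAFKA_HOME OUTPUT_FILE
write_kafka_unit() {
  kafka_home=$1   # absolute path to the Kafka install directory
  # Unquoted heredoc so ${kafka_home} is expanded into the unit file.
  cat > "$2" <<EOF
[Unit]
Description=Apache Kafka broker
Requires=zookeeper.service
After=zookeeper.service

[Service]
Type=simple
ExecStart=${kafka_home}/bin/kafka-server-start.sh ${kafka_home}/config/server.properties
ExecStop=${kafka_home}/bin/kafka-server-stop.sh
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}

# Example (KAFKA_HOME as exported in .bashrc):
#   write_kafka_unit "$KAFKA_HOME" kafka.service
```

systemd expects absolute paths in `ExecStart`, which is why the helper takes the install directory as an argument rather than relying on a shell variable at service start.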

$ sudo cp kafka.service /etc/systemd/system

$ sudo cp zookeeper.service /etc/systemd/system

- Update the `systemd` service after copying `kafka`/`zookeeper` services

$ sudo systemctl daemon-reload

- Enable to auto-start `kafka` service

$ kafka-enable

- Start/Re-Start `kafka` service

$ kafka-start

$ kafka-restart

- Check `kafka` status (OR `sudo systemctl status kafka`)

$ kafka-status

- Setting up Kafka management for kafka cluster
- kafka-manager-docker
- How to install Kafka Manager for Managing kafka cluster
- CMAK http://localhost:9990, instead of default port 9000

$ $HOME/jdk14.sh

$ $HOME/Documents/_applications/cmak-3.0.0.5/bin/cmak -Dhttp.port=9990 -Dconfig.file=$HOME/Documents/_applications/cmak-3.0.0.5/conf/application.conf -Dcmak.zkhosts="localhost:2181"

- change the following in conf/application.conf

kafka-manager.zkhosts="localhost:2181"

basicAuthentication.username="admin"

basicAuthentication.password="password"

- Adding new cluster in Kafka manager

:2181,:2181,:2181

- Trifecta CLI tool that enables users to quickly and easily inspect, publish and verify messages in Kafka, Storm and Zookeeper

- Trifecta http://localhost:9980, instead of default port 8888

$ . "$HOME/jdk8.sh"

$ java -jar "$HOME/Documents/_applications/trifecta-bundle-0.18.13.bin.jar" --http-start -Dtrifecta.web.port=9980

$ docker run -d --rm -p 9000:9000 \
    -e KAFKA_BROKERCONNECT=localhost:9092 \
    -e JVM_OPTS="-Xms32M -Xmx64M" \
    -e SERVER_SERVLET_CONTEXTPATH="/" \
    obsidiandynamics/kafdrop:latest

- fast-data-dev

- Docker Hub
- This includes `zookeeper` and `kafka`
- LensesIO Kafka Development Environment http://localhost:3030
- OR Run individual docker images (see next sections)

$ docker pull landoop/fast-data-dev

$ docker run --rm --net=host lensesio/fast-data-dev

- schema-registry-ui
- Docker Hub
- Running the docker image with overridden port 8710 e.g. LensesIO schemaRegistryUI in http://localhost:8710
- Resolving lensesIO `schema-registry-ui` common issues

$ docker pull landoop/schema-registry-ui

alias confluentZookeeperStart='cd ${CONFLUENT_HOME}; bin/zookeeper-server-start etc/kafka/zookeeper.properties'

alias confluentKafkaStart='cd ${CONFLUENT_HOME}; bin/kafka-server-start etc/kafka/server.properties'

alias confluentSchemaStart='cd ${CONFLUENT_HOME}; bin/schema-registry-start etc/schema-registry/schema-registry.properties'

alias dockerLensesIOSchemaUI='docker run --rm -it -p 127.0.0.1:8710:8000 -e "SCHEMAREGISTRY_URL=http://localhost:8081" landoop/schema-registry-ui'

$ confluentZookeeperStart

$ confluentKafkaStart

$ confluentSchemaStart

$ dockerLensesIOSchemaUI

- kafka-topics-ui
- Docker Hub
- Running the docker image with overridden port 8720 e.g. LensesIO kafkaTopicsUI in http://localhost:8720
- Resolving lensesIO `kafka-topics-ui` common issues

$ docker pull landoop/kafka-topics-ui

alias confluentZookeeperStart='cd ${CONFLUENT_HOME}; bin/zookeeper-server-start etc/kafka/zookeeper.properties'

alias confluentKafkaStart='cd ${CONFLUENT_HOME}; bin/kafka-server-start etc/kafka/server.properties'

alias confluentRestStart='cd ${CONFLUENT_HOME}; bin/kafka-rest-start etc/kafka-rest/kafka-rest.properties'

alias dockerLensesIOTopicsUI='docker run --rm -it -p 127.0.0.1:8720:8000 -e "KAFKA_REST_PROXY_URL=http://localhost:8082" -e "PROXY=false" landoop/kafka-topics-ui'

$ confluentZookeeperStart

$ confluentKafkaStart

$ confluentRestStart

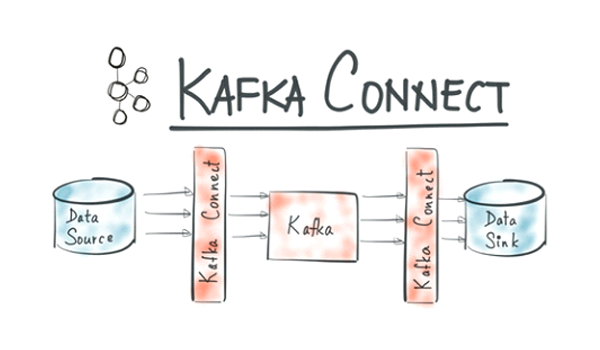

$ dockerLensesIOTopicsUI

- kafka-connect-ui
- Docker Hub
- Running the docker image with overridden port 8730 e.g. LensesIO kafkaConnectUI in http://localhost:8730
- Resolving lensesIO `kafka-connect-ui` common issues

$ docker pull landoop/kafka-connect-ui

alias confluentKafkaStart='cd ${CONFLUENT_HOME}; bin/kafka-server-start etc/kafka/server.properties'

alias confluentZookeeperStart='cd ${CONFLUENT_HOME}; bin/zookeeper-server-start etc/kafka/zookeeper.properties'

alias confluentConnectStart='cd ${CONFLUENT_HOME}; bin/connect-distributed etc/kafka/connect-distributed.properties'

alias dockerLensesIOConnectUI='docker run --rm -it -p 127.0.0.1:8730:8000 -e "CONNECT_URL=http://localhost:8083" -e "PROXY=false" landoop/kafka-connect-ui'

$ confluentZookeeperStart

$ confluentKafkaStart

$ confluentConnectStart

$ dockerLensesIOConnectUI

# create topics

$ kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 3 --topic sample-input-topic

$ kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 3 --topic sample-output-topic

# check/describe/delete topics

$ kafka-topics.sh --list --zookeeper localhost:2181

$ kafka-topics.sh --list --zookeeper localhost:2181 --topic sample-input-topic

$ kafka-topics.sh --describe --zookeeper localhost:2181 --topic sample-input-topic

$ kafka-topics.sh --delete --zookeeper localhost:2181 --topic sample-input-topic
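Re-running the `--create` commands above fails once the topics exist. A minimal sketch of a guard that lists the topics first and only creates a missing one is shown below. The function names `topic_exists` and `ensure_topic` and the `KAFKA_TOPICS_CMD` / `ZOOKEEPER` variables are hypothetical helpers introduced here; only the `kafka-topics.sh` flags already used above are assumed.

```shell
#!/bin/sh
# Sketch only: helper names and variables are illustrative assumptions.
KAFKA_TOPICS_CMD="${KAFKA_TOPICS_CMD:-kafka-topics.sh}"
ZOOKEEPER="${ZOOKEEPER:-localhost:2181}"

# Succeeds if the topic name appears as a whole line in the --list output.
topic_exists() {
  "$KAFKA_TOPICS_CMD" --list --zookeeper "$ZOOKEEPER" | grep -Fx -- "$1" >/dev/null
}

# ensure_topic TOPIC [PARTITIONS]
# Creates the topic with replication factor 1 unless it is already listed.
ensure_topic() {
  if topic_exists "$1"; then
    echo "topic $1 already exists"
  else
    "$KAFKA_TOPICS_CMD" --create --zookeeper "$ZOOKEEPER" \
      --replication-factor 1 --partitions "${2:-3}" --topic "$1"
  fi
}

# Example:
#   ensure_topic sample-input-topic 3
#   ensure_topic sample-output-topic 3
```

Keeping the command name in a variable makes it easy to point the same helper at the Confluent `kafka-topics` script used later in these notes.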

# start kafka producer to manually enter some data

$ kafka-console-producer.sh --broker-list localhost:9092 --topic sample-input-topic

# enter

> kafka hello world

> kafka sample data processing

> kafka the quick brown fox jumps over the lazy dog

# exit

# start kafka producer to pipe in some file

$ kafka-console-producer.sh --broker-list localhost:9092 --topic sample-input-topic < sampleTextFile.txt

# start kafka consumer

$ kafka-console-consumer.sh --topic sample-input-topic --bootstrap-server localhost:9092 --from-beginning

$ kafka-console-consumer.sh --bootstrap-server localhost:9092 \
    --topic sample-input-topic \
    --from-beginning \
    --formatter kafka.tools.DefaultMessageFormatter \
    --property print.key=true \
    --property print.value=true \
    --property key.deserializer=org.apache.kafka.common.serialization.StringDeserializer \
    --property value.deserializer=org.apache.kafka.common.serialization.IntegerDeserializer

- Download

- Documentation

- Configuration Reference

- Quick Start for Apache Kafka using Confluent Platform (Local)

- CLI Command Reference

- Confluent `local` commands for single node instance locally
- Confluent http://localhost:9021 Control Center
- RabbitMQ (Commercial License)
- Confluent Platform Logs are in `/tmp/confluent.0YtCGnLS/`
- Control Center http://localhost:9021
- LensesIO schemaRegistryUI http://localhost:8710
- LensesIO kafkaTopicsUI http://localhost:8720
- LensesIO kafkaConnectUI http://localhost:8730

$ confluentControlCenterStart

$ dockerLensesIOSchemaUI

$ dockerLensesIOTopicsUI

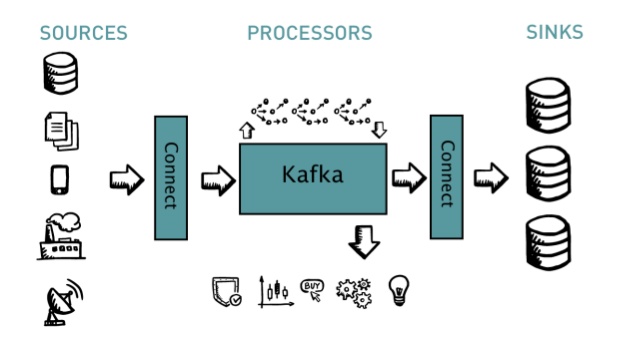

$ dockerLensesIOConnectUI

- How to connect Kafka to MongoDB Source
- Topic name must be in the form `logicalName.databaseName.collectionName`
- Kafka Debezium Connector for MongoDB
- So in essence, create the topic name with `mongoConn.sampleGioDB.books`
  - mongodb.name = mongoConn (see connect-mongodb-source.properties)
  - db name is `sampleGioDB` (in Mongo)
  - collection name is `books` (in Mongo)
- MongoDB Source Connector in http://localhost:8183/connectors
- using `bin/connect-standalone` script with overridden configs

- using

$ kafka-topics --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic mongoConn.sampleGioDB.books

$ kafka-topics --list --zookeeper localhost:2181

$ cd $CONFLUENT_HOME

$ bin/connect-standalone etc/schema-registry/connect-avro-standalone-mongodb-source.properties etc/kafka/connect-mongodb-source.properties ### This worked, so use this

$ bin/connect-distributed etc/schema-registry/connect-avro-distributed-mongodb-source.properties etc/kafka/connect-mongodb-source.properties ### Doesn't work yet. Still need to investigate why.

$ kafka-console-consumer --bootstrap-server localhost:9092 --topic mongoConn.sampleGioDB.books --from-beginning

- Confluent Supported Kafka Connectors

- Elasticsearch Service Sink Connector for Confluent Platform

- Kafka Connect and Elasticsearch

- Kafka Connect in Action: Elasticsearch

- Kafka Connect Elasticsearch Connector in Action

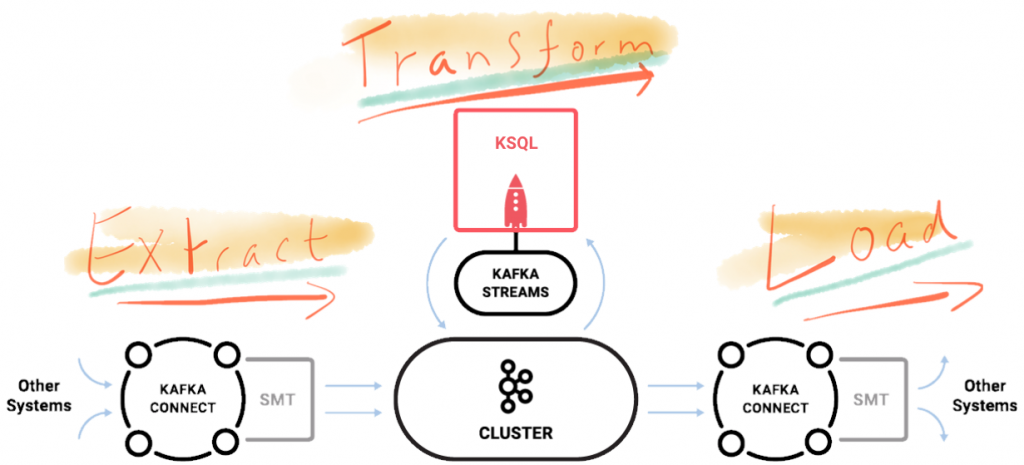

- Building Streaming Data Pipelines with Elasticsearch, Apache Kafka, and KSQL

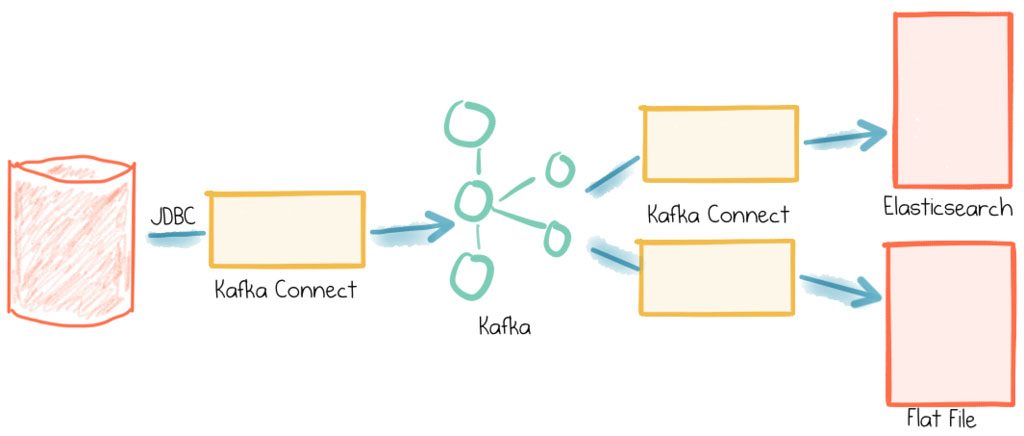

- Simplest Useful Kafka Connect Data Pipeline

- Elasticsearch Sink Connector in http://localhost:8283/connectors
- using `bin/connect-standalone` script with overridden configs
- using
- Elasticsearch results after running connector standalone elasticsearch sink. Note, `kafka topic=mongoConn.sampleGioDB.books` is converted to lowercase such as `elasticsearch index=mongoconn.samplegiodb.books`
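The two naming rules in these notes (topic name = `logicalName.databaseName.collectionName`, and the Elasticsearch index being the lowercased topic name) can be sketched as small helpers. The function names `mongo_topic_name` and `topic_to_es_index` are hypothetical, and the lowercase mapping simply mirrors what was observed above; the connector's exact behaviour may vary with its version and configuration.

```shell
#!/bin/sh
# Sketch only: helper names are illustrative assumptions.

# mongo_topic_name LOGICAL_NAME DATABASE COLLECTION
# Joins the three parts with dots, e.g. mongoConn.sampleGioDB.books
mongo_topic_name() {
  printf '%s.%s.%s\n' "$1" "$2" "$3"
}

# topic_to_es_index TOPIC
# Lowercases the topic name, matching the index name observed in Elasticsearch.
topic_to_es_index() {
  printf '%s\n' "$1" | tr '[:upper:]' '[:lower:]'
}

# Example:
#   topic=$(mongo_topic_name mongoConn sampleGioDB books)
#   topic_to_es_index "$topic"
```

This is handy when checking Elasticsearch by hand, since querying the index with the mixed-case topic name will not find it.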

$ kafka-topics --list --zookeeper localhost:2181

$ cd $CONFLUENT_HOME

$ bin/connect-standalone etc/schema-registry/connect-avro-standalone-elasticsearch-sink.properties etc/kafka-connect-elasticsearch/connect-elasticsearch-sink.properties ### This worked, so use this

$ bin/connect-distributed etc/schema-registry/connect-avro-distributed-elasticsearch-sink.properties etc/kafka-connect-elasticsearch/connect-elasticsearch-sink.properties ### Doesn't work yet. Still need to investigate why.

$ kafka-console-consumer --bootstrap-server localhost:9092 --topic mongoConn.sampleGioDB.books --from-beginning

- Kafka connect plugin install

- How to install connector plugins in Kafka Connect

- Install the needed Kafka Connectors from Confluent Kafka Connectors Hub. e.g.

$ confluent-hub install confluentinc/kafka-connect-elasticsearch:5.5.1

$ confluent-hub install debezium/debezium-connector-mongodb:1.2.1

$ confluent-hub install debezium/debezium-connector-mysql:1.2.1

$ confluent-hub install mongodb/kafka-connect-mongodb:1.2.0

$ confluent-hub install jcustenborder/kafka-connect-redis:0.0.2.11

$ confluent-hub install hpgrahsl/kafka-connect-mongodb:1.4.0

- Create `plugins` directory under `$CONFLUENT_HOME`, then create `symlink` from `lib` directory where the `.jar` files are

$ cd $CONFLUENT_HOME

$ mkdir plugins

$ cd plugins

$ ln -s ../share/confluent-hub-components/confluentinc-kafka-connect-elasticsearch/lib elasticsearch

$ ln -s ../share/confluent-hub-components/debezium-debezium-connector-mongodb/lib debezium-mongodb

$ ln -s ../share/confluent-hub-components/debezium-debezium-connector-mysql/lib debezium-mysql

$ ln -s ../share/confluent-hub-components/mongodb-kafka-connect-mongodb/lib mongodb

$ ln -s ../share/confluent-hub-components/jcustenborder-kafka-connect-redis/lib redis

- Add the `plugins` path in the following files
- $CONFLUENT_HOME/etc/kafka/connect-distributed.properties

- $CONFLUENT_HOME/etc/kafka/connect-standalone.properties

- $CONFLUENT_HOME/etc/schema-registry/connect-avro-distributed.properties

- $CONFLUENT_HOME/etc/schema-registry/connect-avro-standalone.properties

plugin.path=$HOME/Documents/_applications/confluent-5.5.1/share/java,$HOME/Documents/_applications/confluent-5.5.1/share/confluent-hub-components,$HOME/Documents/_applications/confluent-5.5.1/plugins

- The above `plugins` `.jar` files should work, but in case they did not get added to the classpath, manually add the `$CLASSPATH` to `.bashrc`, e.g.

export CLASSPATH="$HOME/Documents/_applications/confluent-5.5.1/share/confluent-hub-components/debezium-debezium-connector-mongodb/*"

- To verify that Mongo is connected to Kafka flowing event stream
- use `Trifecta UI` localhost
- OR use Confluent `KSql`
- KSql Quick Reference

- KSql Create Stream

- KSql Print Kafka Topic's Content

- alias confluentKSqlStart='cd ${CONFLUENT_HOME}; bin/ksql-server-start etc/ksqldb/ksql-server.properties'

- use

$ trifectaStart

$ confluentKSqlStart

$ ksql

ksql> CREATE STREAM sampleGioBooks (id VARCHAR) WITH (kafka_topic='mongoConn.sampleGioDB.books', value_format='JSON');

ksql> describe extended sampleGioBooks;

ksql> print 'mongoConn.sampleGioDB.books' from beginning;

- Confluent Distributed Mode

- Confluent Distributed Configuration Properties

- Confluent Worker Configuration Properties

- Running Kafka Connect

- Running Kafka Connect – Standalone vs Distributed Mode Examples

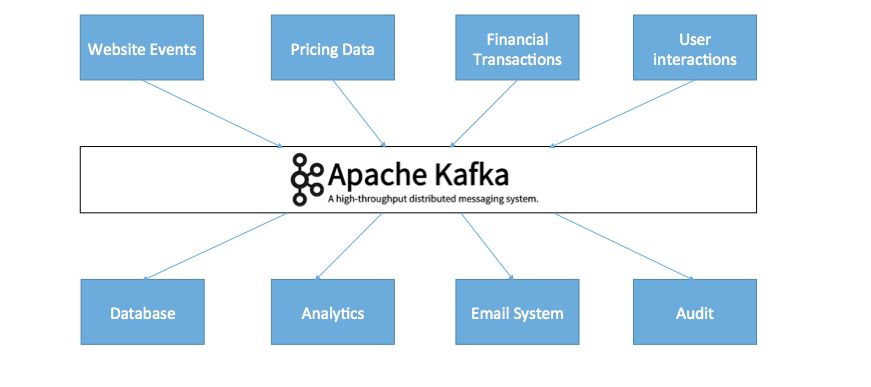

- Kafka Architecture

- 4 Key Benefits of Apache Kafka for Real-Time Data

- Kafka Connectors Without Kafka

- Kafka Streams

- Kafka Technical Overview

- Kafka Detailed Design and Ecosystem

- Kafka Tutorials

- Kafka Producer

- Kafka Consumer

- Kafka Consumer Groups

$ confluent local list

$ confluent local start # to start `all` services

$ confluent local start ksql-server # to start `ksql-server` service only

OR

alias confluentZookeeperStart='cd ${CONFLUENT_HOME}; bin/zookeeper-server-start etc/kafka/zookeeper.properties'

alias confluentKafkaStart='cd ${CONFLUENT_HOME}; bin/kafka-server-start etc/kafka/server.properties'

alias confluentKSqlStart='cd ${CONFLUENT_HOME}; bin/ksql-server-start etc/ksqldb/ksql-server.properties'

$ confluentKSqlStart

$ ksql

$ confluent local list

$ confluent local start # to start `all` services

$ confluent local start connect # to start `connect` service only
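One thing to keep in mind once the `connect` service is running in distributed mode: a distributed worker is started with only its worker properties file, and connectors are then created through the Connect REST API as JSON rather than from a second `.properties` file on the command line. As a rough sketch, the helper below turns a connector `.properties` file into that JSON payload. The function name `props_to_connector_json` is hypothetical, and it assumes simple `key=value` lines whose values contain no double quotes or backslashes.

```shell
#!/bin/sh
# Sketch only: assumes plain key=value lines, no quotes/backslashes in values.
# props_to_connector_json FILE
# Prints {"name":"...","config":{...}} for POSTing to the Connect REST API.
props_to_connector_json() {
  awk '
    /^[ \t]*(#|$)/ { next }              # skip comments and blank lines
    {
      eq = index($0, "=")                # split on the first "=" only
      if (eq == 0) next
      key = substr($0, 1, eq - 1)
      val = substr($0, eq + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", key)   # trim surrounding whitespace
      gsub(/^[ \t]+|[ \t]+$/, "", val)
      if (key == "name") { name = val; next }
      body = body sep "\"" key "\":\"" val "\""
      sep = ","
    }
    END { printf "{\"name\":\"%s\",\"config\":{%s}}\n", name, body }
  ' "$1"
}

# Example (8083 is the Connect REST port used by CONNECT_URL earlier in these notes):
#   props_to_connector_json etc/kafka/connect-mongodb-source.properties |
#     curl -s -X POST -H 'Content-Type: application/json' --data @- http://localhost:8083/connectors
```

The connector name is lifted out of the properties into the top-level `name` field, which is the shape the `POST /connectors` endpoint expects.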