This is simplified documentation for the paper "Revisiting Long-term Time Series Forecasting: An Investigation on Linear Mapping".

Problem Definition. Given a historical time series observation

where

Theorem 1. Given a seasonal time series satisfying

Corollary 1.1. When the given time series satisfies

Theorem 2. Let

Theorem 3. Let

Consider a sine wave

Let the length of input historical observation and prediction horizon be 90. According to Theorem 1, we can obtain the solution to

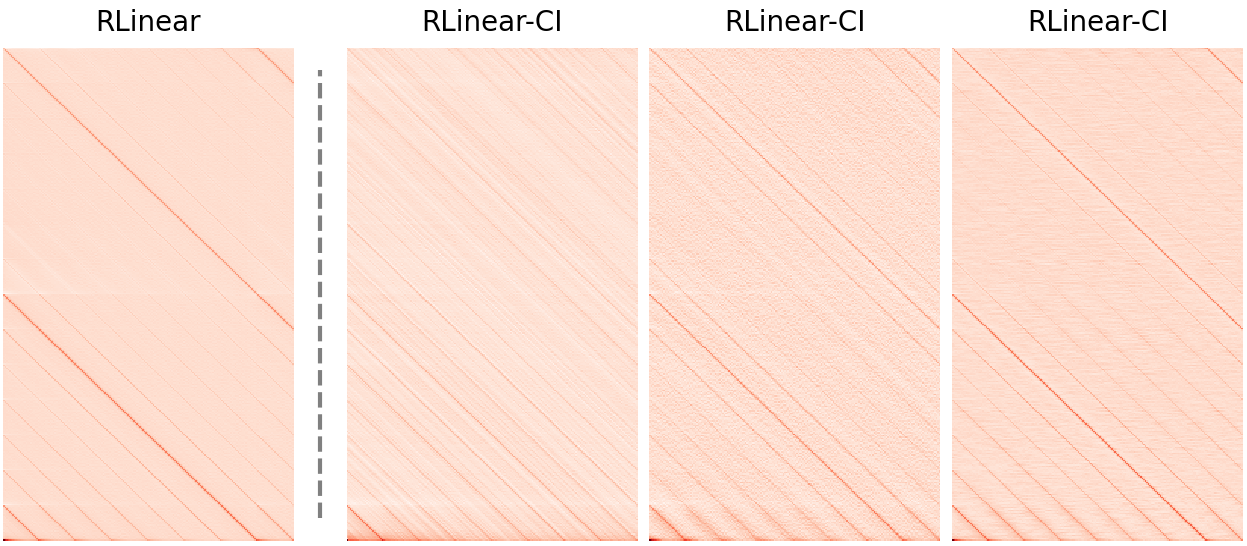
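The sine-wave setting above can be checked numerically. The sketch below is a minimal illustration and not the authors' code. It assumes a period of 30 steps (the period is not stated in this text) and builds one hypothetical "periodic copy" weight matrix: each future step is read from the input step that sits a whole number of periods earlier. The helper name `periodic_copy_weights` and the variable names are made up for this example, and the bias is taken to be zero.

```python
import numpy as np


def periodic_copy_weights(n: int, m: int, p: int) -> np.ndarray:
    """Build an (n, m) 0/1 matrix that copies the last full period forward.

    Column j has a single 1 in row n - p + (j mod p), so the j-th future
    value is read from the input value at the same phase of the period.
    Requires p <= n so that one full period lies inside the input window.
    """
    if p > n:
        raise ValueError("period p must not exceed the input length n")
    W = np.zeros((n, m))
    for j in range(m):
        W[n - p + (j % p), j] = 1.0
    return W


n = m = 90   # input length and prediction horizon, as in the text
p = 30       # ASSUMPTION: period of the sine wave, chosen for illustration

t = np.arange(n + m)
series = np.sin(2 * np.pi * t / p)
x, y = series[:n], series[n:]          # history and ground-truth future

W = periodic_copy_weights(n, m, p)
y_hat = x @ W                          # linear mapping with zero bias
max_err = float(np.max(np.abs(y_hat - y)))
print(f"max abs error: {max_err:.2e}")
```

Under these assumptions the prediction matches the true continuation up to floating-point error, which is the behaviour one would expect from a closed-form linear solution for a strictly periodic input.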

Although Theorem 3 provides a solution for predicting multivariate time series with different periodic channels, an excessively long input horizon may make the optimization of

If you find this repo useful, please cite our paper.

    @article{Li2023RevisitingLT,
      title={Revisiting Long-term Time Series Forecasting: An Investigation on Linear Mapping},
      author={Zhe Li and Shiyi Qi and Yiduo Li and Zenglin Xu},
      journal={ArXiv},
      year={2023},
      volume={abs/2305.10721}
    }

If you have any questions or want to discuss some details, please contact [email protected].

We are grateful to the following GitHub repositories for their valuable code bases and datasets:

https://github.com/zhouhaoyi/Informer2020