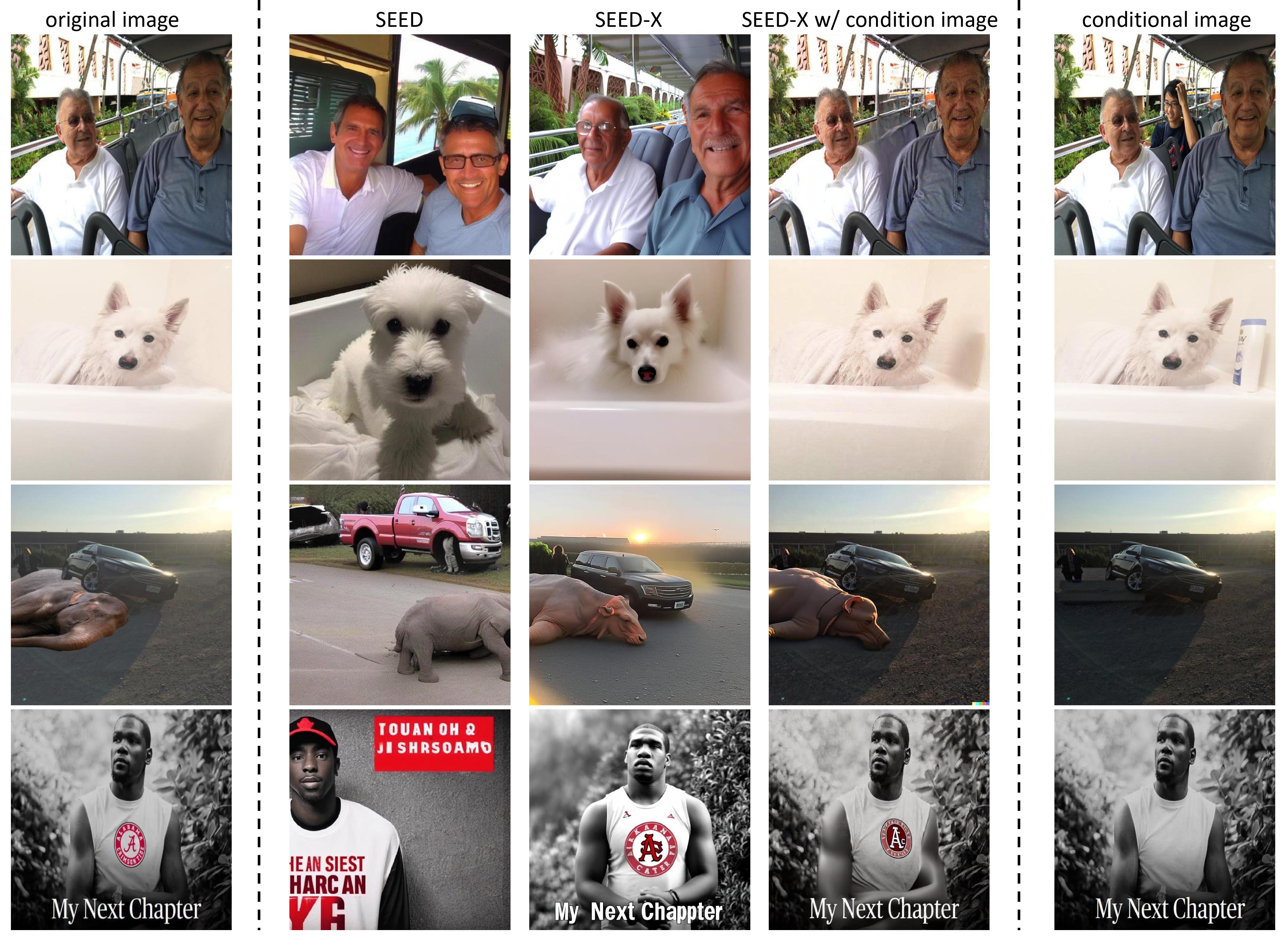

The reconstruction results of our visual de-tokenizer. It can decode realistic images that are semantically aligned with the original images by taking the ViT features as inputs, and further recover fine-grained details by incorporating the conditional images as inputs.

We use a pre-trained ViT as the visual tokenizer and pre-train a visual de-tokenizer to decode realistic images from the ViT features. Specifically, N visual embeddings (after average pooling) from the ViT tokenizer are fed into a learnable module as the inputs of the U-Net of the pre-trained SD-XL. We perform an ablation study on the number of visual embeddings and on the learnable parameters of the SD-XL U-Net; unless a setting is marked "fully fine-tune", only the keys and values within the U-Net are optimized. The input images and the reconstructed images from the visual de-tokenizer are shown in the figure below. More visual tokens yield a better reconstruction of the original images. For example, images decoded from 256 visual embeddings recover the characters' postures in the original images, while images decoded from 32 visual embeddings have already lost the original structure of the scene. We further observe that fully fine-tuning the parameters of the SD-XL U-Net can distort image details, such as the woman's feet, compared to training only the keys and values within the U-Net. In SEED-X, we use N = 64 visual embeddings to train the visual de-tokenizer and optimize only the keys and values within the U-Net (see the ablation study below for why we do not choose N = 256).
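To make the role of N concrete, the following is a minimal sketch of average pooling a sequence of ViT token embeddings down to N tokens. It is an illustration under stated assumptions, not the SEED-X implementation: it assumes the tokens lie on a square grid in row-major order and that pooling is done over non-overlapping 2D windows, and the name `average_pool_tokens` is hypothetical.

```python
from typing import List

Vector = List[float]


def average_pool_tokens(tokens: List[Vector], grid: int, out_grid: int) -> List[Vector]:
    """Pool grid*grid token vectors down to out_grid*out_grid vectors.

    Assumption (not from the SEED-X code): tokens are stored row-major on a
    square grid, and each output token is the mean of a k x k window.
    """
    if len(tokens) != grid * grid:
        raise ValueError("expected grid * grid tokens")
    if grid % out_grid != 0:
        raise ValueError("grid must be divisible by out_grid")

    k = grid // out_grid          # side length of each pooling window
    dim = len(tokens[0])          # embedding dimension
    pooled: List[Vector] = []

    for out_y in range(out_grid):
        for out_x in range(out_grid):
            acc = [0.0] * dim
            # Sum every token inside the k x k window for this output cell.
            for dy in range(k):
                for dx in range(k):
                    index = (out_y * k + dy) * grid + (out_x * k + dx)
                    for i, value in enumerate(tokens[index]):
                        acc[i] += value
            pooled.append([total / (k * k) for total in acc])
    return pooled


if __name__ == "__main__":
    # Hypothetical example: 256 one-dimensional tokens pooled to N = 64.
    vit_tokens = [[float(i)] for i in range(256)]
    pooled_tokens = average_pool_tokens(vit_tokens, grid=16, out_grid=8)
    print(len(pooled_tokens))
```

A smaller `out_grid` gives fewer embeddings for the de-tokenizer to condition on, which is the trade-off the ablation above examines.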

To enable the MLLM to generate images, we employ N learnable queries to obtain the output visual representations from the LLM, which are trained to reconstruct N visual embeddings from the ViT tokenizer with a learnable module. We first perform an ablation study on the number of learnable queries. The images generated by the MLLM from the input caption are shown in the figure below. Using 256 learnable queries to reconstruct 256 visual embeddings can lead to distortion in the generated images compared with N = 64. This occurs because regressing more visual features is more challenging for the model, even though the de-tokenizer reconstructs images better from 256 visual embeddings, as demonstrated in the previous ablation study. We also observe that, compared to learning a one-layer cross-attention for reconstructing image features, a multi-layer resampler (multi-layer cross-attention) yields less satisfactory performance, possibly because it lacks more direct regularization on the hidden states of the LLM. We further optimize the visual de-tokenizer by using the reconstructed visual embeddings from the MLLM as input instead of ViT features, but the generated images exhibit a more monotonous appearance. This demonstrates the effectiveness of using the ViT tokenizer as the bridge that decouples the training of the visual de-tokenizer from that of the MLLM for image generation.

| Model | MMB (Single) | SEED-Bench-2 (Single) | SEED-Bench-2 (Multi) | SEED-Bench-2 (Interleaved) | SEED-Bench-2 (Gen) | MME (Single) | MME (Single) |
|---|---|---|---|---|---|---|---|
| SEED-X | 65.8 | 48.2 | 53.8 | 24.3 | 57.8 | 1250 | 236 |
| SEED-X-I | 77.8 | 66.8 | 57.1 | 40.5 | 61.6 | 1520 | 338 |

| Dataset | Number | Description |

|---|---|---|

| LAION-COCO | 600M | Web images with synthetic captions by BLIP L/14. 30M images are re-captioned by an MLLM. |

| SAM | 11M | Diverse and high-resolution images, with captions generated by an MLLM. |

| LAION-Aesthetics | 3M | Image-text pairs with predicted aesthetics scores of 6.25 or higher. |

| Unsplash | 2M | Images from contributing global photographers, with captions generated by an MLLM. |

| JourneyDB | 4M | High-resolution Midjourney images, annotated with the corresponding text prompt and image caption. |

| CapFusion | 120M | Images from LAION-COCO, with captions integrated from both the web and synthetic captions. |

| Dataset | Number | Description |

|---|---|---|

| GRIT | 191M | Image-text pairs with noun phrases in the caption annotated with bounding boxes. |

| Dataset | Number | Description |

|---|---|---|

| MMC4 | 7M | An augmentation of the text-only c4 corpus with images interleaved. |

| OBELICS | 141M | A web-scale filtered dataset of interleaved image-text documents comprising web pages extracted from Common Crawl. |

| OpenFlamingo | 400K | A sequence of interleaved text and image alt-texts generated by ChatGPT, with images retrieved from LAION-5B. |

| Dataset | Number | Description |

|---|---|---|

| LLaVAR-Pretrain | 400K | Text-rich images from LAION, with OCR results. |

| Slides | 1M | Images from slides, with OCR results. |

| Dataset | Number | Description |

|---|---|---|

| Wikipedia | 66M | Cleaned articles of all languages from the Wikipedia dump. |

| Dataset | Number | Description |

|---|---|---|

| LLaVAR-sft | 16K | High-quality instruction-following data by interacting with GPT-4 based on OCR results of text-rich images. |

| Text-rich QA | 900K | Instruction-following data generated by GPT-4V based on text-rich images. |

| MIMIC-IT | 150K | Difference spotting data with general scene difference and subtle difference. |

| MathQA | 37K | Math word problems that are densely annotated with operation programs. |

| ChartQA | 33K | Human-written questions focusing on visual and logical reasoning about charts. |

| AI2D | 5K | Illustrative diagrams for diagram understanding and associated question answering. |

| ScienceQA | 21K | Multiple-choice science questions collected from elementary and high school science curricula. |

| KVQA | 183K | Questions that require multi-entity, multi-relation, and multi-hop reasoning over large Knowledge Graphs. |

| DVQA | 3M | A synthetic question-answering dataset on images of bar-charts. |

| Grounded QA | 680K | Questions constructed from region captions with bounding boxes. |

| Referencing QA | 630K | Questions constructed from images with regions marked. |

| Dataset | Number | Description |

|---|---|---|

| LLaVA-150k | 150K | A set of GPT-generated multimodal instruction-following data. |

| ShareGPT | 1.2M | 100K high-quality captions collected from GPT-4V and 1.2 million images captioned by a superb caption model. |

| LVIS-Instruct4V | 220K | A fine-grained visual instruction dataset produced by GPT-4V with images from LVIS. |

| VLIT | 770K | A multi-round question answering dataset about a given image from COCO. |

| Vision-Flan | 190K | A visual instruction tuning dataset that consists of 200+ diverse vision-language tasks derived from 101 open-source computer vision datasets. |

| ALLaVA-4V | 1.4M | Images with fine-grained captions, complex instructions and detailed answers generated by GPT-4V. |

| LAION-COCO | 600M | Web images with synthetic captions by BLIP L/14. 30M images are re-captioned by an MLLM. |

| SAM | 11M | Diverse and high-resolution images, with captions generated by an MLLM. |

| LAION-Aesthetics | 3M | Image-text pairs with predicted aesthetics scores of 6.25 or higher. |

| Unsplash | 2M | Images from contributing global photographers, with captions generated by an MLLM. |

| JourneyDB | 4M | High-resolution Midjourney images, annotated with the corresponding text prompt and image caption. |

| Dataset | Number | Description |

|---|---|---|

| Instructpix2pix | 313K | Image editing examples with language instructions generated by GPT-3 and Stable Diffusion. |

| MagicBrush | 10K | Manually annotated triplets (source image, instruction, target image) with multi rounds. |

| Openimages-editing | 1.4M | Image editing examples with language instructions constructed by an automatic pipeline, with images from Openimages. |

| Unsplash-editing | 1.3M | Image editing examples with language instructions constructed by an automatic pipeline, with images from Unsplash. |

| Dataset | Number | Description |

|---|---|---|

| SlidesGen | 10K | Slides with layout descriptions and captions generated by a slide2json tool and an MLLM. |

| Dataset | Number | Description |

|---|---|---|

| VIST | 20K | Unique photos in sequences, aligned to both descriptive (caption) and story language. |

| Dataset | Number | Description |

|---|---|---|

| VITON-HD | 13K | A dataset for high-resolution virtual try-on, with frontal-view woman and top clothing image pairs. |

| Benchmark | Number | Description |

|---|---|---|

| MMBench | 3K | Multiple-choice questions for evaluating both perception and reasoning covering 20 fine-grained ability dimensions. |

| SEED-Bench-2 | 24K | Multiple-choice questions with accurate human annotations, which spans 27 dimensions, including the evaluation of both text and image generation. |

| MME | 2K | True/False questions for evaluating both perception and cognition, including a total of 14 subtasks. |

- Python >= 3.8 (Anaconda is recommended)

- PyTorch >= 2.0.1

- NVIDIA GPU + CUDA
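The requirements above can be checked before installation with a short script. This is a minimal sketch, not part of the repository: the function names `parse_version` and `check_environment` are hypothetical, and the version parsing assumes PyTorch version strings of the form `2.0.1+cu118`.

```python
import re
import sys
from typing import List, Tuple

MIN_PYTHON = (3, 8)
MIN_TORCH = (2, 0, 1)


def parse_version(text: str) -> Tuple[int, ...]:
    """Extract the leading numeric components, e.g. '2.0.1+cu118' -> (2, 0, 1)."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", text)
    if match is None:
        raise ValueError(f"unrecognized version string: {text!r}")
    # A missing patch component is treated as 0.
    return tuple(int(part) for part in match.groups(default="0"))


def check_environment() -> List[str]:
    """Return a list of human-readable problems; an empty list means all checks passed."""
    problems: List[str] = []

    if sys.version_info[:2] < MIN_PYTHON:
        problems.append("Python >= 3.8 is required")

    try:
        import torch  # imported lazily so the script runs without PyTorch
    except ImportError:
        problems.append("PyTorch is not installed")
        return problems

    if parse_version(torch.__version__) < MIN_TORCH:
        problems.append("PyTorch >= 2.0.1 is required")
    if not torch.cuda.is_available():
        problems.append("No CUDA device is visible to PyTorch")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    for issue in issues:
        print("Problem:", issue)
    if not issues:
        print("Environment looks compatible.")
```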

Clone the repo and install dependent packages

git clone this_project

cd SEED-X

pip install -r requirements.txt

We release the pre-trained De-Tokenizer, the pre-trained foundation model SEED-X, the general instruction-tuned model SEED-X-I, and the editing model SEED-X-Edit on Google Drive.

Please download the checkpoints and save them under the folder ./pretrained. For example, ./pretrained/seed_x.
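A quick way to confirm the checkpoint layout before running inference is to list which expected folders are missing under ./pretrained. This is a sketch only: `missing_checkpoints` is a hypothetical helper, and apart from `seed_x` the folder names in the usage example are assumptions based on the model names in these setup instructions, so adjust them to match what you actually downloaded.

```python
from pathlib import Path
from typing import Iterable, List


def missing_checkpoints(root: Path, names: Iterable[str]) -> List[str]:
    """Return the names that do not exist as directories under root."""
    return [name for name in names if not (root / name).is_dir()]


if __name__ == "__main__":
    # Only "seed_x" is given in the instructions; the other two folder
    # names are assumptions derived from the model names.
    expected = ["seed_x", "stable-diffusion-xl-base-1.0", "Qwen-VL-Chat"]
    missing = missing_checkpoints(Path("./pretrained"), expected)
    if missing:
        print("Missing under ./pretrained:", ", ".join(missing))
    else:
        print("All expected checkpoint folders are present.")
```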

You also need to download stable-diffusion-xl-base-1.0 and Qwen-VL-Chat, and save them under the folder ./pretrained. Please use the following script to extract the weights of visual encoder in Qwen-VL-Chat.

python3 src/tools/reload_qwen_vit.py

# For image reconstruction with ViT image features

python3 src/inference/eval_seed_x_detokenizer.py

# For image reconstruction with ViT image features and conditional image

python3 src/inference/eval_seed_x_detokenizer_with_condition.py

# For image comprehension and detection

python3 src/inference/eval_img2text_seed_x.py

# For image generation

python3 src/inference/eval_text2img_seed_x.py

# For image comprehension and detection

python3 src/inference/eval_img2text_seed_x_i.py

# For image generation

python3 src/inference/eval_text2img_seed_x_i.py

# For image editing

python3 src/inference/eval_img2edit_seed_x_edit.py

- Prepare the pre-trained models, including the pre-trained foundation model SEED-X and the visual encoder of Qwen-VL-Chat (see Model Weights).

- Prepare the instruction tuning data. For example, for the "build_llava_jsonl_datapipes" dataloader, each folder stores a number of jsonl files, and each jsonl file contains 10K records. An example record is as follows:

{"image": "coco/train2017/000000033471.jpg", "data": ["What are the colors of the bus in the image?", "The bus in the image is white and red.", "What feature can be seen on the back of the bus?", "The back of the bus features an advertisement.", "Is the bus driving down the street or pulled off to the side?", "The bus is driving down the street, which is crowded with people and other vehicles."]}

For the "build_caption_datapipes_with_pixels" dataloader, each folder stores a number of .tar files, from which image-text pairs are read in webdataset format.

For the "build_single_turn_edit_datapipes" dataloader, each folder stores a number of jsonl files, and each jsonl file contains 10K records. An example record is as follows:

{"source_image": "source_images/f6f4d0669694df5b.jpg", "target_image": "target_images/f6f4d0669694df5b.jpg", "instruction": "Erase the car that is parked in front of the Roebuck building."}

- Run the following script.

# For general instruction tuning for multimodal comprehension and generation

sh scripts/train_seed_x_sft_comp_gen.sh

# For training language-guided image editing

sh scripts/train_seed_x_sft_edit.sh

- Obtain "pytorch_model.bin" with the following script.

cd train_output/seed_x_sft_comp_gen/checkpoint-xxxx

python3 zero_to_fp32.py . pytorch_model.bin

- Change "pretrained_model_path" in "configs/clm_models/agent_seed_x.yaml" to the new checkpoint. For example,

pretrained_model_path: train_output/seed_x_sft_comp_gen/checkpoint-4000/pytorch_model.bin

- Change the "llm_cfg_path" and "agent_cfg_path" in the inference script (see below), which will automatically load the trained LoRA weights onto the pre-trained model SEED-X.

llm_cfg_path = 'configs/clm_models/llm_seed_x_lora.yaml'

agent_cfg_path = 'configs/clm_models/agent_seed_x.yaml'

- Run the inference script,

# For image comprehension

python3 src/inference/eval_img2text_seed_x_i.py

# For image generation

python3 src/inference/eval_text2img_seed_x_i.py

# For image editing

python3 src/inference/eval_img2edit_seed_x_edit.py
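The two jsonl record formats shown in the data-preparation step can be sanity-checked before training with a small parser. This is a minimal sketch, not the repository's dataloader code: the function names are hypothetical, and it assumes that the "data" list alternates question and answer strings, as the conversation example suggests.

```python
import json
from typing import Dict, List, Tuple

EDIT_KEYS = ("source_image", "target_image", "instruction")


def parse_conversation_record(line: str) -> Tuple[str, List[Tuple[str, str]]]:
    """Parse one conversation record into (image path, list of (question, answer) turns)."""
    record = json.loads(line)
    data = record["data"]
    # Assumption: entries alternate question, answer, question, answer, ...
    if len(data) % 2 != 0:
        raise ValueError("expected an even number of entries in 'data'")
    turns = [(data[i], data[i + 1]) for i in range(0, len(data), 2)]
    return record["image"], turns


def parse_edit_record(line: str) -> Dict[str, str]:
    """Parse one editing record and check that all required keys are present."""
    record = json.loads(line)
    missing = [key for key in EDIT_KEYS if key not in record]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    return {key: record[key] for key in EDIT_KEYS}


if __name__ == "__main__":
    conversation_line = json.dumps({
        "image": "coco/train2017/000000033471.jpg",
        "data": [
            "What are the colors of the bus in the image?",
            "The bus in the image is white and red.",
        ],
    })
    image_path, qa_turns = parse_conversation_record(conversation_line)
    print(image_path, len(qa_turns))
```

Running such a check over each jsonl file can catch malformed records early, before a long training job is launched.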