Demo samples of our paper "Audio-Visual Speech Separation with Visual Features Enhanced by Adversarial Training". If you have questions, feel free to ask me ([email protected]).

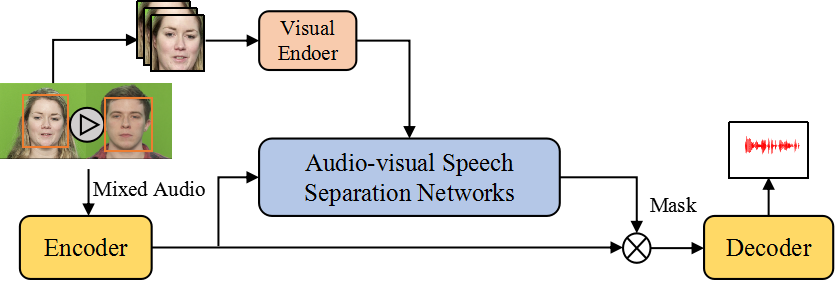

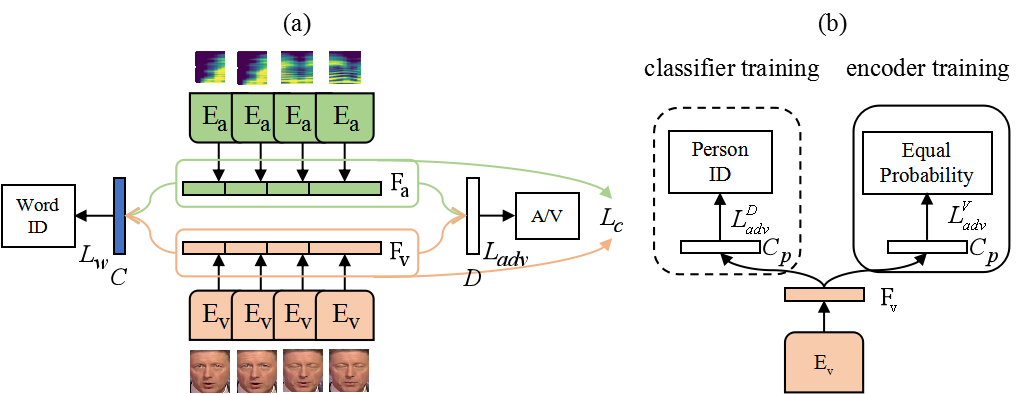

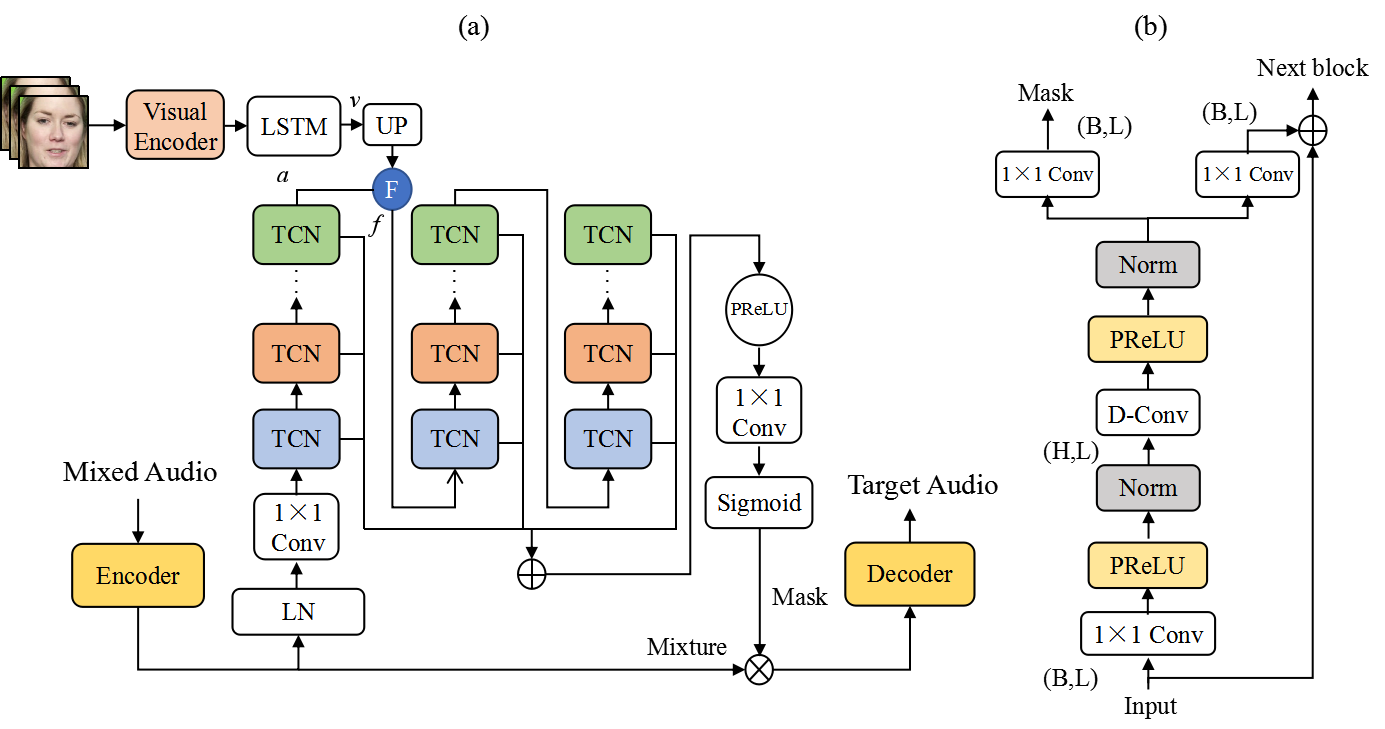

Audio-visual speech separation (AVSS) refers to separating an individual voice from an audio mixture of multiple simultaneous talkers by conditioning on visual features. Visual features play an important role in the AVSS task, so we aim to extract more effective visual features to improve separation performance. In this paper, we propose a novel AVSS model that uses speech-related visual features to isolate the target speaker. Specifically, speech-related visual features are extracted in two steps. First, we extract visual features that contain speech-related information by learning a joint audio-visual representation. Second, we use adversarial training to further enhance the speech-related information in the visual features. We adopt the time-domain approach and build audio-visual speech separation networks with temporal convolutional neural network blocks. Experiments on audio-visual datasets, including GRID, TCD-TIMIT, AVSpeech, and LRS2, show that our model significantly outperforms previous state-of-the-art AVSS models. We also demonstrate that our model can achieve excellent speech separation performance in noisy real-world scenarios. Moreover, to alleviate the performance degradation of AVSS models caused by missing video frames, we propose a training strategy that makes our model robust when video frames are partially missing.
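The exact training strategy for missing video frames is described in the paper. As a rough illustration only, the NumPy sketch below simulates missing frames during training by zeroing a random contiguous run of frames in the visual feature sequence. The function name `drop_video_frames`, the contiguous-run choice, zero filling, and the `max_drop_ratio` parameter are all hypothetical assumptions, not the authors' method.

```python
import numpy as np


def drop_video_frames(visual_feats, max_drop_ratio=0.5, rng=None):
    """Hypothetical augmentation: zero out a random contiguous run of frames.

    visual_feats: array of shape (num_frames, feat_dim).
    max_drop_ratio: assumed upper bound on the fraction of frames removed.
    Returns the masked copy and a boolean mask marking the kept frames.
    """
    rng = np.random.default_rng() if rng is None else rng
    num_frames = visual_feats.shape[0]
    # Number of frames to drop, drawn uniformly from [0, max_drop_ratio * num_frames].
    num_drop = int(rng.integers(0, int(max_drop_ratio * num_frames) + 1))
    # Start position chosen so the dropped run fits inside the sequence.
    start = int(rng.integers(0, num_frames - num_drop + 1))
    keep = np.ones(num_frames, dtype=bool)
    keep[start:start + num_drop] = False
    masked = visual_feats.copy()
    masked[~keep] = 0.0  # assumption: missing frames are represented by zeros
    return masked, keep
```

Applied per training example, this exposes the separation network to incomplete visual streams so it learns not to rely on every frame being present.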

The structure of the visual front-end model.

The networks can be built by following the descriptions in our paper.
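The abstract states that the separation networks are built from temporal convolutional neural network blocks. For orientation, here is a minimal NumPy sketch of one generic residual TCN block (1x1 conv, dilated depthwise conv, 1x1 conv, skip connection), in the style popularized by Conv-TasNet. The layer order, the leaky-ReLU stand-in for PReLU, the omitted normalization, and all names and shapes are assumptions for illustration; the actual configuration is given in the paper.

```python
import numpy as np


def dilated_conv1d(x, weight, dilation):
    """Depthwise dilated 1-D convolution with 'same' padding (odd kernel sizes).

    x: (channels, time); weight: (channels, kernel_size).
    """
    channels, time = x.shape
    kernel_size = weight.shape[1]
    pad = dilation * (kernel_size - 1) // 2
    x_pad = np.pad(x, ((0, 0), (pad, pad)))
    out = np.zeros((channels, time))
    for k in range(kernel_size):
        # Each kernel tap sees the input shifted by k * dilation samples.
        out += weight[:, k:k + 1] * x_pad[:, k * dilation:k * dilation + time]
    return out


def tcn_block(x, w_in, w_depth, w_out, dilation):
    """Hypothetical residual TCN block; normalization layers are omitted.

    x: (channels, time); w_in: (hidden, channels);
    w_depth: (hidden, kernel_size); w_out: (channels, hidden).
    """
    h = w_in @ x                      # 1x1 conv: channels -> hidden
    h = np.maximum(h, 0.25 * h)       # leaky ReLU as a stand-in for PReLU
    h = dilated_conv1d(h, w_depth, dilation)
    h = np.maximum(h, 0.25 * h)
    return x + w_out @ h              # 1x1 conv back to channels, plus residual
```

In a full network, several such blocks are typically stacked with exponentially growing dilations (1, 2, 4, ...) so the receptive field covers a long temporal context at low cost.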

- GRID [paper] [dataset page]

- TCD-TIMIT [paper] [dataset page]

- AVSpeech [paper] [dataset page]

- LRS2 [paper] [dataset page]

The method of generating training, validation, and test samples is detailed in our paper.
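The exact sample-generation procedure is in the paper. As a generic illustration of how two-speaker mixtures are commonly created, the sketch below scales an interfering utterance so that the target-to-interferer energy ratio equals a chosen SNR in dB, then sums the two signals. Truncating to the shorter utterance and the name `mix_at_snr` are assumptions, not necessarily what the authors did.

```python
import numpy as np


def mix_at_snr(target, interferer, snr_db):
    """Hypothetical helper: mix two waveforms at a given target-to-interferer SNR (dB).

    Both signals are truncated to the shorter length (assumption).
    Returns (mixture, target, scaled_interferer).
    """
    length = min(len(target), len(interferer))
    target = np.asarray(target[:length], dtype=float)
    interferer = np.asarray(interferer[:length], dtype=float)
    p_target = np.sum(target ** 2)
    p_interf = np.sum(interferer ** 2)
    # Choose scale so that 10 * log10(p_target / (scale**2 * p_interf)) == snr_db.
    scale = np.sqrt(p_target / (p_interf * 10 ** (snr_db / 10)))
    interferer = scale * interferer
    return target + interferer, target, interferer
```

The returned target serves as the training reference for the separated output, and the mixture is the network input.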

We provide samples from standard datasets as well as samples recorded in real-world environments.

- Listen to and watch the samples recorded in real-world environments at ./samples/samples of real-world environment.

- Listen to the samples from standard datasets at ./samples/sample of standard dataset.

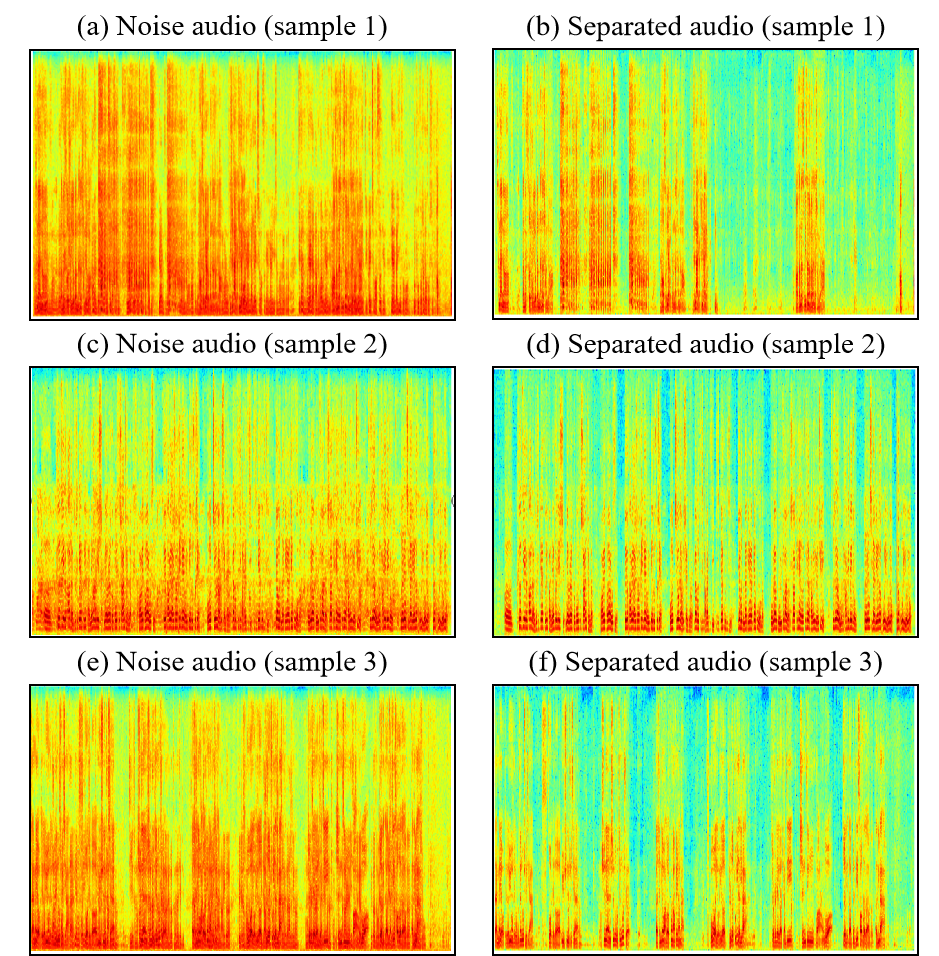

- Spectrogram samples recorded in real-world environments.

If you find this repo helpful, please consider citing:

@inproceedings{zhang2021avss,

title={Audio-Visual Speech Separation with Visual Features Enhanced by Adversarial Training},

author={Zhang, Peng and Xu, Jiaming and Shi, Jing and Hao, Yunzhe and Qin, Lei and Xu, Bo},

booktitle={Proceedings of the 33rd International Joint Conference on Neural Networks (IJCNN)},

year={2021},

organization={IEEE}

}