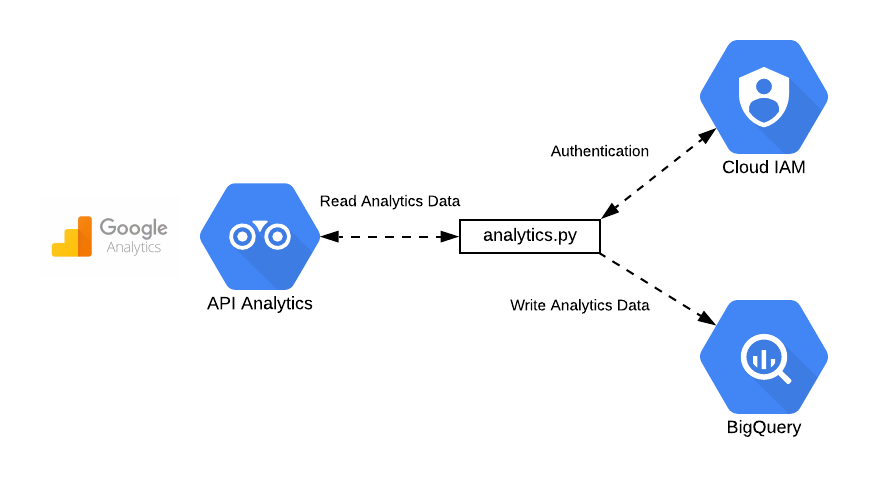

A Python script that extracts data from Google Analytics (GA) and imports it into a Google BigQuery (BQ) table.

Google offer a native way to export GA data into BQ; however, it is only available in GA 360, the paid enterprise version of Google Analytics, which costs $$$$.

For those who don't use GA 360, we have created a Python script that calls the GA API, extracts user activity data and writes it into a specified BQ table.

Loading your GA data into BQ opens up options such as:

- Recommendations: By grouping together users with similar browsing habits, you could recommend other areas of your website that a user might be interested in.

- Custom Dashboards: Use Google Data Studio to build dashboards that combine your GA data with other data sources to enrich it.

Before running the script, complete the following setup:

1. Create a Google Cloud Project.
2. Within this Project, create a Service Account.
3. For that Service Account, create a JSON service account key.
4. Enable the Google Analytics API.

Note: Google have created a Setup Tool that will guide you through steps 1-4.

5. Add the Service Account (by its email address) to the Google Analytics account with 'Read & Analyze' permissions.
6. Use the Google Analytics Account Explorer to obtain the ID of the View you want to extract data from.
7. Grant the Service Account the 'BigQuery Data Editor' and 'BigQuery Job User' roles.
8. Create a BigQuery dataset.

TODO - describe how we use Google IAM to auth with Analytics and BQ

When running for the first time, set up a Python virtualenv and install the dependencies:

```bash
virtualenv env
source env/bin/activate
pip install -r requirements.txt
```

You'll also need to set the `GOOGLE_APPLICATION_CREDENTIALS` environment variable to the path of the JSON key file for the service account you created during setup. For example:

```bash
export GOOGLE_APPLICATION_CREDENTIALS=/home/<you>/.keys/ga-service-account.json
```
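Before running the script, it may be worth confirming that the variable points at a genuine service account key. The sketch below uses only the standard library; the function `service_account_email` is hypothetical and not part of `analytics.py`. It relies on the fact that service account JSON keys carry `"type": "service_account"` and a `client_email` field, and that email address is the one you add to the Google Analytics account with 'Read & Analyze' permissions.

```python
import json
import os
from typing import Optional


def service_account_email(path: Optional[str] = None) -> str:
    """Return client_email from a service account JSON key file.

    Falls back to GOOGLE_APPLICATION_CREDENTIALS when no path is given and
    raises a clear error if the variable is unset, the file is missing, or
    the file is not a service account key.
    """
    path = path or os.environ.get("GOOGLE_APPLICATION_CREDENTIALS")
    if not path:
        raise RuntimeError("GOOGLE_APPLICATION_CREDENTIALS is not set")
    if not os.path.isfile(path):
        raise FileNotFoundError(f"No key file at {path}")
    with open(path, encoding="utf-8") as fh:
        key = json.load(fh)
    # Keys created for a service account have type "service_account".
    if key.get("type") != "service_account":
        raise ValueError(f"{path} is not a service account key")
    return key["client_email"]


if __name__ == "__main__":
    try:
        print(service_account_email())
    except (RuntimeError, OSError, ValueError, KeyError) as err:
        print(f"Credentials check failed: {err}")
```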

To run the script:

```bash
python analytics.py -v <your view ID> -t <destination big query table>
```
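If you want to call the importer from your own tooling, a command-line interface with the same two flags might look like this hypothetical `argparse` sketch. Only `-v` and `-t` come from the usage line; the long option names `--view-id` and `--table` and the numeric check on the View ID are assumptions (Universal Analytics View IDs shown in the Account Explorer are numeric).

```python
import argparse
from typing import List, Optional


def view_id(value: str) -> str:
    """Assumed check: reject View IDs that are not purely numeric."""
    if not value.isdigit():
        raise argparse.ArgumentTypeError(f"View ID should be numeric, got {value!r}")
    return value


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse -v/-t as in the usage line; long names are hypothetical."""
    parser = argparse.ArgumentParser(
        description="Export Google Analytics data to a BigQuery table.")
    parser.add_argument("-v", "--view-id", type=view_id, required=True,
                        help="Google Analytics View ID")
    parser.add_argument("-t", "--table", required=True,
                        help="Destination table: <project id>.<dataset id>.<table id>")
    return parser.parse_args(argv)


if __name__ == "__main__":
    # Placeholder View ID; argv is passed explicitly for illustration.
    args = parse_args(["-v", "123456789", "-t", "fuzzylabs.analytics.test"])
    print(args.view_id, args.table)  # 123456789 fuzzylabs.analytics.test
```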

The script creates the destination BigQuery table, so the table should not already exist (the dataset it belongs to must). Specify it as a fully-qualified ID of the form `<project id>.<dataset id>.<table id>`, for example `fuzzylabs.analytics.test`.
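A malformed table ID is easy to catch before any API call is made. This is a minimal standard-library sketch; `parse_table_id` and `TableId` are hypothetical names, not part of the script. It uses `rsplit` with `maxsplit=2` so that domain-scoped project IDs such as `example.com:my-project` keep their internal dot, and it applies BigQuery's dataset naming rule (letters, digits and underscores only). If you already depend on google-cloud-bigquery, its `TableReference.from_string` performs comparable parsing.

```python
import re
from typing import NamedTuple


class TableId(NamedTuple):
    project: str
    dataset: str
    table: str


def parse_table_id(full_id: str) -> TableId:
    """Split '<project id>.<dataset id>.<table id>' into its three parts."""
    # Only the last two dots separate the parts, so a project ID that
    # itself contains a dot (domain-scoped projects) stays intact.
    parts = full_id.rsplit(".", 2)
    if len(parts) != 3 or not all(parts):
        raise ValueError(
            f"{full_id!r} is not of the form <project id>.<dataset id>.<table id>")
    project, dataset, table = parts
    # Dataset IDs may contain only letters, digits and underscores.
    if not re.fullmatch(r"[A-Za-z0-9_]+", dataset):
        raise ValueError(f"invalid dataset ID {dataset!r}")
    return TableId(project, dataset, table)


if __name__ == "__main__":
    print(parse_table_id("fuzzylabs.analytics.test"))
    # TableId(project='fuzzylabs', dataset='analytics', table='test')
```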

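A Dockerfile is also provided if you would prefer to run this in a container:

```bash
docker build -t ga_bq_importer .

docker run \
  -e GOOGLE_APPLICATION_CREDENTIALS=/tmp/keys/gcloud_creds.json \
  -v "$GOOGLE_APPLICATION_CREDENTIALS":/tmp/keys/gcloud_creds.json:ro \
  ga_bq_importer -v <your view ID> -t <destination big query table>
```

The `docker run` command mounts your local key file read-only at `/tmp/keys/gcloud_creds.json` and sets `GOOGLE_APPLICATION_CREDENTIALS` inside the container to that path. The first `-v` is Docker's volume flag; the `-v` and `-t` after the image name are the script's own options.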