This repository is inspired by the article "Fine-tuning Large Language Models (LLMs)". It contains code for training a text classification model on the Stanford Sentiment Treebank (SST-2) dataset using LoRA (Low-Rank Adaptation of Large Language Models) fine-tuning. LoRA makes fine-tuning a pre-trained language model cheaper. Instead of retraining the whole model for each task, LoRA freezes the pre-trained weights and adds small trainable low-rank matrices to the model's layers. Only these matrices are updated during training, so the model adapts to a new task without modifying its original parameters.

Before using the code in this repository, you need to install the required libraries. You can do this by running the following commands:

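```
!pip install datasets
!pip install transformers
!pip install peft
!pip install evaluate
```

These commands install the libraries for loading datasets (`datasets`), working with pre-trained models (`transformers`), applying LoRA (`peft`), and computing evaluation metrics (`evaluate`). The leading `!` runs a shell command from a Jupyter or Colab notebook cell. Omit it when installing from a terminal.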

Here's how to use the code in this repository:

-

Load the SST-2 dataset:

The code loads the SST-2 dataset using the Hugging Face

datasetslibrary. SST-2 consists of sentences from movie reviews, annotated with sentiment labels. You can find more information about the SST-2 dataset here. -

Define the LoRA model architecture:

The code uses the LoRA technique to fine-tune a pre-trained language model for text classification. LoRA efficiently adapts the model to the task by leveraging the low-rank property of weight differences. The implementation of LoRA can be found in the code.

-

Tokenize the dataset:

The dataset is tokenized using the model's tokenizer, and special tokens like

[PAD]are added if they don't already exist. -

Train the LoRA model:

The code defines training hyperparameters and trains the LoRA-adapted model using the provided dataset. Training arguments such as learning rate, batch size, and the number of epochs can be adjusted in the

training_argsvariable. -

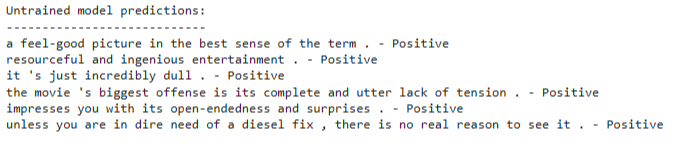

Infer with the trained LoRA model:

After training, you can use the trained LoRA-adapted model for inference on new text inputs. The code demonstrates how to load the model and make predictions on a list of example sentences.
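The loading and tokenization steps above can be sketched as follows. This is a minimal sketch, not the repository's code. Several details are assumptions chosen for illustration: the `distilbert-base-uncased` checkpoint, the GLUE `sst2` configuration on the Hugging Face Hub, `max_length=128`, and the helper names `ensure_pad_token`, `tokenize_batch`, and `load_tokenized_sst2`. The Hugging Face libraries are imported inside `load_tokenized_sst2` so that the two small helpers can be reused without downloading anything.

```python
# Minimal sketch (not the repository's code): load SST-2 and tokenize it.
# Assumed: the GLUE "sst2" config, the "distilbert-base-uncased" checkpoint,
# and all helper names below, which are hypothetical.

MODEL_CHECKPOINT = "distilbert-base-uncased"  # hypothetical choice of base model
ID2LABEL = {0: "negative", 1: "positive"}     # SST-2 label ids
LABEL2ID = {label: idx for idx, label in ID2LABEL.items()}


def ensure_pad_token(tokenizer, model):
    """Add a [PAD] token if the tokenizer lacks one and resize the embeddings to match."""
    if tokenizer.pad_token is None:
        tokenizer.add_special_tokens({"pad_token": "[PAD]"})
        model.resize_token_embeddings(len(tokenizer))
    return tokenizer, model


def tokenize_batch(batch, tokenizer, max_length=128):
    """Tokenize the "sentence" column; padding is left to a data collator at training time."""
    return tokenizer(batch["sentence"], truncation=True, max_length=max_length)


def load_tokenized_sst2(checkpoint=MODEL_CHECKPOINT):
    # Imported here so the helpers above work without the Hugging Face stack.
    from datasets import load_dataset
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    # Splits: train / validation / test (the GLUE test split has no public labels).
    dataset = load_dataset("glue", "sst2")
    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = AutoModelForSequenceClassification.from_pretrained(
        checkpoint, num_labels=2, id2label=ID2LABEL, label2id=LABEL2ID
    )
    tokenizer, model = ensure_pad_token(tokenizer, model)
    tokenized = dataset.map(
        lambda batch: tokenize_batch(batch, tokenizer),
        batched=True,
        remove_columns=["sentence", "idx"],  # keep only model inputs and "label"
    )
    return tokenized, tokenizer, model
```

Calling `tokenized, tokenizer, model = load_tokenized_sst2()` downloads the data and the checkpoint. DistilBERT's tokenizer already defines `[PAD]`, so `ensure_pad_token` leaves it unchanged. The helper only matters for models whose tokenizers lack a padding token.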

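The code uses a text classification model built on a pre-trained language model and applies LoRA fine-tuning to adapt it efficiently. LoRA is based on the hypothesis that the weight updates needed to adapt a pre-trained model to a new task have a low "intrinsic rank". Under that hypothesis, each update can be represented as the product of two much smaller matrices. This greatly reduces the number of trainable parameters and the memory needed for fine-tuning.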

For detailed information about the LoRA technique, you can refer to the LoRA paper.
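The low-rank idea can be made concrete. For a frozen weight matrix `W0` of shape d × k, LoRA learns an update `ΔW = B·A`. Here `B` is d × r, `A` is r × k, and the rank r is much smaller than d and k. The adapted layer then computes `h = W0·x + (alpha / r)·B·A·x`. The plain-Python sketch below uses toy sizes and hypothetical helper names. It follows the initialization described in the LoRA paper: `A` is random Gaussian and `B` is zero. Because `B` starts at zero, the adapted layer starts out identical to the frozen one. The sketch also compares trainable-parameter counts for a full update and a rank-8 LoRA update of a 768 × 768 projection, which is the hidden size of BERT-base-sized models such as DistilBERT.

```python
# Minimal sketch of a LoRA-adapted linear layer in plain Python (no NumPy/PyTorch).
# W0 (d x k) stays frozen; only A (r x k) and B (d x r) would be trained.
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(w0, a, b, x, alpha, r):
    """Compute h = W0 x + (alpha / r) * B (A x)."""
    base = matvec(w0, x)              # frozen path
    update = matvec(b, matvec(a, x))  # low-rank path through an r-dim bottleneck
    scale = alpha / r
    return [h + scale * u for h, u in zip(base, update)]


def trainable_params(d, k, r):
    """Trainable parameters for a full update of W0 vs. a rank-r LoRA update."""
    full = d * k        # every entry of the d x k matrix
    lora = r * (d + k)  # A has r*k entries, B has d*r entries
    return full, lora


d, k, r, alpha = 4, 3, 2, 8
rng = random.Random(0)
w0 = [[rng.uniform(-1, 1) for _ in range(k)] for _ in range(d)]
a = [[rng.gauss(0, 0.02) for _ in range(k)] for _ in range(r)]  # random Gaussian init
b = [[0.0] * r for _ in range(d)]                                # zero init
x = [1.0, 2.0, 3.0]

# Because B starts at zero, the adapted layer initially matches the frozen layer.
print(lora_forward(w0, a, b, x, alpha, r) == matvec(w0, x))  # True
print(trainable_params(768, 768, 8))  # (589824, 12288)
```

At toy sizes LoRA saves nothing. The savings appear when r is much smaller than d and k. For the 768 × 768 case, 12,288 trainable values replace 589,824, about 2%.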

To train the LoRA-adapted model, follow these steps:

- Install the required libraries as mentioned in the Installation section.
- Define your training dataset or use the SST-2 dataset loaded by the code.
- Configure the model architecture and hyperparameters in the code.
- Run the training code; the model is then fine-tuned with LoRA on your dataset.
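The training steps above can be sketched with `peft` and the `transformers` `Trainer`. This is a hedged illustration, not the repository's configuration. The following are all assumptions:

- the LoRA settings (`r=8`, `lora_alpha=16`, `lora_dropout=0.1`);
- the DistilBERT-specific `target_modules` names `q_lin` and `v_lin`;
- the training arguments;
- the helper names `compute_metrics` and `train_lora`.

Accuracy is computed in plain Python here rather than with the `evaluate` library, so the metric function can be checked without downloads. `tokenized` is assumed to be a `DatasetDict` with tokenized `train` and `validation` splits, as produced by the tokenization step described earlier.

```python
# Minimal sketch (not the repository's code): LoRA fine-tuning with peft + Trainer.
# Hyperparameters and target module names are hypothetical, chosen for DistilBERT.


def compute_metrics(eval_pred):
    """Accuracy from (logits, labels); accepts nested lists or NumPy arrays."""
    logits, labels = eval_pred
    predictions = [max(range(len(row)), key=lambda i: row[i]) for row in logits]
    correct = sum(int(pred == int(label)) for pred, label in zip(predictions, labels))
    return {"accuracy": correct / len(labels)}


def train_lora(tokenized, tokenizer, model, output_dir="sst2-lora"):
    # Imported here so compute_metrics can be used without peft/transformers.
    from peft import LoraConfig, TaskType, get_peft_model
    from transformers import DataCollatorWithPadding, Trainer, TrainingArguments

    lora_config = LoraConfig(
        task_type=TaskType.SEQ_CLS,         # sequence classification task
        r=8,                                # rank of the A/B update matrices
        lora_alpha=16,                      # update is scaled by lora_alpha / r
        lora_dropout=0.1,
        target_modules=["q_lin", "v_lin"],  # DistilBERT query/value projections
    )
    model = get_peft_model(model, lora_config)  # freezes the base weights
    model.print_trainable_parameters()

    training_args = TrainingArguments(
        output_dir=output_dir,
        learning_rate=1e-3,
        per_device_train_batch_size=16,
        per_device_eval_batch_size=32,
        num_train_epochs=1,
        weight_decay=0.01,
    )
    trainer = Trainer(
        model=model,
        args=training_args,
        train_dataset=tokenized["train"],
        eval_dataset=tokenized["validation"],
        data_collator=DataCollatorWithPadding(tokenizer=tokenizer),  # pads each batch
        compute_metrics=compute_metrics,
    )
    trainer.train()
    print(trainer.evaluate())          # metrics dict, including "eval_accuracy"
    model.save_pretrained(output_dir)  # saves the adapter, not a full model copy
    return trainer
```

With the dataset, tokenizer, and model from the tokenization step, `trainer = train_lora(tokenized, tokenizer, model)` runs one epoch and writes the adapter to `sst2-lora/`. Passing padding to `DataCollatorWithPadding` rather than padding at tokenization time keeps each batch only as long as its longest sentence.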

After training, you can use the trained LoRA-adapted model for inference on new text inputs. To do this:

- Load the trained LoRA-adapted model as demonstrated in the code.
- Tokenize your input text using the model's tokenizer.
- Pass the tokenized input to the model for sentiment classification.
- The model predicts whether the input text has a positive or negative sentiment.
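The inference steps above can be sketched as follows. The sketch assumes that the adapter was saved to a local directory with `save_pretrained`, on top of a two-label `distilbert-base-uncased` base model. The directory name, the label mapping, and the helper names `load_adapted_model`, `logits_to_labels`, and `predict_sentiment` are hypothetical. `PeftModel.from_pretrained` attaches the saved LoRA weights to a freshly loaded base model.

```python
# Minimal sketch (not the repository's code): reload a LoRA adapter and classify text.
# Assumed: adapter saved with save_pretrained() on top of "distilbert-base-uncased".

ID2LABEL = {0: "negative", 1: "positive"}  # hypothetical SST-2 label mapping


def logits_to_labels(logits, id2label=ID2LABEL):
    """Pick the highest-scoring class for each row of logits."""
    return [id2label[max(range(len(row)), key=lambda i: row[i])] for row in logits]


def load_adapted_model(adapter_dir, base_checkpoint="distilbert-base-uncased"):
    # Imported here so logits_to_labels works without peft/transformers installed.
    from peft import PeftModel
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(base_checkpoint)
    base_model = AutoModelForSequenceClassification.from_pretrained(
        base_checkpoint, num_labels=len(ID2LABEL)
    )
    model = PeftModel.from_pretrained(base_model, adapter_dir)  # attach LoRA weights
    model.eval()  # disable dropout for inference
    return model, tokenizer


def predict_sentiment(texts, model, tokenizer):
    import torch

    # Tokenize all texts as one padded batch and move it to the model's device.
    inputs = tokenizer(texts, padding=True, truncation=True, return_tensors="pt")
    inputs = {name: tensor.to(model.device) for name, tensor in inputs.items()}
    with torch.no_grad():
        logits = model(**inputs).logits
    return logits_to_labels(logits.tolist())
```

For example, `model, tokenizer = load_adapted_model("sst2-lora")` followed by `predict_sentiment(["A gripping, beautifully acted film.", "Dull and far too long."], model, tokenizer)` returns one label per sentence. Keeping the label mapping in a pure function makes it easy to reuse the same post-processing for logits coming from any source.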