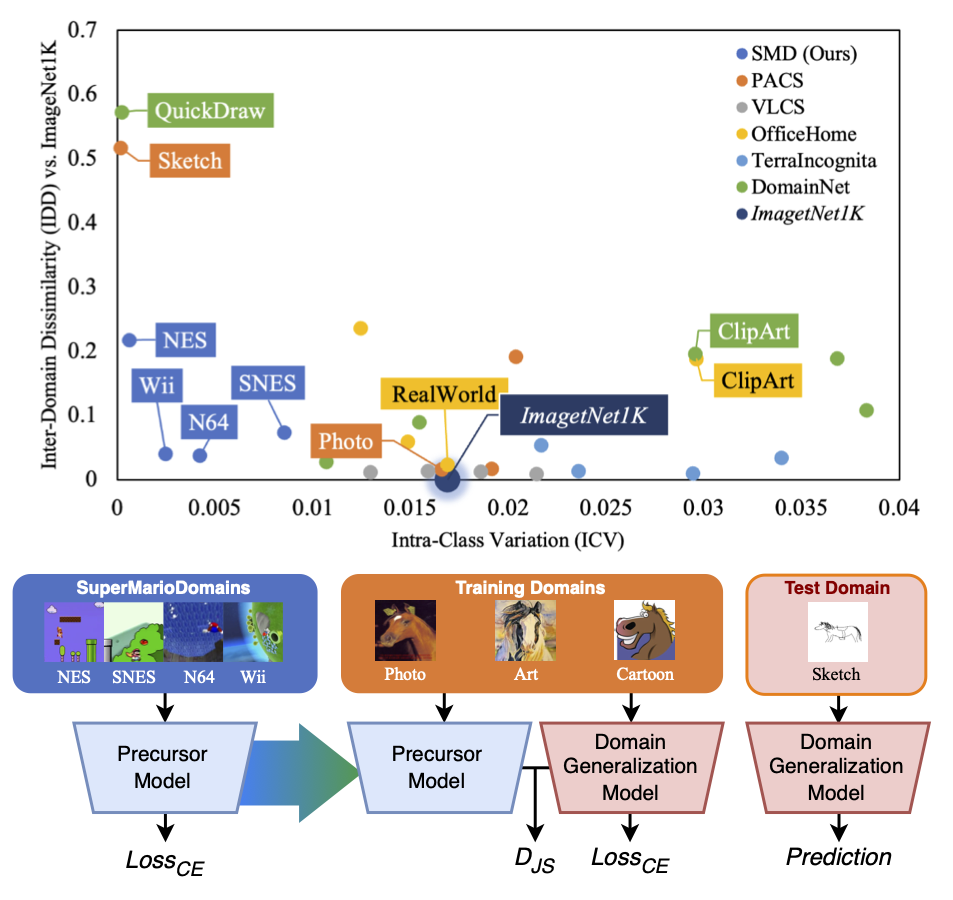

SMD-SMOS: Grounding Stylistic Domain Generalization with Quantitative Domain Shift Measures and Synthetic Scene Images (CVPR Workshop 2024)

Official implementation of Grounding Stylistic Domain Generalization with Quantitative Domain Shift Measures and Synthetic Scene Images (Best Paper at the 3rd VDU Workshop @ CVPR 2024).

All reported experiments were performed on one Nvidia A100 GPU with CUDA 11.7+.

    gdown==4.7.1
    numpy==1.23.5
    opencv_python==4.7.0.72
    Pillow==10.4.0
    prettytable==3.10.2
    sconf==0.2.5
    tensorboardX==2.6
    torch==2.0.1
    torchvision==0.15.2
    git+https://github.com/openai/CLIP.git
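Before training, it can help to confirm that the installed packages match the pins listed above. The following is a minimal sketch, not part of the repository: `PINS`, `installed_version`, and `find_mismatches` are hypothetical names, and the comparison deliberately ignores local build tags such as `+cu117` that PyTorch wheels often carry.

```python
from importlib import metadata

# Pins copied from the requirements list above. CLIP is installed from git
# without a version pin, so it is left out of this check.
PINS = {
    "gdown": "4.7.1",
    "numpy": "1.23.5",
    "opencv_python": "4.7.0.72",
    "Pillow": "10.4.0",
    "prettytable": "3.10.2",
    "sconf": "0.2.5",
    "tensorboardX": "2.6",
    "torch": "2.0.1",
    "torchvision": "0.15.2",
}


def installed_version(name):
    """Return the installed version string, or None if the package is absent."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None


def find_mismatches(pins, lookup=installed_version):
    """Map each package that deviates from its pin to a (pinned, found) tuple."""
    mismatches = {}
    for name, pinned in pins.items():
        found = lookup(name)
        # Strip local build tags (the part after "+") before comparing.
        if found is None or found.split("+")[0] != pinned:
            mismatches[name] = (pinned, found)
    return mismatches


if __name__ == "__main__":
    for name, (pinned, found) in find_mismatches(PINS).items():
        print(f"{name}: pinned {pinned}, found {found or 'not installed'}")
```

The `lookup` parameter exists only so the check can be exercised without touching the real environment; by default it queries the installed distributions.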

PyTorch precursor checkpoints pre-trained with SMD and DomainNet are available at Google Drive and HuggingFace.

By default, the precursors are placed under precursor/.

Make sure you have the proper DG benchmark datasets placed under datadir/.
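A quick pre-flight check of this layout might look like the sketch below. It is illustrative only: `missing_paths` is a hypothetical helper, and `datadir/PACS` is an assumed sub-folder name for the PACS benchmark that may differ in your setup.

```python
from pathlib import Path


def missing_paths(root, expected):
    """Return the entries of `expected` that do not exist under `root`."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).exists()]


if __name__ == "__main__":
    # "precursor" and "datadir" come from the text above; "datadir/PACS" is an
    # assumed location for the PACS benchmark and may need adjusting.
    for rel in missing_paths(".", ["precursor", "datadir", "datadir/PACS"]):
        print(f"missing: {rel}")
```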

To run the default SMOS+ pipeline over PACS, you may use:

    python3 train_smos.py "test_run" --data_dir "datadir/" \
        --work_dir "./output/" \
        --project_name "SMOS_PACS" \
        --algorithm "SMOS_JS" \
        --dataset "PACS" \
        --ld_KL 0.15 \
        --lr 3e-5 \
        --steps 5001 --model_save 5000 \
        --resnet_dropout 0.0 \
        --weight_decay 0.0 \
        --checkpoint_freq 200 \
        --smos_pre_featurizer_pretrained True \
        --smos_pre_featurizer_path "./precursor/SMD_PT_4800.pth"

Alternatively, SMOS- uses a precursor pretrained on SMD from scratch. To run it, change the precursor path to:

    --smos_pre_featurizer_path "./precursor/SMD_Scratch_4800.pth"

Please find train_smos_PACS_pt.sh for a sample parameter sweeping script.
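As a rough illustration of what such a sweep involves, the sketch below generates one `train_smos.py` command per hyperparameter combination. It is not the contents of `train_smos_PACS_pt.sh`: the function `sweep_commands`, the run-naming scheme, and the example grid are all assumptions, and only the flag names and default values are taken from the SMOS+ command shown earlier.

```python
import itertools
import shlex

# Flags shared by every run, copied from the SMOS+ PACS command in this README.
BASE_ARGS = [
    "--data_dir", "datadir/",
    "--work_dir", "./output/",
    "--project_name", "SMOS_PACS",
    "--algorithm", "SMOS_JS",
    "--dataset", "PACS",
    "--steps", "5001", "--model_save", "5000",
    "--resnet_dropout", "0.0",
    "--weight_decay", "0.0",
    "--checkpoint_freq", "200",
    "--smos_pre_featurizer_pretrained", "True",
    "--smos_pre_featurizer_path", "./precursor/SMD_PT_4800.pth",
]


def sweep_commands(ld_kl_values, lr_values):
    """Yield one shell command string per (ld_KL, lr) combination."""
    for ld_kl, lr in itertools.product(ld_kl_values, lr_values):
        run_name = f"sweep_kl{ld_kl}_lr{lr}"  # hypothetical naming scheme
        args = ["python3", "train_smos.py", run_name, *BASE_ARGS,
                "--ld_KL", str(ld_kl), "--lr", str(lr)]
        yield shlex.join(args)


if __name__ == "__main__":
    # Illustrative grid only; apart from the defaults 0.15 and 3e-5, these
    # values are not taken from the authors' search space.
    for cmd in sweep_commands([0.1, 0.15, 0.2], [1e-5, 3e-5]):
        print(cmd)
```

Printing the commands rather than executing them keeps the sketch safe to run anywhere; the output can be piped to a shell or a job scheduler once the grid looks right.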

Our synthetic precursor training dataset SMD is available here. By default, the SMD domain folders are placed under datadir/smd/.

To train a ResNet50 precursor feature extractor on all 4 domains of SMD using ERM:

    python3 train_precursor.py "SMD_ERM_pre" --data_dir "datadir/" \
        --work_dir "./output_precursor/" \
        --project_name "SMD_precursor" \
        --algorithm "ERM" \
        --dataset "SMD" \
        --ld_KL 0.0 \
        --lr 1e-5 \
        --steps 5001 --model_save 2000 \
        --resnet_dropout 0.0 \
        --weight_decay 0.0 \
        --checkpoint_freq 200 \
        --pretrained True \
        --smos_pre_featurizer_pretrained False

To train a precursor from scratch instead, set --pretrained False.

If you find our work useful, please cite it as:

    @InProceedings{Luo_2024_CVPR,
        author    = {Luo, Yiran and Feinglass, Joshua and Gokhale, Tejas and Lee, Kuan-Cheng and Baral, Chitta and Yang, Yezhou},
        title     = {Grounding Stylistic Domain Generalization with Quantitative Domain Shift Measures and Synthetic Scene Images},
        booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops},
        month     = {June},
        year      = {2024},
        pages     = {7303-7313}
    }

This project is based on DomainBed (MIT license) and MIRO (MIT license).