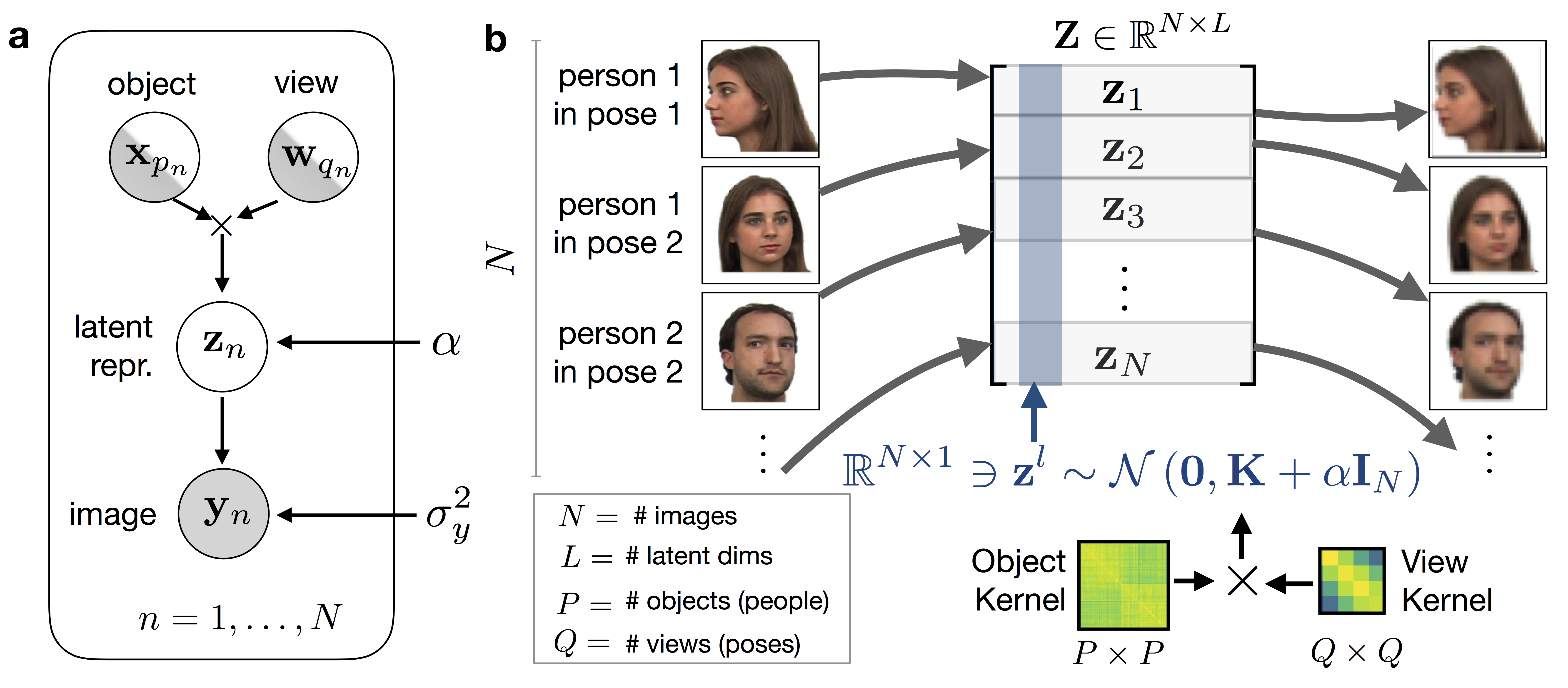

Code accompanying the paper Gaussian Process Prior Variational Autoencoders (GPPVAE) [1]. The implementation in this repository uses PyTorch.

[1] Casale FP, Dalca AV, Saglietti L, Listgarten J, Fusi N. Gaussian Process Prior Variational Autoencoders, 32nd Conference on Neural Information Processing Systems, 2018, Montreal, Canada.

The dependencies can be installed using Anaconda:

conda create -n gppvae python=3.6

source activate gppvae

conda install -y numpy scipy h5py matplotlib dask pandas

conda install -y pytorch=0.4.1 -c soumith

conda install -y torchvision=0.2.1

The face dataset is available at https://wiki.cnbc.cmu.edu/Face_Place. The data can be preprocessed as follows:

cd GPPVAE/pysrc/faceplace

python preprocess_data.py

When training the VAE, plots and weights are saved to the specified output directory at a fixed interval of epochs, which can be set with the argument epoch_cb.

python train_vae.py --outdir ./out/vae

For optimal performance, the autoencoder parameters of the GPPVAE model should be initialized to those of a pretrained VAE (see above). This can be done by reusing the output of the VAE training command. For example, if the VAE results are stored in ./out/vae and the VAE was trained for 5000 epochs, one can use:

python train_gppvae.py --outdir ./out/gppvae --vae_cfg ./out/vae/vae.cfg.p --vae_weights ./out/vae/weights.04999.pt

If you encounter any issues, please report them.
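In the GPPVAE command above, a 5000-epoch VAE run corresponds to the file weights.04999.pt. This suggests that epochs are counted from zero and that the epoch index is zero-padded to five digits. The sketch below builds the command for a different number of epochs under that assumption. The helper names `checkpoint_name` and `gppvae_command` are hypothetical and not part of this repository, and whether a checkpoint exists for a given epoch depends on epoch_cb, so check the output directory before relying on the result.

```python
import posixpath


def checkpoint_name(epochs_trained, width=5):
    """Return the assumed weights file name for the last trained epoch.

    Assumption (inferred from the README example, not from the code):
    epochs are zero-based and the index is zero-padded to `width` digits,
    so 5000 epochs corresponds to weights.04999.pt.
    """
    if epochs_trained < 1:
        raise ValueError("epochs_trained must be at least 1")
    last_epoch = epochs_trained - 1
    return f"weights.{last_epoch:0{width}d}.pt"


def gppvae_command(vae_dir, epochs_trained, outdir="./out/gppvae"):
    """Assemble the train_gppvae.py call as a list of arguments."""
    return [
        "python", "train_gppvae.py",
        "--outdir", outdir,
        "--vae_cfg", posixpath.join(vae_dir, "vae.cfg.p"),
        "--vae_weights", posixpath.join(vae_dir, checkpoint_name(epochs_trained)),
    ]


if __name__ == "__main__":
    # Print the command for a VAE trained for 5000 epochs in ./out/vae.
    print(" ".join(gppvae_command("./out/vae", 5000)))
```

The command is returned as a list so it can be passed directly to `subprocess.run` without shell quoting.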

This project is licensed under the Apache License (Version 2.0, January 2004); see the LICENSE file for details.

If you use any part of this code in your research, please cite our paper:

@article{casale2018gaussian,
  title={Gaussian Process Prior Variational Autoencoders},
  author={Casale, Francesco Paolo and Dalca, Adrian V and Saglietti, Luca and Listgarten, Jennifer and Fusi, Nicolo},
  journal={32nd Conference on Neural Information Processing Systems},
  year={2018}
}