PyTorch implementation of bidirectional generative adversarial network (BiGAN, a.k.a. ALI) trained using Wasserstein distance (see WGAN and WGAN-GP). The code has been tested in a conda environment with Python 3 and PyTorch >= 1.0.

This repository contains code for training BiGAN on SVHN, CIFAR-10 and Celeba datasets. Our implementation is different from the original BiGAN/ALI implementation in the following ways:

- We normalize pixel values to [-1, 1].

- Our training objective is Wasserstein distance, not Jenson-Shannon divergence. Our model hence has a critic network instead of a discriminator network.

- The critic network does NOT use normalization layers (batch norm, instance norm, etc.). We found that training fails if we incorporate normalization into the critic network.

- We apply gradient penalty to stablize training.

- Update the loading and saving paths.

- Check (and update) the hyperparameters.

- Train on SVHN

python ./wali_svhn.py- Train on CIFAR-10

python ./wali_cifar10.py- Train on Celeba

python ./wali_celeba.py- Interpolate between two randomly generated images

python ./interpolate.py --ckpt=[model path] --n=[number of interpolations] --save-path=[saving path]All models are trained using default hyperparameter settings for 20,000 iterations. Note that the network architectures and training procedures are not carefully optimized for best performance.

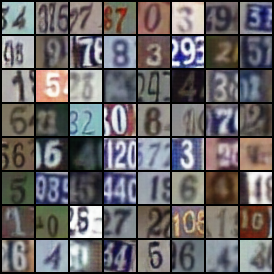

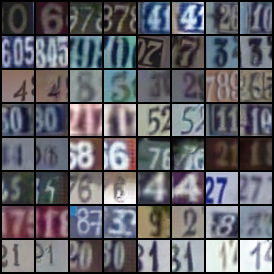

- SVHN (32 x 32)

| Generation | Reconstruction |

|---|---|

|

|

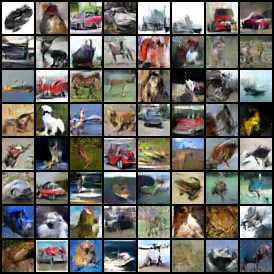

- CIFAR-10 (32 x 32)

| Generation | Reconstruction |

|---|---|

|

|

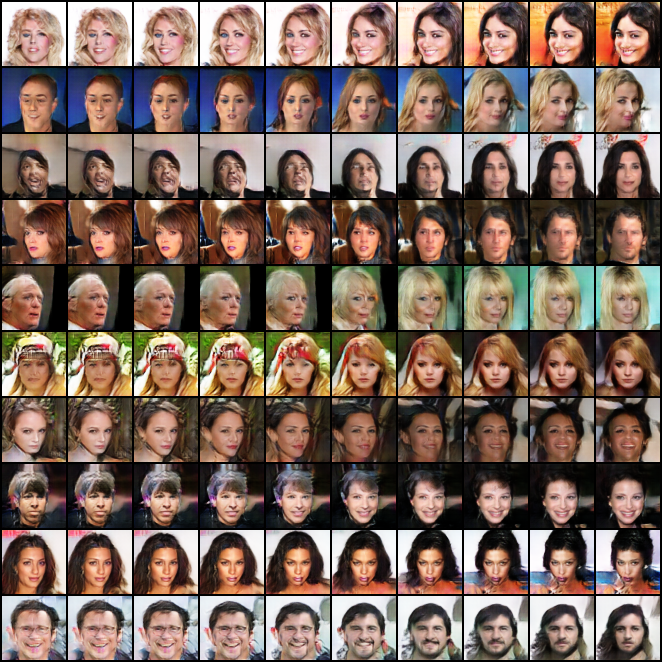

- Celeba (aligned, 64 x 64)

| Generation | Reconstruction |

|---|---|

|

|

We finally show the interpolation results for ten sets of randomly generated images.

Fangzhou Mu ([email protected])

- BiGAN https://github.com/jeffdonahue/bigan

- ALI https://github.com/IshmaelBelghazi/ALI

- WGAN https://github.com/martinarjovsky/WassersteinGAN

- WGAN-GP https://github.com/igul222/improved_wgan_training

@inproceedings{donahue2016adversarial,

title={Adversarial feature learning},

author={Donahue, Jeff and Kr{\"a}henb{\"u}hl, Philipp and Darrell, Trevor},

booktitle={International Conference on Learning Representations (ICLR)},

year={2017}

}

@inproceedings{dumoulin2016adversarially,

title={Adversarially learned inference},

author={Dumoulin, Vincent and Belghazi, Ishmael and Poole, Ben and Mastropietro, Olivier and Lamb, Alex and Arjovsky, Martin and Courville, Aaron},

booktitle={International Conference on Learning Representations (ICLR)},

year={2017}

}

@inproceedings{arjovsky2017wasserstein,

title={Wasserstein gan},

author={Arjovsky, Martin and Chintala, Soumith and Bottou, L{\'e}on},

booktitle={International Conference on Machine Learning (ICML)},

year={2017}

}

@inproceedings{gulrajani2017improved,

title={Improved training of wasserstein gans},

author={Gulrajani, Ishaan and Ahmed, Faruk and Arjovsky, Martin and Dumoulin, Vincent and Courville, Aaron C},

booktitle={Advances in neural information processing systems (NeurIPS)},

year={2017}

}