This work presents an extension of the initial OpenAI gym for robotics using ROS and Gazebo. A whitepaper about this work is available at https://arxiv.org/abs/1608.05742. Please use the following BibTeX entry to cite our work:

    @article{zamora2016extending,
      title={Extending the OpenAI Gym for robotics: a toolkit for reinforcement learning using ROS and Gazebo},
      author={Zamora, Iker and Lopez, Nestor Gonzalez and Vilches, Victor Mayoral and Cordero, Alejandro Hernandez},
      journal={arXiv preprint arXiv:1608.05742},
      year={2016}
    }

gym-gazebo is a complex piece of software for roboticists that brings together simulation tools, robot middlewares (ROS, ROS 2), machine learning and reinforcement learning techniques to create an environment in which to benchmark and develop robot behaviors. Setting up gym-gazebo properly requires familiarity with these tools.

The code is available "as is" and is not currently supported by any specific organization. Community support is available here. Pull requests and contributions are welcome.

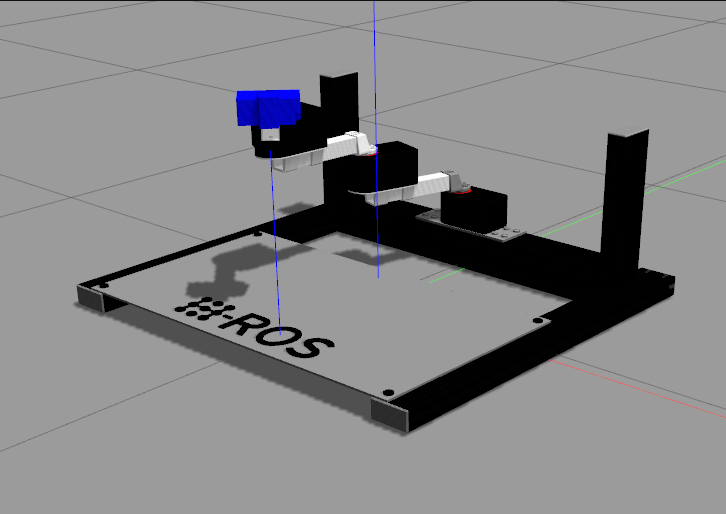

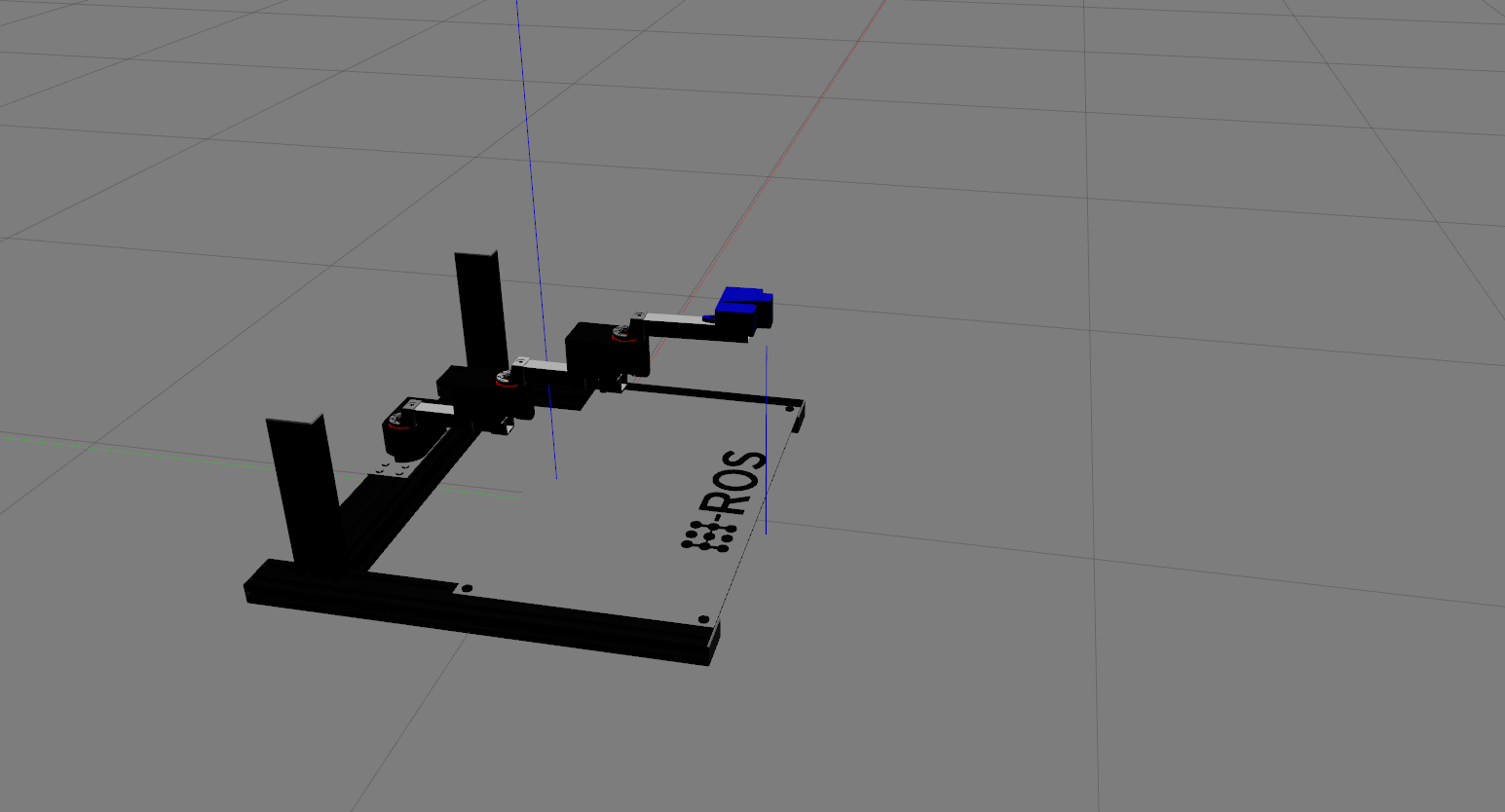

The following are some of the Gazebo environments maintained by the community using gym-gazebo. If you'd like to contribute and maintain an additional environment, submit a pull request with the corresponding addition.

| Name | Middleware | Description | Observation Space | Action Space | Reward range |
|---|---|---|---|---|---|
| GazeboCircuit2TurtlebotLidar-v0 | ROS | A simple circuit with straight tracks and 90 degree turns. Highly discretized LIDAR readings are used to train the Turtlebot. Scripts implementing Q-learning and Sarsa can be found in the examples folder. | | | |
| GazeboCircuitTurtlebotLidar-v0 | ROS | A more complex maze with high contrast colors between the floor and the walls. Lidar is used as an input to train the robot for its navigation in the environment. | TBD | | |
| GazeboMazeErleRoverLidar-v0 | ROS, APM | Deprecated | | | |
| GazeboErleCopterHover-v0 | ROS, APM | Deprecated | | | |
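To make the idea of training on "highly discretized LIDAR readings" with Q-learning more concrete, here is a minimal sketch. It is not the code from the examples folder: the function `discretize_scan`, the class `QLearner`, the sector count, the range cap and the hyperparameters are all assumptions chosen for illustration.

```python
import random
from collections import defaultdict


def discretize_scan(ranges, n_sectors=5, max_range=6.0):
    """Collapse a LIDAR scan into n_sectors small integers.

    Assumes the scan has at least n_sectors readings. Each sector keeps
    its closest reading, capped at max_range and truncated to whole
    meters. Readings left over after the last full sector are ignored.
    """
    sector_size = len(ranges) // n_sectors
    state = []
    for i in range(n_sectors):
        sector = ranges[i * sector_size:(i + 1) * sector_size]
        closest = min(min(sector), max_range)  # also caps inf readings
        state.append(int(closest))
    return tuple(state)  # hashable, so it can index a Q-table


class QLearner:
    """Tabular Q-learning with an epsilon-greedy policy (illustrative)."""

    def __init__(self, actions, alpha=0.2, gamma=0.9, epsilon=0.1):
        self.actions = list(actions)
        self.alpha = alpha      # learning rate
        self.gamma = gamma      # discount factor
        self.epsilon = epsilon  # exploration probability
        self.q = defaultdict(float)  # (state, action) -> value, default 0

    def choose_action(self, state):
        # Explore with probability epsilon, otherwise act greedily.
        if random.random() < self.epsilon:
            return random.choice(self.actions)
        return max(self.actions, key=lambda a: self.q[(state, a)])

    def learn(self, state, action, reward, next_state):
        # Q-learning bootstraps from the best action in the next state.
        best_next = max(self.q[(next_state, a)] for a in self.actions)
        td_target = reward + self.gamma * best_next
        td_error = td_target - self.q[(state, action)]
        self.q[(state, action)] += self.alpha * td_error
```

Sarsa differs only in the bootstrap term: instead of the maximum over next actions, it uses the value of the action the policy actually takes in the next state.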

The following table compiles a number of other environments that do not have community support.

Refer to INSTALL.md for installation instructions.

In the root directory of the repository:

    sudo pip install -e .

- Load the environment variables corresponding to the robot you want to launch. E.g. to load the Turtlebot:

      cd gym_gazebo/envs/installation
      bash turtlebot_setup.bash

  Note: all the setup scripts are available in `gym_gazebo/envs/installation`

- Run any of the examples available in `examples/`. E.g.:

      cd examples/turtlebot
      python circuit2_turtlebot_lidar_qlearn.py

To see what's going on in Gazebo during a simulation, simply run gazebo client:

    gzclient

Display a graph showing the current reward history by running the following script:

    cd examples/utilities
    python display_plot.py

HINT: use the `--help` flag for more options.
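As a rough illustration of where a reward history comes from, the sketch below runs episodes against a stand-in environment and smooths the per-episode totals. It makes several assumptions: `StubEnv` is a hypothetical placeholder that only mimics the classic Gym `reset()`/`step()` interface (with `step()` returning a 4-tuple), and `run_episodes` and `moving_average` are illustrative helpers, not part of gym-gazebo or of `display_plot.py`.

```python
class StubEnv:
    """Hypothetical stand-in with the classic Gym reset/step interface."""

    def __init__(self, episode_length=20):
        self.episode_length = episode_length
        self.steps = 0

    def reset(self):
        self.steps = 0
        return 0  # dummy observation

    def step(self, action):
        self.steps += 1
        reward = 1.0 if action == 0 else -1.0  # arbitrary toy reward
        done = self.steps >= self.episode_length
        return self.steps, reward, done, {}


def run_episodes(env, policy, n_episodes):
    """Run n_episodes and return the cumulative reward of each one."""
    history = []
    for _ in range(n_episodes):
        observation = env.reset()
        total, done = 0.0, False
        while not done:
            action = policy(observation)
            observation, reward, done, _info = env.step(action)
            total += reward
        history.append(total)
    return history


def moving_average(values, window):
    """Trailing mean over at most `window` of the most recent values."""
    averaged = []
    running = 0.0
    for i, value in enumerate(values):
        running += value
        if i >= window:
            running -= values[i - window]  # drop the value leaving the window
        averaged.append(running / min(i + 1, window))
    return averaged
```

With a real environment, `StubEnv` would be replaced by the environment object created by the example script, and the smoothed list could be passed to any plotting library.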

Sometimes, after ending or killing the simulation, `gzserver` and `rosmaster` stay running in the background. Make sure you end them before starting new tests.

We recommend creating an alias to kill those processes.

    echo "alias killgazebogym='killall -9 rosout roslaunch rosmaster gzserver nodelet robot_state_publisher gzclient'" >> ~/.bashrc
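If you prefer to clean up from a Python test script rather than from the shell, the same command can be issued with `subprocess`. This is only a sketch: `build_kill_command` and `kill_leftovers` are hypothetical helper names, the process list is copied from the alias above, and the code assumes `killall` is installed on the system.

```python
import subprocess

# Process names copied from the killgazebogym alias.
LEFTOVER_PROCESSES = (
    "rosout",
    "roslaunch",
    "rosmaster",
    "gzserver",
    "nodelet",
    "robot_state_publisher",
    "gzclient",
)


def build_kill_command(names=LEFTOVER_PROCESSES, signal=9):
    """Return the killall invocation as an argument list."""
    return ["killall", f"-{signal}", *names]


def kill_leftovers(dry_run=True):
    """Kill leftover simulation processes, or only report the command.

    With dry_run=True nothing is executed. killall exits with a non-zero
    status when some of the processes are not running, so the exit code
    is deliberately not checked.
    """
    command = build_kill_command()
    if not dry_run:
        subprocess.run(command, check=False)
    return command
```

Calling `kill_leftovers(dry_run=False)` before a new test run has the same effect as running the alias by hand.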