Linning Xu · Vasu Agrawal · William Laney · Tony Garcia · Aayush Bansal

Changil Kim · Samuel Rota Bulò · Lorenzo Porzi · Peter Kontschieder

Aljaž Božič · Dahua Lin · Michael Zollhöfer · Christian Richardt

ACM SIGGRAPH Asia 2023

| Scene | Version | Cameras | Positions | Images | 2K EXRs | 1K EXRs | 8K+ JPEGs | 4K JPEGs | 2K JPEGs | 1K JPEGs |
|---|---|---|---|---|---|---|---|---|---|---|
| apartment | v2 | 22 | 180 | 3,960 | 123 GB | 31 GB | 92 GB | 20 GB | 5 GB | 1.2 GB |
| kitchen | v2* | 19 | 318 | 6,024 | 190 GB | 48 GB | 142 GB | 29 GB | 8 GB | 1.9 GB |
| office1a | v1 | 9 | 85 | 765 | 24 GB | 6 GB | 15 GB | 3 GB | 1 GB | 0.2 GB |
| office1b | v2 | 22 | 71 | 1,562 | 49 GB | 13 GB | 35 GB | 7 GB | 2 GB | 0.4 GB |
| office2 | v1 | 9 | 233 | 2,097 | 66 GB | 17 GB | 46 GB | 9 GB | 2 GB | 0.5 GB |
| office_view1 | v2 | 22 | 126 | 2,772 | 87 GB | 22 GB | 63 GB | 14 GB | 4 GB | 0.8 GB |
| office_view2 | v2 | 22 | 67 | 1,474 | 47 GB | 12 GB | 34 GB | 7 GB | 2 GB | 0.5 GB |
| riverview | v2 | 22 | 48 | 1,008 | 34 GB | 8 GB | 24 GB | 5 GB | 2 GB | 0.4 GB |
| seating_area | v1 | 9 | 168 | 1,512 | 48 GB | 12 GB | 36 GB | 8 GB | 2 GB | 0.5 GB |
| table | v1 | 9 | 134 | 1,206 | 38 GB | 9 GB | 26 GB | 6 GB | 2 GB | 0.4 GB |
| workshop | v1 | 9 | 700 | 6,300 | 198 GB | 50 GB | 123 GB | 27 GB | 8 GB | 2.1 GB |
| raf_emptyroom | v2 | 22 | 365 | 8,030 | 252 GB | 63 GB | 213 GB | 45 GB | 12 GB | 2.5 GB |
| raf_furnishedroom | v2 | 22 | 154 | 3,388 | 106 GB | 27 GB | 90 GB | 19 GB | 5 GB | 1.1 GB |
| Total | | | | | 1,262 GB | 318 GB | 939 GB | 199 GB | 54 GB | 12.5 GB |

* v2 with 3 fewer cameras than standard configuration, i.e. only 19 cameras.


April 2024: The following two datasets accompany our paper Real Acoustic Fields (CVPR 2024):

- raf_emptyroom
- raf_furnishedroom

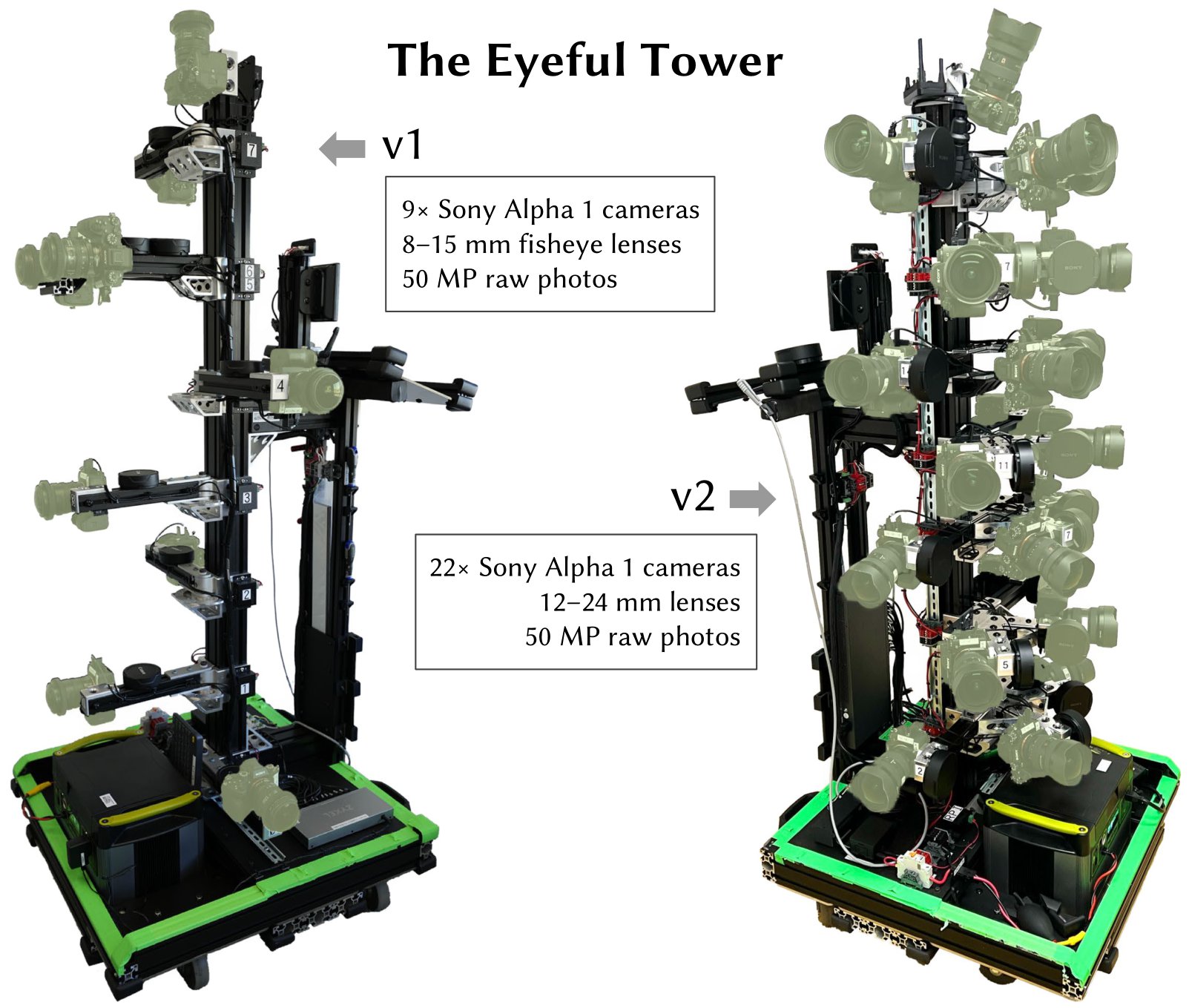

All images in the dataset were taken with either Eyeful Tower v1 or v2 (as specified in the overview table). Eyeful Tower v1 comprises 9 fisheye cameras, whereas Eyeful Tower v2 comprises 22 pinhole cameras (19 for “kitchen”).

The Eyeful Tower dataset is hosted on AWS S3, and can be explored with any browser or downloaded with standard software, such as wget or curl.

However, for the fastest, most reliable download, we recommend using the AWS command line interface (AWS CLI), see AWS CLI installation instructions.

Optional: Speed up downloading by increasing the number of concurrent downloads from 10 to 100:

aws configure set default.s3.max_concurrent_requests 100

aws s3 cp --recursive --no-sign-request s3://fb-baas-f32eacb9-8abb-11eb-b2b8-4857dd089e15/EyefulTower/apartment/images-jpeg-1k/ apartment/images-jpeg-1k/

Alternatively, use “sync” to avoid transferring existing files:

aws s3 sync --no-sign-request s3://fb-baas-f32eacb9-8abb-11eb-b2b8-4857dd089e15/EyefulTower/apartment/images-jpeg-1k/ apartment/images-jpeg-1k/

For those interested in experimenting with specific cameras, we recommend viewing the collage video first. This will help you identify which camera views you'd like to utilize. For example, for this apartment scene using the v2 capture rig, you might consider camera IDs 19, 20, 21 which are placed at the same height.
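As a rough sketch of how one might fetch only selected cameras, the following Python helper builds one `aws s3 sync` command per camera folder. It assumes that each camera's images sit in a subfolder named after the camera ID, as in the directory structure described in this README. The names `camera_sync_commands` and `download_cameras` are hypothetical and not part of any released tooling.

```python
import subprocess

# Bucket prefix taken from the download commands in this README.
BUCKET = "s3://fb-baas-f32eacb9-8abb-11eb-b2b8-4857dd089e15/EyefulTower"


def camera_sync_commands(scene, image_format, camera_ids):
    """Build one `aws s3 sync` command (as an argv list) per camera folder.

    Assumes the layout {scene}/{image_format}/{camera_id}/ (an assumption
    based on the directory structure described in this README).
    """
    commands = []
    for camera_id in camera_ids:
        subdir = f"{scene}/{image_format}/{camera_id}/"
        commands.append(
            ["aws", "s3", "sync", "--no-sign-request", f"{BUCKET}/{subdir}", subdir]
        )
    return commands


def download_cameras(scene, image_format, camera_ids):
    """Run the commands; requires the AWS CLI to be installed and on PATH."""
    for command in camera_sync_commands(scene, image_format, camera_ids):
        subprocess.run(command, check=True)


if __name__ == "__main__":
    # Cameras 19, 20 and 21 of the apartment scene, at 1K JPEG resolution.
    for command in camera_sync_commands("apartment", "images-jpeg-1k", [19, 20, 21]):
        print(" ".join(command))
```

Running the script only prints the commands; call `download_cameras` to actually execute them.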

for dataset in apartment kitchen office1a office1b office2 office_view1 office_view2 riverview seating_area table workshop; do
    mkdir -p "$dataset/images-jpeg-1k";
    aws s3 cp --recursive --no-sign-request "s3://fb-baas-f32eacb9-8abb-11eb-b2b8-4857dd089e15/EyefulTower/$dataset/images-jpeg-1k/" "$dataset/images-jpeg-1k/";
done

To download all scenes at all resolutions into the current directory:

aws s3 sync --no-sign-request s3://fb-baas-f32eacb9-8abb-11eb-b2b8-4857dd089e15/EyefulTower/ .

Each scene is organized following this structure:

apartment
│
├── apartment-final.pdf       # Metashape reconstruction report
├── cameras.json              # Camera poses in KRT format (see below)
├── cameras.xml               # Camera poses exported from Metashape
├── images-1k                 # HDR images at 1K resolution
│   ├── 10                    # First camera (bottom-most camera)
│   │   ├── 10_DSC0001.exr    # First image
│   │   ├── 10_DSC0010.exr    # Second image
│   │   ├── [...]             # More images
│   │   └── 10_DSC1666.exr    # Last image
│   ├── 11                    # Second camera
│   │   ├── 11_DSC0001.exr
│   │   ├── 11_DSC0010.exr
│   │   ├── [...]
│   │   └── 11_DSC1666.exr
│   ├── [...]                 # More cameras
│   └── 31                    # Last camera (top of tower)
│       ├── 31_DSC0001.exr
│       ├── 31_DSC0010.exr
│       ├── [...]
│       └── 31_DSC1666.exr
├── images-2k [...]           # HDR images at 2K resolution
├── images-jpeg [...]         # Full-resolution JPEG images
├── images-jpeg-1k [...]      # JPEG images at 1K resolution
├── images-jpeg-2k            # JPEG images at 2K resolution
│   ├── [10 ... 31]
│   ├── [10 ... 31].mp4       # Camera visualization
│   └── collage.mp4           # Collage of all cameras
├── images-jpeg-4k [...]      # JPEG images at 4K resolution
├── mesh.jpg                  # Mesh texture (16K×16K)
├── mesh.mtl                  # Mesh material file
├── mesh.obj                  # Mesh in OBJ format
└── splits.json               # Training/testing splits

- High dynamic range images merged from 9-photo raw exposure brackets.

- Downsampled to “1K” (684×1024 pixels) or “2K” resolution (1368×2048 pixels).

- Color space: DCI-P3 (linear)

- Stored as EXR images with uncompressed 32-bit floating-point numbers.

- All image filenames are prefixed with the camera name, e.g. `17_DSC0316.exr`.

- Images with filenames ending in the same number are captured at the same time.

- Some images may be missing, e.g. because blurry images or images showing the capture operator were removed.
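The naming convention above can be used to group images that were captured at the same time. The following is a minimal sketch; the regular expression is an assumption based on the example filenames in this README, and `group_by_capture` is a hypothetical helper name.

```python
import re
from collections import defaultdict

# Matches names such as "17_DSC0316.exr": camera name, underscore, then a
# stem that ends in the shot number. The exact pattern is an assumption
# based on the example filenames in this README.
FILENAME_PATTERN = re.compile(r"^(?P<camera>[^_]+)_\D*(?P<number>\d+)\.\w+$")


def group_by_capture(filenames):
    """Map shot number -> {camera name: filename} for images taken together."""
    groups = defaultdict(dict)
    for name in filenames:
        match = FILENAME_PATTERN.match(name)
        if match is None:
            continue  # skip files that do not follow the naming scheme
        groups[int(match["number"])][match["camera"]] = name
    return dict(groups)


files = ["10_DSC0001.exr", "11_DSC0001.exr", "10_DSC0010.exr"]
groups = group_by_capture(files)
print(sorted(groups))  # shot numbers found in the list
print(groups[1])       # all cameras that captured shot 1
```

Because some images may be missing, a group can contain fewer entries than the rig has cameras.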

import os, cv2, numpy as np

# Enable OpenEXR support in OpenCV (https://github.com/opencv/opencv/issues/21326).

# This environment variable needs to be defined before the first EXR image is opened.

os.environ["OPENCV_IO_ENABLE_OPENEXR"] = "1"

# Read an EXR image using OpenCV.

img = cv2.imread("apartment/images-2k/17/17_DSC0316.exr", cv2.IMREAD_UNCHANGED)

# Apply white-balance scaling (Note: OpenCV uses BGR colors).

coeffs = np.array([0.726097, 1.0, 1.741252]) # apartment [RGB]

img = np.einsum("ijk,k->ijk", img, coeffs[::-1])

# Tonemap using sRGB curve.

linear_part = 12.92 * img

exp_part = 1.055 * (np.maximum(img, 0.0) ** (1 / 2.4)) - 0.055

img = np.where(img <= 0.0031308, linear_part, exp_part)

# Write resulting image as JPEG.

img = np.clip(255 * img, 0.0, 255.0).astype(np.uint8)

cv2.imwrite("apartment-17_DSC0316.jpg", img, params=[cv2.IMWRITE_JPEG_QUALITY, 100])

- We provide JPEG images at four resolution levels:
  - `images-jpeg/`: 5784 × 8660 = 50.1 megapixels (full original image resolution)
  - `images-jpeg-4k/`: 2736 × 4096 = 11.2 megapixels
  - `images-jpeg-2k/`: 1368 × 2048 = 2.8 megapixels
  - `images-jpeg-1k/`: 684 × 1024 = 0.7 megapixels

- The JPEG images are white-balanced and tone-mapped versions of the HDR images. See the code above for the details.

- Each scene uses white-balance settings derived from a ColorChecker, which individually scale the RGB channels as follows:

  | Scene | RGB scale factors |
  |---|---|
  | apartment | 0.726097, 1.0, 1.741252 |
  | kitchen | 0.628143, 1.0, 2.212346 |
  | office1a | 0.740846, 1.0, 1.750224 |
  | office1b | 0.725535, 1.0, 1.839938 |
  | office2 | 0.707729, 1.0, 1.747833 |
  | office_view1 | 1.029089, 1.0, 1.145235 |
  | office_view2 | 0.939620, 1.0, 1.273549 |
  | riverview | 1.077719, 1.0, 1.145992 |
  | seating_area | 0.616093, 1.0, 2.426888 |
  | table | 0.653298, 1.0, 2.139514 |
  | workshop | 0.709929, 1.0, 1.797705 |
  | raf_emptyroom | 0.718776, 1.0, 1.787020 |
  | raf_furnishedroom | 0.721494, 1.0, 1.793423 |
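To apply the per-scene factors programmatically, the conversion can be wrapped in a small function. This is a sketch under assumptions: the helper name `hdr_to_srgb8` and the `WHITE_BALANCE` dictionary are hypothetical, only a few of the scenes listed above are included, and the input is expected in RGB channel order.

```python
import numpy as np

# White-balance factors [R, G, B] copied from the list above (subset shown).
WHITE_BALANCE = {
    "apartment": (0.726097, 1.0, 1.741252),
    "kitchen": (0.628143, 1.0, 2.212346),
    "riverview": (1.077719, 1.0, 1.145992),
}


def hdr_to_srgb8(hdr_rgb, scene):
    """White-balance and tone-map a linear HDR image (H x W x 3, RGB) to 8-bit."""
    hdr_rgb = np.asarray(hdr_rgb, dtype=np.float32)
    balanced = hdr_rgb * np.asarray(WHITE_BALANCE[scene], dtype=np.float32)
    balanced = np.maximum(balanced, 0.0)  # avoid NaNs from negative values
    # Piecewise sRGB transfer curve: linear segment near black, power law above.
    srgb = np.where(
        balanced <= 0.0031308,
        12.92 * balanced,
        1.055 * balanced ** (1 / 2.4) - 0.055,
    )
    return np.clip(255 * srgb, 0.0, 255.0).astype(np.uint8)


gray = np.full((2, 2, 3), 0.18, dtype=np.float32)  # synthetic mid-gray image
print(hdr_to_srgb8(gray, "apartment")[0, 0])
```

Images read with OpenCV are in BGR order, so flip the channels first (for example `img[..., ::-1]`) and flip them back before writing with `cv2.imwrite`.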

This JSON file has the basic structure `{"KRT": [<one object per image>]}`, where each image object has the following properties:

- `width`: image width, in pixels (usually 5784)
- `height`: image height, in pixels (usually 8660)
- `cameraId`: filename component for this image (e.g. `"0/0_REN0001"`); to get a complete path, use `"{scene}/{imageFormat}/{cameraId}.{extension}"` for:
  - `scene`: any of the 11 scene names,
  - `imageFormat`: one of `"images-2k"`, `"images-jpeg-2k"`, `"images-jpeg-4k"`, or `"images-jpeg"`
  - `extension`: file extension, `jpg` for JPEGs, `exr` for EXR images (HDR)
- `K`: 3×3 intrinsic camera matrix for full-resolution image (column-major)
- `T`: 4×4 world-to-camera transformation matrix (column-major)
- `distortionModel`: lens distortion model used:
  - `"Fisheye"` for fisheye images (Eyeful v1)
  - `"RadialAndTangential"` for pinhole images (Eyeful v2)
- `distortion`: lens distortion coefficients for use with OpenCV’s `cv2.undistort` function
  - fisheye images (Eyeful v1): `[k1, k2, k3, _, _, _, p1, p2]`
    - Note: The projection model is an ideal (equidistant) fisheye model.
  - pinhole images (Eyeful v2): `[k1, k2, p1, p2, k3]` (same order as `cv2.undistort`)
- `frameId`: position index during capture (consecutive integers)
  - all images taken at the same time share the same `frameId`
- `sensorId`: Metashape sensor ID (aka camera) of this image
  - all images taken by the same camera share the same `sensorId`
- `cameraMasterId` (optional): Metashape camera ID for the master camera (in rig calibration) at this position/frame
  - all images taken at the same time share the same `cameraMasterId`
- `sensorMasterId` (optional): Metashape sensor ID for the master camera in rig calibration
  - should have the same value for all cameras except the master camera (usually `"6"` for Eyeful v1, `"13"` for Eyeful v2).

World coordinate system: right-handed, y-up, y=0 is ground plane, units are in meters.
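A minimal sketch of reading this file with the Python standard library follows. It assumes that `K` and `T` are stored either as flat lists or as nested lists of columns (both are handled), and it rescales the full-resolution intrinsics by simple per-axis scaling, ignoring any half-pixel offset convention. All function names are hypothetical.

```python
import json


def column_major_to_rows(values, size):
    """Convert a column-major matrix (flat or nested list) into a list of rows."""
    if isinstance(values[0], list):
        flat = [v for column in values for v in column]  # nested: one list per column
    else:
        flat = list(values)
    return [[flat[col * size + row] for col in range(size)] for row in range(size)]


def image_path(scene, image_format, camera_id):
    """Build "{scene}/{imageFormat}/{cameraId}.{extension}" as described above."""
    extension = "jpg" if "jpeg" in image_format else "exr"
    return f"{scene}/{image_format}/{camera_id}.{extension}"


def scale_intrinsics(K, full_size, target_size):
    """Rescale a full-resolution K (list of rows) to a (width, height) target size."""
    sx = target_size[0] / full_size[0]
    sy = target_size[1] / full_size[1]
    return [
        [K[0][0] * sx, K[0][1] * sx, K[0][2] * sx],
        [K[1][0] * sy, K[1][1] * sy, K[1][2] * sy],
        list(K[2]),
    ]


def load_cameras(json_text):
    """Parse the text of cameras.json into a list of per-image dictionaries."""
    cameras = []
    for entry in json.loads(json_text)["KRT"]:
        cameras.append({
            "cameraId": entry["cameraId"],
            "size": (entry["width"], entry["height"]),
            "K": column_major_to_rows(entry["K"], 3),
            "T": column_major_to_rows(entry["T"], 4),
        })
    return cameras
```

For example, `scale_intrinsics(camera["K"], camera["size"], (1368, 2048))` would give approximate intrinsics for the 2K images of a camera loaded with `load_cameras`.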

- Camera calibration data exported directly from Metashape, using its proprietary file format.

- Textured mesh in OBJ format, exported from Metashape and created from the full-resolution JPEG images.

- World coordinate system: right-handed, y-up, `y=0` is ground plane, units are in meters.

- Contains lists of images for training (`"train"`) and testing (`"test"`).

- All images of one camera are held out for testing: camera `5` for Eyeful v1, and camera `17` for Eyeful v2.
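The hold-out rule above can be reproduced from image identifiers alone. The sketch below assumes identifiers of the form `"{camera}/{filename}"`, like the `cameraId` values in `cameras.json`; it does not read `splits.json`, whose exact entry format is not described here, and the provided file remains the reference. The function name `split_by_camera` is hypothetical.

```python
def split_by_camera(camera_ids, test_camera):
    """Split image IDs such as "17/17_DSC0316" into train/test by camera name."""
    splits = {"train": [], "test": []}
    for camera_id in camera_ids:
        camera_name = camera_id.split("/")[0]  # folder name equals camera name
        key = "test" if camera_name == str(test_camera) else "train"
        splits[key].append(camera_id)
    return splits


ids = ["16/16_DSC0001", "17/17_DSC0001", "17/17_DSC0010", "18/18_DSC0001"]
splits = split_by_camera(ids, test_camera=17)  # camera 17 for Eyeful v2
print(len(splits["train"]), len(splits["test"]))
```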

- 3 Nov 2023 – initial dataset release

- 18 Jan 2024 – added “1K” resolution (684×1024 pixels) EXRs and JPEGs for small-scale experimentation.

- 19 Apr 2024 – added two rooms from Real Acoustic Fields (RAF) dataset: `raf_emptyroom` and `raf_furnishedroom`.

If you use any data from this dataset or any code released in this repository, please cite the VR-NeRF paper.

@InProceedings{VRNeRF,

author = {Linning Xu and

Vasu Agrawal and

William Laney and

Tony Garcia and

Aayush Bansal and

Changil Kim and

Rota Bulò, Samuel and

Lorenzo Porzi and

Peter Kontschieder and

Aljaž Božič and

Dahua Lin and

Michael Zollhöfer and

Christian Richardt},

title = {{VR-NeRF}: High-Fidelity Virtualized Walkable Spaces},

booktitle = {SIGGRAPH Asia Conference Proceedings},

year = {2023},

doi = {10.1145/3610548.3618139},

url = {https://vr-nerf.github.io},

}

Creative Commons Attribution-NonCommercial (CC BY-NC) 4.0, as found in the LICENSE file.