This is the first project of the Deep Reinforcement Learning nanodegree. The goal of the agent is to gather yellow bananas and avoid the blue ones. The environment has 4 discrete actions (the agent's movement, forward, backward turn left and right) and a continuous observation space with 37 values representing the speed and the ray-based perception of objects around the agent's forward direction.

Unity brain name: BananaBrain

Number of Visual Observations (per agent): 0

Vector Observation space type: continuous

Vector Observation space size (per agent): 37

Number of stacked Vector Observation: 1

Vector Action space type: discrete

Vector Action space size (per agent): 4

Vector Action descriptions: , , ,

For each yellow banana that is collected, the agent is given a reward of +1. The blue ones give -1 reward. We consider that the problem is solved if the agent receives an average reward (over 100 episodes) of at least +13.

The project has been developed using the Udacity's Lunar Landing project solution as a foundation.

In order to prepare the environment, follow the next steps after downloading this repository:

- Create a new environment:

- Linux or Mac:

conda create --name drlnd python=3.6 source activate drlnd- Windows:

conda create --name dqn python=3.6 activate drlnd

- Min install of OpenAI gym

- If using Windows,

- download swig for windows and add it the PATH of windows

- install Microsoft Visual C++ Build Tools .

- then run these commands

pip install gym pip install gym[classic_control] pip install gym[box2d]

- If using Windows,

- Install the dependencies under the folder python/

cd python

pip install .- Create an IPython kernel for the

drlndenvironment

python -m ipykernel install --user --name drlnd --display-name "drlnd"-

Download the Unity Environment (thanks to Udacity) which matches your operating system

-

Unzip the downloaded file and move it inside the project's root directory

-

Change the kernel of you environment to

drlnd -

Open the main.py file and change the path to the unity environment appropriately (banana_executable_path)

If you want to train the agent, execute the main.py file setting the is_training variable to True. Otherwise if you want to test your agent then set that variable to False. In case of reaching the goal the weights of the neural network will be stored in the checkpoint file in the root folder.

By default, the main.py file is prepared to test our model.

- report.pdf: A document that describes the details of the implementation and future proposals.

- agents/deep_q_network: the implemented agent using a deep q network architecture

- models/neural_network: the neural network model

- utils/replay_buffer: a class for handling the experience replay

- python/: needed files to run the unity environment

- main.py: Entry point to train or test the agent

- checkpoint.pth: Our model's weights (Solved in less than 800 episodes)

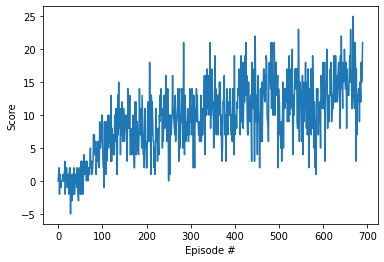

- Episode 100 Average Score: 1.52

- Episode 200 Average Score: 6.27

- Episode 300 Average Score: 8.84

- Episode 400 Average Score: 10.87

- Episode 500 Average Score: 11.69

- Episode 600 Average Score: 11.52

- Episode 700 Average Score: 13.79

- Episode 740 Average Score: 14.03

- Environment solved in 740 episodes! Average Score: 14.03

You can find an example of the trained agent here