Faster R-CNN for TensorFlow Keras, packaged as a library

Faster R-CNN is implemented here as a library that follows the TensorFlow Keras Model API as closely as possible. This consistent interface lets anyone already familiar with TensorFlow Keras use the API easily. To keep the API simple, only basic configuration options are available.

To make things easier for the user, the library also includes features such as automatic saving of the model and a CSV log during training, as well as automatic continuation of training. This is especially useful when running on a Google Colab GPU, where each session has a time limit.
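Automatic continuation of training can be pictured as reading the last logged epoch from the CSV file and restarting from the next one. The sketch below is only an assumption about how such a resume step might work, not the library's actual code; the function name `next_epoch_from_csv` and the `epoch` column are hypothetical.

```python
import csv
import os


def next_epoch_from_csv(csv_path):
    """Return the epoch index to resume from, based on a training log.

    Assumes (hypothetically) one row per completed epoch and an 'epoch' column.
    """
    # No log yet: start from the first epoch.
    if not os.path.exists(csv_path):
        return 0
    with open(csv_path, newline="") as f:
        rows = list(csv.DictReader(f))
    # Header only, no completed epochs.
    if not rows:
        return 0
    # Resume right after the last completed epoch.
    return int(rows[-1]["epoch"]) + 1
```

With a log containing epochs 0 and 1, this sketch would resume at epoch 2, and with no log file it would start at epoch 0.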

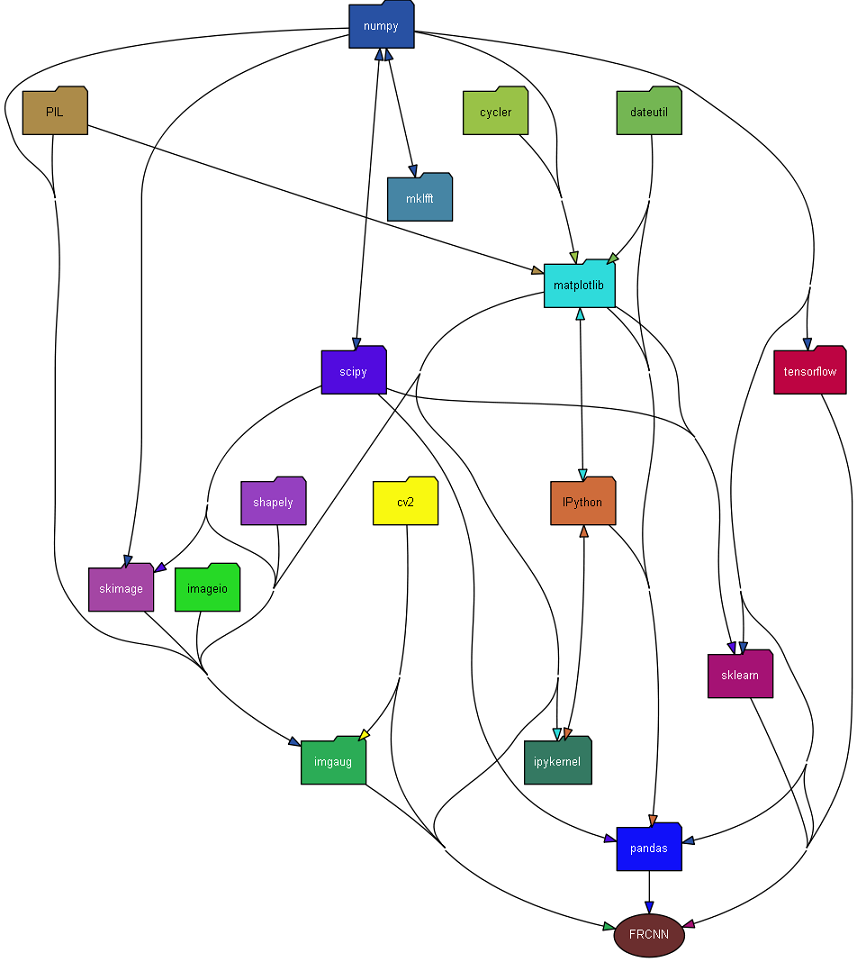

The dependencies of the library are shown in the figure (created via pydeps using the command `pydeps FRCNN.py --max-bacon=4 --cluster`).

- Install prerequisites following instructions in "conda setup.txt"

- Clone this repo:

git clone https://github.com/eleow/tfKerasFRCNN.git

See _TrainAndTestFRCNN.py or _TrainAndTestFRCNN.ipynb for an end-to-end example of how to use the library.
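For orientation, the 'simple' annotation format described in the next paragraph stores one bounding box per line as `filepath,x1,y1,x2,y2,class_name`. The following is a minimal plain-Python sketch of reading such lines. It is not the library's `parseAnnotationFile`; the helper `parse_simple_line`, the sample paths, and the record layout are assumptions made for illustration.

```python
from collections import Counter


def parse_simple_line(line):
    """Split one 'simple' format line into a record (hypothetical layout)."""
    # rsplit keeps any commas inside the file path intact.
    filepath, x1, y1, x2, y2, class_name = line.strip().rsplit(",", 5)
    return {
        "filepath": filepath,
        "bbox": (int(x1), int(y1), int(x2), int(y2)),
        "class": class_name,
    }


# Made-up example lines, one bounding box per line.
lines = [
    "images/a.png,10,20,110,220,car",
    "images/a.png,30,40,80,90,person",
    "images/b.png,5,5,50,60,car",
]

records = [parse_simple_line(line) for line in lines]

# Number of boxes per class, and a class-name-to-index mapping.
classes_count = Counter(r["class"] for r in records)
class_mapping = {name: idx for idx, name in enumerate(sorted(classes_count))}
```

An image with several objects simply appears on several lines, once per box.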

If you use an annotation file in the 'simple' format (i.e. each line of the annotation file contains filepath,x1,y1,x2,y2,class_name), parsing it is as simple as:

from FRCNN import parseAnnotationFile

train_data, classes_count, class_mapping = parseAnnotationFile(annotation_train_path)

If you use the Pascal VOC dataset and want to filter for certain classes of interest:

from FRCNN import parseAnnotationFile

annotation_path = './Dataset VOC'

classes_of_interest = ['bicycle', 'bus', 'car', 'motorbike', 'person']

train_data, classes_count, class_mapping = parseAnnotationFile(annotation_path, mode='voc', filteredList=classes_of_interest)from FRCNN import viewAnnotatedImage

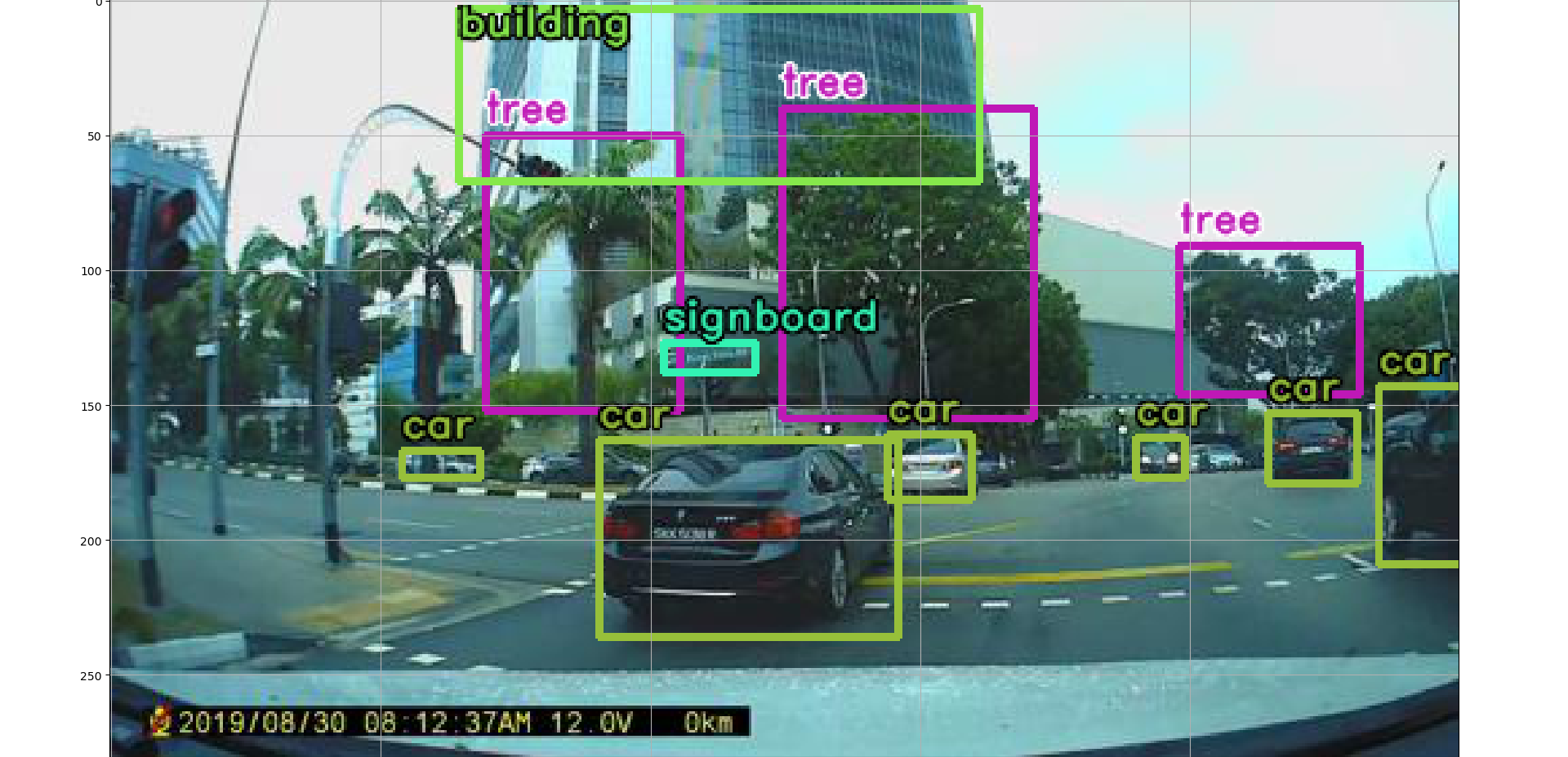

viewAnnotatedImage(annotation_train_path, 'path_to_image/image.png')You could create a FRCNN model with the default parameters used in the paper like this. Currently, only 'vgg' and 'resnet50' feature networks are supported.

from FRCNN import FRCNN

frcnn = FRCNN(base_net_type='vgg', num_classes = len(classes_count))

frcnn.compile()

Alternatively, specify the parameters:

frcnn = FRCNN(input_shape=(None,None,3), num_anchors=num_anchors, num_rois=num_rois, base_net_type=base_net_type, num_classes = len(classes_count))

frcnn.compile()

To train, first create the iterator for your dataset:

from FRCNN import FRCNNGenerator, inspect, preprocess_input

train_it = FRCNNGenerator(train_data,
    target_size=im_size,
    horizontal_flip=True, vertical_flip=False, rotation_range=0,
    shuffle=False, base_net_type=base_net_type
)

Then start training:

# train model - initial_epoch = -1 --> will automatically resume training if csv and model already exists

steps = 1000

frcnn.fit_generator(train_it, target_size=im_size, class_mapping=class_mapping, epochs=num_epochs, steps_per_epoch=steps,
    model_path=model_path, csv_path=csv_path, initial_epoch=-1)

You can then view the training records and plot the accuracy and loss:

from FRCNN import plotAccAndLoss

plotAccAndLoss(csv_path)

For testing, first create the model. Remember to load the weights! (Note: class_mapping and num_classes should be based on the training set.)

from FRCNN import FRCNN

frcnn_test = FRCNN(input_shape=(None,None,3), num_anchors=num_anchors, num_rois=num_rois, base_net_type=base_net_type, num_classes = len(classes_count))

frcnn_test.load_config(anchor_box_scales=anchor_box_scales, anchor_box_ratios=anchor_box_ratios, num_rois=num_rois, target_size=im_size)

frcnn_test.load_weights(model_path)

frcnn_test.compile()

Perform predictions using test_data (the first output of parseAnnotationFile, containing the paths to the image files):

predicts = frcnn_test.predict(test_data, class_mapping=class_mapping, verbose=2, bbox_threshold=0.5, overlap_thres=0.2)

Alternatively, pass in the samples as an array of image data:

from FRCNN import convertDataToImg

test_imgs = convertDataToImg(test_data)

predicts = frcnn_test.predict(test_imgs, class_mapping=class_mapping, verbose=2, bbox_threshold=0.5, overlap_thres=0.2)

Or, if the images are in a folder without an annotation file:

import cv2

imgPaths = [os.path.join('./pathToImages', s) for s in os.listdir('./pathToImages')]

test_imgs2 = []

for path in imgPaths:

test_imgs2.append(cv2.imread(path, cv2.IMREAD_UNCHANGED))

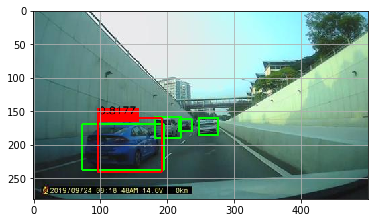

predicts = frcnn_test.predict(test_imgs2, class_mapping=class_mapping, verbose=2, bbox_threshold=0.5, overlap_thres=0.2)Get mAP based on VOC Pascal 2012 Challenge for your test dataset, and display predictions (red boxes) and ground-truth boxes (green boxes).

evaluate = frcnn_test.evaluate(test_data, class_mapping=class_mapping, verbose=2)

- If you run out of memory, try reducing the number of ROIs that are processed simultaneously.

- Alternatively, reduce the image size from the default value of 600. Remember to scale your anchor_box_scales accordingly as well.
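As a rough illustration of the second tip, one way to scale the anchor boxes is in proportion to the new image size. The sketch below assumes proportional scaling is what is wanted and uses the paper's anchor scales of 128, 256 and 512 as example values. The helper `scale_anchor_boxes` is hypothetical and not part of the library.

```python
def scale_anchor_boxes(anchor_box_scales, new_size, default_size=600):
    """Scale anchor box sizes by the ratio of new to default image size."""
    factor = new_size / default_size
    # Round to whole pixels so the scales stay integers.
    return [round(scale * factor) for scale in anchor_box_scales]


# Example: halving the image size from 600 to 300 halves each anchor scale.
scaled = scale_anchor_boxes([128, 256, 512], new_size=300)
print(scaled)  # [64, 128, 256]
```

The scaled values would then be passed wherever anchor_box_scales is configured, for example in load_config as shown earlier.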

Distributed under the MIT License

Faster RCNN code is modified and refactored based on original code by RockyXu66 and kbardool

Calculation of object detection metrics from Rafael Padilla

Default values are mostly based on original paper by Shaoqing Ren, Kaiming He, Ross B. Girshick, Jian Sun