Production-ready, fast, easy, and secure serving of machine learning models, powered by the great FastAPI by [Sebastián Ramírez](https://github.com/tiangolo).

This repository contains a skeleton app that can be used to speed up your next machine learning project. The code is fully tested and ships with a preconfigured `tox` setup so you can quickly extend this sample code.

To let you experiment and get a feel for how to use this skeleton, the project includes a sample regression model for house price prediction. Follow the installation and setup instructions to run the sample model and serve it as a RESTful API.

Requirements:

- Python 3.11+
- Poetry

Install the required packages in your local environment (ideally a virtualenv, conda environment, etc.):

    poetry install

- Duplicate the `.env.example` file and rename the copy to `.env`.

In the

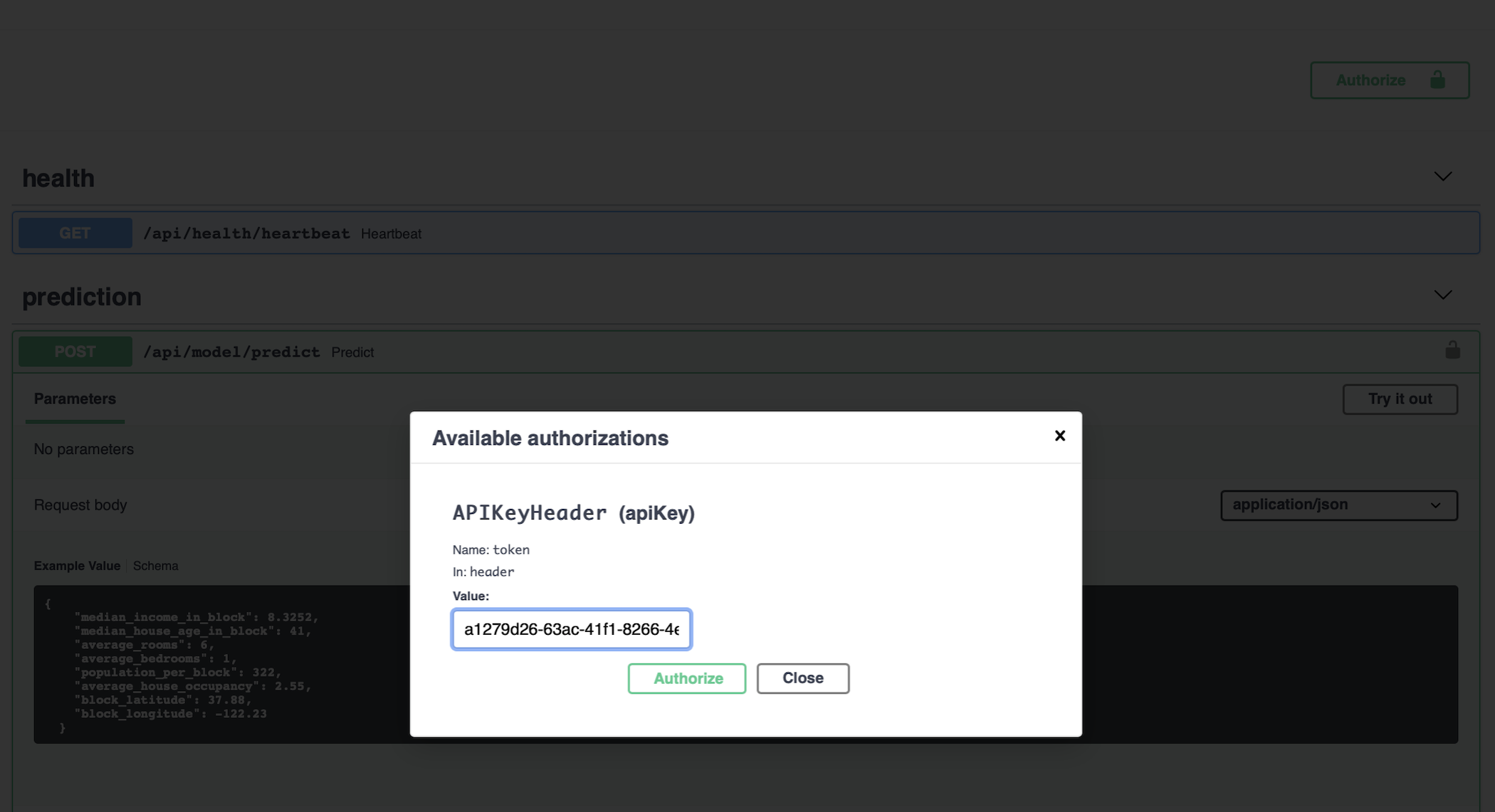

.envfile configure theAPI_KEYentry. The key is used for authenticating our API.
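How the skeleton compares the configured key with the one a client sends is not shown here. As a minimal stdlib-only sketch, assuming `API_KEY` is stored as a plain `KEY=VALUE` line in `.env` and the client sends the key as a string, a server could parse the file and compare keys in constant time. The helpers `parse_env_file` and `is_valid_api_key` are hypothetical and not part of the skeleton:

```python
import hmac
from typing import Dict, Optional


def parse_env_file(text: str) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines (hypothetical helper, not the skeleton's code).

    Blank lines, comment lines, and lines without '=' are ignored.
    Quoting, 'export' prefixes, and variable expansion are NOT handled.
    """
    values: Dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def is_valid_api_key(provided: Optional[str], expected: str) -> bool:
    """Return True only if a non-empty client key matches the configured key."""
    # Reject empty values so a missing API_KEY never accepts every request.
    if not provided or not expected:
        return False
    # compare_digest takes time independent of where the inputs first differ.
    return hmac.compare_digest(provided.encode("utf-8"), expected.encode("utf-8"))
```

Using `hmac.compare_digest` instead of `==` avoids leaking, through response timing, how many leading characters of a guessed key were correct. The parser is deliberately naive; a real `.env` loaded with `source` may rely on shell quoting or expansion that this sketch ignores.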

A sample API key can be generated using the Python REPL:

    import uuid
    print(str(uuid.uuid4()))

- Start your app with:

      set -a
      source .env
      set +a
      uvicorn fastapi_skeleton.main:app

- Go to http://localhost:8000/docs.

- Click `Authorize` and enter the API key you created during setup.

- You can use the sample payload from the `docs/sample_payload.json` file when trying out the house price prediction model through the API.
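To call the model outside the `/docs` page, a client sends the sample payload as JSON together with the API key. The following is a hedged, stdlib-only sketch: the route `/api/model/predict` and the header name `token` are assumptions, so confirm both on the `/docs` page before relying on them. `build_prediction_request` is a hypothetical helper:

```python
import json
import urllib.request
from typing import Any, Dict

# Assumed values (hypothetical): check the real route and header name in /docs.
PREDICT_PATH = "/api/model/predict"
API_KEY_HEADER = "token"


def build_prediction_request(
    base_url: str, api_key: str, payload: Dict[str, Any]
) -> urllib.request.Request:
    """Build (but do not send) a POST request with a JSON body and the API key header."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + PREDICT_PATH,
        data=body,
        headers={"Content-Type": "application/json", API_KEY_HEADER: api_key},
        method="POST",
    )
```

To try it, load `docs/sample_payload.json` with `json.load`, pass the resulting dict and your key to `build_prediction_request("http://localhost:8000", ...)`, and send the request with `urllib.request.urlopen` while the server is running. Building the request separately from sending it keeps the helper easy to test without a live server.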

This skeleton uses isort, mypy, flake8, black, and bandit for linting, formatting, and static analysis.

Run linting with:

    ./scripts/linting.sh

Run your tests with:

    ./scripts/test.sh

This runs the tests with coverage under Python 3.11, along with Flake8, Autopep8, and Bandit.

v.1.0.0 - Initial release

- Base functionality for using FastAPI to serve ML models.

- Full test coverage

v.1.1.0 - Update to Python 3.11, FastAPI 0.108.0

- Updated to Python 3.11

- Added linting script

- Updated to pydantic 2.x

- Added Poetry as the package manager