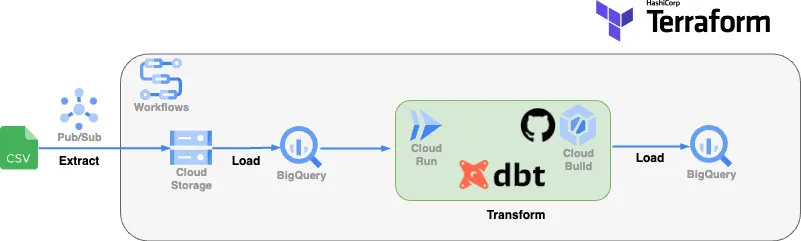

The ELT pipeline we’ve developed uses several Google Cloud services: Google Cloud Storage (GCS), BigQuery, Pub/Sub, Cloud Workflows, Cloud Run, and Cloud Build. We also use dbt for data transformation and Terraform for infrastructure as code.

Full article 👉 Medium

Navigate to the `infra` folder. We'll deploy the project using Terraform:

- Initialize your Terraform workspace, which downloads the provider plugins for Google Cloud:

  `terraform init`

- Plan the deployment and review the changes:

  `terraform plan`

- If everything looks good, apply the changes:

  `terraform apply`
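The three steps above can also be chained in a small script. This is a minimal sketch, assuming it is run from the repository root with `infra` as the Terraform directory; the `deploy_infra` and `run` function names, the `DRY_RUN` switch, and the `tfplan` file name are hypothetical. Unlike the plain `terraform apply` step, it saves the reviewed plan with `terraform plan -out=tfplan` and then applies that file, so Terraform applies exactly the plan that was reviewed (and does not prompt again for approval).

```bash
#!/usr/bin/env bash
# Hypothetical helper: run terraform init / plan / apply in order.
# Assumes the Terraform code lives in the given directory (default: infra).
# Set DRY_RUN=1 to print the commands instead of executing them.

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

deploy_infra() {
  local infra_dir="${1:-infra}"
  # Subshell so the caller's working directory is left unchanged.
  (
    cd "$infra_dir" || exit 1
    # Stop at the first failing step.
    run terraform init &&
      run terraform plan -out=tfplan &&
      run terraform apply tfplan
  )
}
```

For example, after sourcing the file, `DRY_RUN=1 deploy_infra infra` prints the three commands without running them, and `deploy_infra infra` executes them.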

Finally, to test the workflow end to end, run the script in the `scripts` folder:

`sh upload_include_dataset_to_gcs.sh`
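The contents of `upload_include_dataset_to_gcs.sh` are not shown here, so the following is only a hypothetical sketch of an upload step of this kind: it copies every `*.csv` file in a local folder to a GCS bucket with `gcloud storage cp`. The folder, the bucket name, the `*.csv` pattern, and the `upload_dataset` and `run` functions are assumptions; the repository's actual script may differ.

```bash
#!/usr/bin/env bash
# Hypothetical sketch, not the repository's script: copy all CSV files
# from a local folder to gs://<bucket>/. Folder, bucket and *.csv pattern
# are assumptions. Set DRY_RUN=1 to print the commands instead.

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

upload_dataset() {
  local data_dir="$1" bucket="$2"
  local f found=0
  for f in "$data_dir"/*.csv; do
    [ -e "$f" ] || continue # the glob matched no files
    found=1
    run gcloud storage cp "$f" "gs://$bucket/" || return 1
  done
  # Fail loudly if there was nothing to upload.
  if [ "$found" -eq 0 ]; then
    echo "no CSV files found in $data_dir" >&2
    return 1
  fi
}
```

For example, `DRY_RUN=1 upload_dataset ./data my-bucket` lists the copy commands that would run, which is a cheap way to check the paths before uploading anything.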