Picture Source: pexels

Unmanned aerial vehicles (UAVs), commonly known as drones, have become increasingly prevalent in various domains, including surveillance, photography, and delivery services. However, the rapid proliferation of drones raises concerns regarding security and privacy threats. To address these concerns, effective drone detection systems are crucial for identifying and tracking drones in real-time. In this research, we present a comprehensive dataset and propose a state-of-the-art drone detection model using the YOLOv7 architecture.

The widespread adoption of drones has led to an urgent need for reliable drone detection systems to ensure the safety and security of public spaces. Drone detection poses unique challenges due to the small size, fast movement, and diverse appearance of drones, making traditional object detection methods insufficient. Therefore, there is a growing demand for advanced detection models that can accurately identify drones in complex environments.

YOLO (You Only Look Once) is an object detection algorithm that was introduced in 2015 by Joseph Redmon et al. It revolutionized the field of computer vision by providing a real-time object detection solution with impressive accuracy.

To facilitate the development and evaluation of drone detection models, we introduce a novel and comprehensive dataset specifically curated for training and testing drone detection algorithms. The dataset, sourced from the publicly available "YOLO Drone Detection Dataset" on Kaggle, comprises a diverse set of annotated images captured in various environmental conditions and camera perspectives. The dataset includes instances of drones along with other common objects to enable robust detection and classification.

In this study, we employ the YOLOv7 architecture, a popular and highly efficient object detection framework, for drone detection. YOLOv7 stands for "You Only Look Once" version 7, which utilizes a single neural network to simultaneously predict bounding boxes and class probabilities for multiple objects in an image. This architecture offers real-time performance, making it ideal for drone detection applications.

To train and evaluate our drone detection model, we utilize the Colab platform, a cloud-based environment that provides access to powerful computing resources and deep learning libraries. Leveraging Colab's GPU acceleration capabilities, we train the YOLOv7 model using our curated dataset and fine-tune its parameters to optimize detection accuracy and efficiency.

-

Single Pass Detection: YOLO takes a different approach compared to traditional object detection methods that use region proposal techniques. Instead of dividing the image into regions and examining each region separately, YOLO performs detection in a single pass. It divides the input image into a grid and predicts bounding boxes and class probabilities for each grid cell.

-

Grid-based Prediction: YOLO divides the input image into a fixed-size grid, typically, say, 7x7 or 13x13. Each grid cell is responsible for predicting objects that fall within it. For each grid cell, YOLO predicts multiple bounding boxes (each associated with a confidence score) and class probabilities.

-

Anchor Boxes: To handle objects of different sizes and aspect ratios, YOLO uses anchor boxes. These anchor boxes are pre-defined boxes of different shapes and sizes. Each anchor box is associated with a specific grid cell. The network predicts offsets and dimensions for anchor boxes relative to the grid cell, along with the confidence scores and class probabilities.

-

Training: YOLO is trained using a combination of labeled bounding box annotations and classification labels. The training process involves optimizing the network to minimize the localization loss (related to the accuracy of bounding box predictions) and the classification loss (related to the accuracy of class predictions).

-

Speed and Accuracy Trade-off: YOLO achieves real-time object detection by sacrificing some localization accuracy compared to slower methods like Faster R-CNN. However, it still achieves competitive accuracy while providing significantly faster inference speeds, making it well-suited for real-time applications.

Since its introduction, YOLO has undergone several improvements and variations. Different versions such as YOLOv2, YOLOv3, and YOLOv4 have been developed, each introducing enhancements in terms of accuracy, speed, and additional features.

It's important to note that this is a high-level overview of YOLO, and the algorithm has many technical details and variations. For a more in-depth understanding, it's recommended to refer to the original YOLO papers and related resources.

- Drone detection

- YOLOv7

- Object detection

- Deep learning

- Surveillance

- Security

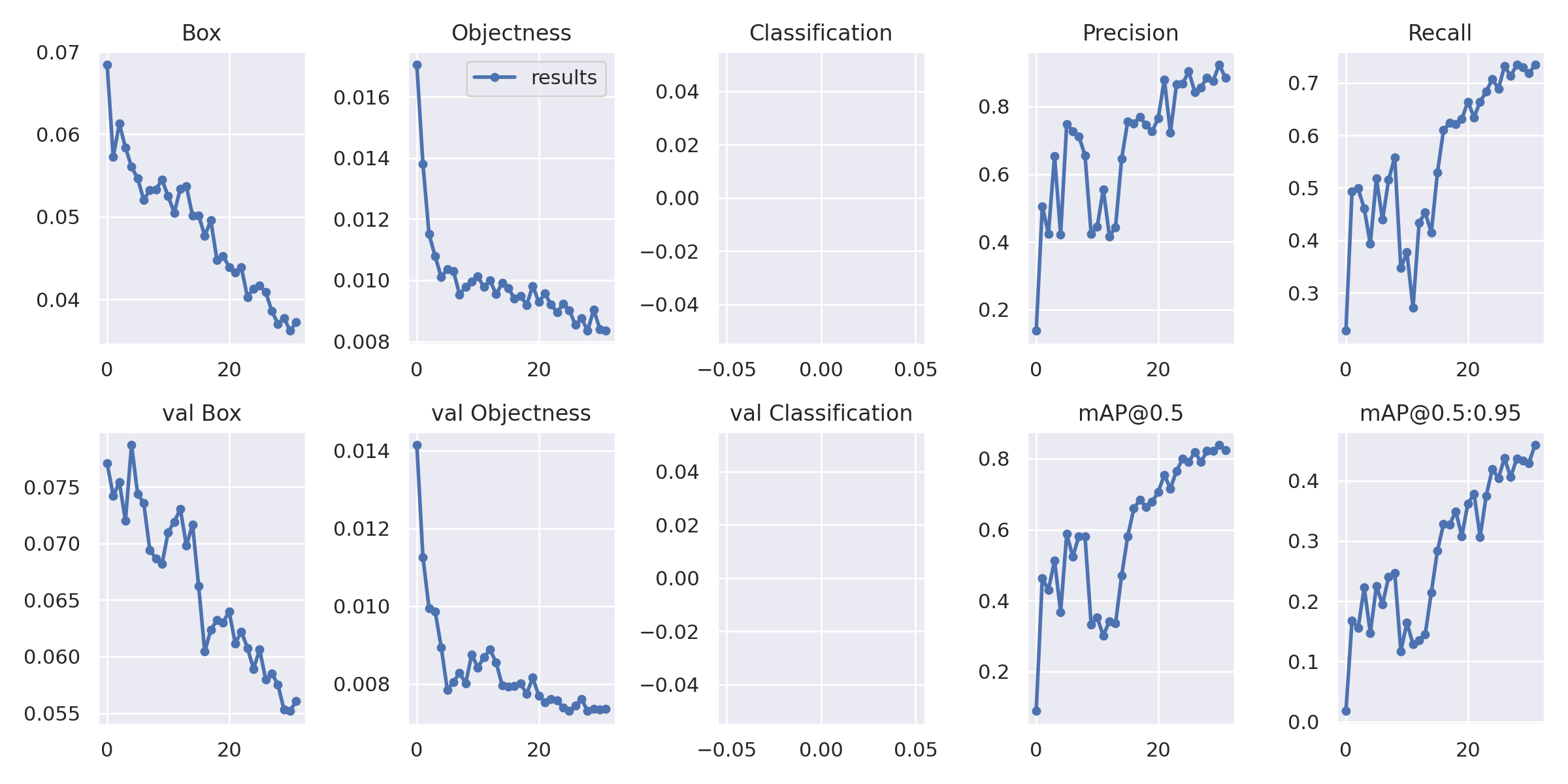

We present comprehensive results of our drone detection model's performance on both the training and testing datasets. The evaluation metrics include precision, recall, and F1-score, which are standard measures to assess the model's detection accuracy. Additionally, we analyze the model's performance across various environmental conditions and discuss its strengths and limitations.

Our research addresses the critical need for reliable drone detection systems by proposing a comprehensive dataset and a state-of-the-art detection model using the YOLOv7 architecture. The availability of our curated dataset and the promising performance of our model offer valuable contributions to the field of drone detection. The outcomes of this study can pave the way for enhanced security measures and privacy protection in areas where drones pose potential risks.

Drone detection prediction using YOLOv7x. Video footage by Nino Souza, sourced from Pexels.

Moving forward, further research can be conducted to explore the integration of our drone detection model into real-time surveillance systems and intelligent decision-making frameworks. Additionally, the dataset can be expanded to include a wider range of challenging scenarios and variations in drone types, enabling the development of more robust and generalized drone detection models.

In addition to this work, I have also developed a drone detection model using YOLOv8x. You can find that project or repository here.

If you have something to say to me please contact me:

- Twitter: Doguilmak

- Mail address: [email protected]