Huazhong University of Science and Technology

(*) equal contribution.

An official implementation of "A Unified Framework for 3D Scene Understanding".

- Jul-19-24: Release the inference code and checkpoints.

- Jul-03-24: Release the paper.

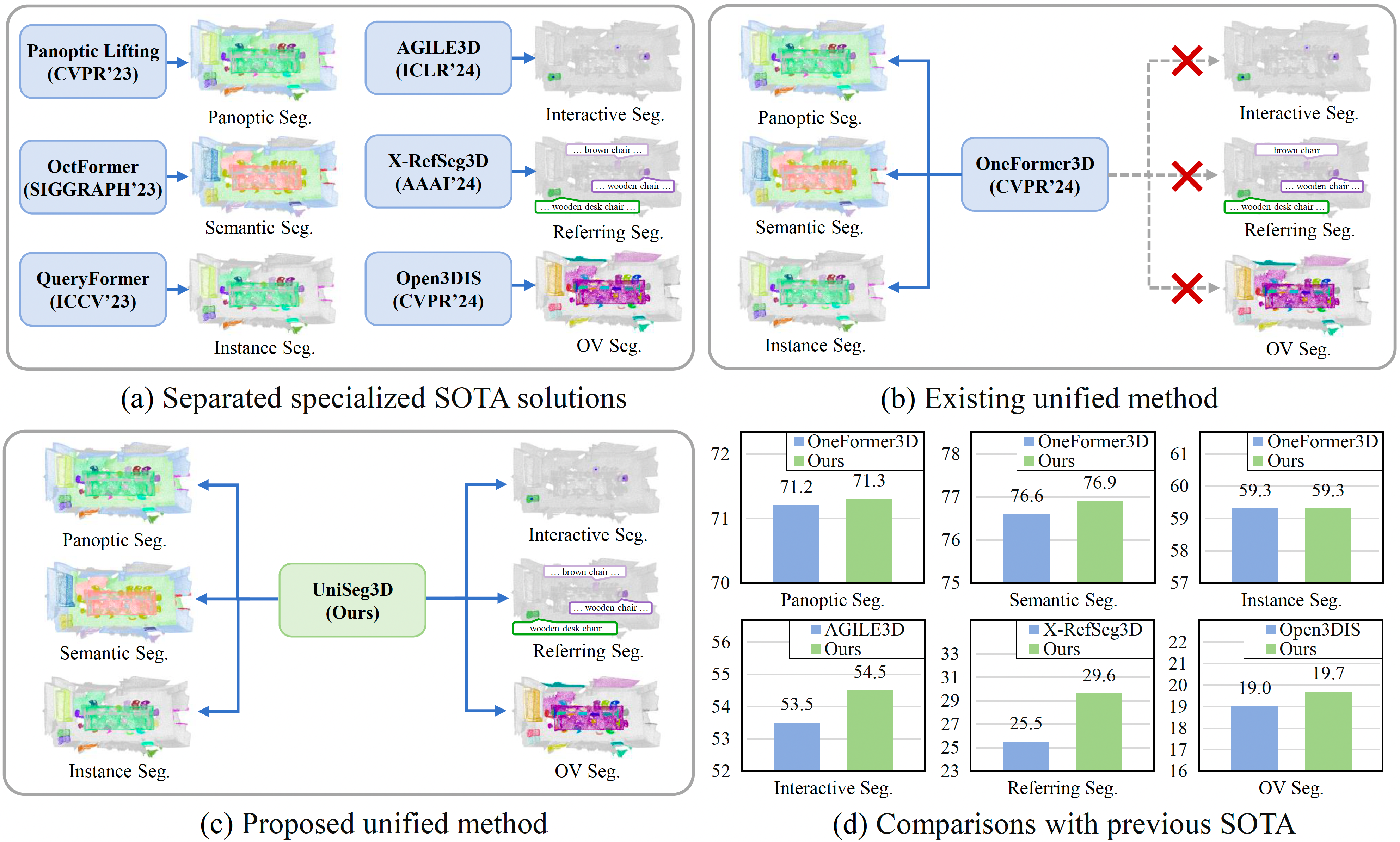

We propose UniSeg3D, a unified 3D segmentation framework that performs panoptic, semantic, instance, interactive, referring, and open-vocabulary semantic segmentation within a single model. Most previous 3D segmentation approaches are specialized for a single task, which limits their understanding of 3D scenes to a task-specific perspective. In contrast, the proposed method maps all six tasks into unified representations processed by the same Transformer. This facilitates inter-task knowledge sharing and, therefore, promotes comprehensive 3D scene understanding. To take advantage of multi-task unification, we further improve performance by leveraging the connections between tasks. Specifically, we design a knowledge distillation method and a contrastive learning method to transfer task-specific knowledge across tasks. Benefiting from this extensive inter-task knowledge sharing, UniSeg3D achieves stronger performance. Experiments on three benchmarks, namely ScanNet20, ScanRefer, and ScanNet200, demonstrate that UniSeg3D consistently outperforms current state-of-the-art (SOTA) methods, even those specialized for individual tasks. We hope UniSeg3D can serve as a solid unified baseline and inspire future work.

- Release Inference Code.

- Release Checkpoints.

- Release Scripts for Open-Vocabulary Semantic Segmentation.

- Release Training Code.

- Demo Code.

Requirements:

- Python 3.10
- PyTorch 1.13
- CUDA 11.7

Installation:

- Create a conda virtual environment:

  ```shell
  conda create -n uniseg3d python=3.10
  conda activate uniseg3d
  ```

- Install PyTorch 1.13:

  ```shell
  pip install torch==1.13.0+cu117 torchvision==0.14.0+cu117 torchaudio==0.13.0 --extra-index-url https://download.pytorch.org/whl/cu117
  ```

- Clone the repository:

  ```shell
  git clone https://github.com/dk-liang/UniSeg3D.git
  ```

- Install the OpenMMLab projects:

  ```shell
  pip install mmengine==0.10.3
  pip install mmdet==3.3.0
  pip install git+https://github.com/open-mmlab/mmdetection3d.git@fe25f7a51d36e3702f961e198894580d83c4387b
  pip install mmcv==2.1.0 -f https://download.openmmlab.com/mmcv/dist/cu117/torch1.13.0/index.html
  ```

- Install MinkowskiEngine:

  ```shell
  conda install openblas-devel -c anaconda
  git clone https://github.com/NVIDIA/MinkowskiEngine.git
  cd MinkowskiEngine
  python setup.py install --blas_include_dirs=${CONDA_PREFIX}/include --blas=openblas
  ```

- Install segmentator from the segmentator repository:

  ```shell
  git clone https://github.com/Karbo123/segmentator.git
  cd segmentator && cd csrc && mkdir build && cd build
  cmake .. \
    -DCMAKE_PREFIX_PATH=`python -c 'import torch;print(torch.utils.cmake_prefix_path)'` \
    -DPYTHON_INCLUDE_DIR=$(python -c "from distutils.sysconfig import get_python_inc; print(get_python_inc())") \
    -DPYTHON_LIBRARY=$(python -c "import distutils.sysconfig as sysconfig; print(sysconfig.get_config_var('LIBDIR'))") \
    -DCMAKE_INSTALL_PREFIX=`python -c 'from distutils.sysconfig import get_python_lib; print(get_python_lib())'`
  make && make install # after install, please do not delete this folder (as we only create a symbolic link)
  ```

- Install the remaining dependencies:

  ```shell
  pip install spconv-cu117
  pip install torch-scatter==2.1.1 -f https://data.pyg.org/whl/torch-1.13.0+cu117.html
  pip install numpy==1.26.4
  pip install timm==0.9.16
  pip install ftfy==6.2.0
  pip install regex==2023.12.25
  ```
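Once the installation steps finish, it can help to confirm that the active environment matches the pinned versions. The script below is a minimal, hypothetical sketch (the helper names are ours, not part of the repository): it reads installed versions with the standard-library `importlib.metadata`, approximates pip's `==` rule by ignoring a local label such as `+pt113cu117` unless the pin itself carries one, and, if PyTorch imports, prints its CUDA build. Packages installed without a version pin (mmdet3d from a git commit, MinkowskiEngine, spconv-cu117) are not checked.

```python
"""Hypothetical helper: compare the active environment with the pinned versions."""
import sys
from importlib import metadata

# pip distribution names and versions copied from the install commands.
PINNED = {
    "torch": "1.13.0+cu117",
    "torchvision": "0.14.0+cu117",
    "torchaudio": "0.13.0",
    "mmengine": "0.10.3",
    "mmdet": "3.3.0",
    "mmcv": "2.1.0",
    "torch-scatter": "2.1.1",
    "numpy": "1.26.4",
    "timm": "0.9.16",
    "ftfy": "6.2.0",
    "regex": "2023.12.25",
}


def matches_pin(installed, expected):
    """Approximate pip's '==' check (PEP 440): a local label on the installed
    version (e.g. '+cu117') is ignored unless the pin itself specifies one."""
    if installed is None:
        return False
    if "+" in expected:
        return installed == expected
    return installed.split("+", 1)[0] == expected


def check_versions(pins):
    """Map each package to (expected, installed version or None, matches)."""
    report = {}
    for name, expected in pins.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        report[name] = (expected, installed, matches_pin(installed, expected))
    return report


if __name__ == "__main__":
    print(f"python {sys.version.split()[0]} (expected 3.10)")
    for name, (expected, installed, ok) in check_versions(PINNED).items():
        print(f"{'ok' if ok else 'MISMATCH':8} {name}: expected {expected}, found {installed}")
    try:
        import torch  # only reported if PyTorch is importable

        print("torch CUDA build:", torch.version.cuda, "| GPU available:", torch.cuda.is_available())
    except ImportError:
        print("torch is not importable")
```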

Data preparation:

- Download ScanNet v2 data HERE. Link or move the `scans` and `scans_test` folders to `data/scannet`.

- In the `data/scannet` directory, extract point clouds and annotations by running `python batch_load_scannet_data.py`:

  ```shell
  cd data/scannet
  python batch_load_scannet_data.py
  ```

- Enter the project root directory and generate the training data by running:

  ```shell
  python tools/create_data.py scannet --root-path ./data/scannet --out-dir ./data/scannet --extra-tag scannet
  ```

- Download the processed data infos `uniseg3d_infos_val.pkl` from link, and put it into `data/scannet`.

  Note: `uniseg3d_infos_val.pkl` contains:
  - ScanNet dataset information.
  - Text prompts extracted from the ScanRefer dataset.
  - Text prompts tokenized with OpenCLIP.

- Download the processed class embeddings `scannet_cls_embedding.pth` from link, and put it into `data/scannet`.

  Note: `scannet_cls_embedding.pth` contains:
  - ScanNet class embeddings processed with OpenCLIP.
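Before running evaluation, a quick look at the downloaded info file can confirm that it loads. The helper below is a hypothetical sketch: it makes no assumption about the keys inside `uniseg3d_infos_val.pkl` and only prints the nested structure (types, keys, lengths, and the first element of each list). Unpickling can execute arbitrary code, so only load files from sources you trust; the file may also need numpy installed if it stores arrays.

```python
"""Hypothetical helper: summarize the nested structure of a pickled info file."""
import pickle
import sys


def summarize(obj, depth=0, max_depth=3, max_items=5):
    """Return indented lines describing obj's type, keys and lengths."""
    pad = "  " * depth
    if isinstance(obj, dict):
        lines = [f"{pad}dict ({len(obj)} keys)"]
        if depth < max_depth:
            # Show at most max_items keys per dict to keep the output short.
            for key in list(obj)[:max_items]:
                lines.append(f"{pad}  key {key!r}:")
                lines.extend(summarize(obj[key], depth + 2, max_depth, max_items))
        return lines
    if isinstance(obj, (list, tuple)):
        lines = [f"{pad}{type(obj).__name__} (len {len(obj)})"]
        if obj and depth < max_depth:
            # Assume elements are homogeneous and describe only the first one.
            lines.append(f"{pad}  first element:")
            lines.extend(summarize(obj[0], depth + 2, max_depth, max_items))
        return lines
    return [f"{pad}{type(obj).__name__}"]


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], "rb") as f:
        info = pickle.load(f)  # only unpickle files from sources you trust
    print("\n".join(summarize(info)))
```

Usage, with a hypothetical file name: `python inspect_pkl.py data/scannet/uniseg3d_infos_val.pkl`.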

The directory structure after pre-processing should be as below:

```
UniSeg3D
├── data
│   ├── scannet
│   │   ├── meta_data
│   │   ├── batch_load_scannet_data.py
│   │   ├── load_scannet_data.py
│   │   ├── scannet_utils.py
│   │   ├── scans
│   │   ├── scans_test
│   │   ├── scannet_instance_data
│   │   ├── points
│   │   │   ├── xxxxx.bin
│   │   ├── instance_mask
│   │   │   ├── xxxxx.bin
│   │   ├── semantic_mask
│   │   │   ├── xxxxx.bin
│   │   ├── super_points
│   │   │   ├── xxxxx.bin
│   │   ├── uniseg3d_infos_val.pkl
│   │   ├── scannet_cls_embedding.pth
```
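The directory tree can be checked with a short script. This is a hypothetical sketch that assumes the layout shown (per-scene `.bin` files named by scene ID inside `points`, `instance_mask`, `semantic_mask`, and `super_points`, plus the two downloaded files in `data/scannet`). It reports missing files or folders, empty folders, and any mask or superpoint file without a matching point cloud. It deliberately does not require every folder to cover the same scenes, because test scenes may have point clouds but no annotations.

```python
"""Hypothetical helper: sanity-check the data/scannet layout after pre-processing."""
from pathlib import Path

# Per-scene folders expected under data/scannet (taken from the tree).
SCENE_DIRS = ("points", "instance_mask", "semantic_mask", "super_points")
# Files downloaded separately into data/scannet.
EXTRA_FILES = ("uniseg3d_infos_val.pkl", "scannet_cls_embedding.pth")


def check_layout(root):
    """Return a list of problems; an empty list means the layout looks complete."""
    root = Path(root)
    problems = []
    for name in EXTRA_FILES:
        if not (root / name).is_file():
            problems.append(f"missing file: {name}")
    stems = {}  # folder name -> set of scene IDs found as <scene>.bin
    for name in SCENE_DIRS:
        folder = root / name
        if not folder.is_dir():
            problems.append(f"missing folder: {name}")
            continue
        stems[name] = {p.stem for p in folder.glob("*.bin")}
        if not stems[name]:
            problems.append(f"no .bin files in: {name}")
    if "points" in stems:
        # Every annotated scene should also have a point cloud.
        for name, scenes in stems.items():
            orphans = sorted(scenes - stems["points"])
            if name != "points" and orphans:
                problems.append(f"{name}: {len(orphans)} scene(s) without points, e.g. {orphans[0]}")
    return problems


if __name__ == "__main__":
    issues = check_layout("data/scannet")
    print("\n".join(issues) if issues else "data/scannet layout looks complete")
```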

Evaluation:

- Download the pretrained CLIP model `open_clip_pytorch_model.bin` from OpenCLIP, and put it into `work_dirs/pretrained/convnext_large_d_320`.

- Put the checkpoint into `work_dirs/ckpts`.

- Run the following command to evaluate the checkpoint:

  ```shell
  CUDA_VISIBLE_DEVICES=0 ./tools/dist_test.sh \
    configs/uniseg3d_1xb4_scannet_scanrefer_scannet200_unified.py \
    work_dirs/ckpts/model_best_2.pth \
    1
  ```

Note: The PS, SS, IS, interactive, and referring segmentation tasks are evaluated in a single inference run. The evaluation scripts for the OVS task will be released later.
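For scripted runs, the evaluation command can be wrapped in Python. This is a minimal sketch under stated assumptions: it assumes `tools/dist_test.sh` follows the usual OpenMMLab convention of positional arguments `CONFIG CHECKPOINT GPUS` (so the trailing `1` in the command is the number of GPUs), and the helper names are hypothetical. It checks that the config, checkpoint, and CLIP weights exist before launching and derives `CUDA_VISIBLE_DEVICES` from the chosen GPU IDs. Whether this repository supports evaluation on more than one GPU is an assumption to verify.

```python
"""Hypothetical wrapper around tools/dist_test.sh for UniSeg3D evaluation."""
import os
import subprocess
from pathlib import Path

# Paths taken from the evaluation steps; adjust if your layout differs.
CONFIG = "configs/uniseg3d_1xb4_scannet_scanrefer_scannet200_unified.py"
CKPT = "work_dirs/ckpts/model_best_2.pth"
CLIP_WEIGHTS = "work_dirs/pretrained/convnext_large_d_320/open_clip_pytorch_model.bin"


def build_eval_command(config, checkpoint, gpu_ids=(0,)):
    """Return (argv, env); assumes dist_test.sh takes CONFIG CHECKPOINT NUM_GPUS."""
    argv = ["./tools/dist_test.sh", config, checkpoint, str(len(gpu_ids))]
    env = dict(os.environ, CUDA_VISIBLE_DEVICES=",".join(str(i) for i in gpu_ids))
    return argv, env


def missing_inputs(paths):
    """Return the subset of paths that do not exist as regular files."""
    return [p for p in paths if not Path(p).is_file()]


if __name__ == "__main__":
    missing = missing_inputs([CONFIG, CKPT, CLIP_WEIGHTS])
    if missing:
        print("missing inputs:", *missing, sep="\n  ")
    else:
        argv, env = build_eval_command(CONFIG, CKPT)
        subprocess.run(argv, env=env, check=True)
```

Run it from the project root (for example, saved under a hypothetical name such as `run_eval.py`).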

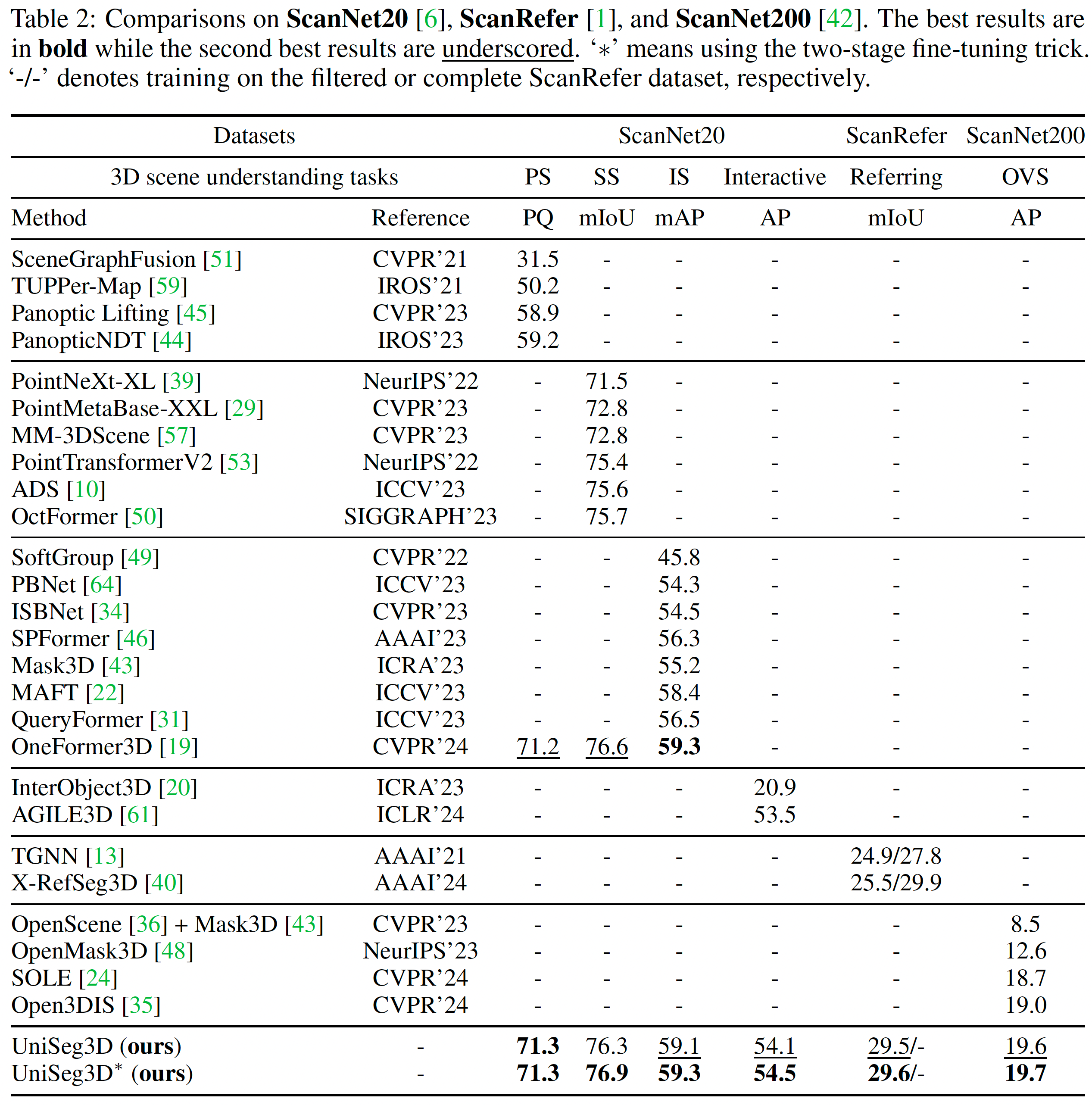

| Model | ScanNet20 PS (PQ) | ScanNet20 SS (mIoU) | ScanNet20 IS (mAP) | ScanNet20 Interactive (AP) | ScanRefer Referring (mIoU) | ScanNet200 OVS (AP) | Download |
|---|---|---|---|---|---|---|---|
| UniSeg3D | 71.3 | 76.3 | 59.1 | 54.1 | 29.5 | 19.6 | link |
| UniSeg3D* | 71.3 | 76.9 | 59.3 | 54.5 | 29.6 | 19.7 | link |

We are thankful to SPFormer, OneFormer3D, Open3DIS and OMG-Seg for releasing their models and code as open-source contributions.

```bibtex
@article{xu2024unified,
  title={A Unified Framework for 3D Scene Understanding},
  author={Xu, Wei and Shi, Chunsheng and Tu, Sifan and Zhou, Xin and Liang, Dingkang and Bai, Xiang},
  journal={arXiv preprint arXiv:2407.03263},
  year={2024}
}
```