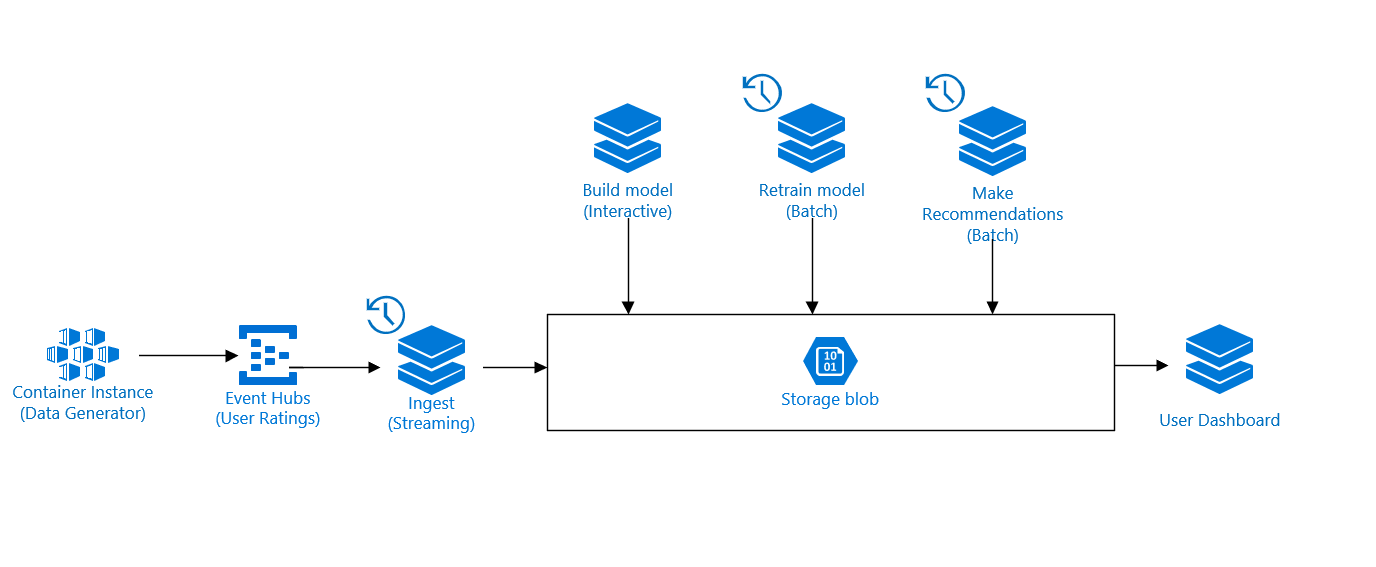

The following is a movie recommendation system data pipeline implemented in Azure Databricks. This solution aims to demonstrate Databricks as a Unified Analytics Platform by showing an end-to-end data pipeline, including:

- Initial ETL data loading process
- Ingesting subsequent data through Spark Structured Streaming
- Model training and scoring
- Persisting the trained model
- Productionizing the model through batch scoring jobs
- User dashboards

Movie ratings data is generated by a simple .NET Core application running in an Azure Container Instance, which sends the data to an Azure Event Hub. The ratings are then consumed and processed by a Spark Structured Streaming job (Scala) in Azure Databricks. The recommendation system uses a collaborative filtering model, specifically the Alternating Least Squares (ALS) algorithm implemented in Spark ML, driven from PySpark (Python). The solution also contains two scheduled jobs that demonstrate how one might productionize the fitted model: the first creates daily top 10 movie recommendations for all users, and the second retrains the model with newly received ratings data. The solution also demonstrates Spark's model persistence, whereby a model saved from one language (Python) can be loaded in another (Scala). Finally, the data is visualized in a parameterized notebook/dashboard using Databricks widgets.
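To make the ALS idea concrete, here is a tiny pure-Python sketch of explicit-feedback ALS followed by a top-N recommendation step, assuming in-memory (user, movie, rating) triples. It is not the solution's Spark code: the function names (`als_fit`, `update_factors`, `recommend_top_n`), the rank, the regularization value, and the toy ratings are all hypothetical. Each half-iteration holds one side's factors fixed and solves a small regularized least-squares problem per user (or per movie); the penalty is scaled by each row's rating count, similar to the ALS-WR scaling described in the Spark MLlib documentation.

```python
import random
from collections import defaultdict


def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def update_factors(fixed, ratings_by_key, rank, reg):
    """Holding the `fixed` factors constant, solve one ridge regression per key."""
    solved = {}
    for key, pairs in ratings_by_key.items():
        a = [[0.0] * rank for _ in range(rank)]  # accumulates V^T V
        b = [0.0] * rank  # accumulates V^T r
        for other, r in pairs:
            v = fixed[other]
            for i in range(rank):
                b[i] += r * v[i]
                for j in range(rank):
                    a[i][j] += v[i] * v[j]
        for i in range(rank):
            a[i][i] += reg * len(pairs)  # penalty scaled by rating count (ALS-WR style)
        solved[key] = solve(a, b)
    return solved


def als_fit(ratings, rank=2, reg=0.1, iterations=10, seed=42):
    """Fit user and movie factors from (user, movie, rating) triples by alternating least squares."""
    rng = random.Random(seed)
    by_user, by_item = defaultdict(list), defaultdict(list)
    for u, i, r in ratings:
        by_user[u].append((i, r))
        by_item[i].append((u, r))
    items = {i: [rng.random() for _ in range(rank)] for i in by_item}
    users = {}
    for _ in range(iterations):
        users = update_factors(items, by_user, rank, reg)  # fix movies, solve users
        items = update_factors(users, by_item, rank, reg)  # fix users, solve movies
    return users, items


def predict(users, items, u, i):
    """Predicted rating is the dot product of the user and movie factors."""
    return sum(x * y for x, y in zip(users[u], items[i]))


def recommend_top_n(users, items, rated, n=10):
    """Return the n highest-scoring movies each user has not rated yet."""
    recs = {}
    for u in users:
        scores = [(predict(users, items, u, i), i) for i in items if i not in rated[u]]
        recs[u] = [i for _, i in sorted(scores, reverse=True)[:n]]
    return recs


# Toy (user, movie, rating) triples, made up for illustration.
ratings = [(1, 10, 5.0), (1, 20, 3.0), (2, 10, 4.0), (2, 30, 5.0), (3, 20, 4.0), (3, 30, 2.0)]
users, items = als_fit(ratings, rank=2, reg=0.1, iterations=10)
rated = defaultdict(set)
for u, i, _ in ratings:
    rated[u].add(i)
recs = recommend_top_n(users, items, rated, n=10)
print(recs)  # each user gets the one movie they have not rated yet
```

In Spark ML the corresponding steps would typically be fitting `pyspark.ml.recommendation.ALS` and calling `recommendForAllUsers(10)` on the fitted model; the plain loops here trade scale for readability.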

DISCLAIMER: Code is not designed for Production and is only for demonstration purposes.

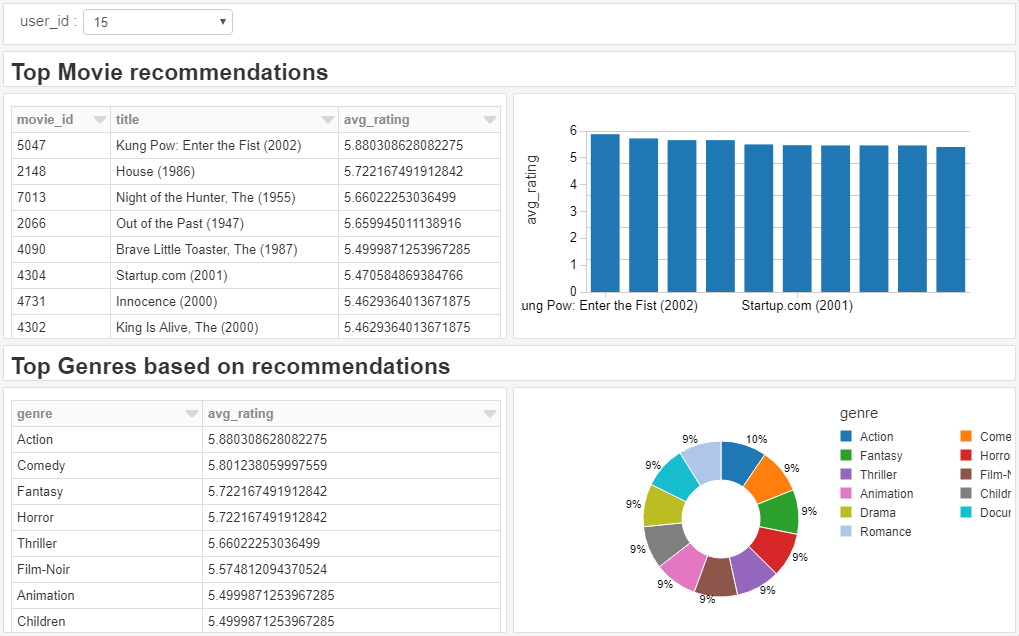

The following shows the Movie Recommendations dashboard by user ID.

To access the dashboard, go to Workspace > recommender_dashboard > 07_user_dashboard, then select View > User Recommendation Dashboard.

You can use the following Docker image to deploy the solution:

docker run -it devlace/azdatabricksrecommend

Or, alternatively, build and run the container locally with:

make deploy_w_docker

Ensure you are in the root of the repository and logged in to the Azure CLI by running `az login`.

- Azure CLI 2.0
- Python virtualenv or Anaconda
- jq tool
- Check requirements.txt for the list of necessary Python packages (these will be installed by `make requirements`).
- The following steps work with Windows Subsystem for Linux.

- Clone this repository.
- Run `cd azure-databricks-recommendation`.
- Run `virtualenv .` to create a Python virtual environment to work in.
- Run `source bin/activate` to activate the virtual environment.
- Run `make requirements` to install the Python dependencies in the virtual environment.
- To deploy the solution, run `make deploy` and fill in the prompts.
  - When prompted for a Databricks host, enter the full URL of your Databricks workspace host, e.g. https://southeastasia.azuredatabricks.net
  - When prompted for a token, generate a new token in the Databricks workspace.
- To view additional make commands, run `make`.

This solution makes use of the MovieLens dataset.*
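For the initial load, the following is a minimal sketch of parsing ratings in the MovieLens `ratings.csv` layout (`userId,movieId,rating,timestamp`, with Unix-epoch timestamps). Which MovieLens release this repository downloads is an assumption here, and `load_ratings` and the sample rows are hypothetical illustrations, not the repository's own data scripts.

```python
import csv
import io
from datetime import datetime, timezone

# Made-up rows in the MovieLens ratings.csv layout (not real dataset records).
SAMPLE_CSV = """userId,movieId,rating,timestamp
1,10,4.0,1500000000
1,20,3.5,1500000100
2,10,5.0,1500000200
"""


def load_ratings(fileobj):
    """Parse ratings.csv-style rows into (user_id, movie_id, rating, rated_at_utc) tuples."""
    rows = []
    for rec in csv.DictReader(fileobj):  # header row supplies the field names
        rows.append(
            (
                int(rec["userId"]),
                int(rec["movieId"]),
                float(rec["rating"]),
                # Convert the Unix epoch seconds to a timezone-aware UTC datetime.
                datetime.fromtimestamp(int(rec["timestamp"]), tz=timezone.utc),
            )
        )
    return rows


ratings = load_ratings(io.StringIO(SAMPLE_CSV))
print(ratings[0])
```

For a real file, `load_ratings(open(path, newline=""))` would replace the in-memory sample; in Databricks the same columns would more likely be read with Spark's CSV reader.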

├── LICENSE
├── Makefile           <- Makefile with commands like `make data` or `make deploy`
├── README.md          <- The top-level README for developers using this project.
├── data
│   └── raw            <- The original, immutable data dump.
├── deploy             <- Deployment artifacts
│   ├── databricks     <- Deployment artifacts in relation to the Databricks workspace
│   ├── deploy.sh      <- Deployment script to deploy all Azure resources
│   ├── azuredeploy.json <- Azure ARM template w/ .parameters file
│   └── Dockerfile     <- Dockerfile for deployment
├── notebooks          <- Azure Databricks Jupyter notebooks.
├── references         <- Contains the PowerPoint presentation and other reference materials.
├── requirements.txt   <- The requirements file for reproducing the analysis environment, e.g.
│                         generated with `pip freeze > requirements.txt`
├── src                <- Source code for use in this project.
│   ├── __init__.py    <- Makes src a Python module
│   ├── data           <- Scripts to download or generate data
│   └── EventHubGenerator <- Visual Studio solution EventHub Data Generator (Ratings)

Project based on the cookiecutter data science project template. #cookiecutterdatascience

*F. Maxwell Harper and Joseph A. Konstan. 2015. The MovieLens Datasets: History and Context. ACM Transactions on Interactive Intelligent Systems (TiiS) 5, 4, Article 19 (December 2015), 19 pages. DOI: https://dx.doi.org/10.1145/2827872