- What is Baskerville

- Requirements

- Useful Definitions

- Baskerville Engine

- Installation

- Configuration

- How to run

- Testing

- Docs

- TODO

- Contributing

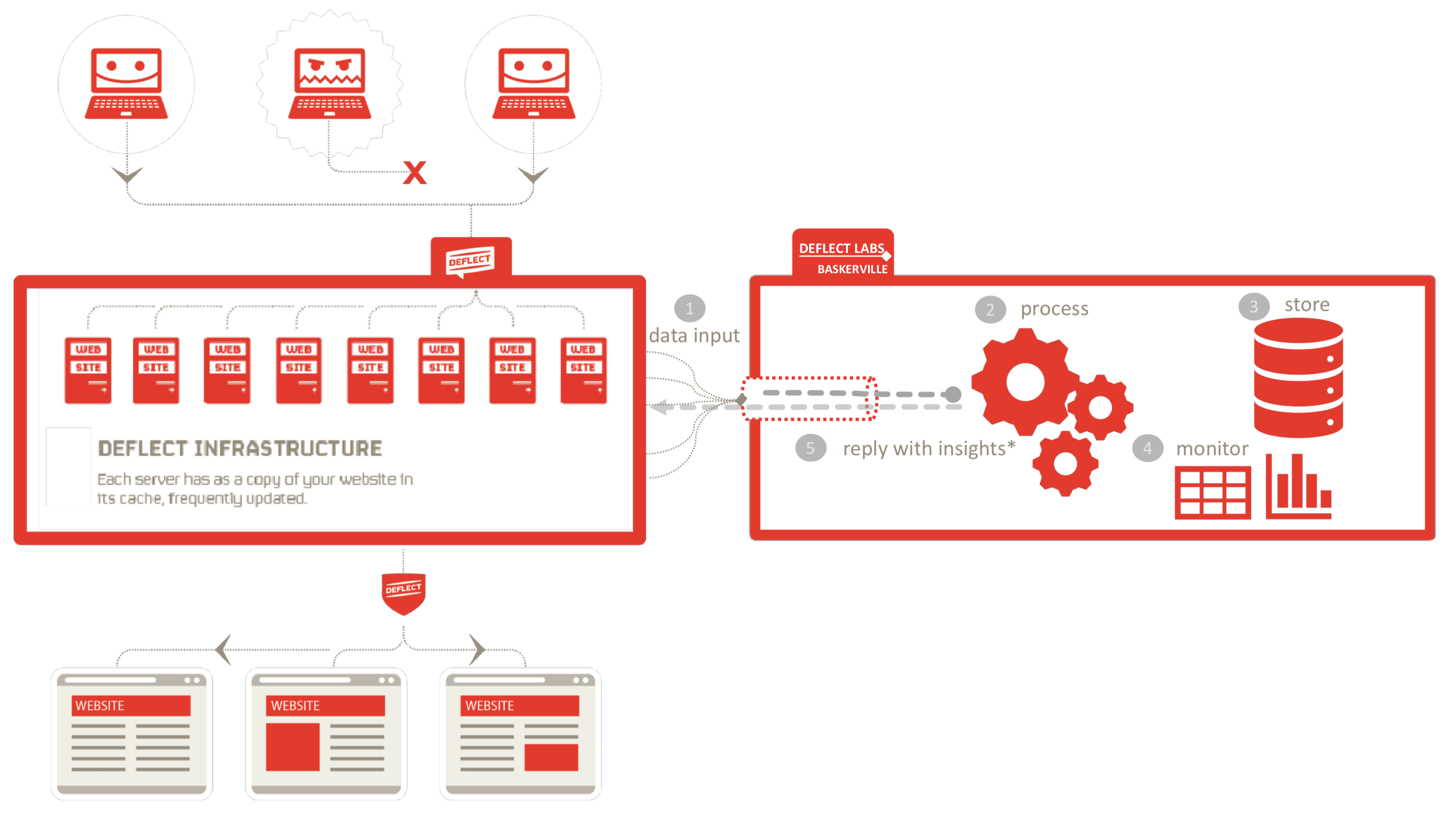

Manual identification and mitigation of DDoS attacks on websites is difficult and time-consuming. Baskerville was created to identify attacks directed at Deflect-protected websites as they happen, and to give the infrastructure time to respond properly. Baskerville is an analytics engine that leverages Machine Learning to distinguish between normal and abnormal web traffic behavior. In short, Baskerville is a Layer 7 (application layer) DDoS attack mitigation tool.

The challenges:

- Be fast enough to make it count

- Be able to adapt to traffic (Apache Spark, Apache Kafka)

- Provide actionable info (A prediction and a score for an IP)

- Provide reliable predictions (Probation period & feedback)

- As with any ML project: not enough labelled data (we train on normal-ish data; the anomaly/novelty detector can accept a small percentage of anomalous data in the dataset)

Baskerville is the component of the Deflect analysis engine that is used to decide whether IPs connecting to Deflect hosts are authentic normal connections, or malicious bots. In order to make this assessment, Baskerville groups incoming requests into request sets by requested host and requesting IP.

For each request set, a selection of features is computed. These are properties of the requests within the request set (e.g. average path depth, number of unique queries, HTML to image ratio...) that are intended to help differentiate normal request sets from bot request sets. A supervised novelty detector, trained offline on the feature vectors of a set of normal request sets, is used to predict whether new request sets are normal or suspicious. The request sets, their features, the trained models, and details of suspected attacks and attributes are all saved to a relational database (currently Postgres).
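The grouping and feature computation described above can be sketched in plain Python. This is a minimal illustration, not the Baskerville implementation (which runs on Spark): the record fields `host`, `ip` and `url`, and the helper names, are assumptions made for the example.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def path_depth(path):
    """Number of non-empty segments in a URL path ('/a/b' -> 2, '/' -> 0)."""
    return len([segment for segment in path.split("/") if segment])


def build_request_sets(requests):
    """Group requests by (requested host, requesting IP)."""
    groups = defaultdict(list)
    for request in requests:
        groups[(request["host"], request["ip"])].append(request)
    return dict(groups)


def compute_features(requests):
    """Compute a few illustrative features for one request set."""
    parts = [urlsplit(request["url"]) for request in requests]
    depths = [path_depth(part.path) for part in parts]
    queries = {part.query for part in parts if part.query}
    return {
        "request_total": len(requests),
        "path_depth_average": sum(depths) / len(depths),
        "unique_query_total": len(queries),
    }


# Hypothetical, already-parsed log records
logs = [
    {"host": "example.org", "ip": "10.0.0.1", "url": "/a/b?x=1"},
    {"host": "example.org", "ip": "10.0.0.1", "url": "/a/b/c/d"},
    {"host": "example.org", "ip": "10.0.0.2", "url": "/"},
]

request_sets = build_request_sets(logs)
features = {key: compute_features(reqs) for key, reqs in request_sets.items()}
```

Each key of `features` is one host-IP pair, and each value is the feature vector that a detector would score.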

Put simply, the Baskerville engine is the workhorse for consuming, processing, and saving the output from input web logs. The engine can be run as Baskerville on-line, which enables the immediate identification of suspicious IPs, or as Baskerville off-line, which conducts the same analysis for log files saved locally or in an Elasticsearch database.

- The first step is to get the data (Combined log format) and transport it to the processing system

- Once we get the logs, the processing system will start transforming the input to feature vectors and subsequently predictions for target-ip pairs

- The information that comes out from the processing is stored in a database

- At the same time, while the system is running, there are other systems in place to monitor its process and alert if anything is out of place

- And eventually, the insight we gained from the process will return to the source so that actions can be taken

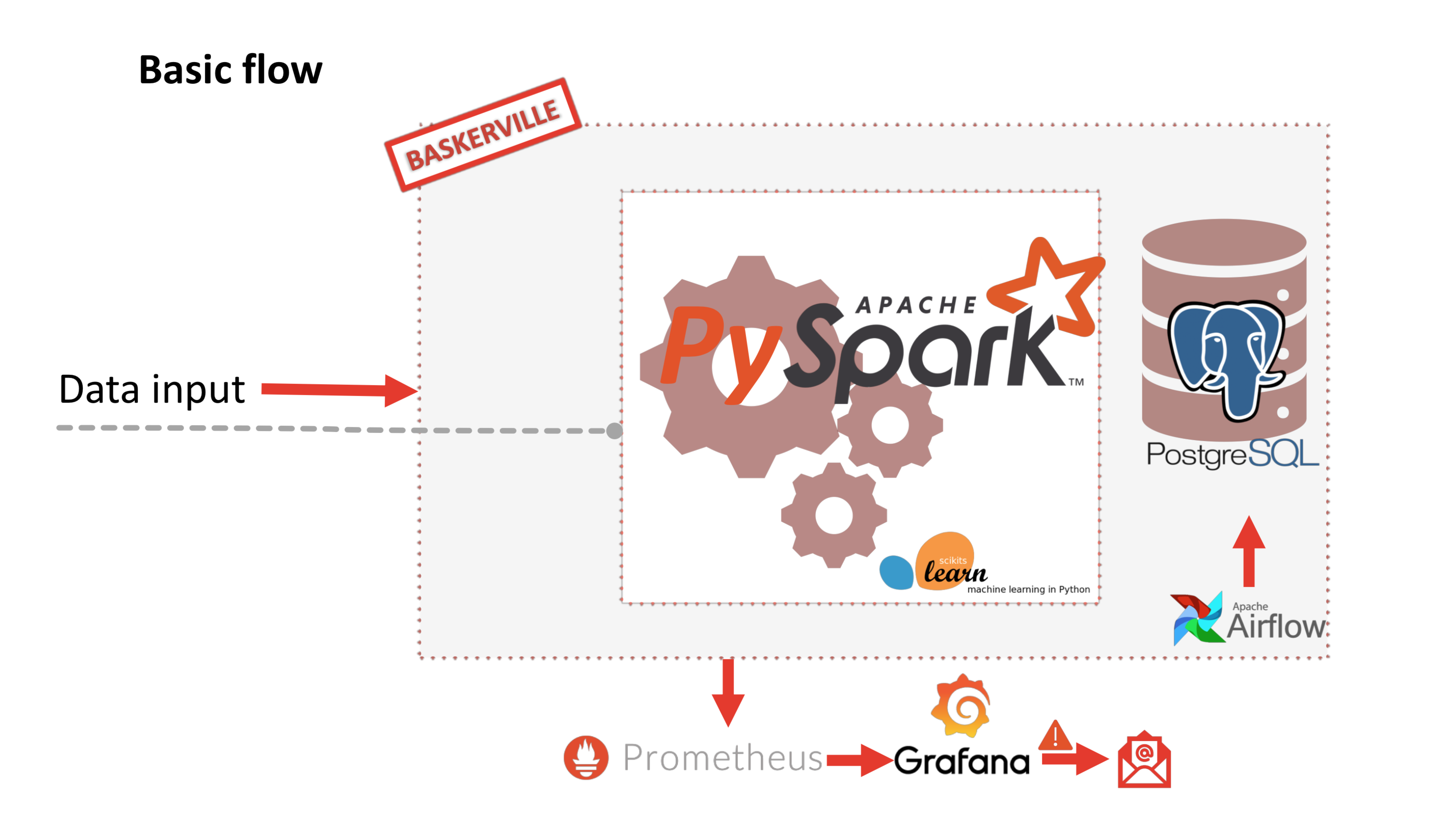

For the Machine Learning:

- Pyspark ML

- Isolation Forest (a slightly modified version)

- Scikit Learn (not actively used because of performance issues)

- Python >= 3.6 (ideally 3.6 because there have been some issues with 3.7 testing and numpy in the past)

- Postgres 10

- If you want to use the IForest model, then https://github.com/titicaca/spark-iforest is required (pyspark_iforest.ml)

- Java 8 needs to be in place (and in PATH) for Spark (Pyspark version >=2.4.4) to work

- The required packages in requirements.txt

- Tests need pytest, mock and spark-testing-base

- For the Elastic Search pipeline: access to the esretriever repository

- Optionally: access to the Deflect analytics ecosystem repository (to run Baskerville dockerized components like Postgres, Kafka, Prometheus, Grafana etc).

For Baskerville to be fully functional we need to install the following:

# clone and install spark-iforest

git clone https://github.com/titicaca/spark-iforest

cd spark-iforest/python

pip install .

# clone and install esretriever - for the ElasticSearch pipeline

cd ../../

git clone https://github.com/equalitie/esretriever.git

cd esretriever

pip install .

# Finally, clone and install baskerville

cd ../

git clone https://github.com/equalitie/baskerville.git

cd baskerville

pip install .[test]

Note: In Windows you might have to install pypandoc first for pyspark to install.

It is good practice to use environment variables for the configuration. The following can be set:

cd baskerville

# must be set:

export BASKERVILLE_ROOT=$(pwd) # full path to baskerville folder

export DB_HOST=the db host (docker ip if local)

export DB_PORT=5432

export DB_USER=postgres user

export DB_PASSWORD=secret

# optional - pipeline dependent

export ELK_USER=elasticsearch

export ELK_PASSWORD=changeme

export ELK_HOST=the elasticsearch host:port (localhost:9200)

export KAFKA_HOST=kafka host:port (localhost:9092)

A basic configuration for running the raw log pipeline is the following - rename baskerville/conf/conf_rawlog_example_baskerville.yaml to baskerville/conf/baskerville.yaml:

---

database:

name: baskerville # the database name

user: !ENV ${DB_USER}

password: !ENV '${DB_PASSWORD}'

type: 'postgres'

host: !ENV ${DB_HOST}

port: !ENV ${DB_PORT}

maintenance: # Optional, for data partitioning and archiving

partition_table: 'request_sets' # default value

partition_by: week # partition by week or month, default value is week

partition_field: created_at # which field to use for the partitioning, this is the default value, can be omitted

strict: False # if False, then for the week partition the start and end date will be changed to the start and end of the respective weeks. If true, then the dates will remain unchanged. Be careful to be consistent with this.

data_partition: # Optional: Define the period to create partitions for

since: 2020-01-01 # when to start partitioning

until: "2020-12-31 23:59:59" # when to stop partitioning

engine:

storage_path: !ENV '${BASKERVILLE_ROOT}/data'

raw_log:

paths: # optional, a list of logs to parse - they will be parsed subsequently

- !ENV '${BASKERVILLE_ROOT}/data/samples/test_data_1k.json' # sample data to run baskerville raw log pipeline with

cache_expire_time: 604800 # sec (604800 = 1 week)

model_id: -1 # optional, -1 returns the latest model in the database

extra_features: # useful when we need to calculate more features than the model requests or when there is no model

- css_to_html_ratio # feature names have the following convention: class FeatureCssToHtmlRatio --> 'css_to_html_ratio'

- image_to_html_ratio

- js_to_html_ratio

- minutes_total

- path_depth_average

- path_depth_variance

- payload_size_average

data_config:

schema: !ENV '${BASKERVILLE_ROOT}/data/samples/sample_log_schema.json'

logpath: !ENV '${BASKERVILLE_ROOT}/baskerville.log'

log_level: 'ERROR'

spark:

app_name: 'Baskerville' # the application name - can be changed for two different runs - used by the spark UI

master: 'local' # the ip:port of the master node, e.g. spark://someip:7077 to submit to a cluster

parallelism: -1 # controls the number of tasks, -1 means use all cores - used for local master

log_level: 'INFO' # spark logs level

storage_level: 'OFF_HEAP' # which strategy to use for storing dfs - valid values are the ones found here: https://spark.apache.org/docs/2.4.0/api/python/_modules/pyspark/storagelevel.html default: OFF_HEAP

jars: !ENV '${BASKERVILLE_ROOT}/data/jars/postgresql-42.2.4.jar,${BASKERVILLE_ROOT}/data/spark-iforest-2.4.0.jar' # or /path/to/jars/mysql-connector-java-8.0.11.jar

spark_driver_memory: '6G' # depends on your dataset and the available ram you have. If running locally 6 - 8 GB should be a good choice, depending on the amount of data you need to process

metrics_conf: !ENV '${BASKERVILLE_ROOT}/data/spark.metrics' # Optional: required only to export spark metrics

jar_packages: 'com.banzaicloud:spark-metrics_2.11:2.3-2.0.4,io.prometheus:simpleclient:0.3.0,io.prometheus:simpleclient_dropwizard:0.3.0,io.prometheus:simpleclient_pushgateway:0.3.0,io.dropwizard.metrics:metrics-core:3.1.2' # required to export spark metrics

jar_repositories: 'https://raw.github.com/banzaicloud/spark-metrics/master/maven-repo/releases' # Optional: Required only to export spark metrics

event_log: True

serializer: 'org.apache.spark.serializer.KryoSerializer'

In baskerville/conf/conf_example_baskerville.yaml you can see all the possible configuration options.

Example of configurations for the other pipelines:

- kafka: baskerville/conf/conf_kafka_example_baskerville.yaml

- es: baskerville/conf/conf_es_example_baskerville.yaml

- training: baskerville/conf/conf_training_example_baskerville.yaml
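The configuration files rely on `!ENV` values such as `${BASKERVILLE_ROOT}/data` being filled in from environment variables. As a rough sketch of how such placeholders could be resolved (an assumption for illustration, not Baskerville's actual loader; the function name `resolve_env` is hypothetical), using only the standard library:

```python
import os
import re

# Matches ${NAME} placeholders as used in the example configuration
ENV_PATTERN = re.compile(r"\$\{([^}]+)\}")


def resolve_env(value, environ=None):
    """Replace every ${NAME} in value with the matching environment variable.

    Raises KeyError if a referenced variable is not set, so that a missing
    setting is noticed at load time rather than at connection time.
    """
    environ = os.environ if environ is None else environ

    def replace(match):
        name = match.group(1)
        if name not in environ:
            raise KeyError(f"environment variable {name} is not set")
        return environ[name]

    return ENV_PATTERN.sub(replace, value)


# Example values only; a real run would read os.environ
example_env = {"BASKERVILLE_ROOT": "/opt/baskerville", "DB_HOST": "127.0.0.1"}
storage_path = resolve_env("${BASKERVILLE_ROOT}/data", example_env)
db_host = resolve_env("${DB_HOST}", example_env)
```

Failing early on a missing variable is a design choice of this sketch; it makes it easier to spot a mismatch between exported variable names and the names referenced in the YAML file.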

There are two ways to run Baskerville: a pure Python one, or using spark-submit. Both are detailed below.

The full set of options:

usage: main.py [-h] [-s] [-e] [-t] [-c CONF_FILE] pipeline

positional arguments:

pipeline Pipeline to use: es, rawlog, or kafka

optional arguments:

-h, --help show this help message and exit

-s, --simulate Simulate real-time run using kafka

-e, --startexporter Start the Baskerville Prometheus exporter at the

specified in the configuration port

-t, --testmodel Add a test model in the models table

-c CONF_FILE, --conf CONF_FILE

Path to config file

- For the python approach (which I find easier when running locally):

cd baskerville/src/baskerville

python3 main.py [which pipeline to use] [should baskerville register and export metrics? if yes use the -e flag]

# which means:

python3 main.py [kafka||rawlog||es] [-e] [-t] [-s]

Note: you can replace python3 main.py with baskerville like this:

baskerville [kafka||rawlog||es] [-e] [-t] [-s]

- And for spark-submit (which is usually used when you want to submit a Baskerville Application to a cluster):

cd baskerville

export BASKERVILLE_ROOT=$(pwd)

spark-submit --jars $BASKERVILLE_ROOT/data/jars/[relevant jars like postgresql-42.2.4.jar,spark-streaming-kafka-0-8-assembly_2.11-2.3.1.jar etc] --conf [spark configurations if any - note that these will override the baskerville.yaml configurations] $BASKERVILLE_ROOT/src/baskerville/main.py [kafka||rawlog||es] [-e] -c $BASKERVILLE_ROOT/conf/baskerville.yaml (or the absolute path to your configuration)

Examples:

cd baskerville

export BASKERVILLE_ROOT=$(pwd) # or full path to baskerville

# minimal spark-submit for the raw logs pipeline:

spark-submit --jars $BASKERVILLE_ROOT/data/jars/postgresql-42.2.4.jar --conf spark.memory.offHeap.enabled=true --conf spark.memory.offHeap.size=2g $BASKERVILLE_ROOT/src/baskerville/main.py rawlog -c $BASKERVILLE_ROOT/conf/baskerville.yaml

# or

spark-submit --packages org.postgresql:postgresql:42.2.4 --conf spark.memory.offHeap.enabled=true --conf spark.memory.offHeap.size=2g $BASKERVILLE_ROOT/src/baskerville/main.py rawlog -c $BASKERVILLE_ROOT/conf/baskerville.yaml

# minimal spark-submit for the elastic search pipeline:

spark-submit --jars $BASKERVILLE_ROOT/data/jars/postgresql-42.2.4.jar,$BASKERVILLE_ROOT/data/jars/elasticsearch-spark-20_2.11-5.6.5.jar --conf spark.memory.offHeap.enabled=true --conf spark.memory.offHeap.size=2g $BASKERVILLE_ROOT/src/baskerville/main.py es -c $BASKERVILLE_ROOT/conf/baskerville.yaml

# minimal spark-submit for the kafka pipeline:

spark-submit --jars $BASKERVILLE_ROOT/data/jars/postgresql-42.2.4.jar,$BASKERVILLE_ROOT/data/jars/spark-streaming-kafka-0-8-assembly_2.11-2.3.1.jar --conf spark.memory.offHeap.enabled=true --conf spark.memory.offHeap.size=2g $BASKERVILLE_ROOT/src/baskerville/main.py kafka -c $BASKERVILLE_ROOT/conf/baskerville.yaml

# note the spark.memory.offHeap.size does not have to be 2G and it depends on the size of your data

The paths in spark-submit must be absolute and accessible from all the workers.
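Since every path passed to spark-submit has to be absolute, it can help to assemble the command programmatically and check the paths first. The following is a small sketch under that assumption; the helper `build_spark_submit` is hypothetical and only mirrors the directory layout used in the examples above.

```python
import posixpath
import shlex


def build_spark_submit(root, pipeline, jars, conf_file, spark_conf=None):
    """Return the spark-submit argument list for a Baskerville run.

    root      -- absolute path of the baskerville folder (BASKERVILLE_ROOT)
    pipeline  -- pipeline name passed to main.py, e.g. 'rawlog'
    jars      -- jar file names located under <root>/data/jars
    conf_file -- absolute path of the baskerville.yaml to use
    """
    jar_paths = [posixpath.join(root, "data", "jars", jar) for jar in jars]
    for path in jar_paths + [conf_file]:
        # Relative paths would be resolved differently on each worker
        if not posixpath.isabs(path):
            raise ValueError(f"path must be absolute: {path}")

    command = ["spark-submit", "--jars", ",".join(jar_paths)]
    for key, value in (spark_conf or {}).items():
        command += ["--conf", f"{key}={value}"]
    main_py = posixpath.join(root, "src", "baskerville", "main.py")
    command += [main_py, pipeline, "-c", conf_file]
    return command


cmd = build_spark_submit(
    "/opt/baskerville",
    "rawlog",
    ["postgresql-42.2.4.jar"],
    "/opt/baskerville/conf/baskerville.yaml",
    {"spark.memory.offHeap.enabled": "true", "spark.memory.offHeap.size": "2g"},
)
# Shell-safe string, e.g. for logging or copy-pasting
cmd_line = " ".join(shlex.quote(arg) for arg in cmd)
```

The list form (`cmd`) can be handed to `subprocess.run` directly, which avoids shell quoting issues.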

Note for Windows:

In Windows, Spark might not initialize. If so, download the appropriate winutils.exe from https://github.com/steveloughran/winutils and set $HADOOP_HOME as follows:

mkdir c:\hadoop\bin

export HADOOP_HOME=c:\hadoop

cp $HOME\Downloads\winutils.exe $HADOOP_HOME\bin

For Baskerville kafka you'll need:

- Kafka

- Zookeeper

- Postgres

- Prometheus [optional]

- Grafana [optional]

For Baskerville rawlog you'll need:

- Postgres

- Elastic Search

- Prometheus [optional]

- Grafana [optional]

For Baskerville es you'll need:

- Postgres

- Elastic Search

- Prometheus [optional]

- Grafana [optional]

An ElasticSearch service is not provided.

For Baskerville training you'll need:

- Postgres

- Prometheus [optional]

- Grafana [optional]

Under baskerville/container there is a Dockerfile that sets up the appropriate versions of Java and Python, and then sets up Baskerville.

To run this, run docker-compose up in the directory where the docker-compose.yaml is.

Note that you will have to provide the relevant environment variables as defined in the docker-compose file under args.

An easy way to do this is to use a .env file. Rename the dot_enf_file to .env and modify it accordingly:

# must be set:

BASKERVILLE_BRANCH=e.g. master, develop etc, the branch to pull from

DB_HOST=the db host (docker ip if local)

DB_PORT=5432

DB_USER=postgres

DB_PASSWORD=secret

# optional - pipeline dependent

ELK_USER=elasticsearch

ELK_PASSWORD=changeme

ELK_HOST=the elasticsearch host (docker ip if local):port (default port is 9200)

KAFKA_HOST=kafka ip (docker ip if local):port (default:9092)

The docker ip can be retrieved by $(ipconfig getifaddr en0), assuming en0 is your active network interface.

The ${DOCKER_IP} is used when the systems are running on your local docker. Otherwise, just use the appropriate environment variable.

E.g. - ELK_HOST=${ELK_HOST}, where ${ELK_HOST} is set in .env to the ip of the elasticsearch instance.

The command part is how you want baskerville to run. E.g. for the rawlog pipeline - with metrics exporter:

command: python ./main.py -c /app/baskerville/conf/baskerville.yaml rawlog -e

Once you have test data in Postgres, you can train:

command: python ./main.py -c /app/baskerville/conf/baskerville.yaml training

Or use the provided test model:

command: python ./main.py -c /app/baskerville/conf/baskerville.yaml rawlog -e -t

And so on. See the How to run section for more options.

Make sure you have a Postgres instance running. You can use the deflect-analytics-ecosystem's docker-compose to spin up a Postgres instance.

In deflect-analytics-ecosystem you can find a docker-compose that creates all the aforementioned components and runs Baskerville too. It should be the simplest way to test Baskerville out. To launch the relevant services, comment out what you don't need from the docker-compose.yaml and run

docker-compose up

in the directory with the docker-compose.yaml file.

Note: For the Kafka service, before you run docker-compose up set:

export DOCKER_KAFKA_HOST=current-local-ip or $(ipconfig getifaddr en0) # where en0 is your current active interface

The simplest way to verify that a run completed successfully is to check the database.

-- Given that baskerville is the database name defined in the configuration:

SELECT count(id) from request_sets where id_runtime in (select max(id) from runtimes); -- if you used the test data, it should be 1000 after a full successful execution

SELECT * FROM baskerville.request_sets limit 10; -- fields should be complete, e.g. features should be something like the following

Request_set features example:

{

"top_page_to_request_ratio": 1,

"response4xx_total": 0,

"unique_path_total": 2,

"request_interval_variance": 0,

"unique_path_to_request_ratio": 1,

"image_total": 2,

"css_total": 0,

"js_total": 0,

"css_to_html_ratio": 0,

"unique_query_to_unique_path_ratio": 0,

"unique_ua_rate": 1,

"top_page_total": 2,

"js_to_html_ratio": 0,

"request_total": 2,

"image_to_html_ratio": 200,

"request_interval_average": 0,

"unique_path_rate": 1,

"minutes_total": 0,

"unique_query_rate": 0,

"html_total": 0,

"path_depth_variance": 50,

"path_depth_average": 5,

"unique_query_total": 0,

"unique_ua_total": 2,

"payload_size_average": 10.40999984741211,

"response4xx_to_request_ratio": 0,

"payload_size_log_average": 9.250617980957031

}

Grafana is a metrics visualization web application that can be configured to display several dashboards with charts, raise alerts when a metric crosses a user-defined threshold, and notify through mail or other means. Within Baskerville, under data/metrics, there is a dashboard that can be imported into Grafana and that presents the statistics of the Baskerville engine in a customisable manner. It is intended to be the principal visualisation and alerting tool for incoming Deflect traffic, displaying metrics in graphical form.

Prometheus is the metric storage and aggregator that provides Grafana with the charts data.

Besides the Spark UI, which is usually under http://localhost:4040, there is a set of metrics for the Baskerville engine, set up using the Python Prometheus library. To see those metrics, include the -e flag and go to the configured localhost port (http://localhost:8998/metrics by default). You will need a configured Prometheus instance and a Grafana instance to be able to visualize them using the auto generated baskerville dashboard, which is saved in the data directory and can be imported in Grafana.

There is also an Anomalies Dashboard and a Kafka Dashboard under data/metrics.

To view the spark generated metrics, you have to include the configuration for the jar packages:

metrics_conf: !ENV '${BASKERVILLE_ROOT}/data/metrics/spark.metrics'

jar_packages: 'io.prometheus:simpleclient:0.3.0,io.prometheus:simpleclient_dropwizard:0.3.0,io.prometheus:simpleclient_pushgateway:0.3.0,io.dropwizard.metrics:metrics-core:3.1.2,com.banzaicloud:spark-metrics_2.11:2.3-2.0.4'

jars_repositories: 'https://raw.github.com/banzaicloud/spark-metrics/master/maven-repo/releases'

And also have a Prometheus push gateway set up. You can use the deflect-analytics-ecosystem's docker-compose for that.

Unit Tests: Basic unittests for the features and the pipelines have been implemented. To run them:

python3 -m pytest tests/

Note: Many of the tests have to use a spark session, so unfortunately it will take some time for all to complete. (~=2 minutes currently)

Functional Tests: No functional tests exist yet. They will be added as the structure stabilizes.

We use pdoc3 to generate docs for Baskerville under the baskerville/docs folder.

# use --force to overwrite docs

pdoc3 --html --force --output-dir docs baskerville

Then open [docs/baskerville/index.html](docs/baskerville/index.html) with a browser.

- Runtime: The time and details for each time Baskerville runs, from start to finish of a Pipeline. E.g. start time, number of subsets processed etc.

- Model: The Machine Learning details that are stored in the database.

- Anomaly detector: The structure that holds the ML related steps, like the algorithm, the respective scaler etc. The current algorithm is Isolation Forest (scikit or pyspark implementation). OneClassSVM was a candidate, but it was slow and the results were less accurate.

- Request set: A request set is the behaviour (the characteristics of the requests being made) of a specific IP towards a specific target for a certain amount of time (runtime).

- Subset: Given that a request set has the duration of a runtime, the subset is the behaviour of the IP-to-target for a specific time window (time_bucket).

- Time bucket: It is used to define how often Baskerville should consume and process logs. The default value is 120 (seconds).
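To make the time bucket definition concrete, here is a small sketch that assigns request timestamps to 120-second windows. Aligning the windows to the Unix epoch is an assumption made for this example; Baskerville may anchor its windows differently (for instance to the start of a runtime).

```python
from datetime import datetime, timezone

TIME_BUCKET_SECONDS = 120  # default time bucket mentioned above


def bucket_start(timestamp, bucket=TIME_BUCKET_SECONDS):
    """Return the start of the time bucket that contains timestamp."""
    epoch = int(timestamp.timestamp())
    return datetime.fromtimestamp(epoch - epoch % bucket, tz=timezone.utc)


t1 = datetime(2020, 1, 1, 12, 0, 30, tzinfo=timezone.utc)
t2 = datetime(2020, 1, 1, 12, 1, 59, tzinfo=timezone.utc)
t3 = datetime(2020, 1, 1, 12, 2, 0, tzinfo=timezone.utc)

# t1 and t2 fall in the same subset, t3 opens the next one
same_subset = bucket_start(t1) == bucket_start(t2)
next_subset = bucket_start(t3)
```

Requests from the same IP-target pair that share a bucket start belong to the same subset.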

The main Baskerville engine consumes web logs and uses them to compute request sets (i.e. the groups of requests made by each IP-host pair) and extract the request set features. It applies a trained novelty detection algorithm to predict whether each request set is normal or anomalous within the current time window. It saves the request set features and predictions to the Baskerville storage database. It can also cross-reference incoming IP addresses with attacks logged in the database, to determine if a label (known malicious or known benign) can be applied to the request set.

Each request-set is divided into subsets. Subsets have a two-minute length (configurable), and the request set features (and prediction) are updated at the end of each subset using a feature-specific update method (discussed here).

For nonlinear features, the feature value will be dependent on the subset length, so for this reason, logs are processed in two-minute subsets even when not being consumed live. This is also discussed in depth in the feature document above.

The Baskerville engine utilises Apache Spark, an analytics framework designed for large-scale data processing. Spark was chosen to ensure that the engine is efficient enough to consume and process web logs in real time, and thus run continuously as part of the Deflect ecosystem.

There are four main pipelines. Three have to do with the different kinds of input and the fourth one with the ML.

Baskerville is designed to consume web logs from various sources in predefined intervals (time bucket set to 120 seconds by default).

- Raw log (rawlog): Read from JSON logs, process, store in Postgres.

- Elasticsearch (es): Read from Elasticsearch, splitting the period into smaller periods so as not to overload the ELK cluster, process, store in Postgres (optionally store logs locally too).

- Kafka (kafka): Read from a topic every time_bucket seconds, process, store in Postgres.

- Training (training): The pipeline used to train the machine learning model. Reads preprocessed data from Postgres, trains, saves model.

Prediction is optional in the first 3 pipelines - which means you may run the pipelines only to process the data, e.g. historic data, and then use the training pipeline to train/ update the model.
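The Elastic Search pipeline splits the requested period into smaller periods so that each query stays small. A minimal sketch of such a split is shown below; the function name `split_period` and the two-hour step are illustrative assumptions, not the values Baskerville uses.

```python
from datetime import datetime, timedelta


def split_period(start, end, step):
    """Split [start, end) into consecutive chunks of at most step length."""
    if step <= timedelta(0):
        raise ValueError("step must be positive")
    chunks = []
    current = start
    while current < end:
        upper = min(current + step, end)  # the last chunk may be shorter
        chunks.append((current, upper))
        current = upper
    return chunks


# Five hours of logs, queried two hours at a time
chunks = split_period(
    datetime(2020, 1, 1, 0, 0),
    datetime(2020, 1, 1, 5, 0),
    timedelta(hours=2),
)
```

Each `(since, until)` pair can then be queried and processed on its own, which keeps the load on the cluster bounded.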

Because of the lack of a carefully curated labelled dataset, the approach being used here is to have an anomaly / novelty detector, like OneClassSVM or Isolation Forest trained on mostly normal traffic. We can train on data from days we know there have been no major incidents and still be accurate enough. More details here: Deflect Labs Report #5

The output of the prediction process is the prediction and an anomaly score to help indicate confidence in prediction. The output accompanies a specific request set, an IP-target pair for a specific (time_bucket) window.

Note: Isolation Forest was preferred to OneClassSVM because of speed and better accuracy.

Under src/baskerville/features you can find all the currently implemented features, like:

- Css to html ratio

- Image to html ratio

- Js to html ratio

- Minutes total

- Path depth average

- Path depth variance

- Payload size average

- Payload size log average

- Request interval average

- Request interval variance

- Request total

- Response 4xx to request ratio

- Top page to request ratio

- Unique path rate

- Unique path to request ratio

- Unique query rate

- Unique query to unique path ratio

and many more.
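The configuration refers to features by a name derived from the class name (class FeatureCssToHtmlRatio becomes 'css_to_html_ratio'). A short sketch of that convention follows; the helper `feature_name` is hypothetical, and the class names other than FeatureCssToHtmlRatio are assumed to follow the same pattern.

```python
import re


def feature_name(class_name):
    """Convert a feature class name to its configuration name.

    Drops the leading 'Feature' and converts CamelCase to snake_case.
    """
    prefix = "Feature"
    base = class_name[len(prefix):] if class_name.startswith(prefix) else class_name
    # Insert an underscore before every capital letter except the first
    return re.sub(r"(?<!^)(?=[A-Z])", "_", base).lower()


names = [
    feature_name("FeatureCssToHtmlRatio"),
    feature_name("FeaturePathDepthAverage"),           # assumed class name
    feature_name("FeatureResponse4xxToRequestRatio"),  # assumed class name
]
```

The resulting strings are the ones that would be listed under extra_features in the configuration.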

Most of the features are updateable, which means they take the past into consideration. For this purpose, we keep a request set cache for a predefined amount of time (1 week by default), where we store the details and feature vectors of previous request sets, to be used in the updating process. This is a two-layer cache: one layer has all the unique request sets (unique ip-host pairs) for the week, and the other has only the unique ip-host pairs and their respective details for the current time window. More on feature updating here.
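As an illustration of what "updateable" can mean, the sketch below merges the cached average and variance of a feature with the values from a new subset, without revisiting the raw requests. It uses the standard formulas for combining means and population variances; it is not taken from the Baskerville feature classes, and the cache layout is an assumption.

```python
def merge_mean(old_mean, old_n, new_mean, new_n):
    """Combine the averages of two groups of requests into one average."""
    return (old_mean * old_n + new_mean * new_n) / (old_n + new_n)


def merge_variance(old_var, old_mean, old_n, new_var, new_mean, new_n):
    """Combine two population variances using only means and counts."""
    total = old_n + new_n
    delta = new_mean - old_mean
    sum_sq = old_var * old_n + new_var * new_n + delta ** 2 * old_n * new_n / total
    return sum_sq / total


# Hypothetical cache: (ip, host) -> (mean, variance, count) of path depth so far.
# The cached entry comes from three earlier requests with depths 1, 2 and 3.
key = ("10.0.0.1", "example.org")
cache = {key: (2.0, 2.0 / 3.0, 3)}

# New subset for the same pair: depths 5 and 7 -> mean 6.0, variance 1.0
new_mean, new_var, new_n = 6.0, 1.0, 2

old_mean, old_var, old_n = cache[key]
cache[key] = (
    merge_mean(old_mean, old_n, new_mean, new_n),
    merge_variance(old_var, old_mean, old_n, new_var, new_mean, new_n),
    old_n + new_n,
)
```

After the update, the cached entry matches what would be computed over all five depths at once (mean 3.6, variance 4.64), which is why only the summary values need to be kept in the cache.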

- build spark image https://levelup.gitconnected.com/spark-on-kubernetes-3d822969f85b

wget https://archive.apache.org/dist/spark/spark-2.4.6/spark-2.4.6-bin-hadoop2.7.tgz

mkdir spark

mv spark-2.4.6-bin-hadoop2.7.tgz spark

tar -xvzf spark/spark-2.4.6-bin-hadoop2.7.tgz -C spark

export SPARK_HOME=/root/spark/spark-2.4.6-bin-hadoop2.7

alias spark-shell="$SPARK_HOME/bin/spark-shell"

$SPARK_HOME/bin/docker-image-tool.sh -r baskerville -t spark2.4.6 -p $SPARK_HOME/kubernetes/dockerfiles/spark/bindings/python/Dockerfile build

docker tag baskerville/spark-py:spark2.4.6 equalitie/baskerville:spark246

- build Baskerville worker image

docker build -t equalitie/baskerville:worker dockerfiles/worker/

- build the latest Baskerville image with your local changes

docker build -t equalitie/baskerville:latest .

docker push equalitie/baskerville:latest

- ES Retriever: https://github.com/equalitie/esretriever: A Spark wrapper to retrieve data from Elasticsearch

- Deflect Analysis Ecosystem: https://github.com/equalitie/deflect-analysis-ecosystem: Docker files for all the components baskerville might need.

- Baskerville client: https://github.com/equalitie/baskerville_client

- Implement full suite of unit and entity tests.

- Conduct model tuning and feature selection / engineering.

If there is an issue or a feature suggestion, please use the issue list with the appropriate labels.

The standard process is to create the issue, get feedback on it where necessary, create a branch named issue_issuenumber_short_description, implement the solution there along with the relevant tests, and then create an MR so that it can be code reviewed and merged.

This work is copyright (c) 2020, eQualit.ie inc., and is licensed under a Creative Commons Attribution 4.0 International License.