This is a re-implementation of NeRF-W, the NeRF variant from "NeRF in the Wild: Neural Radiance Fields for Unconstrained Photo Collections".

NeRF (Neural Radiance Fields) is a novel view synthesis method that produces realistic images of a 3D scene from new viewpoints, given a set of 2D views. A NeRF uses a neural network (an MLP) to implicitly represent the scene as a continuous 5D function (3D location and 2D viewing direction). This representation can capture the complex geometry and appearance of real scenes, such as reflections, shadows, and transparency. The NeRF takes a 5D coordinate as input and outputs the color and volume density of the scene at that point. It renders a novel view by querying many points along each camera ray and compositing them with volume rendering.
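The volume rendering step fits in a few lines of NumPy. Consider one ray with samples at depths t_i, densities σ_i and colors c_i. Its rendered color is C = Σ_i T_i (1 − exp(−σ_i δ_i)) c_i. Here δ_i is the distance to the next sample, and T_i = Π_{j<i} exp(−σ_j δ_j) is the transmittance, the probability that the ray reaches sample i unoccluded. This is a minimal sketch and the function name is illustrative:

```python
import numpy as np

def volume_render(sigmas, colors, t_vals):
    """Composite per-sample densities and colors along one ray.

    sigmas: (N,) volume densities at the N samples
    colors: (N, 3) RGB values at the samples
    t_vals: (N,) sorted sample depths along the ray
    Returns the rendered RGB color and the per-sample weights.
    """
    # Distance between adjacent samples; the last interval is treated as
    # effectively infinite, as in the original NeRF.
    deltas = np.append(np.diff(t_vals), 1e10)
    # Opacity of each segment: alpha_i = 1 - exp(-sigma_i * delta_i)
    alphas = 1.0 - np.exp(-sigmas * deltas)
    # Transmittance T_i = prod_{j<i} (1 - alpha_j)
    trans = np.cumprod(np.append(1.0, 1.0 - alphas[:-1]))
    weights = trans * alphas
    rgb = (weights[:, None] * colors).sum(axis=0)
    return rgb, weights
```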

What you need to train a NeRF:

- A set of images of the same scene from different viewpoints.

- Camera parameters for each image.

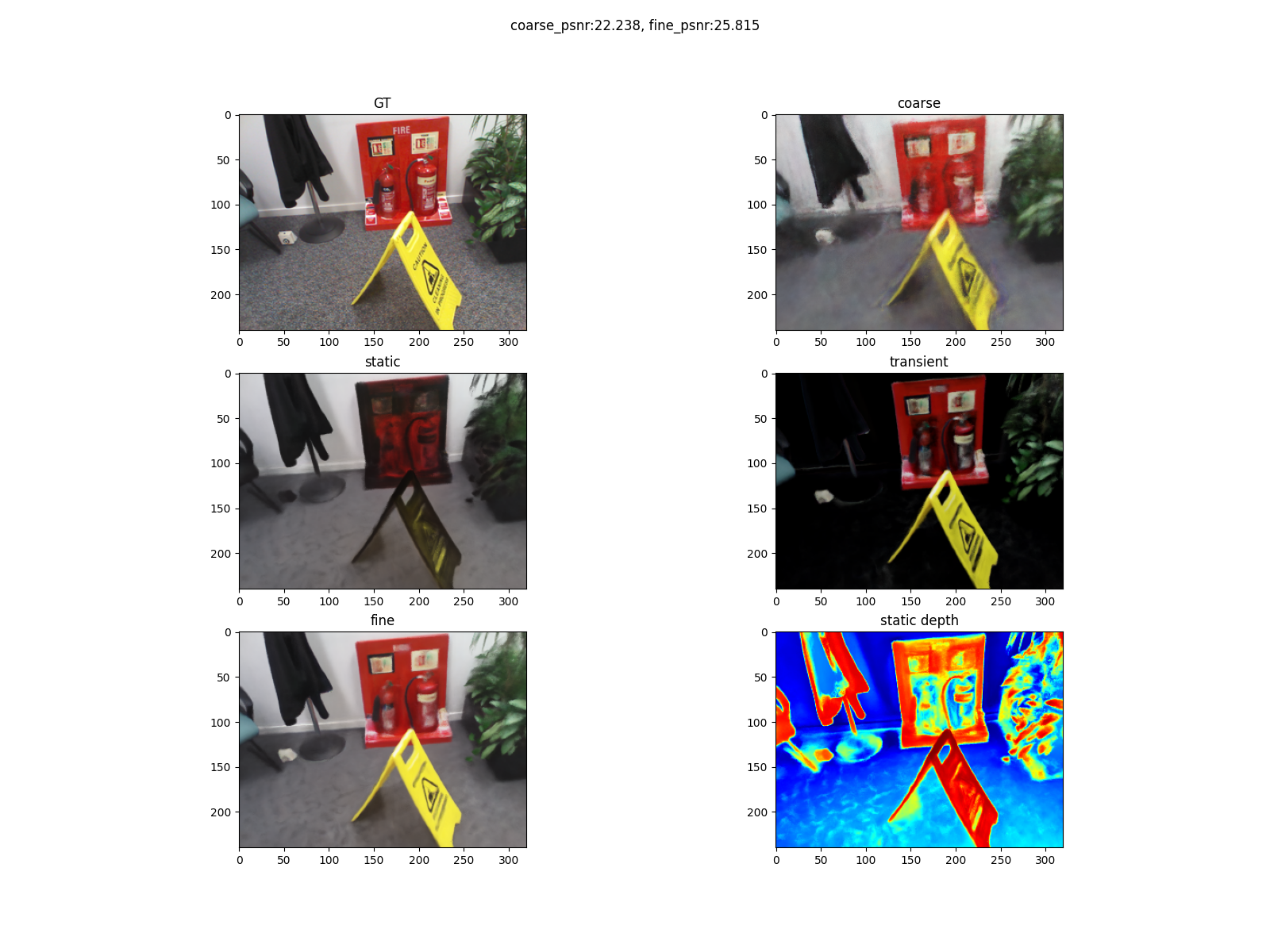
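The camera parameters are what turn each pixel into a ray. Below is a minimal NumPy sketch for a pinhole camera. It assumes an OpenCV-style camera frame (x right, y down, z forward) and a 3x4 camera-to-world matrix [R | t]. Many NeRF code bases use the OpenGL convention instead (y up, camera looking down −z), so check which one your poses follow. The function name is illustrative:

```python
import numpy as np

def get_rays(H, W, fx, fy, cx, cy, c2w):
    """Generate one ray per pixel for a pinhole camera.

    fx, fy: focal lengths in pixels; cx, cy: principal point in pixels
    c2w: (3, 4) camera-to-world matrix, OpenCV-style camera frame (assumption)
    Returns ray origins and unit directions, each of shape (H*W, 3).
    """
    # Pixel centers: u runs along the width, v along the height
    u, v = np.meshgrid(np.arange(W) + 0.5, np.arange(H) + 0.5)
    # Back-project pixels onto the z = 1 plane of the camera frame
    dirs_cam = np.stack([(u - cx) / fx, (v - cy) / fy, np.ones_like(u)], axis=-1)
    # Rotate into the world frame and normalize
    dirs_world = dirs_cam.reshape(-1, 3) @ c2w[:, :3].T
    dirs_world /= np.linalg.norm(dirs_world, axis=-1, keepdims=True)
    # Every ray starts at the camera center (the translation column)
    origins = np.broadcast_to(c2w[:, 3], dirs_world.shape).copy()
    return origins, dirs_world
```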

NeRF-W, proposed in 2021, is a NeRF variant that can be trained on unconstrained photo collections. Real-world photos taken in the wild vary in lighting, weather, camera parameters, and exposure time. They also often contain dynamic objects such as moving vehicles and pedestrians that partially or completely occlude the scene. A standard NeRF trained on such images suffers from artifacts like ghosting and blurring.

NeRF-W keeps an original-NeRF-style model for the static part of the scene. It then adds two per-image embeddings, an appearance embedding and a transient embedding, to handle these variations.

- Appearance embedding: a learned per-image vector that captures image-specific attributes of each view, such as illumination and exposure.

- Transient embedding: a learned per-image vector that conditions a separate transient MLP head, θ3. With it, NeRF-W models each image's transient objects with their own color and density (plus a per-point uncertainty), in the same way that the original NeRF models the static scene.
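Along each ray, NeRF-W has two sets of outputs at every sample:

- the static density and the appearance-conditioned color;
- the transient density, color and uncertainty β from θ3.

It composites both into one pixel color. The transmittance counts the density of both components in front of each sample, and the uncertainty is rendered from the transient part only. Below is a minimal NumPy sketch for a single ray. The function name and argument layout are illustrative, not this repo's API:

```python
import numpy as np

def nerfw_composite(sigma_s, rgb_s, sigma_t, rgb_t, beta_t, t_vals):
    """Composite static and transient samples along one ray (NeRF-W style).

    sigma_s, rgb_s: static density (N,) and color (N, 3)
    sigma_t, rgb_t, beta_t: transient density (N,), color (N, 3), uncertainty (N,)
    t_vals: (N,) sorted sample depths
    Returns the rendered color (3,) and the rendered uncertainty beta.
    """
    deltas = np.append(np.diff(t_vals), 1e10)
    alpha_s = 1.0 - np.exp(-sigma_s * deltas)
    alpha_t = 1.0 - np.exp(-sigma_t * deltas)
    # Transmittance uses the SUM of static and transient density before sample k
    accum = np.cumsum((sigma_s + sigma_t) * deltas)
    trans = np.exp(-np.append(0.0, accum[:-1]))
    rgb = (trans[:, None] * (alpha_s[:, None] * rgb_s + alpha_t[:, None] * rgb_t)).sum(axis=0)
    # The uncertainty is rendered from the transient component only
    beta = (trans * alpha_t * beta_t).sum()
    return rgb, beta
```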

Visual localization is the task of estimating the camera pose of a query image with respect to a known 3D scene.

After training a NeRF-W model, we can synthesize novel views of the scene from arbitrary camera poses. Quality is limited by the learned scene density and by how well the training views cover the scene. The synthesized views can then be used to train a visual localization model.
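One simple way to get candidate poses for synthesis is to perturb the training poses with small random rotations and translations. The sketch below does that for a 3x4 camera-to-world matrix. The function name and the ranges are hypothetical: `max_trans` is in scene units and `max_rot_deg` in degrees. LENS and this repo's scripts may sample poses differently:

```python
import numpy as np

def jitter_pose(c2w, rng, max_trans=0.5, max_rot_deg=10.0):
    """Return a randomly perturbed copy of a 3x4 camera-to-world pose [R | t]."""
    # Random unit rotation axis and a small random angle
    axis = rng.normal(size=3)
    axis /= np.linalg.norm(axis)
    angle = np.deg2rad(rng.uniform(-max_rot_deg, max_rot_deg))
    # Rodrigues' formula: R_delta = I + sin(a) K + (1 - cos(a)) K^2
    K = np.array([[0.0, -axis[2], axis[1]],
                  [axis[2], 0.0, -axis[0]],
                  [-axis[1], axis[0], 0.0]])
    R_delta = np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)
    new = np.array(c2w, dtype=float)  # copy, so the input pose is left untouched
    new[:, :3] = R_delta @ new[:, :3]  # rotate the camera orientation
    new[:, 3] += rng.uniform(-max_trans, max_trans, size=3)  # shift the camera center
    return new
```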

This part follows "LENS: Localization enhanced by NeRF synthesis".

- More on visual localization

Environment:

- Python 3.8.10

- Dependencies: `./requirements.txt`

Multi-GPU training is supported with the PyTorch DDP (DistributedDataParallel) strategy. I used 4 RTX 3080 GPUs to train the model, 1 GPU to test, and 4 GPUs to synthesize novel views.
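With DDP, every GPU runs its own process and sees a different shard of the training data. PyTorch's `torch.utils.data.DistributedSampler` pads the index list so it divides evenly across processes. With shuffling off, it then gives rank r every `world_size`-th index starting at r. The dependency-free sketch below mimics that split for intuition only. The function name is hypothetical, and whether this repo shards rays exactly this way is an assumption:

```python
import math

def shard_indices(num_samples, rank, world_size):
    """Indices handled by one DDP process (no shuffling; num_samples > 0)."""
    per_rank = math.ceil(num_samples / world_size)
    total = per_rank * world_size
    indices = list(range(num_samples))
    # Pad by wrapping around so every rank receives the same number of samples
    padding = total - num_samples
    indices += (indices * math.ceil(padding / num_samples))[:padding]
    # Rank r takes every world_size-th index starting at r
    return indices[rank:total:world_size]
```

Padding repeats a few samples, so every process runs the same number of steps, which keeps DDP's gradient synchronization in lockstep.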

Tips:

- The parameters `encode_a` and `encode_t` apply to the fine model.

- By default, the coarse model has no appearance embedding. You can enable it by setting the coarse model's `encode_a` to True.

- You can even add two or more fine models to `NeRFWSystem`: add more `CustomNeRFW` instances when initializing `NeRFWSystem`, and modify its forward function.

`nerfw_system_updated.py` is the latest version of the model. It supports appearance and transient embeddings for the coarse model, and it can be extended to multiple fine models. The released model was trained with the older `nerfw_system.py` structure.
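The coarse/fine split follows the original NeRF's hierarchical sampling:

1. The coarse model is evaluated at evenly spaced depths.
2. Its rendering weights define a piecewise-constant PDF along the ray.
3. The fine model's extra depths are drawn from that PDF.

The `--N_c 64 --N_f 128` flags in the training commands presumably set these two sample counts. Below is a NumPy sketch of the inverse-transform step for one ray. The function name is illustrative, and this is not necessarily how `NeRFWSystem` implements it:

```python
import numpy as np

def sample_pdf(bins, weights, n_samples, rng=None):
    """Inverse-transform sampling of new depths from coarse rendering weights.

    bins: (M+1,) edges of the M coarse intervals along one ray
    weights: (M,) coarse rendering weights, one per interval
    rng: numpy Generator for random samples; if None, use evenly spaced u
    Returns (n_samples,) depths, denser where the coarse weights are large.
    """
    weights = np.asarray(weights, dtype=float) + 1e-5  # avoid zero-probability bins
    pdf = weights / weights.sum()
    cdf = np.concatenate([[0.0], np.cumsum(pdf)])      # (M+1,), starts at 0
    u = np.linspace(0.0, 1.0, n_samples) if rng is None else rng.uniform(size=n_samples)
    # Find the interval whose CDF range contains each u ...
    idx = np.clip(np.searchsorted(cdf, u, side="right") - 1, 0, len(pdf) - 1)
    lo, hi = cdf[idx], cdf[idx + 1]
    # ... and interpolate linearly within that interval
    frac = np.clip((u - lo) / np.maximum(hi - lo, 1e-12), 0.0, 1.0)
    return bins[idx] + frac * (bins[idx + 1] - bins[idx])
```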

Steps (Cambridge Landmarks)

The dataset has 6 scenes: KingsCollege, OldHospital, ShopFacade, StMarysChurch, Street, and GreatCourt. Link: Cambridge Landmarks
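Each scene comes with `dataset_train.txt` and `dataset_test.txt` files. After a few header lines, each line lists an image followed by its camera position (X Y Z) and orientation quaternion (W P Q R). Below is a NumPy sketch that parses such lines into positions and rotation matrices, assuming that layout. The function names are illustrative. Before building rays, check against the repo's dataset class whether the quaternion is the world-to-camera or the camera-to-world rotation:

```python
import numpy as np

def quat_to_rotmat(w, x, y, z):
    """Convert a (w, x, y, z) quaternion to a 3x3 rotation matrix."""
    n = np.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n  # normalize first
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])

def parse_pose_lines(lines):
    """Parse 'image_path X Y Z W P Q R' lines, skipping header and blank lines."""
    poses = []
    for line in lines:
        tokens = line.split()
        if len(tokens) != 8:
            continue  # header or blank line
        try:
            values = [float(v) for v in tokens[1:]]
        except ValueError:
            continue  # not a pose line
        position = np.array(values[:3])
        rotation = quat_to_rotmat(*values[3:])
        poses.append((tokens[0], position, rotation))
    return poses
```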

Example:

```shell
python train_nerfw_DDP.py \
    --root_dir ./runs/nerf --exp_name exp \
    --batch_size 1024 --chunk 4096 --epochs 20 --lr 0.0005 \
    --num_gpus 4 \
    --img_downscale 3 \
    --data_root_dir $Cambridge_DIR --scene StMarysChurch \
    --use_cache False --if_save_cache True \
    --N_c 64 --N_f 128 \
    --perturb 1.0 \
    --encode_a True --encode_t True --a_dim 48 --t_dim 16 \
    --beta_min 0.1 --lambda_u 0.01
```

Tips:

- `encode_a` / `encode_t`: whether to use the appearance / transient embedding.

- On the first run, set `use_cache` to False and `if_save_cache` to True. The program then saves a cache file that speeds up training on later runs.

- Structure of the dataset:

```
Cambridge
├── StMarysChurch/KingsCollege/...
│   ├── seq1
│   │   ├── 000000.jpg
│   │   ├── 000001.jpg
│   │   ├── ...
│   ├── seq2/...
│   ├── dataset_train.txt
│   ├── dataset_test.txt
│   ├── cache
│   │   ├── rays cache files...
```

- How to resume training? Set `last_epoch` > 0 and set `ckpt` to the path of the checkpoint file, which is saved every `save_latest_freq` steps.

- See `./option/nerf_option.py` for more configuration options. TensorBoard logging is supported, and the logs are written to the experiment directory.
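The `--beta_min` and `--lambda_u` flags in the Cambridge command correspond to NeRF-W's uncertainty-aware loss. Take a ray with ground-truth color C, rendered color Ĉ, rendered uncertainty β, and transient densities σ^τ_k at its K samples. The paper's per-ray loss is ‖C − Ĉ‖² / (2β²) + log(β²)/2 + (λ_u/K) Σ_k σ^τ_k. Below is a NumPy sketch averaged over a batch of rays. The function name is hypothetical, and adding `beta_min` to the rendered β (rather than per sample) is an assumption. The coarse model's plain MSE term is omitted:

```python
import numpy as np

def nerfw_loss(rgb_pred, rgb_gt, beta, sigma_t, beta_min=0.1, lambda_u=0.01):
    """NeRF-W style loss for the fine model over a batch of R rays.

    rgb_pred, rgb_gt: (R, 3) rendered and ground-truth colors
    beta: (R,) rendered uncertainty per ray, before the beta_min floor
    sigma_t: (R, K) transient densities of the K samples on each ray
    """
    # Floor on beta so no ray's color error can be down-weighted without bound
    beta = beta + beta_min
    # Uncertainty-weighted color term: rays with a large beta contribute less
    color = (np.sum((rgb_pred - rgb_gt) ** 2, axis=-1) / (2.0 * beta ** 2)).mean()
    # log(beta^2) / 2 == log(beta): keeps beta from growing without bound
    beta_reg = np.log(beta).mean()
    # Sparsity penalty on transient density: lambda_u / K * sum_k, averaged over rays
    sigma_reg = lambda_u * sigma_t.mean()
    return color + beta_reg + sigma_reg
```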

Steps (7 Scenes)

The dataset has 7 scenes: Chess, Fire, Heads, Office, Pumpkin, Redkitchen, and Stairs.

Link: 7 scenes

Re-localization depth: 7 scenes re-localization depth

Example:

```shell
python train_nerfw_DDP.py \
    --root_dir ./runs/nerf --exp_name exp \
    --batch_size 1024 --chunk 4096 --epochs 20 --lr 0.0005 \
    --num_gpus 4 \
    --img_downscale 2 \
    --data_root_dir $SEVEN_SCENES_DIR --scene Fire \
    --use_cache False --if_save_cache True \
    --N_c 64 --N_f 128 \
    --perturb 1.0 \
    --encode_a True --encode_t True --a_dim 48
```

I haven't tried other datasets yet, but supporting them should be straightforward.

To add one, write a dataset class that prepares the rays, poses, etc. in the same format as the Cambridge or 7 Scenes datasets.
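Below is a hypothetical skeleton of such a class. It follows the `__len__`/`__getitem__` protocol that `torch.utils.data.Dataset` subclasses implement. It assumes the rays for each image are already computed, and it stores each ray as origin, direction, near and far (8 values). It also keeps the source image index so the per-image embeddings can be looked up. The class name and the keys `rays`, `rgbs` and `ts` are assumptions; match them to what the existing Cambridge and 7 Scenes dataset classes return:

```python
import numpy as np

class CustomRayDataset:
    """Hypothetical ray dataset: one item per pixel of every training image.

    images: list of (H, W, 3) arrays with values in [0, 1]
    rays_o, rays_d: lists of (H*W, 3) per-image ray origins and directions
    near, far: scalar depth bounds used for every ray (a simplification)
    """

    def __init__(self, images, rays_o, rays_d, near, far):
        all_rays, all_rgbs, all_ts = [], [], []
        for i, (img, o, d) in enumerate(zip(images, rays_o, rays_d)):
            n = o.shape[0]
            if img.shape[0] * img.shape[1] != n:
                raise ValueError(f"image {i}: pixel count does not match ray count")
            bounds = np.broadcast_to([near, far], (n, 2))
            # Each ray is stored as [origin (3), direction (3), near, far]
            all_rays.append(np.concatenate([o, d, bounds], axis=-1))
            all_rgbs.append(img.reshape(-1, 3))
            # Source image index, used to look up the per-image embeddings
            all_ts.append(np.full(n, i))
        self.rays = np.concatenate(all_rays).astype(np.float32)
        self.rgbs = np.concatenate(all_rgbs).astype(np.float32)
        self.ts = np.concatenate(all_ts)

    def __len__(self):
        return len(self.rays)

    def __getitem__(self, idx):
        return {"rays": self.rays[idx], "rgbs": self.rgbs[idx], "ts": self.ts[idx]}
```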

- `inference.ipynb`: visualization and evaluation code for a trained NeRF-W.

- `visualize_camera.py`: visualizes the camera poses of the Cambridge or 7 Scenes dataset and outputs a `camera_views.ply` file for MeshLab.

- `predict_sigma.py`, `select_novel_views` and `gene_synthesis_dataset`: used to generate the novel views for visual localization.
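A trained model's density can also screen candidate viewpoints for synthesis. The sketch below discards candidate camera centers that sit inside or very close to occupied space. It queries a density function at each center and at a few nearby points. The function name, the `density_fn` interface and the thresholds are hypothetical and not taken from `predict_sigma.py`:

```python
import numpy as np

def filter_free_space(centers, density_fn, threshold=1.0, radius=0.1, n_probe=8, seed=0):
    """Keep candidate camera centers whose neighborhood has low density.

    centers: (N, 3) candidate camera positions
    density_fn: callable mapping (M, 3) points to (M,) volume densities,
        e.g. a wrapper around a trained model's static density (hypothetical)
    Returns the kept centers and the boolean keep mask.
    """
    rng = np.random.default_rng(seed)
    # Probe the center itself plus n_probe points on a small sphere around it
    offsets = rng.normal(size=(n_probe, 3))
    offsets *= radius / np.linalg.norm(offsets, axis=1, keepdims=True)
    probes = np.vstack([np.zeros((1, 3)), offsets])       # (P, 3)
    points = centers[:, None, :] + probes[None, :, :]      # (N, P, 3)
    sigma = density_fn(points.reshape(-1, 3)).reshape(len(centers), -1)
    # A center is kept only if every probe point is (nearly) empty
    keep = sigma.max(axis=1) < threshold
    return centers[keep], keep
```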

This project mainly builds on the nerf_pl project; thanks to its author, @kwea123.