Chen Wang, Minpeng Liao, Zhongqiang Huang, Jinliang Lu, Junhong Wu, Yuchen Liu, Chengqing Zong, Jiajun Zhang

Institute of Automation, Chinese Academy of Sciences

Machine Intelligence Technology Lab, Alibaba DAMO Academy

Note: We have fixed a bug in modeling_blsp.py that caused slower convergence but had little impact on final performance. We will release the updated model and results as soon as possible.

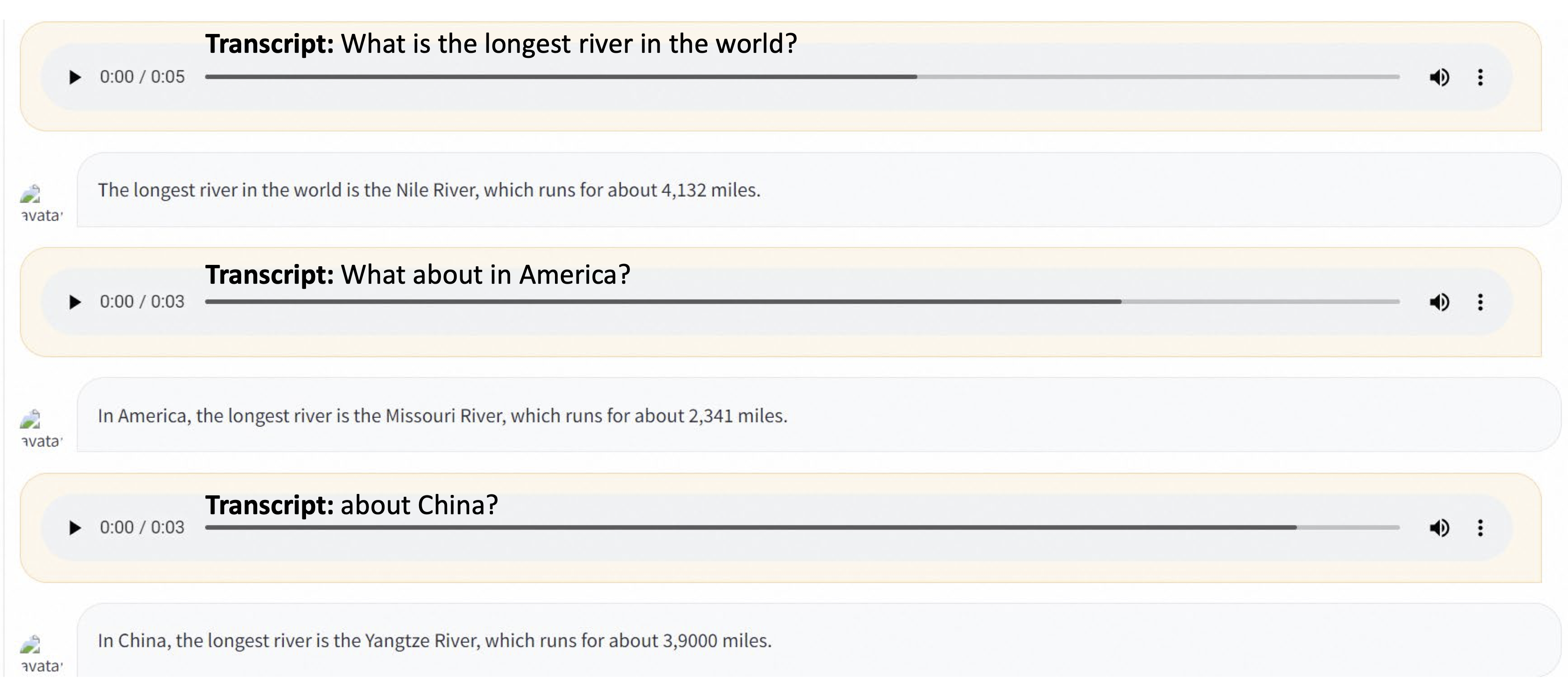

- BLSP extends the language capabilities of LLMs to speech, enabling interaction with LLMs through spoken language.

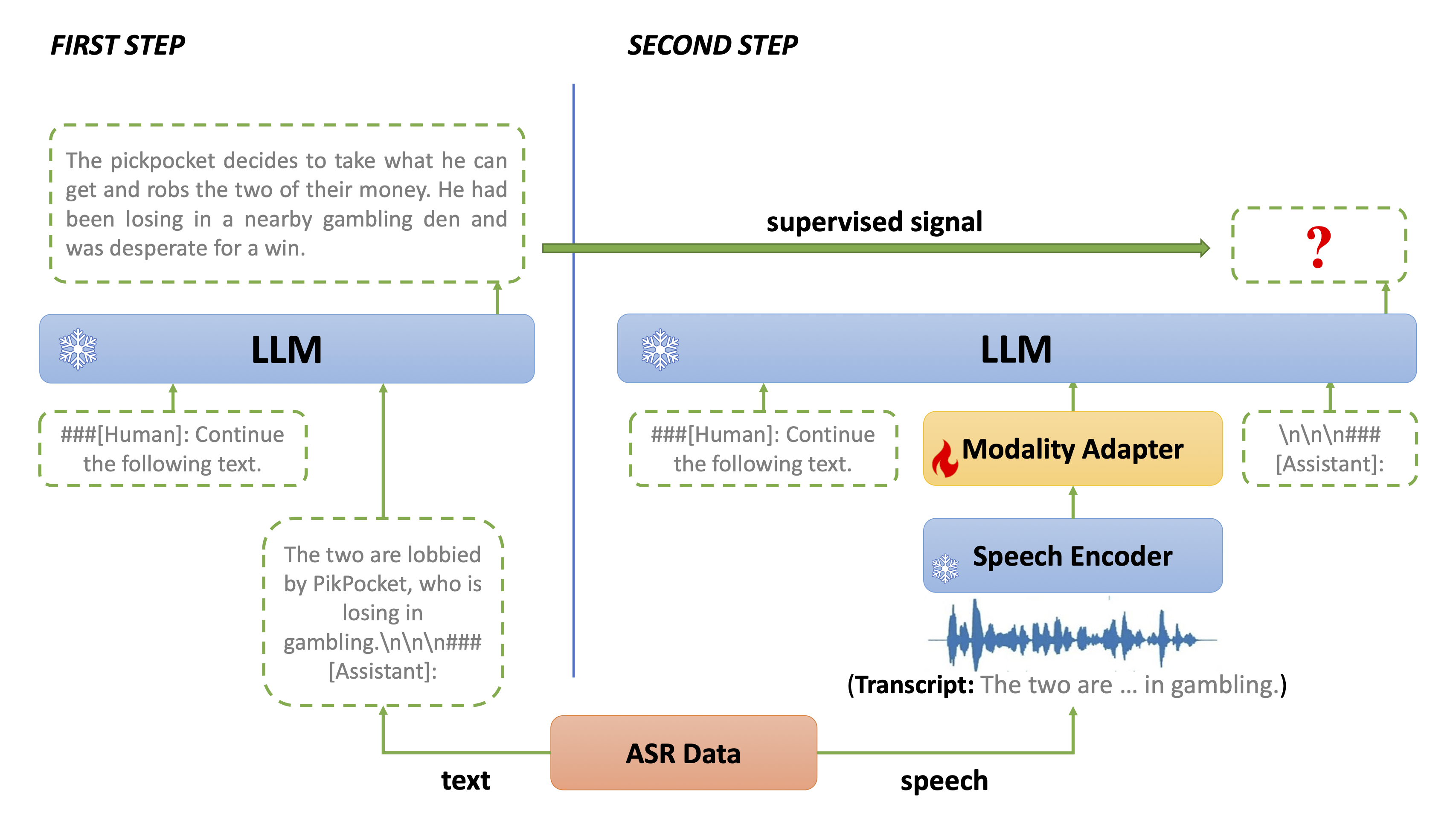

- We train BLSP via behavior alignment of continuation writing. Ideally, an LLM should behave the same way regardless of the input modality, whether it receives a speech segment or its transcript.

- In the first step, the LLM generates text using the transcript as a prefix, producing text continuations.

- In the second step, these text continuations serve as supervision signals for learning the modality adapter: the LLM is required to predict the text continuations given the speech segment.
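The two steps can be summarized with a short Python sketch of how the training targets are assembled. It is an illustration under stated assumptions, not the repository's code: build_alignment_examples, the llm_generate callable, the newline-separated prompt layout, and the record fields (audio, transcript, instruction, target) are hypothetical, and a speech segment is represented only by its file path. The instruction string is the one this README passes to the offline preprocessing command.

```python
from typing import Callable, Dict, List

# Instruction string taken from the offline preprocessing command in this README.
CONTINUE_INSTRUCTION = (
    "Continue the following text in a coherent and engaging style with less than 40 words."
)


def build_alignment_examples(
    pairs: List[Dict[str, str]],
    llm_generate: Callable[[str], str],
    instruction: str = CONTINUE_INSTRUCTION,
) -> List[Dict[str, str]]:
    """Hypothetical sketch of behavior-alignment data construction.

    Each input pair holds a speech file path ("audio") and its "transcript".
    """
    examples = []
    for pair in pairs:
        # Step 1: the text-only LLM continues the transcript
        # (the prompt layout here is an assumption).
        prompt = f"{instruction}\n{pair['transcript']}"
        continuation = llm_generate(prompt)
        # Step 2: the same continuation becomes the target when the model
        # receives the speech segment instead of the transcript.
        examples.append(
            {"audio": pair["audio"], "instruction": instruction, "target": continuation}
        )
    return examples


if __name__ == "__main__":
    # Toy stand-in for the LLM, for illustration only.
    fake_llm = lambda prompt: "The rain started soon after."
    data = [{"audio": "clip_0001.wav", "transcript": "The sky was getting darker"}]
    print(build_alignment_examples(data, fake_llm))
```

Because the target for the speech input is whatever the LLM itself wrote for the transcript, learning from these examples pushes the model toward the same behavior for both modalities.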

More examples with video presentations can be found on the project page.

All experiments are carried out in the following environment.

- Python==3.8

- torch==1.13, torchaudio==0.13.0, cuda==11.6

- transformers==4.31.0

- soundfile==0.12.1, openai-whisper

- datasets==2.12.0, accelerate==0.21.0, deepspeed==0.9.3

- evaluate==0.4.0, sentencepiece==0.1.99

- fire==0.5.0, gradio==3.41.2
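A quick way to compare an installed environment against the versions above is the standard-library importlib.metadata module (available from Python 3.8). The helper below is a hypothetical convenience, not part of the repository. It assumes that a pin such as torch==1.13 should accept any 1.13.x release and it ignores local build tags like +cu116; pip's own == matching is stricter. openai-whisper is left out because no version is pinned.

```python
from importlib import metadata
from typing import Callable, Dict, List

# Versions pinned in the environment list above (openai-whisper is unpinned and omitted).
PINNED = {
    "torch": "1.13",
    "torchaudio": "0.13.0",
    "transformers": "4.31.0",
    "soundfile": "0.12.1",
    "datasets": "2.12.0",
    "accelerate": "0.21.0",
    "deepspeed": "0.9.3",
    "evaluate": "0.4.0",
    "sentencepiece": "0.1.99",
    "fire": "0.5.0",
    "gradio": "3.41.2",
}


def matches(pinned: str, installed: str) -> bool:
    """Component-wise prefix match that ignores local build tags such as '+cu116'.

    Assumption: 'torch==1.13' is read as 'any 1.13.x release'.
    """
    base = installed.split("+", 1)[0]
    want = pinned.split(".")
    return base.split(".")[: len(want)] == want


def check_versions(
    pinned: Dict[str, str],
    lookup: Callable[[str], str] = metadata.version,
) -> List[str]:
    """Return one message per package that is missing or does not match its pin."""
    problems = []
    for name, want in pinned.items():
        try:
            have = lookup(name)
        except metadata.PackageNotFoundError:
            problems.append(f"{name}: not installed (want {want})")
            continue
        if not matches(want, have):
            problems.append(f"{name}: found {have}, want {want}")
    return problems


if __name__ == "__main__":
    for message in check_versions(PINNED) or ["all pinned packages match"]:
        print(message)
```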

Download the pretrained BLSP model link

We release the inference code for evaluation.

The input file must be in .jsonl format. Here is an example for the speech translation (ST) task; each line of the input file looks like:

```json
{"audio": "/home/data/eval/1.wav"}
```

Then run the generation code:

```bash
python3 blsp/generate.py \
    --input_file "test.jsonl" \
    --output_file "test_out.jsonl" \
    --blsp_model $blsp_path \
    --instruction "Please translate the following audio into German text."
```

You can try out our demo by running:

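```bash
export CUDA_VISIBLE_DEVICES=0
python blsp/chat_demo.py \
    --blsp_model $blsp_path
```

The training of BLSP consists of two stages: text instruction tuning of the LLM (stage 1) and behavior alignment of the modality adapter on ASR data (stage 2).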

- Stage 1: download the text instruction data Alpaca-52k to ~/data/alpaca_data.json and run the processing script:

```bash
mkdir -p ~/data/stage1
python data_process/prepare_alpaca.py \
    --input_file ~/data/alpaca_data.json \
    --output_file ~/data/stage1/train_alpaca.jsonl
```

- Obtain the Llama2-7B model and place it in ~/pretrained_models/llama2-7b-hf. Then run the training script to perform text instruction tuning:

```bash
export llama_path=~/pretrained_models/llama2-7b-hf
export DATA_ROOT=~/data/stage1
export SAVE_ROOT=~/checkpoints/stage1
bash blsp/scripts/train_stage1_ddp.sh
```

This step takes about 2 hours on 8 A100 GPUs.
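Both training scripts take their paths from environment variables, so an unset or mistyped path may only surface once a job starts. A minimal preflight check, written as our own hypothetical helper rather than anything shipped with BLSP, might look like this. It assumes that input variables (llama_path and DATA_ROOT here, plus whisper_path in stage 2) must point to existing paths, while SAVE_ROOT only needs to be set; if the training script does not create it, mkdir -p it beforehand.

```python
import os
from pathlib import Path
from typing import List, Mapping, Optional


def preflight(
    inputs: List[str],
    outputs: List[str],
    env: Optional[Mapping[str, str]] = None,
) -> List[str]:
    """Return a list of problems; an empty list means the variables look usable."""
    env = os.environ if env is None else env
    problems = []
    for name in inputs + outputs:
        value = env.get(name)
        if not value:
            problems.append(f"{name} is not set")
        elif name in inputs and not Path(value).expanduser().exists():
            # Inputs (model and data directories) must already exist.
            problems.append(f"{name}={value} does not exist")
        # Outputs such as SAVE_ROOT are only checked for being set.
    return problems


if __name__ == "__main__":
    # Stage 1 variables exported above.
    for issue in preflight(inputs=["llama_path", "DATA_ROOT"], outputs=["SAVE_ROOT"]):
        print(issue)
```

For stage 2, the corresponding call would be preflight(inputs=["llama_path", "whisper_path", "DATA_ROOT"], outputs=["SAVE_ROOT"]).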

- Stage 2: download the ASR datasets GigaSpeech, LibriSpeech, and Common Voice 2.0 to ~/data/gigaspeech, ~/data/librispeech, and ~/data/common_voice, respectively. Then run the processing scripts:

```bash
mkdir -p ~/data/stage2

python data_process/prepare_gigaspeech.py \
    --input_dir ~/data/gigaspeech \
    --output_file ~/data/stage2/train_gigaspeech.jsonl

python data_process/prepare_librispeech.py \
    --input_dir ~/data/librispeech \
    --output_file ~/data/stage2/train_librispeech.jsonl

python data_process/prepare_common_voice.py \
    --input_dir ~/data/common_voice \
    --output_file ~/data/stage2/train_common_voice.jsonl
```

- Use the stage 1 model to continue the transcripts of the ASR data. Here we take the GigaSpeech dataset as an example:

```bash
mkdir -p ~/data/stage2/labels

export CUDA_VISIBLE_DEVICES=0
python3 -u data_process/asr_text_generation.py continue_writing \
    --llm_path ~/checkpoints/stage1 \
    --manifest ~/data/stage2/train_gigaspeech.jsonl \
    --lab_dir ~/data/stage2/labels \
    --nshard 8 \
    --rank 0 &

# ... ranks 1 to 6 follow the same pattern ...

export CUDA_VISIBLE_DEVICES=7
python3 -u data_process/asr_text_generation.py continue_writing \
    --llm_path ~/checkpoints/stage1 \
    --manifest ~/data/stage2/train_gigaspeech.jsonl \
    --lab_dir ~/data/stage2/labels \
    --nshard 8 \
    --rank 7 &
```

- (Optional) We recommend processing the data offline and saving it to disk. This preprocessing step also filters out speech-text pairs that cannot be loaded by soundfile.

```bash
python blsp/src/speech_text_paired_dataset.py offline \
    --dataroot ~/data/stage2/labels \
    --manifest_files "*.jsonl" \
    --lm_path ~/checkpoints/stage1 \
    --instruction "Continue the following text in a coherent and engaging style with less than 40 words." \
    --num_proc 32
```

- Download whisper-small to ~/pretrained_models/whisper-small and then run the training script:

```bash
export llama_path=~/checkpoints/stage1
export whisper_path=~/pretrained_models/whisper-small
export DATA_ROOT=~/data/stage2/labels
export SAVE_ROOT=~/checkpoints/stage2
bash blsp/scripts/train_stage2_ddp.sh
```

The training process takes about 2.5 days on 8 A100 GPUs.
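In stage 2, continuation writing over GigaSpeech is split into eight shards (--nshard 8), each started by hand in the background on its own GPU. The hypothetical Python helper below builds the same per-rank commands from the flags shown for that step. When run with its own --launch flag (an assumption of this sketch, not a BLSP option), it starts them with subprocess and waits for all of them; otherwise it only prints the commands. It assumes the working directory is the repository root, as the relative script path implies.

```python
import os
import subprocess
import sys
from typing import List


def shard_command(rank: int, nshard: int, llm_path: str, manifest: str, lab_dir: str) -> List[str]:
    """Argument list for one shard, mirroring the continue_writing flags in this README."""
    return [
        "python3", "-u", "data_process/asr_text_generation.py", "continue_writing",
        "--llm_path", llm_path,
        "--manifest", manifest,
        "--lab_dir", lab_dir,
        "--nshard", str(nshard),
        "--rank", str(rank),
    ]


def launch_shards(nshard: int, llm_path: str, manifest: str, lab_dir: str) -> List[int]:
    """Start one process per shard, pinning shard r to GPU r, then wait for all of them."""
    procs = []
    for rank in range(nshard):
        env = dict(os.environ, CUDA_VISIBLE_DEVICES=str(rank))
        cmd = shard_command(rank, nshard, llm_path, manifest, lab_dir)
        procs.append(subprocess.Popen(cmd, env=env))
    # Equivalent of the shell's `wait` after the backgrounded (&) commands.
    return [p.wait() for p in procs]


if __name__ == "__main__":
    # subprocess runs without a shell, so "~" must be expanded explicitly.
    home = os.path.expanduser("~")
    args = dict(
        nshard=8,
        llm_path=os.path.join(home, "checkpoints/stage1"),
        manifest=os.path.join(home, "data/stage2/train_gigaspeech.jsonl"),
        lab_dir=os.path.join(home, "data/stage2/labels"),
    )
    if "--launch" in sys.argv:
        os.makedirs(args["lab_dir"], exist_ok=True)  # same effect as mkdir -p
        print("exit codes:", launch_shards(**args))
    else:
        # Dry run: print the per-GPU commands without starting them.
        for rank in range(args["nshard"]):
            cmd = shard_command(rank, args["nshard"], args["llm_path"], args["manifest"], args["lab_dir"])
            print(f"CUDA_VISIBLE_DEVICES={rank}", " ".join(cmd))
```

To label the other corpora, the same helper can be pointed at ~/data/stage2/train_librispeech.jsonl or ~/data/stage2/train_common_voice.jsonl. Waiting on every process also reports each shard's exit code, which the backgrounded shell commands do not show directly.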

- Our project is licensed under the Apache License 2.0.

- Our models are based on Llama2 and Whisper. If you use our models, please comply with the MIT License of Whisper and the license of Llama 2.