Marcos V. Conde, Gregor Geigle, Radu Timofte

Computer Vision Lab, University of Wuerzburg | Sony PlayStation, FTG

Video courtesy of Gradio (see their post about InstructIR). Also a shoutout to AK (see his tweet).

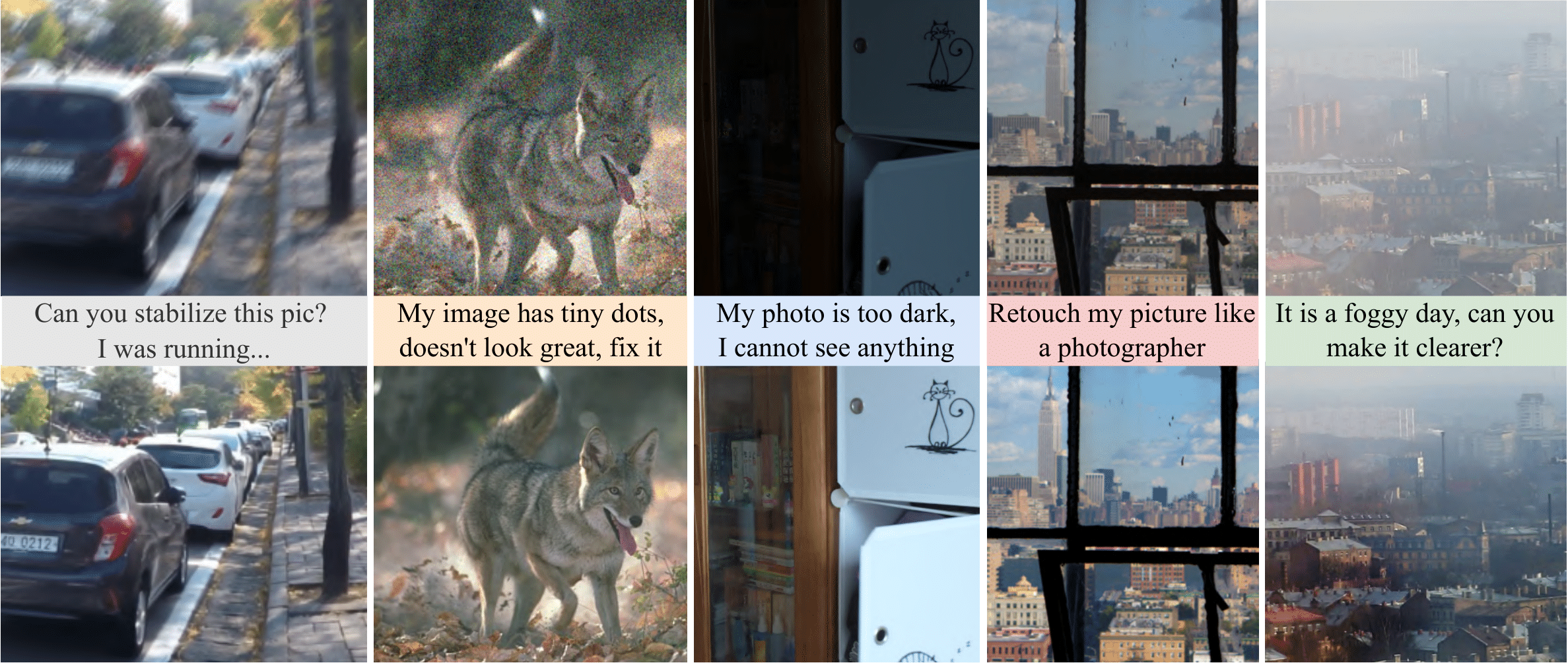

InstructIR takes as input an image and a human-written instruction for how to improve that image. The neural model performs all-in-one image restoration. InstructIR achieves state-of-the-art results on several restoration tasks including image denoising, deraining, deblurring, dehazing, and (low-light) image enhancement.

🚀 You can start with the demo tutorial.

Abstract

Image restoration is a fundamental problem that involves recovering a high-quality clean image from its degraded observation. All-In-One image restoration models can effectively restore images from various types and levels of degradation using degradation-specific information as prompts to guide the restoration model. In this work, we present the first approach that uses human-written instructions to guide the image restoration model. Given natural language prompts, our model can recover high-quality images from their degraded counterparts, considering multiple degradation types. Our method, InstructIR, achieves state-of-the-art results on several restoration tasks including image denoising, deraining, deblurring, dehazing, and (low-light) image enhancement. InstructIR improves performance by +1 dB over previous all-in-one restoration methods. Moreover, our dataset and results represent a novel benchmark for new research on text-guided image restoration and enhancement.

- Upload model weights and results for other InstructIR variants (3D, 5D).
- Download all the test datasets for all-in-one restoration.
- Check the instructions below to run eval_instructir.py and get all the metrics and results for all-in-one restoration.
- You can download all the qualitative results here: instructir_results.zip
- Upload models to HF 🤗 (download the models here)
- 🤗 Hugging Face Demo: try it now
- Google Colab Tutorial (check demo.ipynb)

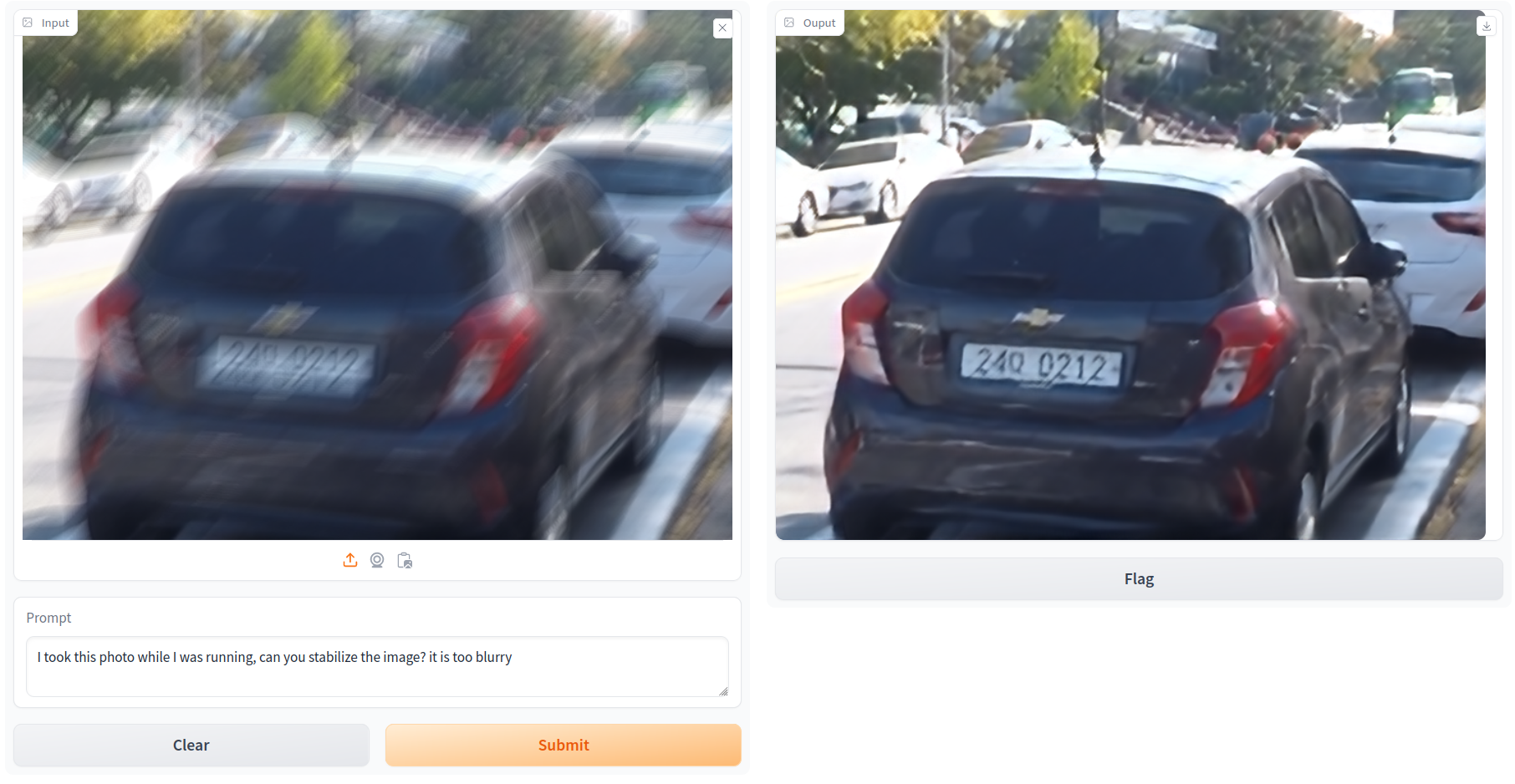

Try it directly on 🤗 Hugging Face: no cost, no code.

🚀 You can start with the demo tutorial. We also host the same tutorial on Google Colab, so you can run it on free GPUs!

Check test.py and eval_instructir.py. The following command computes all the metrics for all the benchmarks using the pre-trained models in models/. The results from InstructIR are saved in the indicated folder, results/.

```bash
python eval_instructir.py --model models/im_instructir-7d.pt --lm models/lm_instructir-7d.pt --device 0 --config configs/eval5d.yml --save results/
```

An example of the output log is:

```
>>> Eval on CBSD68_15 noise 0
CBSD68_15_base 24.84328738380881
CBSD68_15_psnr 33.98722295200123 68
CBSD68_15_ssim 0.9315137801801457
....
```
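If you want to collect these numbers programmatically (for example, to build your own comparison table), here is a minimal sketch of a log parser. It assumes every metric line has the form `<benchmark>_<metric> <value>`, optionally followed by an integer (68 in the example log, which we guess is the number of test images, and which the sketch ignores). `parse_eval_log` is a hypothetical helper, not part of the repository.

```python
import re

# Assumed line format (inferred from the example log, not documented):
#   "<benchmark>_<metric> <value>" plus an optional trailing integer,
#   e.g. "CBSD68_15_psnr 33.98722295200123 68". The integer is ignored.
METRIC_LINE = re.compile(
    r"^(?P<bench>\S+)_(?P<metric>base|psnr|ssim)\s+(?P<value>[-+0-9.eE]+)(?:\s+\d+)?$"
)


def parse_eval_log(text: str) -> dict[str, dict[str, float]]:
    """Group metric values by benchmark, e.g. {"CBSD68_15": {"psnr": 33.98, ...}}."""
    results: dict[str, dict[str, float]] = {}
    for raw_line in text.splitlines():
        match = METRIC_LINE.match(raw_line.strip())
        if match is None:
            continue  # skips ">>> Eval on ..." headers, "....", and blank lines
        results.setdefault(match["bench"], {})[match["metric"]] = float(match["value"])
    return results


if __name__ == "__main__":
    log = """>>> Eval on CBSD68_15 noise 0
CBSD68_15_base 24.84328738380881
CBSD68_15_psnr 33.98722295200123 68
CBSD68_15_ssim 0.9315137801801457
...."""
    metrics = parse_eval_log(log)["CBSD68_15"]
    # Difference between the restored PSNR and the "base" value
    # (presumably the PSNR of the degraded input; this is an assumption).
    print(f"gain: {metrics['psnr'] - metrics['base']:.2f} dB")
```

The regular expression only accepts the three metric suffixes seen in the log, so other lines are skipped rather than misparsed.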

You can download all the test datasets and place them in test-data/. Make sure the paths are updated in the config file configs/eval5d.yml.
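Before running the evaluation, it can help to check that every dataset folder actually exists. The sketch below is a hypothetical helper (`missing_paths`) that takes a plain `{name: relative path}` mapping. The actual keys and layout of configs/eval5d.yml are not described here, so you would fill in that mapping by hand (or after loading the YAML with a parser of your choice).

```python
from pathlib import Path


def missing_paths(dataset_dirs: dict[str, str], root: str = "test-data") -> list[str]:
    """Return the names of datasets whose folder does not exist under `root`.

    `dataset_dirs` is a hypothetical {name: relative_path} mapping; adapt it to
    whatever keys configs/eval5d.yml actually uses.
    """
    base = Path(root)
    return [name for name, rel in dataset_dirs.items() if not (base / rel).is_dir()]


if __name__ == "__main__":
    # Folder names below are illustrative guesses, not the repository's layout.
    print(missing_paths({"BSD68": "BSD68", "GoPro": "GoPro"}))
```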

You can download all the paper results (check the releases). We test InstructIR on the following benchmarks:

| Dataset | Task | Test Results |
|---|---|---|
| BSD68 | Denoising | Download |
| Urban100 | Denoising | Download |
| Rain100 | Deraining | Download |
| GoPro | Deblurring | Download |
| LOL | Low-light Image Enhancement | Download |
| MIT5K | Image Enhancement | Download |

From the releases, or via the link above, you can download instructir_results.zip, which includes all the qualitative results for these datasets (1.9 GB).

Sometimes blur, rain, or film-grain noise are pleasant effects and part of the "aesthetics". Here we show a simple example of how to interact with InstructIR.

As you can see, our model accepts diverse human-written prompts, ranging from ambiguous to precise instructions. How does it work? Imagine we have the following image as input:

Now we can use InstructIR with the following prompt (1):

I love this photo, could you remove the raindrops? please keep the content intact

Now, let's enhance the image a bit further (2).

Can you make it look stunning? like a professional photo

The final result does indeed look stunning 🤗 You can do it yourself in the demo tutorial.
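The two steps above are sequential: the output of prompt (1) becomes the input of prompt (2). A minimal sketch of that loop follows, assuming a hypothetical `restore(image, instruction)` callable that wraps the model. The actual entry points are in the demo notebook and app.py; `apply_instructions` and `restore` are illustrative names, not the repository's API.

```python
from typing import Callable, Sequence, TypeVar

# Any image type works (NumPy array, PIL image, tensor, ...).
Image = TypeVar("Image")


def apply_instructions(
    image: Image,
    prompts: Sequence[str],
    restore: Callable[[Image, str], Image],
) -> Image:
    """Apply instructions in order, feeding each result into the next step.

    `restore` is a hypothetical callable wrapping the InstructIR image model
    and language model; it is not a function provided by the repository.
    """
    for prompt in prompts:
        image = restore(image, prompt)
    return image


if __name__ == "__main__":
    def fake_restore(image: list, prompt: str) -> list:
        # Stand-in model: records which instructions were applied, in order.
        return image + [prompt]

    steps = [
        "I love this photo, could you remove the raindrops? please keep the content intact",
        "Can you make it look stunning? like a professional photo",
    ]
    print(apply_instructions([], steps, fake_restore))
```

Keeping the model behind a plain callable makes it easy to swap the stand-in for a real wrapper without touching the chaining logic.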

Disclaimer: please remember this is not a product, so you will notice some limitations. Like most all-in-one restoration methods, it struggles to generalize to real-world images; we are working on improving it.

- How should I start? Check our demo tutorial and also our Google Colab notebook.
- How can I compare with your method? You can download the results for several benchmarks in the Results section above.
- How can I test the model? I just want to play with it. Visit our 🤗 Hugging Face demo and test it for free.
- Why aren't you using diffusion-based models? (1) We want to keep the solution simple and efficient. (2) Our priority is high fidelity (as in many industry scenarios related to computational photography).
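To compare your own outputs with the downloadable results, you will usually report PSNR, the metric that appears with the `_psnr` suffix in the evaluation log. Below is a minimal pure-Python sketch of the standard definition, PSNR = 10·log10(MAX²/MSE), over flat sequences of pixel values. The repository's evaluation may differ in details such as color space, border cropping, or data range, so use eval_instructir.py for numbers comparable to the paper. `psnr` here is an illustrative helper, not the repository's function.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], estimate: Sequence[float], max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio (dB) between two equally sized pixel sequences."""
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    # Mean squared error over all pixel values
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    clean = [0.0, 0.0, 0.0, 0.0]
    noisy = [15.0, 15.0, 15.0, 15.0]  # constant error of 15 gray levels
    print(f"{psnr(clean, noisy):.2f} dB")
```

As a sanity check: with i.i.d. Gaussian noise of σ = 15 on the [0, 255] range, MSE ≈ σ² = 225, so the noisy input sits around 20·log10(255/15) ≈ 24.6 dB. That is close to the CBSD68_15_base value in the example log, which suggests (though the README does not state it) that `base` is the PSNR of the degraded input.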

We made a simple Gradio demo that you can run locally on your machine. You need Python>=3.9 and these requirements:

```bash
pip install -r requirements_gradio.txt
python app.py
```

This work was partly supported by the Humboldt Foundation (AvH). Marcos Conde is also supported by Sony Interactive Entertainment, FTG.

This work is inspired by InstructPix2Pix.

For any inquiries contact Marcos V. Conde: marcos.conde [at] uni-wuerzburg.de

```bibtex
@misc{conde2024instructir,
  title={High-Quality Image Restoration Following Human Instructions},
  author={Marcos V. Conde and Gregor Geigle and Radu Timofte},
  year={2024},
  journal={arXiv preprint},
}
```