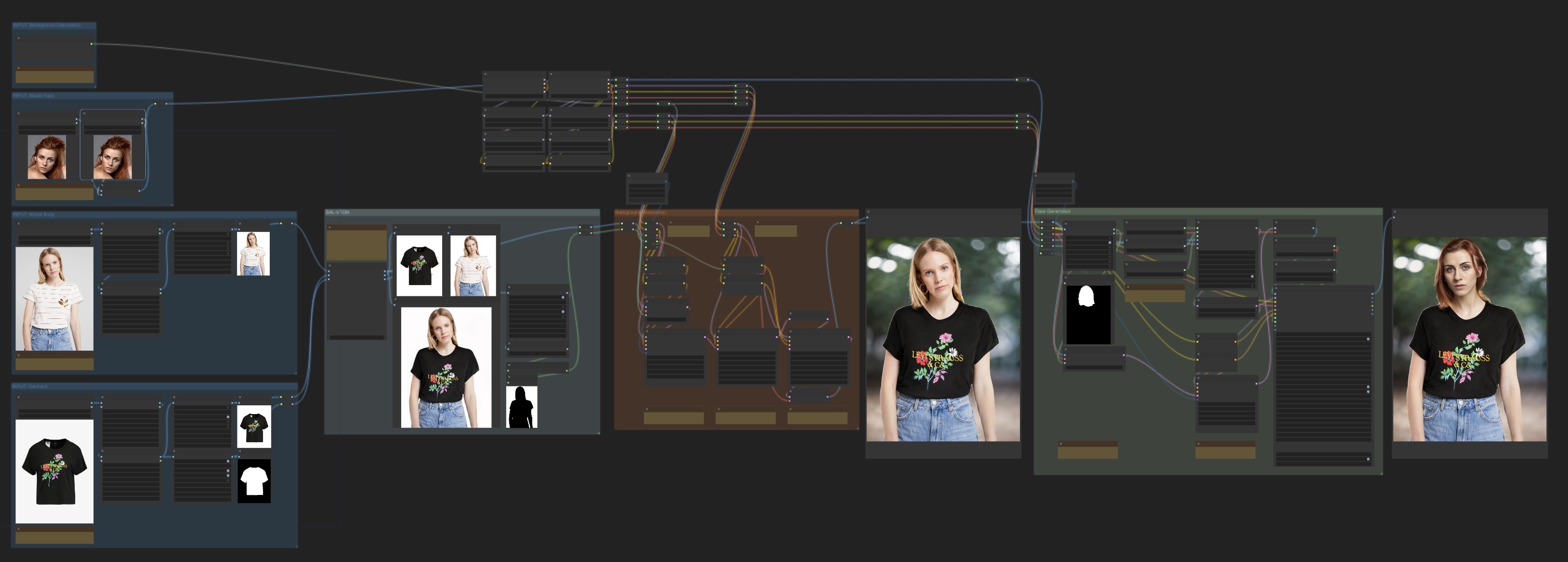

A ComfyUI workflow for swapping clothes using SAL-VTON.

It also generates backgrounds and swaps faces using Stable Diffusion 1.5 checkpoints.

Made with 💚 by the CozyMantis squad.

The workflow requires the following custom nodes:

- The Cozy SAL-VTON node for swapping clothes

- Image Resize for ComfyUI for cropping/resizing

- WAS Node Suite for removing the background

- ComfyUI Essentials for the mask preview and remove latent mask nodes

- ComfyUI IPAdapter plus for face swapping

- Impact Pack for face detailing

- Cozy Human Parser for getting a mask of the head

- rgthree for seed control

- If you need the background generation and face swap parts of the workflow, we recommend downloading Realistic Vision v6.0 and its inpainting version, and placing them in your `models/checkpoints` directory.

The workflow takes the following inputs:

- A model image (the person you want to put clothes on)

- A garment product image (the clothes you want to put on the model)

- Garment and model images should be close to 3:4 aspect ratio, and at least 768x1024 px.

- (Optional) One or two portraits for face-swapping
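If your images are not already close to 3:4, you can compute a centered 3:4 crop before loading them, then check that the result still meets the 768x1024 minimum. The sketch below is a minimal, dependency-free illustration of that arithmetic, assuming a simple centered crop; `center_crop_3x4`, `is_large_enough` and `Crop` are hypothetical helpers, not part of the workflow or of the Image Resize node.

```python
from typing import NamedTuple

TARGET_W, TARGET_H = 3, 4  # 3:4 aspect ratio (width:height)
MIN_W, MIN_H = 768, 1024   # minimum recommended size in pixels


class Crop(NamedTuple):
    left: int
    top: int
    width: int
    height: int


def center_crop_3x4(width: int, height: int) -> Crop:
    """Return the largest centered crop box with a 3:4 aspect ratio."""
    if width * TARGET_H > height * TARGET_W:
        # Wider than 3:4: keep the full height and trim the sides.
        crop_h = height
        crop_w = height * TARGET_W // TARGET_H
    else:
        # Taller than (or exactly) 3:4: keep the full width and trim top/bottom.
        crop_w = width
        crop_h = width * TARGET_H // TARGET_W
    return Crop((width - crop_w) // 2, (height - crop_h) // 2, crop_w, crop_h)


def is_large_enough(crop: Crop) -> bool:
    """Check that the cropped region meets the 768x1024 minimum."""
    return crop.width >= MIN_W and crop.height >= MIN_H
```

For example, a 1200x1200 square photo yields `Crop(150, 0, 900, 1200)`, which passes the size check, while an 800x800 image crops to 600x800 and falls below the minimum.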

**Important:** Make sure you own the rights to the images you use in this workflow. Do not use images that you do not have permission to use.

The SAL-VTON models have been trained on the VITON-HD dataset, so for best results you'll want:

- images that have a white/light gray background

- upper-body clothing items (tops, t-shirts, bodysuits, etc.)

- an input person standing up straight, pictured from the knees/thighs up.

To help with the first point, this workflow includes a background removal pre-processing step for the inputs.
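To decide whether an input is already close to the white/light gray look of VITON-HD, a rough heuristic is to average the brightness of the pixels along the image border, on the assumption that the border is mostly background. The sketch below is hypothetical and not part of the workflow: the `margin` and `LIGHT_THRESHOLD` values are illustrative guesses, and pixels are passed as plain rows of `(R, G, B)` tuples so the example needs no image library.

```python
from typing import Sequence, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255

# Hypothetical threshold: mean border luma of at least 220/255 counts as "light".
LIGHT_THRESHOLD = 220.0


def border_brightness(pixels: Sequence[Sequence[Pixel]], margin: int = 2) -> float:
    """Mean luma of the pixels within `margin` px of the edge (image must be non-empty)."""
    h, w = len(pixels), len(pixels[0])
    total, count = 0.0, 0
    for y in range(h):
        for x in range(w):
            if x < margin or y < margin or x >= w - margin or y >= h - margin:
                r, g, b = pixels[y][x]
                # ITU-R BT.601 luma weights
                total += 0.299 * r + 0.587 * g + 0.114 * b
                count += 1
    return total / count


def has_light_background(
    pixels: Sequence[Sequence[Pixel]],
    margin: int = 2,
    threshold: float = LIGHT_THRESHOLD,
) -> bool:
    """True if the border looks like a white/light gray background."""
    return border_brightness(pixels, margin) >= threshold
```

A dark subject in the middle of a white frame still passes, since only the border is sampled; images that fail the check are the ones that benefit most from the background removal step.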

The clothes-swapping stage uses the Cozy SAL-VTON node to run the virtual try-on model on the input images, transferring the clothes from the garment product image onto the model image.

Note that SAL-VTON relies on landmark detection to align the garment and model images. The landmark coordinates are auto-generated the first time you run the workflow. If needed, you can correct the fit by manually adjusting the landmark coordinates and re-running the workflow. To open the landmark editor, press the "Update Landmarks" button in the Cozy SAL-VTON node.

Based on a text prompt, a background is generated for the dressed model in three passes:

- with an inpainting model, inpaint the background at full noise

- with a regular model, do another pass at less noise on the background to add more details

- with a regular model, do a very low noise pass on the entire image to fix small artifacts without changing the clothing details
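The three background passes can be summarized as a small schedule of (model, region, denoise) steps. In this sketch, `denoise` stands in for the amount of noise added before sampling, as with the `denoise` input of ComfyUI's KSampler, where 1.0 means starting from full noise. Only the 1.0 value follows from the text; the 0.5 and 0.15 values, the `Pass` class and `is_valid_schedule` are hypothetical illustrations, not the settings used in the workflow.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Pass:
    model: str      # "inpainting" or "regular" checkpoint
    region: str     # "background" (masked) or "full" (whole image)
    denoise: float  # 1.0 = start from full noise


# Hypothetical values; only the first pass's 1.0 ("full noise") comes from the text.
BACKGROUND_PASSES = [
    Pass(model="inpainting", region="background", denoise=1.0),  # create the background
    Pass(model="regular", region="background", denoise=0.5),     # add background detail
    Pass(model="regular", region="full", denoise=0.15),          # fix small artifacts
]


def is_valid_schedule(passes: Sequence[Pass]) -> bool:
    """Check a schedule follows the ordering described above."""
    if not passes or passes[0].model != "inpainting" or passes[0].denoise != 1.0:
        return False
    # Each later pass uses less noise than the one before it.
    decreasing = all(cur.denoise < prev.denoise for prev, cur in zip(passes, passes[1:]))
    # Only the final pass touches the whole image, so clothing details stay intact.
    only_last_full = (
        all(p.region == "background" for p in passes[:-1]) and passes[-1].region == "full"
    )
    return decreasing and only_last_full
```

Keeping the full-image pass last and at very low noise is what lets it clean up seams without redrawing the garment produced by SAL-VTON.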

The face-swap stage uses IPAdapter and inpainting to replace the model's face with the face from the input portraits. This step is optional and can be skipped if you don't have a virtual-influencer-type scenario.

Check out our other ComfyUI nodes and workflows!

Based on the excellent paper "Linking Garment With Person via Semantically Associated Landmarks for Virtual Try-On" by Keyu Yan, Tingwei Gao, HUI ZHANG, Chengjun Xie.

Please check licenses and terms of use for each of the nodes and models required by this workflow.

The workflow should not be used to intentionally create or disseminate images that create hostile or alienating environments for people. This includes generating images that people would foreseeably find disturbing, distressing, or offensive; or content that propagates historical or current stereotypes.