This is a PyTorch implementation of Residual Steps Network (RSN), which won the 2019 COCO Keypoint Challenge and ranks 1st on both the COCO test-dev and test-challenge datasets, as shown in the COCO leaderboard.

- 2020.09 : Our RSN has been integrated into the great MMPose framework, thanks to the efforts of its developers. You are welcome to use their codebase and its pre-trained model zoo. ⭐

- 2020.07 : Our paper has been accepted as a Spotlight paper at ECCV 2020 🚀

- 2019.09 : Our work won first place and the Best Paper Award in the COCO 2019 Keypoint Challenge 🏆

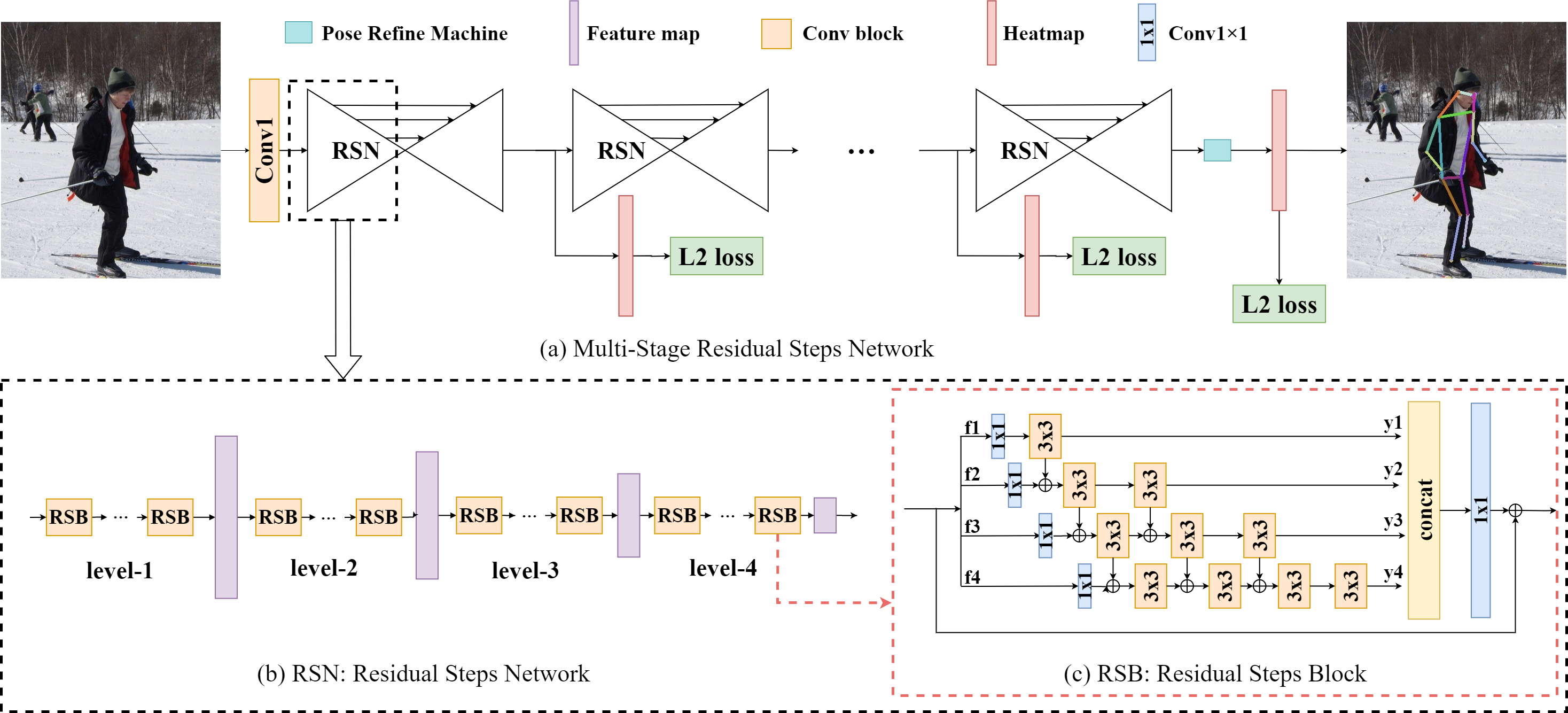

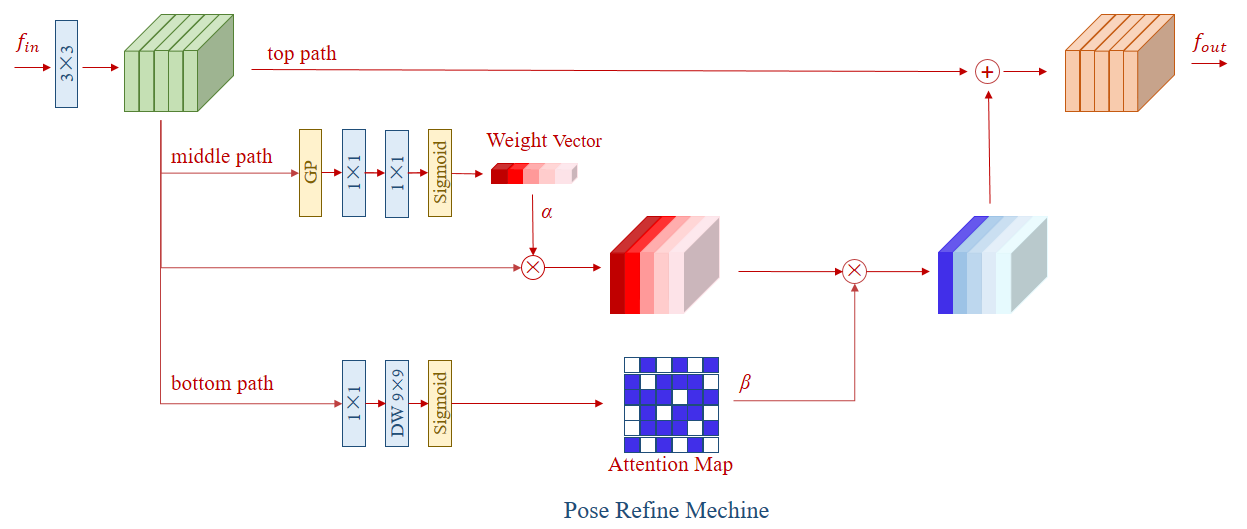

Abstract: In this paper, we propose a novel method called Residual Steps Network (RSN). RSN efficiently aggregates features with the same spatial size (intra-level features) to obtain delicate local representations, which retain rich low-level spatial information and result in precise keypoint localization. In addition, we propose an efficient attention mechanism, the Pose Refine Machine (PRM), to further refine the keypoint locations. Our approach won 1st place in the COCO Keypoint Challenge 2019 and achieves state-of-the-art results on both the COCO and MPII benchmarks, without using extra training data or pretrained models. Our single model achieves 78.6 AP on COCO test-dev and 93.0 on the MPII test dataset. Ensembled models achieve 79.2 AP on COCO test-dev and 77.1 AP on the COCO test-challenge dataset.

| Model | Input Size | GFLOPs | AP | AP50 | AP75 | APM | APL | AR |
|---|---|---|---|---|---|---|---|---|
| Res-18 | 256x192 | 2.3 | 70.7 | 89.5 | 77.5 | 66.8 | 75.9 | 75.8 |
| RSN-18 | 256x192 | 2.5 | 73.6 | 90.5 | 80.9 | 67.8 | 79.1 | 78.8 |
| RSN-50 | 256x192 | 6.4 | 74.7 | 91.4 | 81.5 | 71.0 | 80.2 | 80.0 |
| RSN-101 | 256x192 | 11.5 | 75.8 | 92.4 | 83.0 | 72.1 | 81.2 | 81.1 |
| 2×RSN-50 | 256x192 | 13.9 | 77.2 | 92.3 | 84.0 | 73.8 | 82.5 | 82.2 |
| 3×RSN-50 | 256x192 | 20.7 | 78.2 | 92.3 | 85.1 | 74.7 | 83.7 | 83.1 |
| 4×RSN-50 | 256x192 | 29.3 | 79.0 | 92.5 | 85.7 | 75.2 | 84.5 | 83.7 |
| 4×RSN-50 | 384x288 | 65.9 | 79.6 | 92.5 | 85.8 | 75.5 | 85.2 | 84.2 |
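To make the accuracy/cost trade-off in the table above concrete, the small sketch below computes the extra GFLOPs and extra AP between two rows at 256x192 input. The numbers are copied from the table; `RESULTS_256x192` and `cost_and_gain` are illustrative names, not part of this repo.

```python
from typing import Tuple

# (GFLOPs, AP) at 256x192 input, copied from the table above.
RESULTS_256x192 = {
    "Res-18": (2.3, 70.7),
    "RSN-18": (2.5, 73.6),
    "RSN-50": (6.4, 74.7),
    "RSN-101": (11.5, 75.8),
    "4×RSN-50": (29.3, 79.0),
}


def cost_and_gain(base: str, other: str) -> Tuple[float, float]:
    """Return (extra GFLOPs, extra AP) of `other` relative to `base`."""
    g0, a0 = RESULTS_256x192[base]
    g1, a1 = RESULTS_256x192[other]
    # Round to one decimal to match the table's precision and hide float noise.
    return round(g1 - g0, 1), round(a1 - a0, 1)


print(cost_and_gain("Res-18", "RSN-18"))    # (0.2, 2.9)
print(cost_and_gain("RSN-50", "4×RSN-50"))  # (22.9, 4.3)
```

Read this way, RSN-18 costs about 0.2 GFLOPs more than the Res-18 baseline and gains 2.9 AP, while stacking four RSN-50 stages costs 22.9 more GFLOPs than a single RSN-50 for 4.3 AP.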

Results on COCO test-dev:

| Model | Input Size | GFLOPs | AP | AP50 | AP75 | APM | APL | AR |
|---|---|---|---|---|---|---|---|---|
| RSN-18 | 256x192 | 2.5 | 71.6 | 92.6 | 80.3 | 68.8 | 75.8 | 77.7 |
| RSN-50 | 256x192 | 6.4 | 72.5 | 93.0 | 81.3 | 69.9 | 76.5 | 78.8 |
| 2×RSN-50 | 256x192 | 13.9 | 75.5 | 93.6 | 84.0 | 73.0 | 79.6 | 81.3 |
| 4×RSN-50 | 256x192 | 29.3 | 78.0 | 94.2 | 86.5 | 75.3 | 82.2 | 83.4 |
| 4×RSN-50 | 384x288 | 65.9 | 78.6 | 94.3 | 86.6 | 75.5 | 83.3 | 83.8 |
| 4×RSN-50+ | - | - | 79.2 | 94.4 | 87.1 | 76.1 | 83.8 | 84.1 |

Results on COCO test-challenge:

| Model | Input Size | GFLOPs | AP | AP50 | AP75 | APM | APL | AR |
|---|---|---|---|---|---|---|---|---|
| 4×RSN-50+ | - | - | 77.1 | 93.3 | 83.6 | 72.2 | 83.6 | 82.6 |

Results on MPII:

| Model | Split | Input Size | Head | Shoulder | Elbow | Wrist | Hip | Knee | Ankle | Mean |
|---|---|---|---|---|---|---|---|---|---|---|
| 4×RSN-50 | val | 256x256 | 96.7 | 96.7 | 92.3 | 88.2 | 90.3 | 89.0 | 85.3 | 91.6 |
| 4×RSN-50 | test | 256x256 | 98.5 | 97.3 | 93.9 | 89.9 | 92.0 | 90.6 | 86.8 | 93.0 |
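The MPII table above (the one with per-joint columns) is, we assume, reported with the standard PCKh@0.5 metric: a joint counts as correct when its prediction lies within 0.5 × the person's head segment length of the ground truth, and each column averages this test over all people for that joint type. The sketch below scores a single person; `pckh` is a hypothetical helper, and it takes the head size as an input, whereas the official MPII evaluation derives it from the annotated head box.

```python
import math
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


def pckh(pred: Sequence[Point],
         gt: Sequence[Optional[Point]],
         head_size: float,
         alpha: float = 0.5) -> float:
    """Fraction of annotated joints predicted within alpha * head_size of the ground truth.

    Joints whose ground truth is None (not annotated) are skipped.
    """
    threshold = alpha * head_size
    correct = total = 0
    for p, g in zip(pred, gt):
        if g is None:
            continue
        total += 1
        if math.dist(p, g) <= threshold:
            correct += 1
    return correct / total if total else 0.0


# Head size 20 px gives a 10 px threshold at PCKh@0.5.
pred = [(0.0, 0.0), (10.0, 0.0), (30.0, 40.0), (5.0, 5.0)]
gt = [(3.0, 4.0), (0.0, 0.0), (0.0, 0.0), None]
print(pckh(pred, gt, head_size=20.0))  # 2 of the 3 annotated joints are correct
```

Normalizing by head size rather than image size makes the threshold scale with the person, so small and large people are judged with comparable strictness.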

| Model | Input Size | GFLOPs | AP | AP50 | AP75 | APM | APL | AR |
|---|---|---|---|---|---|---|---|---|
| Res-18 | 256x192 | 2.3 | 65.2 | 87.3 | 71.5 | 61.2 | 72.2 | 71.3 |
| RSN-18 | 256x192 | 2.5 | 70.4 | 88.8 | 77.7 | 67.2 | 76.7 | 76.5 |

- `+` denotes an ensemble of models.

- All models are trained on 8 V100 GPUs.

- We ran all the experiments on our original DL platform, and all results in our paper are reported on that platform. There are some differences between that platform and PyTorch.
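The COCO tables report AP, AP50, AP75, APM, APL and AR, which the COCO keypoint evaluation computes from object keypoint similarity (OKS). A predicted person matches a ground-truth person at threshold t when OKS ≥ t; AP averages precision over the thresholds 0.50:0.05:0.95, AP50 and AP75 use a single threshold, and APM/APL restrict evaluation to medium (32² to 96² px area) and large (> 96² px) objects. The sketch below follows our reading of pycocotools' `COCOeval` for one prediction against one ground truth; it is illustrative, not this repo's evaluation code, and it simplifies the case where the ground truth has no labeled keypoints.

```python
import math
import sys
from typing import Sequence, Tuple

# Per-keypoint sigmas used by pycocotools' COCOeval, in the order nose, eyes,
# ears, shoulders, elbows, wrists, hips, knees, ankles.
COCO_SIGMAS = [v / 10.0 for v in (
    .26, .25, .25, .35, .35, .79, .79, .72, .72,
    .62, .62, 1.07, 1.07, .87, .87, .89, .89)]

# AP averages over these OKS thresholds; AP50 and AP75 use 0.50 and 0.75 alone.
OKS_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def oks(pred: Sequence[Tuple[float, float]],
        gt: Sequence[Tuple[float, float]],
        gt_visible: Sequence[bool],
        area: float,
        sigmas: Sequence[float] = COCO_SIGMAS) -> float:
    """Object keypoint similarity between one predicted and one ground-truth person."""
    total, count = 0.0, 0
    for (px, py), (gx, gy), vis, s in zip(pred, gt, gt_visible, sigmas):
        if not vis:  # unlabeled ground-truth keypoints do not contribute
            continue
        k_sq = (2 * s) ** 2
        # Squared error normalized by object area and per-keypoint falloff.
        e = ((px - gx) ** 2 + (py - gy) ** 2) / (k_sq * (area + sys.float_info.epsilon) * 2)
        total += math.exp(-e)
        count += 1
    # Simplification: pycocotools treats persons without labeled keypoints differently.
    return total / count if count else 0.0
```

The per-keypoint sigmas make the score forgiving on keypoints that annotators place inconsistently (hips, for example) and strict on precise ones such as the eyes.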

This repo is organized as follows:

    $RSN_HOME
    |-- cvpack
    |
    |-- dataset
    |   |-- COCO
    |   |   |-- det_json
    |   |   |-- gt_json
    |   |   |-- images
    |   |       |-- train2014
    |   |       |-- val2014
    |   |
    |   |-- MPII
    |       |-- det_json
    |       |-- gt_json
    |       |-- images
    |
    |-- lib
    |   |-- models
    |   |-- utils
    |
    |-- exps
    |   |-- exp1
    |   |-- exp2
    |   |-- ...
    |
    |-- model_logs
    |
    |-- README.md
    |-- requirements.txt

- Install PyTorch by referring to the PyTorch website.

- Clone this repo, and set `RSN_HOME` in `/etc/profile` or `~/.bashrc`, e.g.:

`export RSN_HOME='/path/of/your/cloned/repo'`

`export PYTHONPATH=$PYTHONPATH:$RSN_HOME`

- Install the requirements:

`pip3 install -r requirements.txt`

- Install COCOAPI by referring to the cocoapi website, or:

`git clone https://github.com/cocodataset/cocoapi.git $RSN_HOME/lib/COCOAPI`

`cd $RSN_HOME/lib/COCOAPI/PythonAPI`

`make install`
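After these steps, a quick check from Python can confirm that the environment is wired up. This is a hypothetical sanity-check sketch, not a script shipped with this repo; it relies only on the facts that `PYTHONPATH` entries are copied into `sys.path` at interpreter start-up and that COCOAPI's PythonAPI installs a package named `pycocotools`.

```python
import importlib.util
import os
import sys
from typing import Dict


def check_setup() -> Dict[str, bool]:
    """Report whether the installation steps above seem to have taken effect."""
    rsn_home = os.environ.get("RSN_HOME")
    # PYTHONPATH entries are added to sys.path when the interpreter starts.
    search_path = {os.path.abspath(p) for p in sys.path if p}
    return {
        "RSN_HOME is set": rsn_home is not None,
        "RSN_HOME is on sys.path": rsn_home is not None
        and os.path.abspath(rsn_home) in search_path,
        "torch is importable": importlib.util.find_spec("torch") is not None,
        "pycocotools is importable": importlib.util.find_spec("pycocotools") is not None,
    }


if __name__ == "__main__":
    for check, ok in check_setup().items():
        print(f"{'OK     ' if ok else 'MISSING'}  {check}")
```

Run it in a fresh shell after editing `~/.bashrc` (or after `source ~/.bashrc`), since an already-running shell will not see the new exports.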

- Download images from the COCO website, and put the train2014 and val2014 splits into `$RSN_HOME/dataset/COCO/images/` (as `images/train2014/` and `images/val2014/`).

- Download the ground truth from Google Drive or Baidu Drive (code: fc51), and put it into `$RSN_HOME/dataset/COCO/gt_json/`.

- Download the detection results from Google Drive or Baidu Drive (code: fc51), and put them into `$RSN_HOME/dataset/COCO/det_json/`.

- Download images from the MPII website, and put them into `$RSN_HOME/dataset/MPII/images/`.

- Download the ground truth from Google Drive or Baidu Drive (code: fc51), and put it into `$RSN_HOME/dataset/MPII/gt_json/`.

- Download the detection results from Google Drive or Baidu Drive (code: fc51), and put them into `$RSN_HOME/dataset/MPII/det_json/`.
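Before training, it can save time to confirm that every download landed where the steps above say it should. The sketch below is a hypothetical helper (not part of this repo) that lists the expected dataset directories under `$RSN_HOME` that are missing or still empty; the directory list mirrors the repo layout and download steps, and it does not check file names or contents.

```python
import os
from typing import List

# Directories implied by the download steps above, relative to $RSN_HOME.
EXPECTED_DIRS = [
    "dataset/COCO/images/train2014",
    "dataset/COCO/images/val2014",
    "dataset/COCO/gt_json",
    "dataset/COCO/det_json",
    "dataset/MPII/images",
    "dataset/MPII/gt_json",
    "dataset/MPII/det_json",
]


def missing_dirs(rsn_home: str) -> List[str]:
    """Return the expected dataset directories that are absent or empty."""
    missing = []
    for rel in EXPECTED_DIRS:
        path = os.path.join(rsn_home, *rel.split("/"))
        if not os.path.isdir(path) or not os.listdir(path):
            missing.append(rel)
    return missing


if __name__ == "__main__":
    home = os.environ.get("RSN_HOME", ".")
    for rel in missing_dirs(home):
        print("missing or empty:", rel)
```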

Create a directory to save logs and models:

`mkdir $RSN_HOME/model_logs`

Go to a specific experiment directory, e.g.:

`cd $RSN_HOME/exps/RSN50.coco`

and run:

`python config.py -log`

`python -m torch.distributed.launch --nproc_per_node=gpu_num train.py`

where `gpu_num` is the number of GPUs.

To test a trained model, run:

`python -m torch.distributed.launch --nproc_per_node=gpu_num test.py -i iter_num`

where `gpu_num` is the number of GPUs and `iter_num` is the iteration number you want to test.
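If you drive training and testing from a script, the two launch commands above can be built programmatically. This is a minimal sketch with a hypothetical `launch_cmd` helper; it only reproduces the documented command lines and does not know about any other options of `train.py` or `test.py`.

```python
import shlex
from typing import List, Optional


def launch_cmd(script: str, gpu_num: int, iter_num: Optional[int] = None,
               python: str = "python") -> List[str]:
    """Build the torch.distributed.launch command line for train.py or test.py."""
    if gpu_num < 1:
        raise ValueError("gpu_num must be at least 1")
    cmd = [python, "-m", "torch.distributed.launch",
           f"--nproc_per_node={gpu_num}", script]
    if iter_num is not None:
        # test.py takes the iteration number to evaluate via -i.
        cmd += ["-i", str(iter_num)]
    return cmd


print(shlex.join(launch_cmd("train.py", 8)))
# python -m torch.distributed.launch --nproc_per_node=8 train.py
```

Returning an argument list rather than a string lets you pass it straight to `subprocess.run(cmd, cwd=experiment_dir, check=True)` without shell quoting issues. Note that recent PyTorch releases deprecate `torch.distributed.launch` in favor of `torchrun`; because the two pass the local rank differently (a command-line argument versus the `LOCAL_RANK` environment variable), switching launchers may require changes to the training scripts.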

Please consider citing our work in your publications if it helps your research.

    @inproceedings{cai2020learning,
      title={Learning Delicate Local Representations for Multi-Person Pose Estimation},
      author={Yuanhao Cai and Zhicheng Wang and Zhengxiong Luo and Binyi Yin and Angang Du and Haoqian Wang and Xinyu Zhou and Erjin Zhou and Xiangyu Zhang and Jian Sun},
      booktitle={ECCV},
      year={2020}
    }

    @inproceedings{cai2019res,
      title={Res-steps-net for multi-person pose estimation},
      author={Cai, Yuanhao and Wang, Zhicheng and Yin, Binyi and Yin, Ruihao and Du, Angang and Luo, Zhengxiong and Li, Zeming and Zhou, Xinyu and Yu, Gang and Zhou, Erjin and others},
      booktitle={Joint COCO and Mapillary Workshop at ICCV},
      year={2019}
    }