The goal of this project is to build a web application that scrapes various websites for data related to the Mission to Mars and displays the information in a single HTML page.

The application is deployed as a Heroku app at https://missionmars.herokuapp.com/. Because of the limitations of the free tier, performance is not as good as when the app is hosted locally. Some changes were also necessary for compatibility with the Chrome drivers on the Heroku dynos.

Initial scraping was done using Jupyter Notebook, BeautifulSoup, Pandas, and Requests/Splinter.

- Codebase is here

- All the URLs used in the code are kept in `urls_list.py`.

The following data is scraped and returned in the formats shown below.

- Scraped the NASA Mars News Site and collected the latest News Title and Paragraph Text.

Example:

`news_title = "NASA's Next Mars Mission to Investigate Interior of Red Planet"`

`news_p = "Preparation of NASA's next spacecraft to Mars, InSight, has ramped up this summer, on course for launch next May from Vandenberg Air Force Base in central California -- the first interplanetary launch in history from America's West Coast."`
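Below is a minimal sketch of the title/paragraph extraction. It assumes the news list marks titles and teasers with the CSS classes `content_title` and `article_teaser_body`; these selectors are assumptions and are not confirmed by this README. The sketch uses the standard library's `html.parser` in place of BeautifulSoup so it runs without extra packages, and it parses a small hypothetical HTML snippet instead of the live page.

```python
from html.parser import HTMLParser


class TextByClass(HTMLParser):
    """Collect the text of the first element carrying each target CSS class.

    Standard-library stand-in for BeautifulSoup's
    soup.find(class_=...).get_text(strip=True).
    """

    def __init__(self, targets):
        super().__init__()
        self.targets = set(targets)
        self.found = {}    # CSS class -> captured text
        self._cls = None   # class currently being captured, if any
        self._tag = None   # tag name of the element being captured
        self._depth = 0    # nesting level of same-named tags inside it

    def handle_starttag(self, tag, attrs):
        if self._cls is not None:
            if tag == self._tag:
                self._depth += 1
            return
        for cls in (dict(attrs).get("class") or "").split():
            if cls in self.targets and cls not in self.found:
                self._cls, self._tag, self._depth = cls, tag, 1
                self.found[cls] = ""
                break

    def handle_endtag(self, tag):
        if self._cls is not None and tag == self._tag:
            self._depth -= 1
            if self._depth == 0:  # the captured element just closed
                self.found[self._cls] = " ".join(self.found[self._cls].split())
                self._cls = None

    def handle_data(self, data):
        if self._cls is not None:
            self.found[self._cls] += data


def latest_news(html):
    """Return (news_title, news_p) for the first article on the page."""
    parser = TextByClass({"content_title", "article_teaser_body"})
    parser.feed(html)
    parser.close()
    return parser.found.get("content_title"), parser.found.get("article_teaser_body")


# Hypothetical snippet shaped like the news list (newest article first).
sample_html = """
<ul>
  <li class="slide">
    <div class="content_title"><a href="#">NASA's Next Mars Mission</a></div>
    <div class="article_teaser_body">Preparation of InSight has ramped up.</div>
  </li>
  <li class="slide">
    <div class="content_title"><a href="#">An older story</a></div>
  </li>
</ul>
"""

news_title, news_p = latest_news(sample_html)
print(news_title)  # NASA's Next Mars Mission
print(news_p)      # Preparation of InSight has ramped up.
```

With BeautifulSoup, the same lookup is roughly `soup.find(class_="content_title").get_text(strip=True)`.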

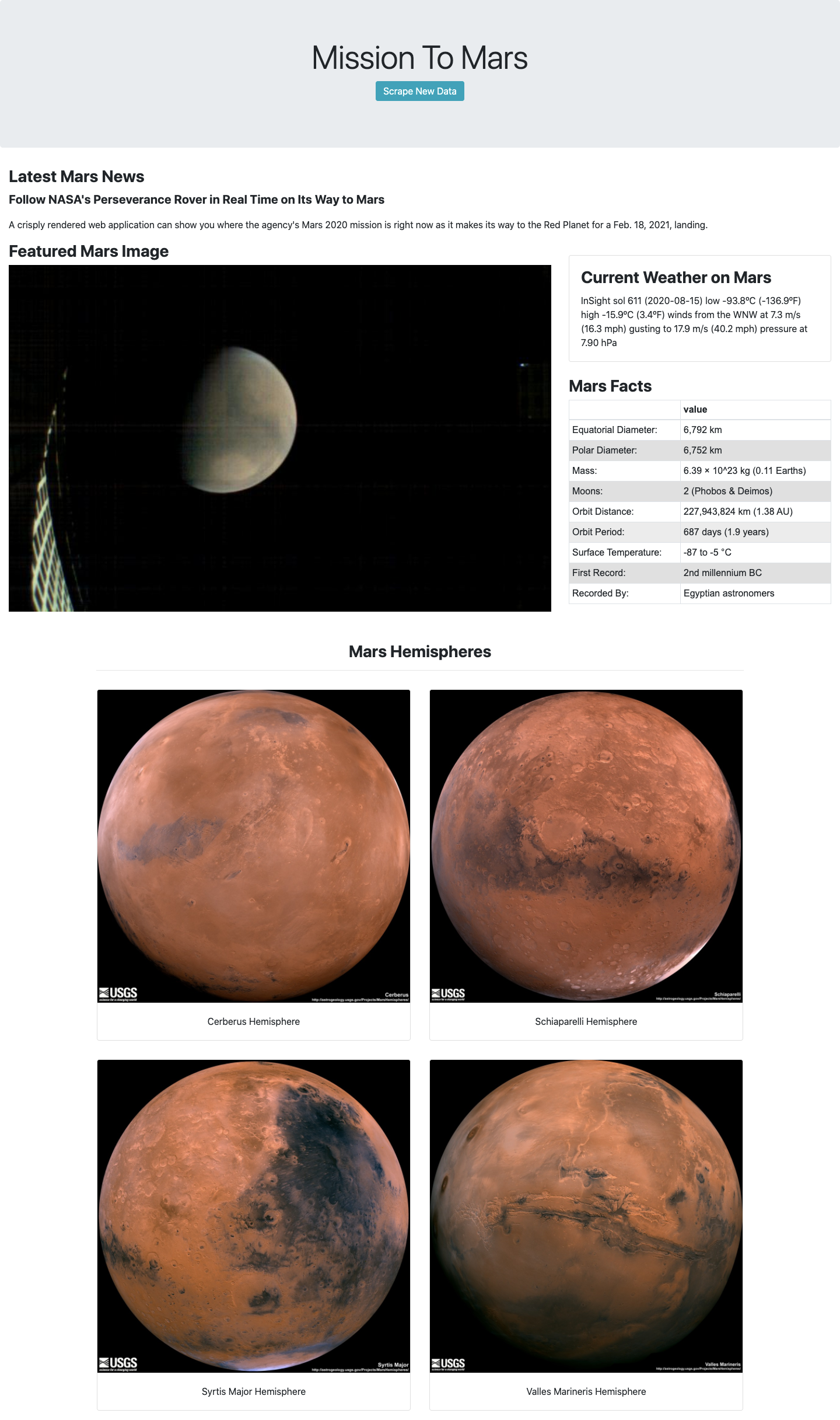

- The JPL Featured Space Image is located here.

- Used Splinter to navigate the site, find the image URL for the current Featured Mars Image, and retrieve the full-size image.
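Once Splinter has located the full-size image element, its `src` attribute may be a site-relative path. The sketch below assumes that case and resolves the path against the page URL with `urllib.parse.urljoin`. The base page URL and the relative paths are illustrative assumptions; the project keeps its real URLs in `urls_list.py`.

```python
from urllib.parse import urljoin

# Assumed base page for this sketch.
JPL_PAGE_URL = "https://www.jpl.nasa.gov/spaceimages/"


def full_size_image_url(relative_src, page_url=JPL_PAGE_URL):
    """Resolve an image src scraped from the page into an absolute URL."""
    return urljoin(page_url, relative_src)


# A root-relative path and a page-relative path resolve to the same URL.
featured_image_url = full_size_image_url("/spaceimages/images/largesize/PIA16225_hires.jpg")
same_url = full_size_image_url("images/largesize/PIA16225_hires.jpg")
print(featured_image_url)
# https://www.jpl.nasa.gov/spaceimages/images/largesize/PIA16225_hires.jpg
```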

Example:

`featured_image_url = 'https://www.jpl.nasa.gov/spaceimages/images/largesize/PIA16225_hires.jpg'`

- Mars weather information is retrieved from the Twitter account here; I scraped the latest Mars weather tweet from the page.
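Picking the weather report out of the timeline can be treated as a filter over the scraped tweet texts. This sketch assumes two things taken from the example: weather tweets start with `Sol <number>`, and they mention the pressure. The timeline list is a hypothetical stand-in for the scraped texts, and the newest-first ordering is also an assumption.

```python
import re

# Assumption: weather tweets start with "Sol <number>" and mention "pressure".
WEATHER_PATTERN = re.compile(r"^\s*Sol\s+\d+.*pressure", re.IGNORECASE | re.DOTALL)


def latest_weather(tweets):
    """Return the first (newest) tweet that looks like a weather report, else None."""
    for text in tweets:
        if WEATHER_PATTERN.search(text):
            return " ".join(text.split())  # collapse newlines and repeated spaces
    return None


# Hypothetical timeline, newest first; only the second entry is a weather report.
timeline = [
    "Congrats to the InSight team on another milestone!",
    "Sol 1801 (Aug 30, 2017), Sunny, high -21C/-5F, low -80C/-112F,\n"
    "pressure at 8.82 hPa, daylight 06:09-17:55",
]
mars_weather = latest_weather(timeline)
print(mars_weather)
```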

Example:

`mars_weather = 'Sol 1801 (Aug 30, 2017), Sunny, high -21C/-5F, low -80C/-112F, pressure at 8.82 hPa, daylight 06:09-17:55'`

- Mars Facts are located here.

- Used Pandas to read the HTML table into a DataFrame and to convert the data back to an HTML table string.
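With Pandas, `pd.read_html(url)` returns a list of DataFrames (one per `<table>` on the page), and `DataFrame.to_html()` renders a DataFrame back to an HTML string. The sketch below is a dependency-free stand-in for that second step only. It assumes the facts arrive as (label, value) pairs; the header names and the Bootstrap classes are illustrative assumptions.

```python
from html import escape


def facts_to_html(rows, headers=("Description", "Value"), classes="table table-striped"):
    """Render (label, value) rows as an HTML table string, escaping all text."""
    lines = [f'<table class="{escape(classes)}">', "  <thead>", "    <tr>"]
    lines += [f"      <th>{escape(h)}</th>" for h in headers]
    lines += ["    </tr>", "  </thead>", "  <tbody>"]
    for label, value in rows:
        lines.append(f"    <tr><th>{escape(str(label))}</th><td>{escape(str(value))}</td></tr>")
    lines += ["  </tbody>", "</table>"]
    return "\n".join(lines)


# Sample rows in the shape of the Mars Facts table.
mars_facts = [
    ("Equatorial Diameter:", "6,792 km"),
    ("Moons:", "2 (Phobos & Deimos)"),
]
facts_html = facts_to_html(mars_facts)
print(facts_html)
```

The string can be stored alongside the other scraped fields and inserted into the page template without further processing.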

- Used the USGS Astrogeology site here to obtain high-resolution images of each of Mars's hemispheres and retrieved the full-resolution images.

- Appended a dictionary containing the image URL string and the hemisphere title to a list. This list contains one dictionary for each hemisphere.
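The sketch below assembles that list. It assumes each hemisphere page has already produced a (title, full-resolution image URL) pair. Stripping a trailing ` Enhanced` from the titles is an assumption about how the USGS pages label them, and the URLs are hypothetical placeholders.

```python
def build_hemisphere_list(pairs):
    """Convert (title, img_url) pairs into a list with one dict per hemisphere."""
    hemisphere_image_urls = []
    for title, img_url in pairs:
        title = title.strip()
        # Assumption: page headings may end in " Enhanced", which the list omits.
        if title.endswith(" Enhanced"):
            title = title[: -len(" Enhanced")]
        hemisphere_image_urls.append({"title": title, "img_url": img_url})
    return hemisphere_image_urls


# Hypothetical scraped pairs; the URLs are placeholders, not the real USGS links.
scraped = [
    ("Valles Marineris Hemisphere Enhanced", "https://example.org/valles_marineris.jpg"),
    ("Cerberus Hemisphere Enhanced", "https://example.org/cerberus.jpg"),
    ("Schiaparelli Hemisphere", "https://example.org/schiaparelli.jpg"),
    ("Syrtis Major Hemisphere", "https://example.org/syrtis_major.jpg"),
]
hemisphere_image_urls = build_hemisphere_list(scraped)
print(hemisphere_image_urls[0])
```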

Example:

`hemisphere_image_urls = [{"title": "Valles Marineris Hemisphere", "img_url": "..."}, {"title": "Cerberus Hemisphere", "img_url": "..."}, {"title": "Schiaparelli Hemisphere", "img_url": "..."}, {"title": "Syrtis Major Hemisphere", "img_url": "..."}]`

- Scrape Codebase is here

- Flask app is here

- All the URLs used in the code are kept in `urls_list.py`.

- Please note that a config file named `config.py`, containing `mongo_uri`, needs to be created. In my case, I used a free cloud-hosted MongoDB database, so I did not include the config file here, to keep the password secure. When you use a new database, make sure to click the Scrape button first.
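Here is a sketch of how the app might read the connection string. `config.py` would contain a single line such as `mongo_uri = "mongodb+srv://<user>:<password>@<host>/<database>"` (placeholder values). The environment-variable fallback is an assumption and not part of the original setup. Heroku exposes config vars to the dyno as environment variables, so the same code could run there without committing `config.py`. The `MONGO_URI` name and the local default are hypothetical.

```python
import os

# Hypothetical local default, used only when neither source below is available.
DEFAULT_URI = "mongodb://localhost:27017/mars_app"


def get_mongo_uri():
    """Return the MongoDB connection string.

    Order of precedence assumed for this sketch:
    1. mongo_uri defined in a local config.py (kept out of version control)
    2. a MONGO_URI environment variable (hypothetical name)
    3. a local default
    """
    try:
        from config import mongo_uri
        return mongo_uri
    except ImportError:  # no config.py, or it does not define mongo_uri
        return os.environ.get("MONGO_URI", DEFAULT_URI)


print(get_mongo_uri())
```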

Use MongoDB with Flask templating to create a new HTML page that displays all of the information that was scraped from the URLs above.

- A consolidated code file, `scrape_mars.py`, is created with a function called `scrape` that executes all of the scraping code above and returns one Python dictionary containing all of the scraped data.

- I have used some useful features in this script:
  - Multithreading: to scrape all of the sites simultaneously.
  - Retry function: errors sometimes occur because the web pages do not load fast enough, so this function retries the scraping with extended wait times.
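The multithreading and retry ideas can be sketched with `concurrent.futures.ThreadPoolExecutor` and a retry wrapper that lengthens the wait on every attempt. This is a standalone sketch, not the code in `scrape_mars.py`. The scraper functions are passed in; each takes a wait time in seconds and returns part of the final dictionary. The two fake scrapers are hypothetical, and one of them simulates a page that fails to load on the first try. With Splinter, each thread would likely need its own browser instance, because a single browser driver is not designed to be controlled from several threads at once.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def retry(scraper, attempts=3, base_wait=1.0):
    """Call scraper(wait), retrying with a longer wait after each failure.

    The wait grows linearly (1x, 2x, 3x base_wait) to give slow pages more time.
    """
    last_error = None
    for attempt in range(1, attempts + 1):
        wait = base_wait * attempt
        try:
            return scraper(wait)
        except Exception as err:  # e.g. an element missing because the page had not loaded
            last_error = err
            if attempt < attempts:
                time.sleep(wait)
    raise last_error


def scrape(scrapers, attempts=3, base_wait=1.0):
    """Run all scrapers concurrently and merge their results into one dictionary."""
    mars_data = {}
    with ThreadPoolExecutor(max_workers=len(scrapers)) as pool:
        futures = [pool.submit(retry, fn, attempts, base_wait) for fn in scrapers]
        for future in futures:
            mars_data.update(future.result())  # re-raises if every attempt failed
    return mars_data


# Hypothetical scrapers standing in for the real news and weather functions.
weather_calls = []


def fake_news(wait):
    time.sleep(wait)  # a real scraper would give the browser `wait` seconds to load
    return {"news_title": "NASA's Next Mars Mission to Investigate Interior of Red Planet"}


def flaky_weather(wait):
    weather_calls.append(wait)
    if len(weather_calls) == 1:
        raise TimeoutError("page did not load in time")
    return {"mars_weather": "Sol 1801 (Aug 30, 2017), Sunny"}


mars_data = scrape([fake_news, flaky_weather], base_wait=0.01)
print(mars_data)
```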


- Next, create a Flask app.

  - I have used a catch-all route to define the routes. This ensures there is a default route for any URL that no other route handles.


- Create a root route `/` that queries the Mongo database and passes the Mars data into an HTML template to display the data.

- Create a template HTML file called `index.html` that takes the Mars data dictionary and displays all of the data in the appropriate HTML elements.

  - I have added a small piece of JS functionality to the Scrape button that disables it for 30 seconds after it is clicked, to prevent users from clicking it repeatedly.


- Create a default page, `default.html`, that is displayed when a user tries to access a URL that does not exist.
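The routing described above can be sketched in Flask as follows. Inline templates rendered with `render_template_string` stand in for `templates/index.html` and `templates/default.html`. `load_mars_data` is a hypothetical stand-in for the Mongo query, and the `/scrape` handler is a stub. Werkzeug matches static routes such as `/` and `/scrape` before the `/<path:path>` rule, so the catch-all only receives URLs that no other route handles. Returning a 404 status with the default page is a choice made in this sketch; the original app may return 200.

```python
from flask import Flask, redirect, render_template_string

app = Flask(__name__)

# Inline stand-ins for templates/index.html and templates/default.html.
INDEX = "<h1>Mission to Mars</h1><p>{{ mars.news_title }}</p>"
DEFAULT = "<h1>Page not found</h1><a href='/'>Back to Mission to Mars</a>"


def load_mars_data():
    """Hypothetical stand-in for the Mongo query (e.g. collection.find_one())."""
    return {"news_title": "NASA's Next Mars Mission to Investigate Interior of Red Planet"}


@app.route("/")
def index():
    # Root route: fetch the stored data and pass it to the template.
    return render_template_string(INDEX, mars=load_mars_data())


@app.route("/scrape")
def scrape_route():
    # Stub: the real route would run scrape(), save the result, then redirect home.
    return redirect("/")


@app.route("/<path:path>")
def catch_all(path):
    # Any URL not matched by a more specific route gets the default page.
    return render_template_string(DEFAULT), 404


if __name__ == "__main__":
    app.run(debug=True)
```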

I have made this app responsive using Bootstrap. The pictures above show the app on larger screens. Between two specific screen sizes, the app displays the quote shown here. I will let you explore that yourself by playing around with the screen size!