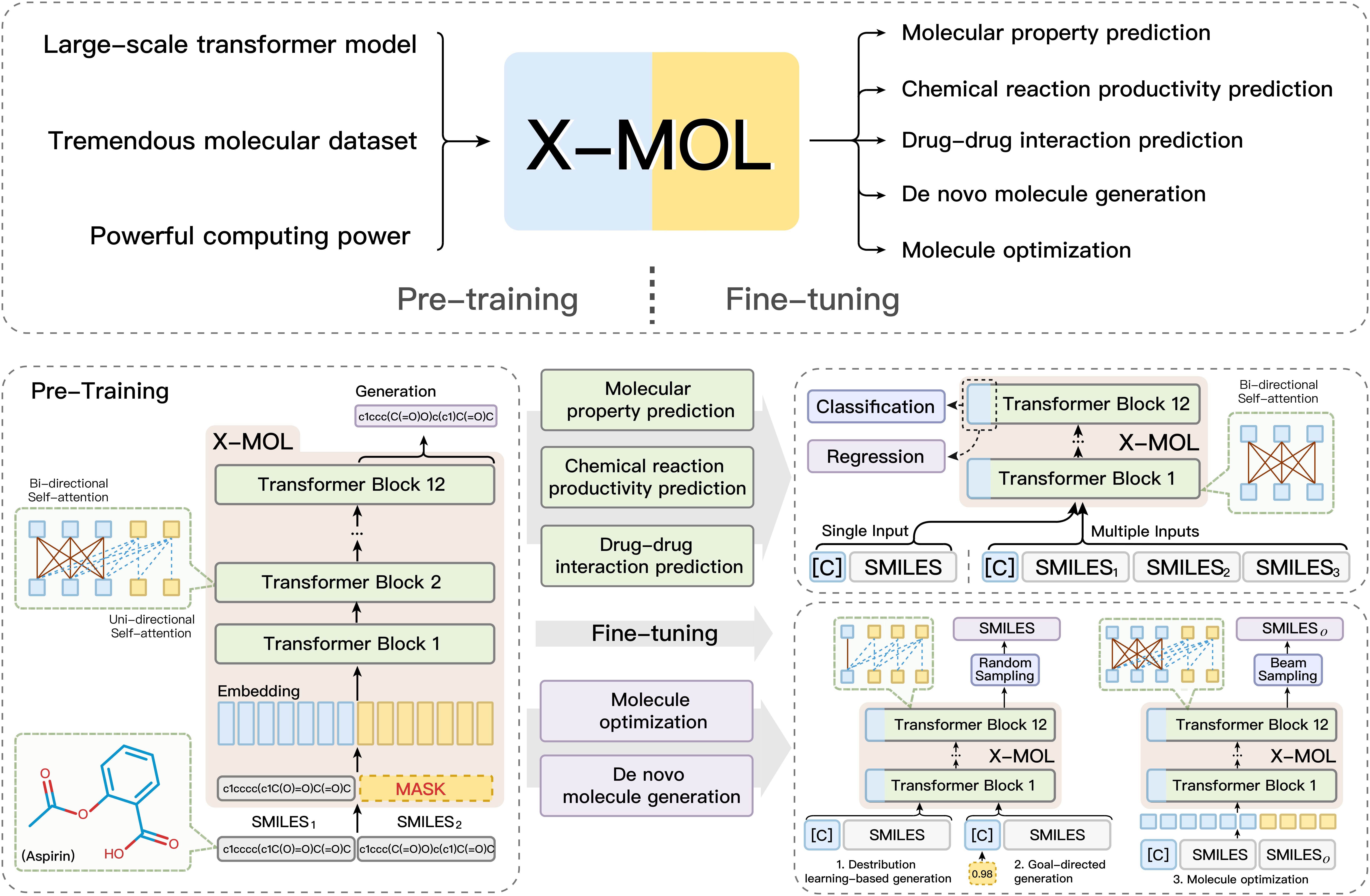

In silico modelling and analysis of small molecules substantially accelerates the process of drug development. Representing and understanding molecules is the fundamental step for various in silico molecular analysis tasks. Traditionally, these molecular analysis tasks have been investigated individually and separately. In this study, we presented X-MOL, which applies large-scale pre-training technology on 1.1 billion molecules for molecular understanding and representation, and then, carefully designed fine-tuning was performed to accommodate diverse downstream molecular analysis tasks, including molecular property prediction, chemical reaction analysis, drug-drug interaction prediction, de novo generation of molecules and molecule optimization. As a result, X-MOL was proven to achieve state-of-the-art results on all these molecular analysis tasks with good model interpretation ability. Collectively, taking advantage of super large-scale pre-training data and super-computing power, our study practically demonstrated the utility of the idea of "mass makes miracles" in molecular representation learning and downstream in silico molecular analysis, indicating the great potential of using large-scale unlabelled data with carefully designed pre-training and fine-tuning strategies to unify existing molecular analysis tasks and substantially enhance the performance of each task.

In our study, X-MOL adopts a well-designed pre-training strategy to learn and understand the SMILES representation efficiently. Specifically, X-MOL is pre-trained as a generative model: it is trained to generate a valid and equivalent SMILES representation from an input SMILES representation of the same molecule. This generative training strategy results in a pre-trained model with a good understanding of the SMILES representation, one that can reliably generate a correct SMILES of the given molecule. The resulting X-MOL is a super large-scale pre-trained model based on the Transformer, composed of 12 encoder-decoder layers, 768-dimensional hidden units and 12 attention heads.
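As a rough sanity check on the stated model size, the sketch below derives the per-head dimension and an approximate parameter count for the 12-layer stack. The per-head dimension follows directly from the numbers above. The parameter estimate is only an illustration: it assumes a standard Transformer layer layout with a feed-forward width of 4x the hidden size, which the text does not state, and it ignores embeddings. The function name is hypothetical and not part of the X-MOL code.

```python
def transformer_layer_params(hidden: int, ffn: int) -> int:
    """Weights and biases of one standard Transformer layer (assumed layout)."""
    attention = 4 * (hidden * hidden + hidden)  # Q, K, V and output projections
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden)
    layer_norms = 2 * (2 * hidden)  # two LayerNorms, scale and shift each
    return attention + feed_forward + layer_norms


LAYERS, HIDDEN, HEADS = 12, 768, 12  # values stated in the text
FFN = 4 * HIDDEN  # assumption: the feed-forward width is not stated in the text

head_dim = HIDDEN // HEADS
stack_params = LAYERS * transformer_layer_params(HIDDEN, FFN)

print(f"per-head dimension: {head_dim}")
print(f"parameters in the 12-layer stack (no embeddings): {stack_params:,}")
```

Under these assumptions each attention head works on 64 dimensions and the layer stack holds roughly 85 million parameters; the real figure depends on the actual model config.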

Specifically, our generative pre-training strategy is implemented by an encoder-decoder architecture, but it differs from traditional encoder-decoder architectures such as those used in neural machine translation (NMT), because the encoder and decoder in X-MOL share the same layers. In X-MOL, the input random SMILES and output random SMILES are fed into the model simultaneously, and the output random SMILES is fully masked. In addition, only unidirectional attention is allowed within the output random SMILES, which means that each character in the output random SMILES can attend only to itself and the previously generated characters. In this way, the shared-layer encoder-decoder architecture in X-MOL unifies the semantic comprehension of the encoder and decoder. The shared-layer architecture also significantly reduces the number of parameters compared with traditional encoder-decoder architectures.
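The attention pattern described above can be sketched as a 0/1 mask over the concatenated input and output SMILES. This is a minimal illustration, not the X-MOL implementation. The text only specifies that attention within the output is unidirectional; the sketch additionally assumes that output positions may attend to the whole input and that input positions attend only to the input. The function name `build_shared_mask` is hypothetical.

```python
from typing import List


def build_shared_mask(n_in: int, n_out: int) -> List[List[int]]:
    """Build an attention mask for [input SMILES | output SMILES].

    mask[i][j] == 1 means position i may attend to position j.
    """
    total = n_in + n_out
    mask = [[0] * total for _ in range(total)]
    for i in range(total):
        for j in range(total):
            if j < n_in:
                # Assumption: every position may attend to the input SMILES.
                mask[i][j] = 1
            elif i >= n_in and j <= i:
                # Output positions see themselves and earlier output positions only.
                mask[i][j] = 1
    return mask


if __name__ == "__main__":
    for row in build_shared_mask(n_in=3, n_out=3):
        print(" ".join(str(v) for v in row))
```

Because both halves of the sequence pass through the same layers, a single mask of this kind is enough to make one stack behave as a bidirectional encoder on the input and as a left-to-right decoder on the output.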

Pre-training (tremendous data, large-scale Transformer, powerful computing power) ---> X-MOL ---> Fine-tuning:

- Molecular property prediction
- Drug-drug interaction prediction
- Chemical reaction prediction
- Molecule generation
- Molecule optimization

We provide the pre-trained X-MOL, the scripts for fine-tuning X-MOL, and the environment.

Environment:

Fine-tuning X-MOL for prediction tasks and for generation tasks are two independent parts, so the environment (including python and nccl) should be downloaded and decompressed into both folders.

The provided environment :

- Pre_trained X-MOL : https://1drv.ms/u/s!BIa_gVKaCDngi2S994lMsp-Y3TWK?e=l5hbxi

- Environment-python : https://1drv.ms/u/s!Aoa_gVKaCDngi2U1ip8w2HxjIt4-?e=koGl4c

- Environment-nccl : https://1drv.ms/u/s!Aoa_gVKaCDngi2J7pOh7WdKR-pMa?e=GVlYbd

Requirements :

- Python3.7 (although the python2 environment used for model training is provided above, data preprocessing and model evaluation are based on a python3 environment)

- RDKit (2019.09.1.0)

- Modify the configuration file :

conf_pre/ft_conf.sh

The terms that need to be modified are highlighted, like :

### attention, this term need to be modified

vocab_path="./package/molecule_dict_zinc250k"

### attention, this term need to be modified

CONFIG_PATH="./package/ernie_zinc250k_config.json"

- Fine-tuning to classification/regression :

  Modify the `main()` in `run_classifier.py`

  - For classification : `task_type = 'cls'`
  - For regression : `task_type = 'reg'`

- Fine-tuning to single-input/multiple-input :

  Modify the `main()` in `run_classifier.py`

  - For single-input : `multi_input = False`
  - For multiple-input : `multi_input = True`

    Modify the `main()` in `finetune_launch.py`: `extend_sent = True`

    Modify the `"type_vocab_size"` in model config

- For molecule property prediction task :

  - Repeat training:

    Modify `finetune_launch.py`, the code in `if __name__ == "__main__":` :

    `while fine_tune_rep < the_numeber_of_repeating_times:`

  - Random/scaffold split:

    - Modify `finetune_launch.py`, the code in `if __name__ == "__main__":` :

      Keep the `subprocess.call("python3 pt_scaffold_split.py", shell=True)`

    - Modify `pt_scaffold_split.py`, the code in `if __name__ == "__main__":` :

      `sep_file_ex('path_to_training_data_folder', split_func='scaffold', amp=False, ampn=(0,0,0))`

- If the vocab list needs to be extended :

  Modify the `main()` in `finetune_launch.py`:

  `extend_vocab = False`

- Run :

  `sh train_ft.sh`

  `sh train_lrtemb.sh` (knowledge embedding)

- Modify the configuration file :

ft_conf

The terms that need to be modified are highlighted, like :

### attention, this term need to be modified

vocab_path="./package/molecule_dict_zinc250k"

### attention, this term need to be modified

CONFIG_PATH="./package/ernie_zinc250k_config.json"

- If the vocab list needs to be extended :

  Modify the `main()` in `finetune_launch_local.py`:

  `extend_vocab = True`

  `extend_fc = True`

- Run :

  `sh train_ft.sh` (DL&GD generation tasks)

  `sh train_opt.sh` (optimization tasks)

For both types of tasks :

Modify `finetune_launch.py` (`finetune_launch_local.py` for generation tasks)

Set valid values for the two arguments in the argparse term `multip_g` :

1. `nproc_per_node` : number of GPUs

2. `selected_gpus` : GPU ids
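The two arguments have to agree with each other, which can be sketched as a small check. This is a hedged illustration rather than the code in `finetune_launch.py`: it assumes `selected_gpus` is a comma-separated string of GPU ids and that one process is launched per selected GPU. The function name `parse_gpu_args` and the default values are hypothetical.

```python
import argparse
from typing import List, Tuple


def parse_gpu_args(argv: List[str]) -> Tuple[int, List[int]]:
    """Parse the two GPU arguments and check that they are consistent."""
    parser = argparse.ArgumentParser()
    group = parser.add_argument_group("multip_g")
    group.add_argument("--nproc_per_node", type=int, default=1,
                       help="number of GPUs (processes) to use")
    # Assumption: GPU ids are passed as a comma-separated string, e.g. "0,1".
    group.add_argument("--selected_gpus", type=str, default="0",
                       help="ids of the GPUs to use")
    args = parser.parse_args(argv)

    gpu_ids = [int(x) for x in args.selected_gpus.split(",") if x.strip()]
    if len(set(gpu_ids)) != len(gpu_ids):
        raise ValueError("selected_gpus contains duplicate ids")
    if args.nproc_per_node != len(gpu_ids):
        raise ValueError("nproc_per_node must equal the number of selected GPUs")
    return args.nproc_per_node, gpu_ids


if __name__ == "__main__":
    print(parse_gpu_args(["--nproc_per_node", "2", "--selected_gpus", "0,1"]))
```

A mismatch such as `--nproc_per_node 4 --selected_gpus 0,1` raises a `ValueError` in this sketch instead of starting a run with an inconsistent GPU setup.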

Rules for extending the vocabulary list :

1. The extension must be based on the X-MOL_dict, that is, the vocabulary list used in pre-training.

2. The extended vocab must be placed after the original vocabs (the indices of new vocabs start from 122).

3. Do not forget to turn on `extend_vocab` in `finetune_launch.py`/`finetune_launch_local.py`.

4. Do not forget to modify the `"vocab_size"` in the model config.

5. Once the vocabulary list is extended, the pre-trained model will be changed, so please make sure you have a good backup of X-MOL.
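Rules 1, 2 and 4 above can be sketched as a small helper that works on in-memory lists. It is an illustration only: the on-disk format of the dictionary file is not described here, so reading and writing the file is left out, indices are assumed to be 0-based, and the helper name `extend_vocab` is hypothetical.

```python
from typing import Dict, List, Tuple

BASE_VOCAB_SIZE = 122  # from rule 2: new indices start from 122 (0-based assumed)


def extend_vocab(base_tokens: List[str], new_tokens: List[str],
                 config: Dict) -> Tuple[List[str], Dict]:
    """Append new tokens after the original vocabulary and update vocab_size."""
    if len(base_tokens) != BASE_VOCAB_SIZE:
        raise ValueError("the extension must start from the original vocabulary")
    seen = set(base_tokens)
    added = []
    for token in new_tokens:
        if token not in seen:  # skip tokens that already exist
            seen.add(token)
            added.append(token)
    extended = list(base_tokens) + added  # original order and indices are kept
    new_config = dict(config)  # leave the caller's config untouched
    new_config["vocab_size"] = len(extended)
    return extended, new_config
```

For example, adding two unseen tokens gives them the indices 122 and 123 and sets `"vocab_size"` to 124, while the indices of all original tokens stay the same.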

Paths of the log files and saved models :

1. Log files are saved in `./log/`: one launching log and n running logs (n = the number of GPUs).

2. Saved models are stored in `./checkpoints/` (the parameter `SAVE_STEPS=1000` in `ft_conf.sh` indicates that the model is stored every 1000 steps during training).

Warm start :

Fine-tuning the model on the basis of X-MOL

Set parameter init_model in ft_conf/ft_conf.sh as init_model="path/to/decompressed/X-MOL"

Cold start :

Training the model from scratch

Set parameter init_model in ft_conf/ft_conf.sh as init_model=""