🔥 Real-CUGAN🔥 is an AI super-resolution model for anime images. It is trained on a million-scale anime dataset and uses the same architecture as Waifu2x-CUNet. It supports 2x/3x/4x super resolution. To offer different enhancement strengths, 2x Real-CUGAN now provides 5 model weights and 3x/4x Real-CUGAN provides 3 model weights.

Real-CUGAN ships a packaged executable environment for Windows users. GUI and web versions are also available now.

Update progress

2022-02-07: Windows GUI/Web versions.

2022-02-09: Colab demo file.

2022-02-17: NCNN version. AMD graphics card users and mobile phone users can now use Real-CUGAN.

2022-02-20: Low memory mode added. You can now super-resolve very high-resolution images. Download the 20220220 updated packages to use it.

2022-02-27: Faster low memory mode added (25% slower than the baseline mode); enhancement strength setting alpha added. Download the 20220227 updated packages to use it.

If you find Real-CUGAN helpful for your anime videos/projects, please help by starring ⭐ this repo or sharing it with your friends, thanks!


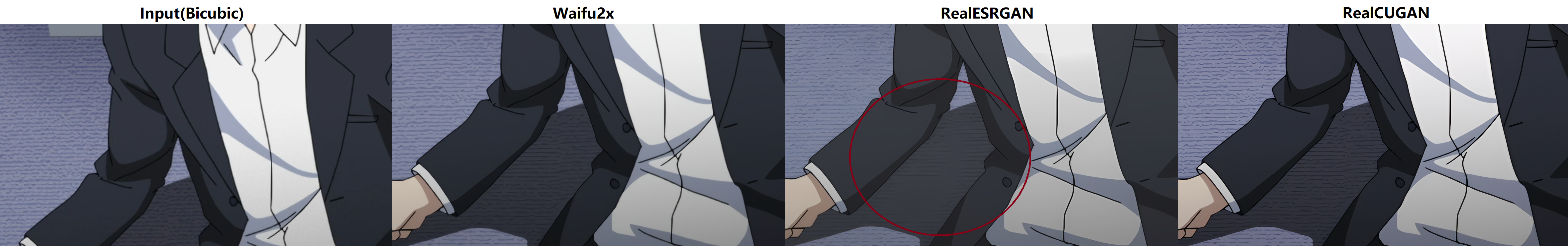

- Visual effect comparison

Comparison cases: texture challenge, line challenge, heavy artifact, and bokeh effect.

- Detailed comparison

| | Waifu2x (CUNet) | Real-ESRGAN (Anime6B) | Real-CUGAN |
|---|---|---|---|
| Training set | Private anime training set of unknown size and quality | Private anime training set of unknown size and quality | Million-scale, high-quality patch training set |
| Speed (relative to Waifu2x) | Baseline | 2.2x | 1x |
| Visual effect | Cannot deblur; artifacts are not completely removed | Strongest sharpening; may change the painting style, reconstruct lines incorrectly, and force bokeh regions into sharp focus | Sharper lines than waifu2x; bokeh regions and texture are better preserved |
| Compatibility | Numerous existing Windows apps, Caffe, PyTorch, NCNN, VapourSynth | PyTorch, VapourSynth, NCNN | Same as waifu2x |
| Enhancement | Supports multiple denoise strengths | Supports only the default enhancement strength | 5 enhancement strengths for 2x, 3 for 3x, 3 for 4x |
| SR scale | 1x + 2x | 4x | 2x + 3x + 4x supported now; a 1x model is in training |

Modify config.py, then double-click go.bat to run Real-CUGAN.


BaiduDrive (extract code: ds2a) 🔗 | GithubRelease 🔗 | GoogleDrive 🔗


- ✔️ Tested on Windows 10 64-bit.

- ✔️ CPU with SSE4 and AVX support.

- ✔️ Light version: CUDA >= 10.0 (heavy version: CUDA >= 11.1).

- ✔️ On NVIDIA cards, 1.5 GB of video memory is required.

- ❗ **Note that NVIDIA 30-series GPUs only support the heavy version. Users of NVIDIA GPUs older than the 20 series are recommended to use the light version.**


- mode: image/video.

- model_path: the path of the model weights (the 2x model has 4 weight files; the 3x/4x models have only 1 weight file each).

- device: CUDA device number. To super-resolve images on multiple GPUs, it is recommended to manually divide the tasks into different folders and fill in a different CUDA device number for each.

- Input/output paths: for an image task, fill in the input and output directory paths; for a video task, fill in the input and output video file paths.

- half: FP16 or FP32 inference. 'True' (FP16) is recommended.

- cache_mode: default 0. Memory needed: 0 > 1 >> 2 = 3; speed: 0 > 1 (+15% time) > 2 (+25% time) > 3 (+150% time). Modes 2 and 3 let you super-resolve very high-resolution images.

- tile: the larger the number, the less video memory is needed and the slower the inference.

- alpha: the larger the value, the weaker the enhancement and the blurrier the output; the smaller the value, the stronger the enhancement and the sharper the output. Default 1 (no adjustment needed). Recommended range: (0.75, 1.3).

- nt: the number of threads per GPU. If video memory allows, >= 2 is recommended for faster inference.

- n_gpu: the number of GPUs to use.

- encode_params: ffmpeg encoding parameters. If you are not familiar with ffmpeg, don't change them.
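The settings above can be summarized in a small Python sketch that checks values and estimates run time. This is not the project's config.py: `Config`, `CACHE_MODE_TIME_FACTOR`, `estimated_time`, and `split_tasks` are hypothetical names, and defaults other than cache_mode=0, alpha=1, and half=True are placeholders. The time factors come from the cache_mode description, and `split_tasks` shows one way to divide images into per-GPU folders, as suggested for the device setting.

```python
from dataclasses import dataclass
from typing import Dict, List

# Relative run time of each cache_mode vs. mode 0, from the list above:
# mode 1 is +15%, mode 2 is +25%, mode 3 is +150%.
CACHE_MODE_TIME_FACTOR: Dict[int, float] = {0: 1.00, 1: 1.15, 2: 1.25, 3: 2.50}


@dataclass
class Config:
    """Hypothetical mirror of the documented settings (not the real config.py)."""
    mode: str = "image"      # "image" or "video"
    model_path: str = ""     # path to the weight file(s); placeholder default
    device: int = 0          # CUDA device number
    half: bool = True        # FP16 inference (recommended)
    cache_mode: int = 0      # 0-3: less memory at the cost of speed
    tile: int = 0            # larger -> less video memory, slower; placeholder default
    alpha: float = 1.0       # larger -> weaker enhancement
    nt: int = 1              # threads per GPU (>= 2 if memory allows)
    n_gpu: int = 1           # number of GPUs

    def problems(self) -> List[str]:
        """Return human-readable warnings; an empty list means the values look sane."""
        issues = []
        if self.mode not in ("image", "video"):
            issues.append(f"mode must be 'image' or 'video', got {self.mode!r}")
        if self.cache_mode not in CACHE_MODE_TIME_FACTOR:
            issues.append(f"cache_mode must be 0-3, got {self.cache_mode}")
        if not 0.75 < self.alpha < 1.3:
            issues.append(f"alpha={self.alpha} is outside the recommended range (0.75, 1.3)")
        if self.nt < 1 or self.n_gpu < 1:
            issues.append("nt and n_gpu must both be at least 1")
        return issues


def estimated_time(baseline_seconds: float, cache_mode: int) -> float:
    """Scale a cache_mode=0 run time by the documented slowdown of `cache_mode`."""
    return baseline_seconds * CACHE_MODE_TIME_FACTOR[cache_mode]


def split_tasks(files: List[str], n_gpu: int) -> List[List[str]]:
    """Round-robin the input files into one group (folder) per GPU."""
    return [files[i::n_gpu] for i in range(n_gpu)]


if __name__ == "__main__":
    cfg = Config(cache_mode=2, alpha=1.1)
    print(cfg.problems())                          # []
    print(estimated_time(60.0, cfg.cache_mode))    # 75.0
    print(split_tasks(["a.png", "b.png", "c.png"], 2))  # [['a.png', 'c.png'], ['b.png']]
```

Round-robin keeps the groups within one file of each other in size. Each group would then be copied to its own input folder and run with its own device number.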

✅ torch>=1.0.0

✅ numpy

✅ opencv-python

✅ moviepy

upcunet_v3.py: model file and image inference script

inference_video.py: a simple script for running Real-CUGAN on anime videos.

Please see Readme

NCNN version: AMD graphics card users and mobile phone users can now use Real-CUGAN.

🔥 Real-CUGAN2x standard version and 🔥 Real-CUGAN2x no crop line version

BaiduDrive (extract code: ds2a) 🔗 | GithubRelease 🔗 | GoogleDrive 🔗

Users can replace the original weights with the new ones (back up the original weights first if you want to reuse them) and super-resolve images with the original settings.
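The backup step is easy to forget. A minimal Python sketch of it follows. `replace_weights` is a hypothetical helper that assumes nothing about the layout of a waifu2x-caffe install: you pass in the original weight file, the new file, and a backup folder.

```python
import shutil
from pathlib import Path


def replace_weights(target: Path, new_weights: Path, backup_dir: Path) -> Path:
    """Back up `target` into `backup_dir`, then overwrite it with `new_weights`.

    An existing backup is never overwritten, so running this twice still
    keeps the original weights. Returns the backup path.
    """
    backup_dir.mkdir(parents=True, exist_ok=True)
    backup = backup_dir / target.name
    if target.exists() and not backup.exists():
        shutil.copy2(target, backup)   # keep the original (with file metadata)
    shutil.copy2(new_weights, target)  # install the new weights in place
    return backup
```

Skipping an existing backup matters. If the function replaced it, a second run would copy the Real-CUGAN weights over the only copy of the originals.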

❗❗❗ Due to the crop mechanism of waifu2x-caffe, a large crop_size is recommended for the standard version; otherwise crop line artifacts may appear. If you see crop line artifacts at tile edges, use our Windows package or the 'no crop line' version. The tile_mode of the Windows package version is lossless. The 'no crop line' version of the waifu2x-caffe weights may cause more texture loss.

For developers, we recommend feeding the whole image as input. If you need the program to use less video memory, the PyTorch version (tile mode) is recommended.
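The trade-off between tiling and crop line artifacts can be illustrated with a toy sketch in pure Python. This is not Real-CUGAN's tiling code. The stand-in "model" `box_blur_then_upscale2x` is a 3x3 blur followed by 2x nearest-neighbor upscaling. `tiled_inference`, `tile_size`, and `pad` are hypothetical names. Each tile is processed with `pad` pixels of surrounding context, and only its core is kept. The stand-in model sees one pixel in each direction, so `pad >= 1` reproduces the whole-image result exactly. With `pad = 0`, the output differs along tile edges, which is the kind of crop line artifact described above. Here `tile_size` is a tile edge length, so a smaller value means less memory per call. This is the opposite direction from the `tile` setting in config.py.

```python
from typing import Callable, List

Image = List[List[float]]  # grayscale image as rows of pixel values


def box_blur_then_upscale2x(img: Image) -> Image:
    """Stand-in model: 3x3 box blur (edge-clamped), then 2x nearest upscaling.
    Its receptive field has radius 1, like a very small CNN."""
    h, w = len(img), len(img[0])
    blurred = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                    for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            row.append(sum(vals) / 9.0)
        blurred.append(row)
    out = []
    for row in blurred:
        up = [v for v in row for _ in range(2)]  # duplicate each pixel horizontally
        out.append(up)
        out.append(list(up))                     # and each row vertically
    return out


def tiled_inference(img: Image, model: Callable[[Image], Image],
                    tile_size: int, pad: int, scale: int = 2) -> Image:
    """Run `model` tile by tile, giving each tile `pad` pixels of context."""
    h, w = len(img), len(img[0])
    out = [[0.0] * (w * scale) for _ in range(h * scale)]
    for y0 in range(0, h, tile_size):
        for x0 in range(0, w, tile_size):
            y1, x1 = min(y0 + tile_size, h), min(x0 + tile_size, w)
            # Cut the tile plus `pad` pixels of context, clipped at the image border.
            cy0, cx0 = max(y0 - pad, 0), max(x0 - pad, 0)
            cy1, cx1 = min(y1 + pad, h), min(x1 + pad, w)
            patch = [row[cx0:cx1] for row in img[cy0:cy1]]
            up = model(patch)
            # Keep only the upscaled pixels that belong to the tile core.
            for yy in range(y0 * scale, y1 * scale):
                for xx in range(x0 * scale, x1 * scale):
                    out[yy][xx] = up[yy - cy0 * scale][xx - cx0 * scale]
    return out
```

A real CNN has a much larger receptive field, so its margin must be correspondingly larger. Presumably this is why larger crops help with the standard waifu2x-caffe weights.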

You can download the weights from netdisk links.

| | 1x | 2x | 3x/4x |
|---|---|---|---|
| Denoise strength | Only a no-denoise version; training... | No denoise/1x/2x/3x supported now | No denoise/3x supported now; 1x/2x training... |
| Conservative version | Training... | Supported now | |
| Fast model | Under investigation | | |
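The availability shown in the table can be encoded as a small lookup that fails early when no weights exist yet for a requested combination. This is a hypothetical helper, not part of the repo. The variant labels and the `up{scale}x-{variant}.pth` file-name pattern are illustrative assumptions. Entries marked "training..." (including every 1x model) are treated as unavailable, and so is the blank 3x/4x conservative cell. Extend `AVAILABLE` if your release includes more weights.

```python
from typing import Dict, Tuple

# Transcribed from the table above; "training..." and blank cells are excluded.
AVAILABLE: Dict[int, Tuple[str, ...]] = {
    2: ("no-denoise", "denoise1x", "denoise2x", "denoise3x", "conservative"),
    3: ("no-denoise", "denoise3x"),
    4: ("no-denoise", "denoise3x"),
}


def weight_name(scale: int, variant: str) -> str:
    """Return a weight file name for (scale, variant), or raise ValueError.

    The naming pattern is hypothetical; map it onto the files you downloaded.
    """
    if scale not in AVAILABLE:
        raise ValueError(f"no released {scale}x weights; supported scales: {sorted(AVAILABLE)}")
    if variant not in AVAILABLE[scale]:
        options = ", ".join(AVAILABLE[scale])
        raise ValueError(f"{scale}x weights available: {options}; got {variant!r}")
    return f"up{scale}x-{variant}.pth"


print(weight_name(2, "denoise2x"))  # up2x-denoise2x.pth
```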

- Lightweight/fast version

- Low (video card) memory mode

- Adjustable denoise, deblur, sharpening strength

- Super-resolve images to a specified resolution end to end

- Optimize texture retention and reduce AI processing artifacts

- Simple GUI

The training code is based on, but not limited to, RealESRGAN.

The original waifu2x-cunet architecture is from: CUNet.

Update progress:

- Windows GUI (PyTorch version), Squirrel Anime Enhance v0.0.3

- nihui implemented RealCUGAN-NCNN. AMD graphics card users and mobile phone users can now use Real-CUGAN.

- Web service (CPU-PyTorch version), by mnixry

Thanks for their contribution!