fsspark is a Python module for feature selection and machine learning built on Spark.

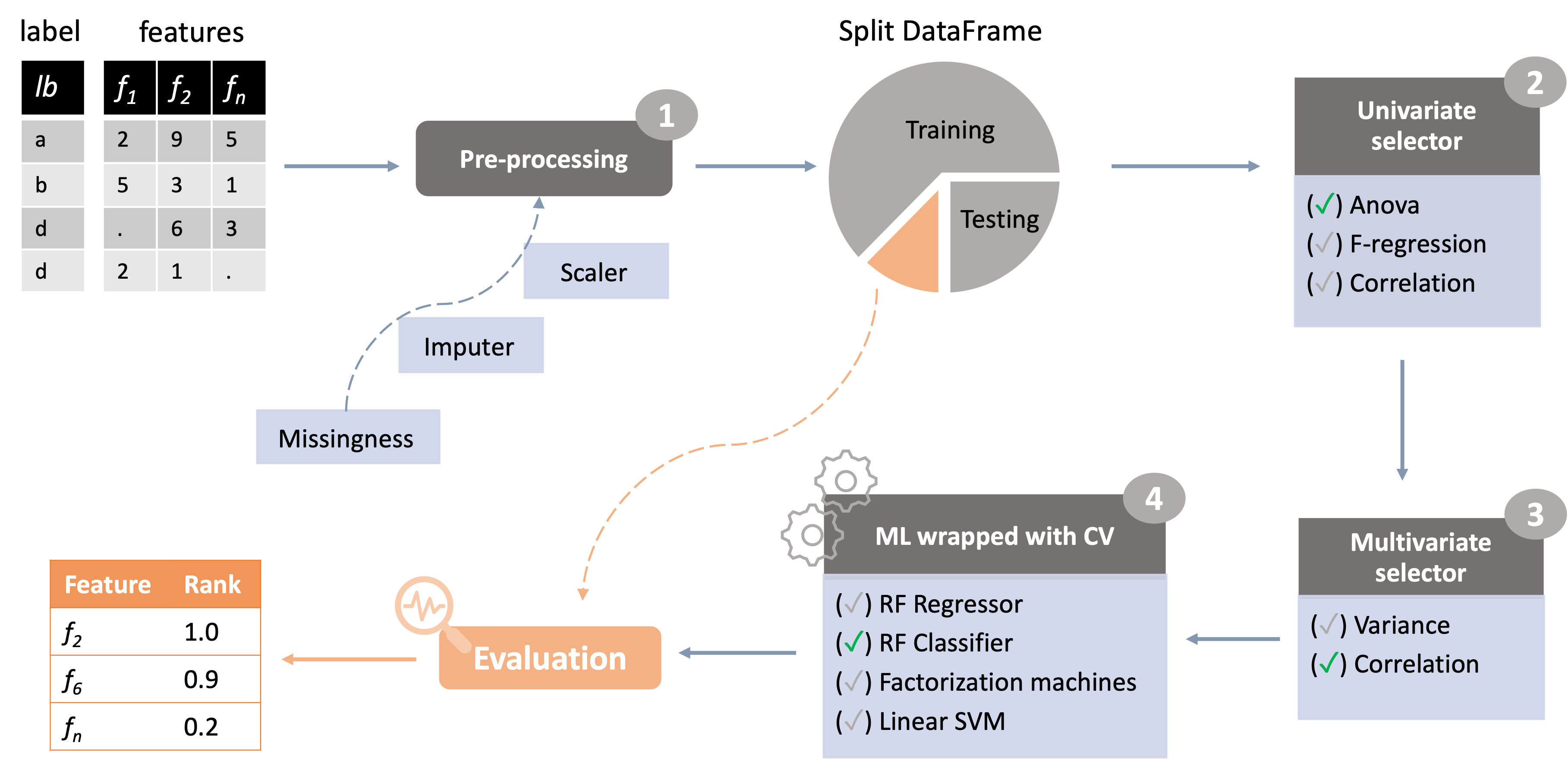

Pipelines written with fsspark can be roughly divided into four major stages: 1) data pre-processing, 2) univariate filters, 3) multivariate filters, and 4) machine learning wrapped with cross-validation (Figure 1).

Figure 1. Feature selection workflow example implemented in fsspark.
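To make the four stages concrete, here is a minimal sketch in plain Python using only the standard library. It does not use the fsspark API or Spark: every function name, the synthetic data, and the specific choices (Pearson correlation as the univariate score, a pairwise correlation threshold as the multivariate filter, a nearest-centroid classifier) are assumptions made for illustration only.

```python
# Illustrative sketch of a four-stage feature selection pipeline.
# Plain Python, NOT the fsspark API; all names here are hypothetical.
import random
import statistics


def standardize(rows):
    """Stage 1 (pre-processing): scale each column to zero mean, unit variance."""
    cols = list(zip(*rows))
    means = [statistics.fmean(c) for c in cols]
    stds = [statistics.pstdev(c) or 1.0 for c in cols]  # guard constant columns
    return [[(v - m) / s for v, m, s in zip(r, means, stds)] for r in rows]


def pearson(x, y):
    """Pearson correlation of two sequences (0.0 if either is constant)."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5 if sxx and syy else 0.0


def univariate_filter(rows, labels, k):
    """Stage 2: keep the k features with the largest |correlation| to the label."""
    cols = list(zip(*rows))
    ranked = sorted(range(len(cols)), key=lambda j: -abs(pearson(cols[j], labels)))
    return sorted(ranked[:k])  # return indices in column order


def multivariate_filter(rows, features, threshold):
    """Stage 3: drop a feature that is highly correlated with one already kept."""
    cols = list(zip(*rows))
    kept = []
    for j in features:
        if all(abs(pearson(cols[j], cols[i])) < threshold for i in kept):
            kept.append(j)
    return kept


def cross_validate(rows, labels, features, folds):
    """Stage 4: k-fold cross-validated accuracy of a nearest-centroid classifier."""
    n = len(rows)
    correct = 0
    for f in range(folds):
        test_idx = set(range(f, n, folds))  # every folds-th sample is held out
        centroids = {}
        for c in set(labels):
            members = [rows[i] for i in range(n)
                       if i not in test_idx and labels[i] == c]
            centroids[c] = [statistics.fmean([m[j] for m in members])
                            for j in features]
        for i in test_idx:
            point = [rows[i][j] for j in features]
            pred = min(centroids, key=lambda c: sum(
                (p - q) ** 2 for p, q in zip(point, centroids[c])))
            correct += pred == labels[i]
    return correct / n


# Synthetic data: feature 0 is informative, feature 1 is a near-duplicate
# of feature 0 (redundant), features 2 and 3 are pure noise.
rng = random.Random(0)
labels = [i % 2 for i in range(40)]
rows = []
for y in labels:
    signal = 4.0 * y + rng.gauss(0, 1)
    rows.append([signal, 2.0 * signal + rng.gauss(0, 0.01),
                 rng.gauss(0, 1), rng.gauss(0, 1)])

scaled = standardize(rows)                                   # stage 1
top = univariate_filter(scaled, labels, k=2)                 # stage 2
selected = multivariate_filter(scaled, top, threshold=0.9)   # stage 3
accuracy = cross_validate(scaled, labels, selected, folds=5) # stage 4
print(top, selected, accuracy)
```

In this toy setup the univariate filter keeps the two label-correlated features and the multivariate filter then discards the redundant one. For simplicity the sketch scales and filters on the full dataset before cross-validation; a careful evaluation would fit those steps inside each training fold to avoid leaking information from the held-out samples.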


The package documentation describes the data structures and feature selection methods implemented in fsspark.

- pip

      git clone https://github.com/enriquea/fsspark.git
      cd fsspark
      pip install . -r requirements.txt

- conda

      git clone https://github.com/enriquea/fsspark.git
      cd fsspark
      conda env create -f environment.yml
      conda activate fsspark-venv
      pip install . -r requirements.txt

- Enrique Audain (https://github.com/enriquea)

- Yasset Perez-Riverol (https://github.com/ypriverol)