This repository contains the code for the paper Self-supervised Representation Learning for Evolutionary Neural Architecture Search.

If you use the code, please cite our paper:

@article{Chen2020SSNENAS,
  title={Self-supervised Representation Learning for Evolutionary Neural Architecture Search},
  author={Chen Wei and Yiping Tang and Chuang Niu and Haihong Hu and Yue Wang and Jimin Liang},
  journal={ArXiv},
  year={2020},
  volume={abs/2011.00186}
}

- Python 3.7
- PyTorch 1.3
- TensorFlow 1.14.0
- ptflops: `pip install --upgrade git+https://github.com/sovrasov/flops-counter.pytorch.git`
- torch-scatter: `pip install torch-scatter==1.4.0`
- torch-sparse: `pip install torch-sparse==0.4.3`
- torch-cluster: `pip install torch-cluster==1.4.5`
- torch-spline-conv: `pip install torch-spline-conv==1.1.1`
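Because the extension packages above are pinned to exact versions, it can help to confirm what is actually installed before running anything. The following is a minimal sketch, not part of the repository: the helper names are hypothetical, it only checks the four packages with exact pins, and it uses `importlib.metadata` (Python 3.8+), falling back to the separately installed `importlib_metadata` backport on Python 3.7.

```python
"""Compare installed package versions with the exact pins listed above.

Illustrative sketch: helper names are hypothetical. importlib.metadata needs
Python 3.8+; on Python 3.7 the importlib_metadata backport must be installed.
"""
try:
    from importlib.metadata import PackageNotFoundError, version
except ImportError:  # Python 3.7: fall back to the backport package
    from importlib_metadata import PackageNotFoundError, version

# Exact pins taken from the prerequisite list above.
PINS = {
    "torch-scatter": "1.4.0",
    "torch-sparse": "0.4.3",
    "torch-cluster": "1.4.5",
    "torch-spline-conv": "1.1.1",
}


def installed_versions(names):
    """Map each distribution name to its installed version, or None if absent."""
    result = {}
    for name in names:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


def find_mismatches(installed, pins):
    """Return {name: (expected, found)} for every pin that is missing or differs."""
    mismatches = {}
    for name, expected in pins.items():
        found = installed.get(name)
        if found != expected:
            mismatches[name] = (expected, found)
    return mismatches


if __name__ == "__main__":
    problems = find_mismatches(installed_versions(PINS), PINS)
    for name, (expected, found) in problems.items():
        print(f"{name}: expected {expected}, found {found}")
    if not problems:
        print("All pinned packages match.")
```

Splitting the lookup from the comparison keeps `find_mismatches` a pure function, so it can be reused with a version listing taken from another machine.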

- Ubuntu 18.04
- CUDA 10.0
- cuDNN 7.5.1

git clone https://github.com/auroua/SSNENAS

cd SSNENAS

- Download the NASBench-101 dataset first. We only use the `nasbench_only108.tfrecord` file.
- Set the variable `nas_bench_101_base_path` in `config.py` to point to the folder containing the file `nasbench_only108.tfrecord`.
- Run the following command to generate the data files required by the code:

python nas_lib/data/nasbench_101_init.py

- Download the NASBench-201 dataset first. In this experiment, we use the NASBench-201 dataset version `v1_1-096897`; the file name is `NAS-Bench-201-v1_1-096897.pth`.
- Set the variable `nas_bench_201_base_path` in `config.py` to point to the folder containing the file `NAS-Bench-201-v1_1-096897.pth`.
- Run the following commands to generate the data files required by the code:

python nas_lib/data/nasbench_201_init.py --dataname cifar10-valid

python nas_lib/data/nasbench_201_init.py --dataname cifar100

python nas_lib/data/nasbench_201_init.py --dataname ImageNet16-120

Run the following commands to train, using the RL method (`tools_predictors/train_predictor_rl.py`), the model that contains the architecture embedding part of the neural predictor.

# save_dir: the output of model weights

python tools_predictors/train_predictor_rl.py --save_dir '/home/albert_wei/Disk_A/train_output_ssne_nas/test/' --search_space 'nasbench_101'

# save_dir: the output of model weights

# dataname: [`cifar10-valid`, `cifar100`, `ImageNet16-120`]

python tools_predictors/train_predictor_rl.py --save_dir '/home/albert_wei/Disk_A/train_output_ssne_nas/test/' --search_space 'nasbench_201' --dataname 'cifar10-valid'

Alternatively, run the following commands to train the model that contains the architecture embedding part of the neural predictor using the CCL method (`tools_predictors/train_predictor_ccl.py`).

# batch-size: Parameter N in Algorithm 1. The training batch size, which determines the size of the negative pairs. If you are using multiple GPUs, multiply batch-size by the GPU count.
# train_samples: Parameter M in Algorithm 1.
# min_negative_size: Determines the number of negative pairs; it is slightly smaller than batch-size.
# multiprocessing-distributed: Set to True when using multiple GPUs, otherwise False.
# gpu: Set to None when using multiple GPUs, otherwise 0.

python tools_predictors/train_predictor_ccl.py --search_space 'nasbench_101' --batch-size 40000 --train_samples 20000 --batch_step 1000 --min_negative_size 39850 --gpu 0 --multiprocessing-distributed False --save_dir '/home/albert_wei/Disk_A/train_output_ssne_nas/test/'

# batch-size: Parameter N in Algorithm 1. The training batch size, which determines the size of the negative pairs. If you are using multiple GPUs, multiply batch-size by the GPU count.
# train_samples: Parameter M in Algorithm 1.
# min_negative_size: Determines the number of negative pairs; it is slightly smaller than batch-size.
# multiprocessing-distributed: Set to True when using multiple GPUs, otherwise False.
# gpu: Set to None when using multiple GPUs, otherwise 0.

python tools_predictors/train_predictor_ccl.py --search_space 'nasbench_201' --batch-size 10000 --train_samples 5000 --batch_step 1000 --min_negative_size 9850 --gpu 0 --multiprocessing-distributed False --save_dir '/home/albert_wei/Disk_A/train_output_ssne_nas/test/'

After the above pre-training, update the parameters `ss_rl_nasbench_101`, `ss_rl_nasbench_201`, `ss_ccl_nasbench_101`, and `ss_ccl_nasbench_201` to point to the pre-trained model files generated by the above commands.
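The comments for the CCL pre-training commands tie several flags together: `--batch-size` is multiplied by the GPU count for multi-GPU runs, `--min_negative_size` is slightly smaller than `--batch-size` (150 smaller in the NASBench-101 and NASBench-201 examples, 100 smaller in the DARTS example), and `--multiprocessing-distributed` and `--gpu` depend on whether several GPUs are used. The sketch below derives mutually consistent values from those rules. The helper functions and the `negative_margin` parameter are hypothetical, and two points are assumptions: that the margin is subtracted from the total (multiplied) batch size, and that "set `--gpu` to None" means leaving the flag unset.

```python
"""Derive consistent CCL pre-training flags (illustrative sketch).

Encodes the rules from the command comments above. The helper names and the
negative_margin parameter are hypothetical, not part of the repository.
"""


def ccl_flags(per_gpu_batch_size, num_gpus, negative_margin, train_samples):
    """Return flag values for tools_predictors/train_predictor_ccl.py.

    per_gpu_batch_size: single-GPU batch size (parameter N).
    num_gpus: number of GPUs; batch-size is multiplied by this count.
    negative_margin: how much smaller min_negative_size is than batch-size
        (assumption: applied to the total, multiplied batch size).
    train_samples: parameter M in Algorithm 1.
    """
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    batch_size = per_gpu_batch_size * num_gpus
    min_negative_size = batch_size - negative_margin
    if not 0 < min_negative_size < batch_size:
        raise ValueError("min_negative_size must be positive and below batch-size")
    multi_gpu = num_gpus > 1
    flags = {
        "--batch-size": batch_size,
        "--train_samples": train_samples,
        "--min_negative_size": min_negative_size,
        "--multiprocessing-distributed": "True" if multi_gpu else "False",
    }
    if not multi_gpu:
        # Single GPU: pin the run to GPU 0, as in the examples above.
        flags["--gpu"] = "0"
    # Multiple GPUs: --gpu is left unset (assumed to be equivalent to None).
    return flags


def to_args(flags):
    """Flatten a flag dict into a command-line argument list."""
    args = []
    for key, value in flags.items():
        args.extend([key, str(value)])
    return args


if __name__ == "__main__":
    # Reproduces the single-GPU NASBench-101 example values.
    print(to_args(ccl_flags(40000, 1, 150, 20000)))
```

For example, `ccl_flags(40000, 1, 150, 20000)` yields the single-GPU NASBench-101 values used above (`--batch-size 40000`, `--min_negative_size 39850`, `--gpu 0`), and `to_args` turns the result into a list that could be appended after `python tools_predictors/train_predictor_ccl.py` together with the remaining flags.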

# predictor_list: The predictor types, given as a list.
# load_dir: The pre-trained model paths, corresponding to the predictor types.

python tools_predictors/predictor_finetune.py --predictor_list 'SS_RL' 'SS_CCL' --search_space 'nasbench_101' --save_dir '/home/albert_wei/Disk_A/train_output_ssne_nas/test/'

# predictor_list: The predictor types, given as a list.
# load_dir: The pre-trained model paths, corresponding to the predictor types.
# dataname: [`cifar10-valid`, `cifar100`, `ImageNet16-120`]

python tools_predictors/predictor_finetune.py --predictor_list 'SS_RL' 'SS_CCL' --search_space 'nasbench_201' --save_dir '/home/albert_wei/Disk_A/train_output_ssne_nas/test/'

Run the following command to visualize the comparison of the algorithms:

# result_path: the save_dir of the above commands.

python tools_predictors/predictor_finetune.py --result_path '/home/albert_wei/Disk_A/train_output_ssne_nas/test/'

Run the following commands to perform the architecture search:

# gpus: the number of GPUs used for the search.
# save_dir: the output path.

python tools_nas/close_domain/train_multiple_gpus_close_domain.py --trials 600 --search_space nasbench_101 --algo_params nasbench_101 --gpus 1 --save_dir /home/albert_wei/Disk_A/train_output_ssne_nas/test/

# gpus: the number of GPUs used for the search.
# save_dir: the output path.

python tools_nas/close_domain/train_multiple_gpus_close_domain.py --trials 600 --search_space nasbench_101 --algo_params nasbench_101_fixed --gpus 1 --save_dir /home/albert_wei/Disk_A/train_output_ssne_nas/test/

# gpus: the number of GPUs used for the search.
# save_dir: the output path.
# dataname: [`cifar10-valid`, `cifar100`, `ImageNet16-120`]

python tools_nas/close_domain/train_multiple_gpus_close_domain.py --trials 600 --search_space nasbench_201 --algo_params nasbench_201 --dataname 'cifar10-valid' --gpus 1 --save_dir /home/albert_wei/Disk_A/train_output_ssne_nas/test/

Run the following command to visualize the comparison of the algorithms. Change save_dir to the save_dir used in the above step.

python tools_nas/close_domain/visualize_results.py --search_space nasbench_201 --save_dir /home/albert_wei/Disk_A/train_output_npenas/npenas_201/ --draw_type ERRORBAR

# search_space: ['nasbench_101', 'nasbench_201']

python tools_predictors/encoding_compare.py --search_space nasbench_201

# Modify the save_dir parameter.

python tools_predictors/predictor_comparison.py --search_space nasbench_101 --gpu 0 --save_dir /home/aurora/data_disk_new/train_output_2021/darts_save_path/

# Modify the save_dir parameter.

python tools_predictors/predictor_comparison_ged.py --gpu 0 --save_dir /home/aurora/data_disk_new/train_output_2021/darts_save_path/

# Modify the save_dir parameter.

python tools_darts/gen_darts_archs.py --nums 50000 --save_dir '/home/aurora/data_disk_new/train_output_2021/darts_save_path/darts.pkl'

# save_dir: points to the folder that contains the genotype files generated by the above step.

python tools_darts/convert_gen_data_to_architectures.py --save_dir /home/aurora/data_disk_new/train_output_2021/darts_save_path/models/

# darts_arch_path: points to the output generated by the above step.
# The meanings of the parameters batch-size, train_samples, and min_negative_size are given in our paper.

python tools_predictors/train_predictor_ccl.py --darts_arch_path /home/aurora/data_disk_new/train_output_2021/darts_save_path/architectures/part3_partial.pkl --save_dir /home/aurora/data_disk_new/train_output_2021/darts_save_path/ -b 10000 --train_samples 4000 -bs 500 --min_negative_size 9900

# darts_arch_path: points to the output generated by the above step.
# The meanings of the parameters batch-size, train_samples, and min_negative_size are given in our paper.

python tools_darts/train_multiple_gpus_open_domain.py --gpus 1 --seed 11 --budget 100 --save_dir /home/aurora/data_disk_new/train_output_2021/darts_save_path/

The number of neural architectures evaluated can be modified via the parameter `fixed_num` at line 171 of `nas_lib/params.py`.

For how to rank the search results, train the best model, and evaluate it, refer to NPENAS.

model_name: the id of the searched architecture

save_dir: the model file should be in a folder named `model_pkl`; this path points to the directory that contains the `model_pkl` folder.

model_path: this path points to the saved weights file.

python tools_darts/test_darts_cifar10.py --model_name e678ee620e52436f6e2f36d0396c1f9baf994a78e64242d629aad927b0eb0057.pkl --save_dir /home/albert_wei/Desktop/CIM-Revise-R2/ssnenas_darts_results_new/models/ --model_path /home/albert_wei/Desktop/CIM-Revise-R2/ssnenas_darts_results_new/seed_1/model_best.pth.tar
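Going by the parameter descriptions above, the test script expects the architecture file `<model_name>` inside a `model_pkl` folder under `save_dir`, plus a separate weights file at `model_path`. The sketch below checks that layout before assembling the command. The helper names are hypothetical, and joining the path as `save_dir/model_pkl/model_name` is an assumption about how the script resolves the file.

```python
"""Check the file layout expected by tools_darts/test_darts_cifar10.py.

Illustrative sketch: the helper names are hypothetical, and the
<save_dir>/model_pkl/<model_name> layout is inferred from the README text.
"""
import os


def expected_genotype_path(save_dir, model_name):
    """Path where the searched architecture file is assumed to live."""
    return os.path.join(save_dir, "model_pkl", model_name)


def build_test_command(save_dir, model_name, model_path, check_files=True):
    """Return the test command as an argument list, optionally checking files."""
    if check_files:
        for path in (expected_genotype_path(save_dir, model_name), model_path):
            if not os.path.isfile(path):
                raise FileNotFoundError(path)
    return [
        "python", "tools_darts/test_darts_cifar10.py",
        "--model_name", model_name,
        "--save_dir", save_dir,
        "--model_path", model_path,
    ]
```

Run from the repository root, the returned list can be passed to `subprocess.run(cmd, check=True)`; failing early with `FileNotFoundError` gives a clearer message than a missing-file error deep inside model loading.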

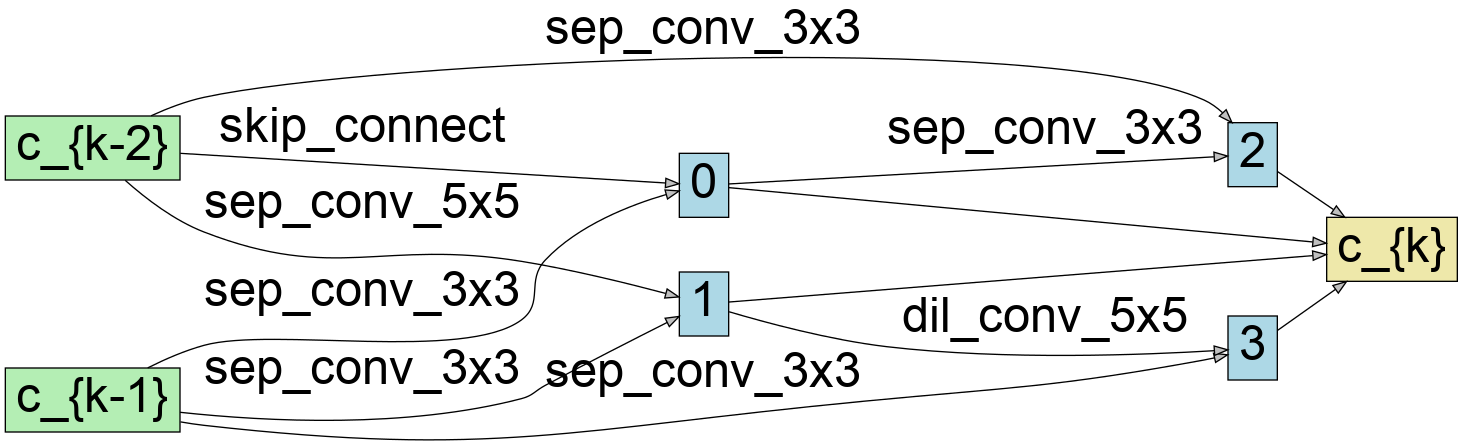

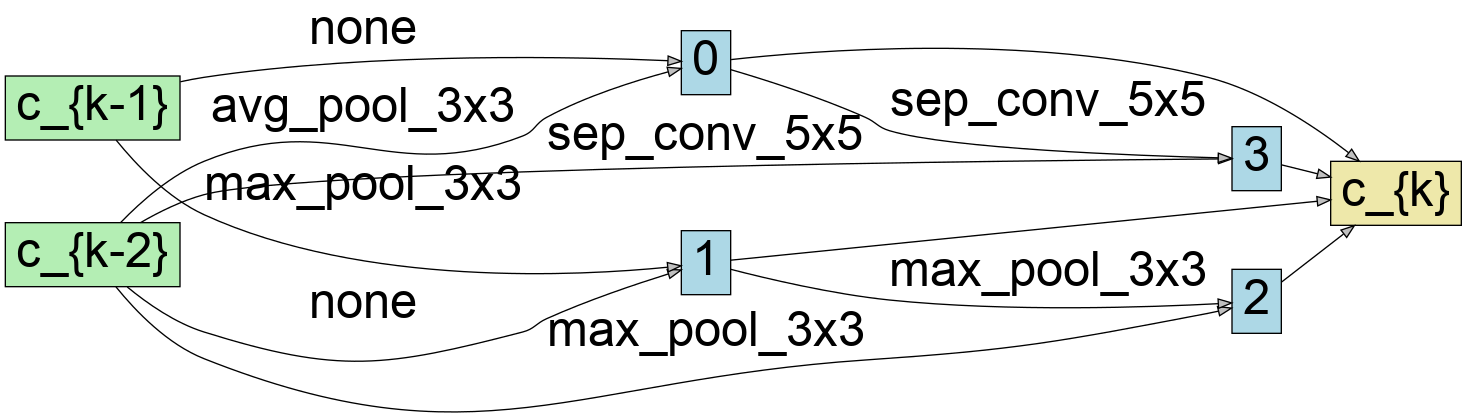

Visualization of the searched normal cell and reduction cell. This architecture achieves a test error of 2.41%.

You can download the best architecture's genotype file from genotype (extraction code 2h3y). The retrained weight file is available at pth (extraction code wxpc).

You can use the command in the section Test the retrained architecture to verify the model.

| CPU | GPU | Memory Size |
|---|---|---|
| 2 × Intel Xeon E5-2650 v4 | 6 × Titan V | 128 GB |

| Model type | Link | Password |
|---|---|---|
| ss_ccl_nasbench_101_140k | link | dvba |
| ss_ccl_nasbench_201 | link | 7q4f |
| ss_ccl_darts | link | w6k7 |
| ss_rl_nasbench_101_300 | link | kjtb |
| ss_rl_nasbench_201_1000 | link | p447 |
| ss_rl_nasbench_201_wo_normalization | link | tqmb |
| ss_ccl_nasbench_101_10k | link | nr8h |
| ss_ccl_nasbench_101_40k | link | qy3u |
| ss_ccl_nasbench_101_70k | link | tcjy |
| ss_ccl_nasbench_101_100k | link | f5jj |

| Experiment | Visualization script* | Link | Password |
|---|---|---|---|
| predictor_finetune_nasbench_101 | tools_predictors/visualize/visualize_predictor_finetune.py | link | r94w |
| predictor_finetune_nasbench_201 | tools_predictors/visualize/visualize_predictor_finetune.py | link | u5u2 |
| predictor_batch_size_compare | tools_predictors/visualize/visualize_predictor_comparision_batch_size.py | link | 24r3 |
| predictive_performance_comparison | tools_predictors/visualize/visualize_predictors_predictive_performance_comparison.py | link | ebrh |
| predictor_normalize_ged | tools_predictors/visualize/visualize_predictor_normalized_ged_comparison.py | link | h5zc |
| npenas_fixed_nasbench_101 | tools_nas/close_domain/visualize_results.py | link | ap6f |
| npenas_fixed_nasbench_201_cifar10 | tools_nas/close_domain/visualize_results.py | link | rk9z |
| npenas_fixed_nasbench_201_cifar100 | tools_nas/close_domain/visualize_results.py | link | nptc |
| npenas_fixed_nasbench_201_Imagenet | tools_nas/close_domain/visualize_results.py | link | m16v |
| npenas_fixed_nasbench_101_batch_size_compare | tools_nas/close_domain/visualize_results.py | link | xdn4 |
| darts_results | | link | 39xz |
| darts_results_new | | link | ikvy |

\* Modify the parameters of the visualization script to view the results.

- bananas

- pytorch_geometric

- NAS-Bench-101

- NAS-Bench-201

- MoCo

- Semi-Supervised Neural Architecture Search

- NPENAS

Chen Wei
