A guide to, and implementation of, architectural patterns that use Large Language Models (LLMs) to generate SQL from natural language text.

For details on the architectural patterns described below, see the accompanying article on Medium, which covers the use of LLMs for enhanced BigQuery interactions. You can access the article via this link.

Architectural Patterns for Text-to-SQL: Leveraging LLMs for Enhanced BigQuery Interactions

- Introduction

- Architectural Patterns

- Getting Started

- Challenges and Limitations (In Progress)

- Contributing

- License

This repository explores Text-to-SQL conversion, with a focus on how LLMs can improve the SQL generation process. LLMs make it possible to streamline the translation of natural language into SQL, and the sections below describe several architectural patterns that build on that capability.

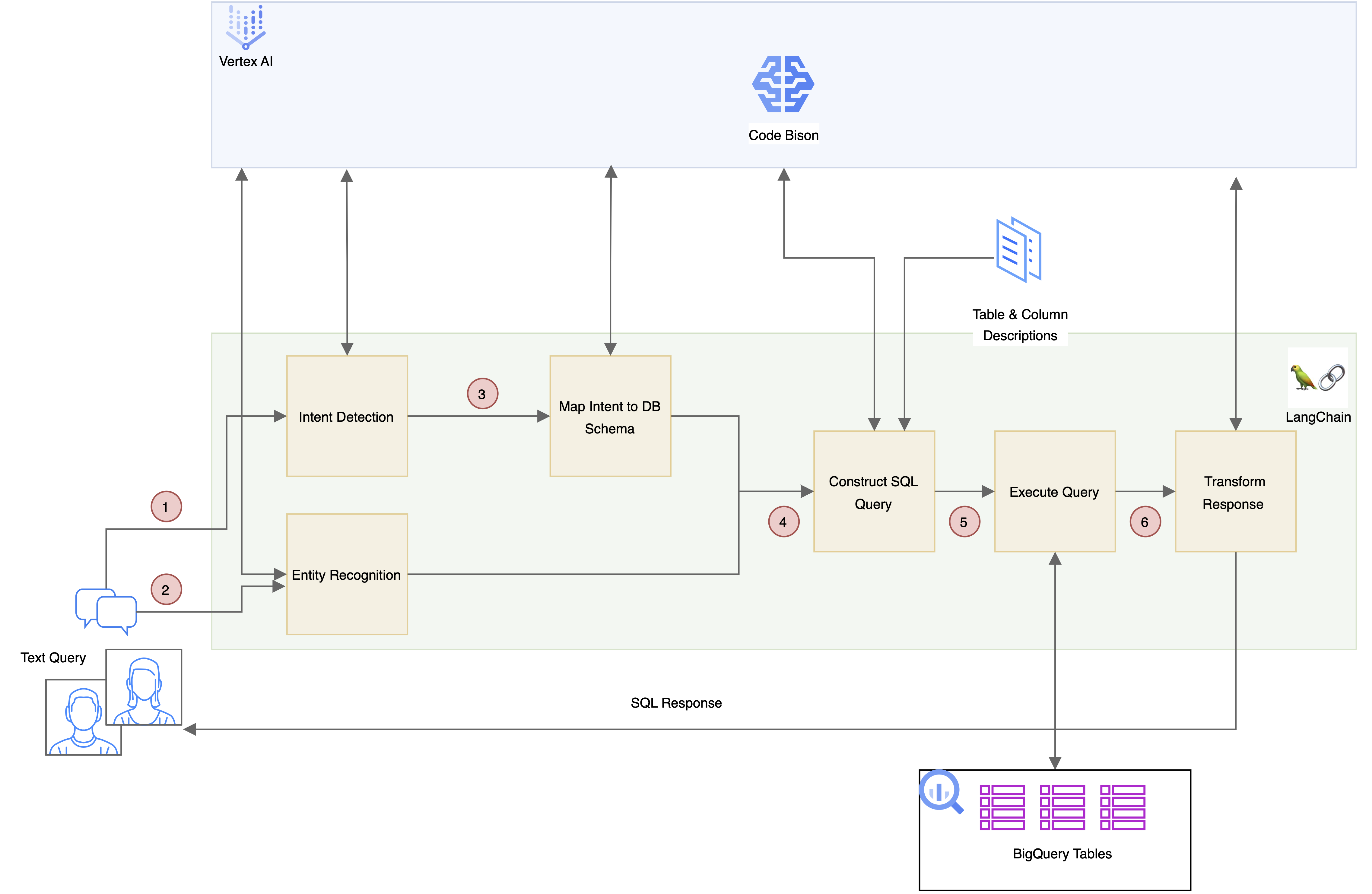

Utilizing LLMs to identify intent, extract entities, and subsequently generate SQL.
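The flow described above can be sketched as two chained model calls. This is a minimal illustration, not the repository's implementation: `text_to_sql`, the prompt wording, and `fake_llm` (a canned stand-in for a real model call) are all assumptions made for the example.

```python
import json
from typing import Callable


def text_to_sql(question: str, schema: str, llm: Callable[[str], str]) -> str:
    """Two LLM calls: extract intent and entities, then generate SQL from them."""
    extraction_prompt = (
        "Return JSON with keys 'intent' and 'entities' for this question.\n"
        f"Question: {question}"
    )
    parsed = json.loads(llm(extraction_prompt))

    sql_prompt = (
        f"Schema:\n{schema}\n"
        f"Intent: {parsed['intent']}\n"
        f"Entities: {json.dumps(parsed['entities'])}\n"
        "Write one BigQuery SQL query that answers the question."
    )
    return llm(sql_prompt).strip()


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call, returning canned replies."""
    if prompt.startswith("Return JSON"):
        return '{"intent": "aggregate", "entities": {"table": "orders", "metric": "total"}}'
    return "SELECT SUM(total) FROM orders"


query = text_to_sql("What is the total revenue?", "orders(id, total)", fake_llm)
```

Passing the model as a plain callable keeps the sketch independent of any particular SDK; a real client would be wrapped in a function with the same signature.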

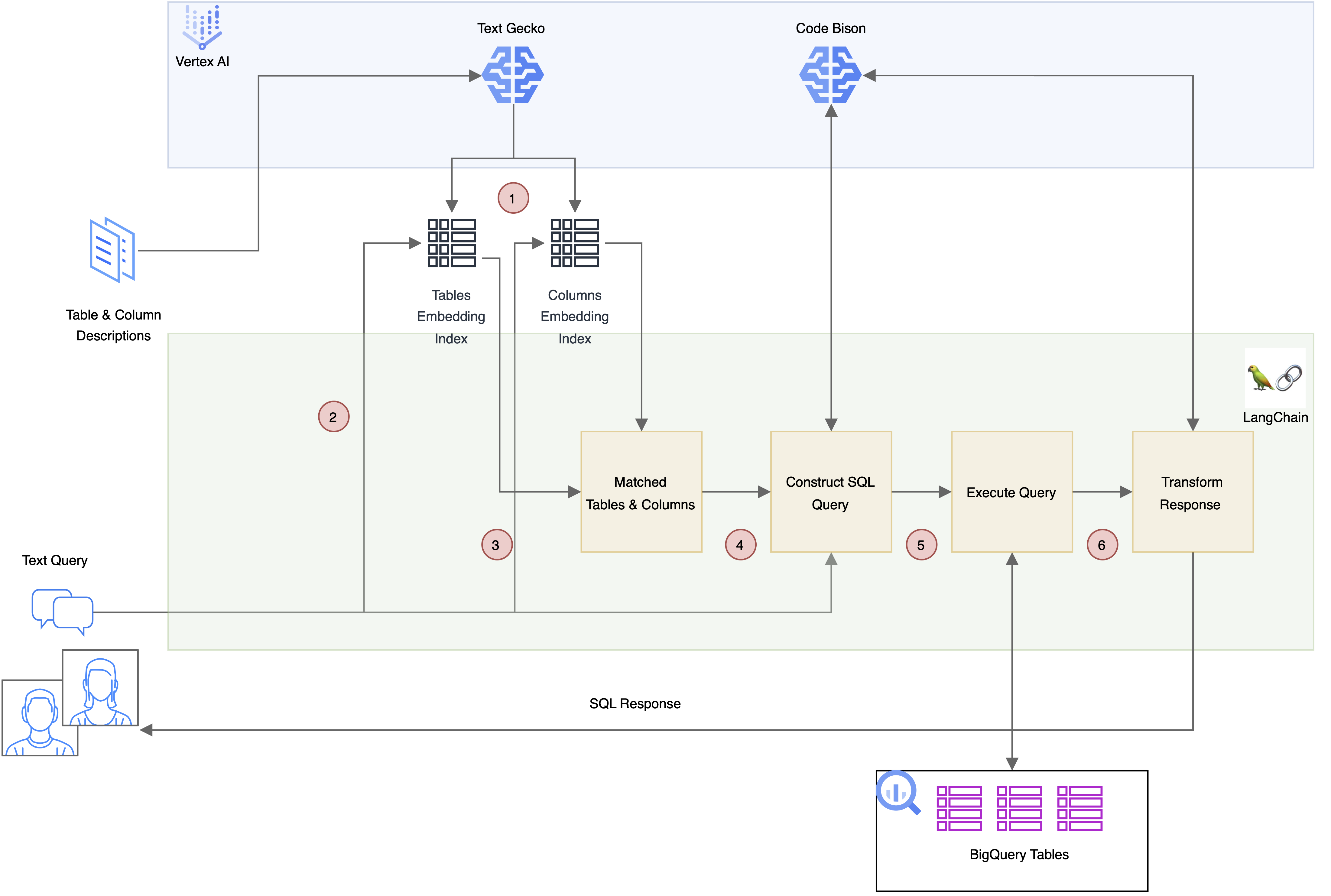

Incorporating Retrieval-Augmented Generation (RAG) with table and column metadata to pinpoint relevant database schema elements, allowing LLMs to formulate queries based on natural language questions.
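A rough sketch of the retrieval step follows. It assumes a small, hypothetical `SCHEMA_DOCS` catalog and uses plain word overlap as a stand-in for the embedding-based similarity search a real retriever would likely use; the function names are illustrative only.

```python
import re

# Hypothetical metadata; in practice this would come from the warehouse catalog.
SCHEMA_DOCS = {
    "orders": "orders: order_id, customer_id, order_date, total amount of each order",
    "customers": "customers: customer_id, name, country, signup date",
    "products": "products: product_id, title, category, price",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve_tables(question: str, k: int = 2) -> list[str]:
    """Rank tables by word overlap with the question (stand-in for vector search)."""
    q = tokenize(question)
    ranked = sorted(
        SCHEMA_DOCS,
        key=lambda name: len(q & tokenize(SCHEMA_DOCS[name])),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, k: int = 2) -> str:
    """Place only the retrieved schema entries in the prompt sent to the LLM."""
    context = "\n".join(SCHEMA_DOCS[t] for t in retrieve_tables(question, k))
    return f"Relevant schema:\n{context}\n\nQuestion: {question}\nSQL:"
```

Only the top `k` schema entries reach the prompt, which is the point of the pattern: the model sees the relevant tables and columns rather than the whole catalog.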

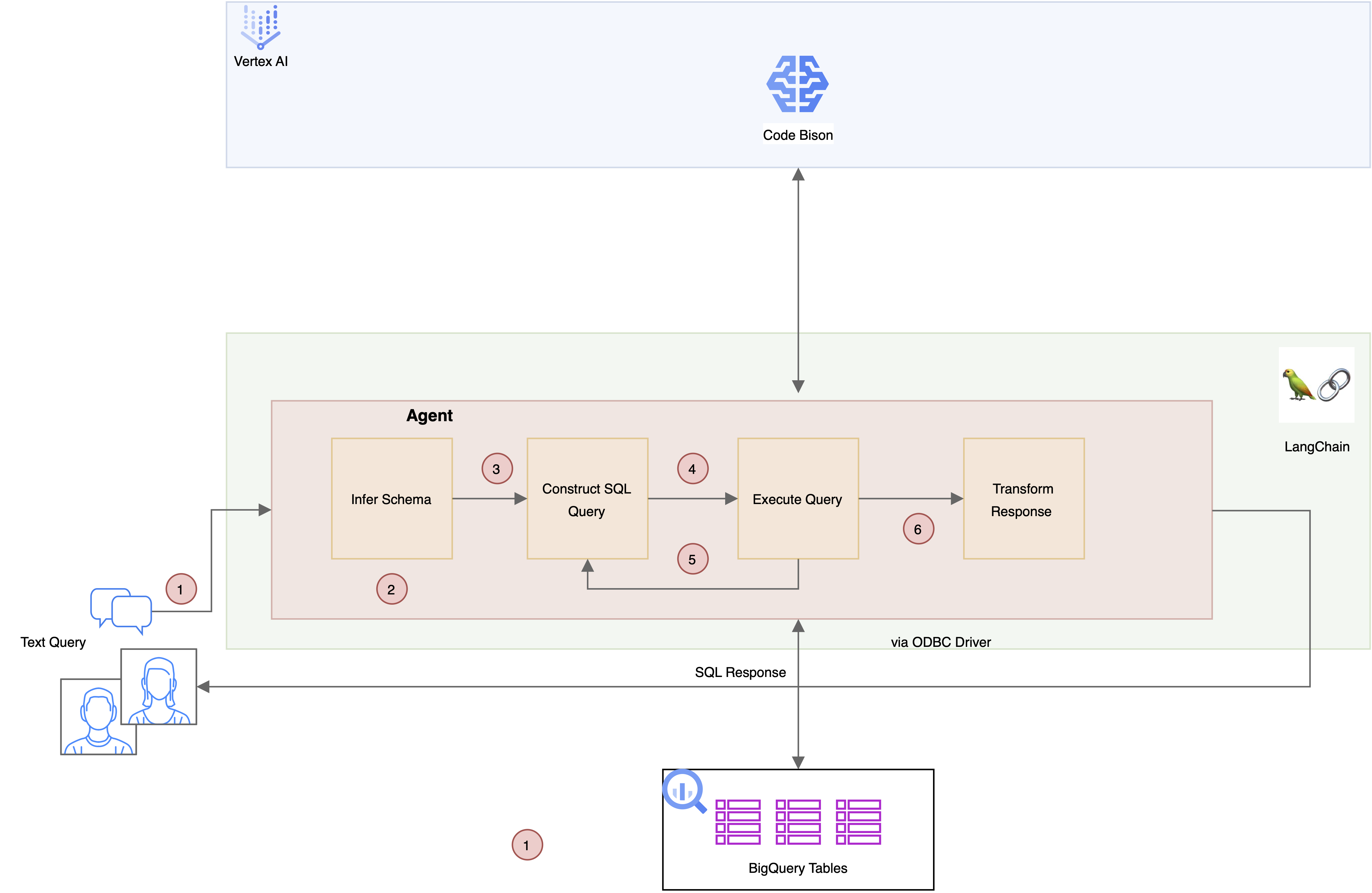

Adopting an autonomous agent-based approach where a BigQuery SQL agent, equipped with an ODBC connection, iteratively attempts and refines SQL queries with minimal external guidance.

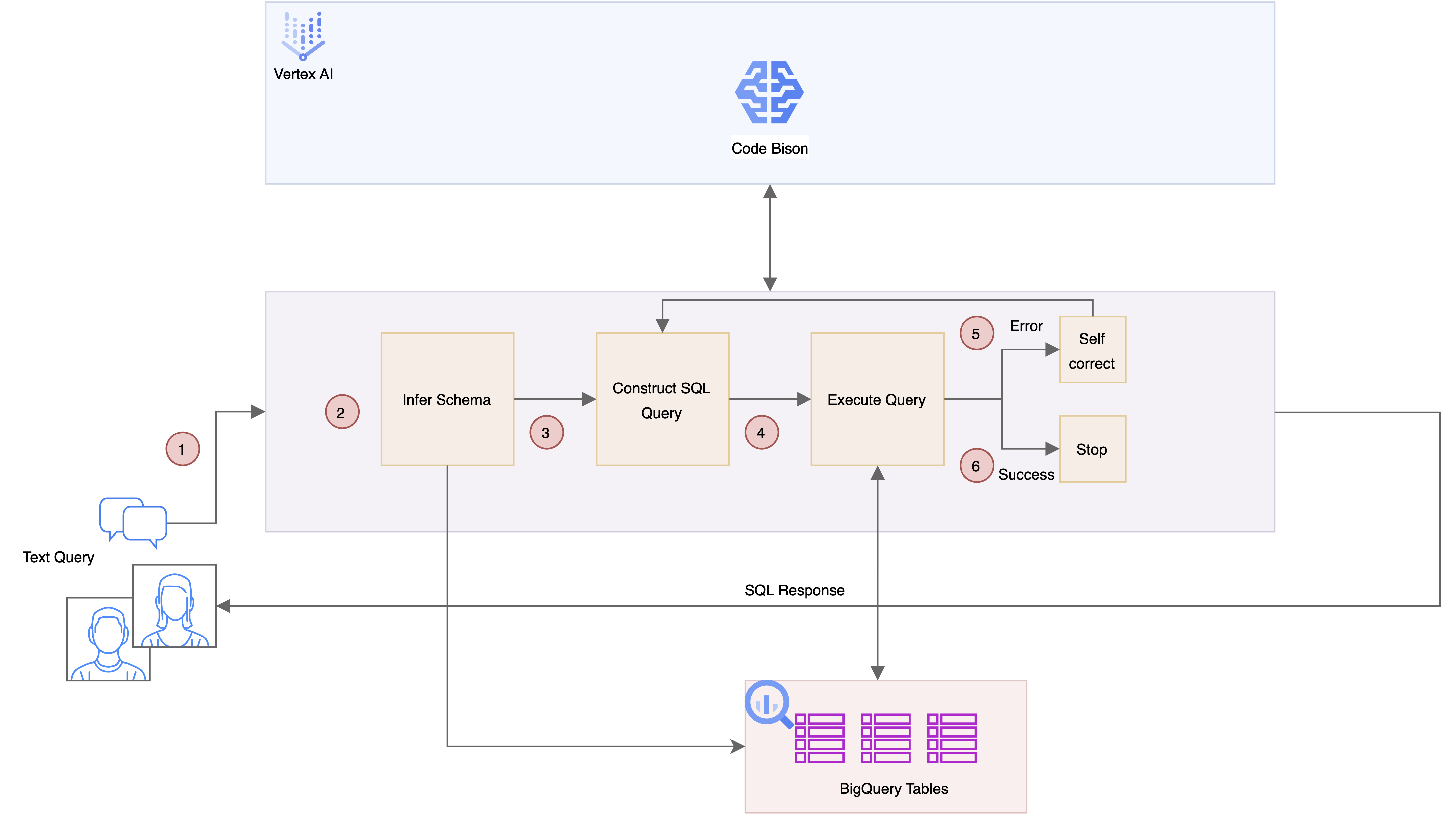

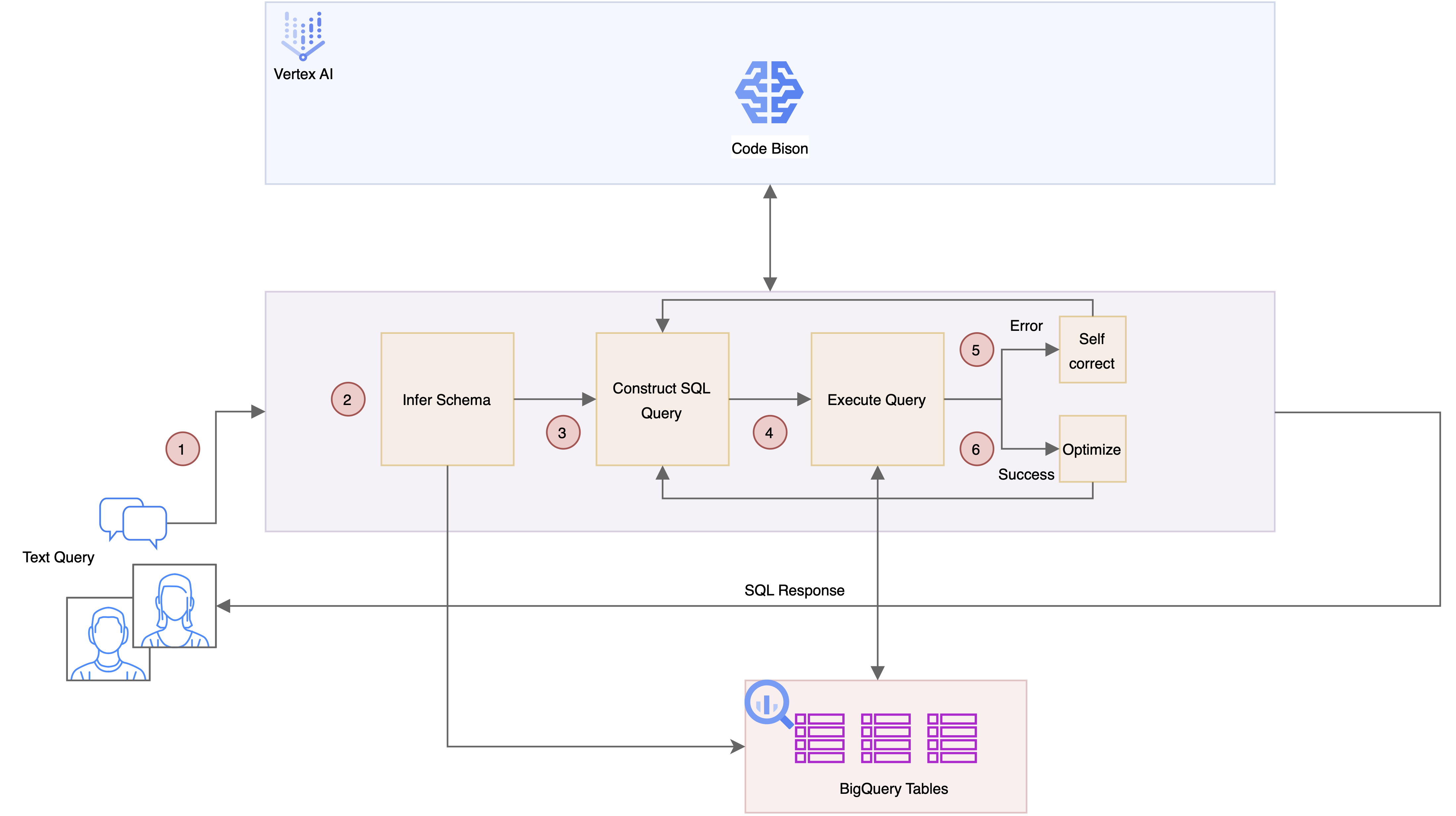

Implementing a self-correcting mechanism where the LLM receives direct schema input, generates an SQL query, and upon execution failure, uses the error feedback to self-correct and retry until successful. This pattern also explores using the Code-Chat Bison model to potentially reduce costs and improve latency.
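The retry loop described above might look roughly like the following. This is a sketch under stated assumptions: an in-memory SQLite database stands in for BigQuery so the example runs locally (the SQL dialects differ), and the model is replaced by a scripted sequence of replies. `generate_with_retry` and the prompt text are hypothetical.

```python
import sqlite3
from typing import Callable


def generate_with_retry(
    question: str,
    schema: str,
    llm: Callable[[str], str],
    conn: sqlite3.Connection,
    max_attempts: int = 3,
) -> tuple[str, list]:
    """Generate SQL, execute it, and feed any error back into the next prompt."""
    prompt = f"Schema:\n{schema}\nQuestion: {question}\nSQL:"
    for _ in range(max_attempts):
        sql = llm(prompt).strip()
        try:
            rows = conn.execute(sql).fetchall()
            return sql, rows
        except sqlite3.Error as err:
            # Append the failed query and its error so the model can correct it.
            prompt += f"\n{sql}\nThat query failed with: {err}\nCorrected SQL:"
    raise RuntimeError(f"no working query after {max_attempts} attempts")


# Demo: SQLite stands in for BigQuery; the replies are scripted, not generated.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, total REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0), (2, 5.5)])

replies = iter(["SELECT SUM(amount) FROM orders", "SELECT SUM(total) FROM orders"])
sql, rows = generate_with_retry(
    "What is the total revenue?",
    "orders(id, total)",
    lambda _prompt: next(replies),
    conn,
)
```

In the demo, the first scripted reply references a column that does not exist, so execution fails and the error text is appended to the prompt before the second attempt. The `max_attempts` cap is an addition for safety; without some limit the loop could retry indefinitely.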

Similar to Pattern IV but employs a stochastic approach with a temperature setting of 1. It runs multiple trials and selects the query that executes the fastest.
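The selection step could be sketched as below, assuming the candidate queries have already been sampled from the model at temperature 1. As an illustration only, SQLite stands in for BigQuery, and `fastest_valid_query` is a hypothetical helper rather than code from this repository.

```python
import sqlite3
import time


def fastest_valid_query(candidates: list[str], conn: sqlite3.Connection) -> str:
    """Execute each candidate and return the one with the shortest run time."""
    timings = {}
    for sql in candidates:
        start = time.perf_counter()
        try:
            conn.execute(sql).fetchall()
        except sqlite3.Error:
            continue  # discard candidates that fail to execute
        timings[sql] = time.perf_counter() - start
    if not timings:
        raise RuntimeError("no candidate executed successfully")
    return min(timings, key=timings.get)


# Demo: one candidate is invalid and is dropped before timings are compared.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, total REAL)")
best = fastest_valid_query(
    ["SELECT SUM(amount) FROM orders", "SELECT SUM(total) FROM orders"], conn
)
```

A single timed run per candidate is noisy, so a fuller version would probably repeat each execution or rely on the statistics the query engine reports.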

- Clone the repository: `git clone https://github.com/your_username/LLM-Text-to-SQL-Architectures.git`
- Navigate to the directory: `cd LLM-Text-to-SQL-Architectures`
- Set up a virtual environment: `python3 -m venv .venv`, then `source .venv/bin/activate`
- Install dependencies: `pip install -r requirements.txt`

Follow the individual pattern READMEs for detailed setup and usage instructions.

This section discusses the potential pitfalls, challenges, and areas for improvement in the application of LLMs for Text-to-SQL conversion.

See Medium article: Architectural Patterns for Text-to-SQL: Leveraging LLMs for Enhanced BigQuery Interactions

Contributions are what make the open-source community such an amazing place to learn, inspire, and create. Any contributions you make are greatly appreciated. Check out CONTRIBUTING.md for guidelines on how to submit pull requests.

This project is licensed under the MIT License - see the LICENSE.md file for details.