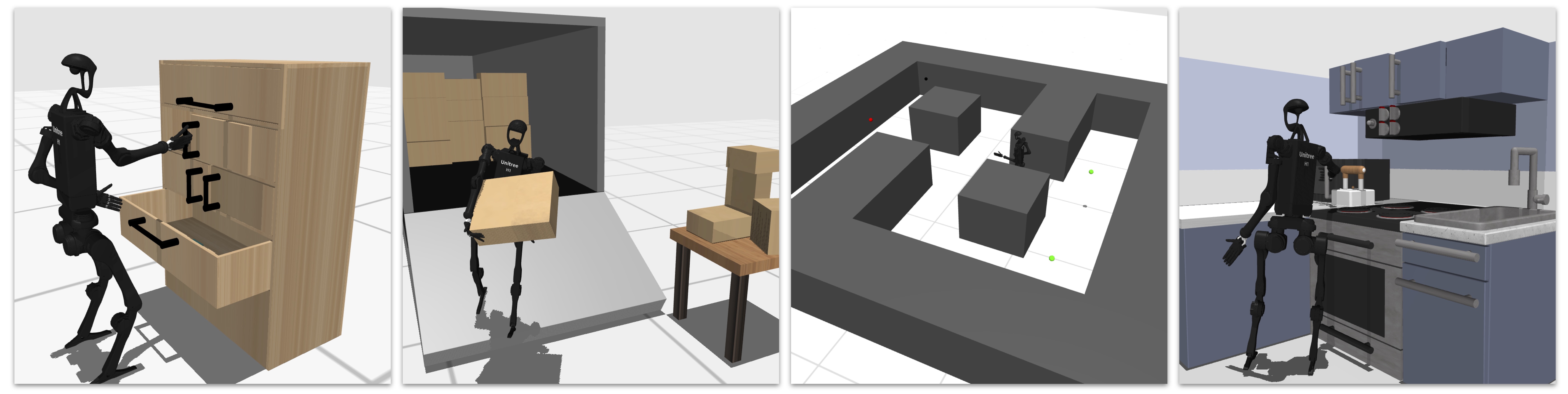

We present HumanoidBench, a simulated humanoid robot benchmark consisting of 15 whole-body manipulation and 12 locomotion tasks.

Structure of the repository:

- `data`: Weights of the low-level skill policies
- `dreamerv3`: Training code for DreamerV3
- `humanoid_bench`: Core benchmark code
  - `assets`: Simulation assets
  - `envs`: Environment files
  - `mjx`: MuJoCo MJX training code
- `jaxrl_m`: Training code for SAC
- `ppo`: Training code for PPO
- `tdmpc2`: Training code for TD-MPC2

Installation:

    # Initialize env
    git clone https://github.com/carlosferrazza/humanoid-bench.git
    cd humanoid-bench
    conda create -n humanoid_bench python=3.8
    conda activate humanoid_bench

    # Install humanoid benchmark
    pip install -e .

    # Install jaxrl
    pip install -e jaxrl_m
    pip install ml_collections flax distrax tf-keras

    # Install dreamer
    pip install -e dreamerv3
    pip install ipdb wandb moviepy imageio opencv-python ruamel.yaml rich cloudpickle tensorflow tensorflow_probability dm-sonnet optax plotly msgpack zmq colored matplotlib

    # Install td-mpc2
    pip install -e tdmpc2
    pip install torch torchvision torchaudio hydra-core pyquaternion tensordict torchrl pandas hydra-submitit-launcher termcolor

    # jax GPU version
    pip install --upgrade "jax[cuda12_pip]" -f https://storage.googleapis.com/jax-releases/jax_cuda_releases.html

    # Or, jax CPU version
    pip install --upgrade "jax[cpu]"
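
After installation, a quick way to confirm that the packages are importable from the active `humanoid_bench` environment is to look them up with `importlib`. This is a minimal sketch: the module names are inferred from the install and training commands in this README (for example, `embodied` from the DreamerV3 training command) and are an assumption that may not match every install.

```python
import importlib.util

# Module names inferred from the commands in this README (an assumption, not a documented list).
EXPECTED_MODULES = ["humanoid_bench", "jaxrl_m", "embodied", "tdmpc2", "jax", "torch"]


def check_installed(modules):
    """Map each top-level module name to True if it can be found on the current path."""
    # find_spec locates a module without importing it; it returns None when nothing is found.
    return {name: importlib.util.find_spec(name) is not None for name in modules}


if __name__ == "__main__":
    for name, found in check_installed(EXPECTED_MODULES).items():
        print(f"{name:15s} {'ok' if found else 'MISSING'}")
```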

Main benchmark tasks:

- `h1hand-walk-v0`
- `h1hand-reach-v0`
- `h1hand-hurdle-v0`
- `h1hand-crawl-v0`
- `h1hand-maze-v0`
- `h1hand-push-v0`
- `h1hand-cabinet-v0`
- `h1strong-highbar_hard-v0` (hands are made stronger so the robot can hang from the high bar)
- `h1hand-door-v0`
- `h1hand-truck-v0`
- `h1hand-cube-v0`
- `h1hand-bookshelf_simple-v0`
- `h1hand-bookshelf_hard-v0`
- `h1hand-basketball-v0`
- `h1hand-window-v0`
- `h1hand-spoon-v0`
- `h1hand-kitchen-v0`
- `h1hand-package-v0`
- `h1hand-powerlift-v0`
- `h1hand-room-v0`
- `h1hand-stand-v0`
- `h1hand-run-v0`
- `h1hand-sit_simple-v0`
- `h1hand-sit_hard-v0`
- `h1hand-balance_simple-v0`
- `h1hand-balance_hard-v0`
- `h1hand-stair-v0`
- `h1hand-slide-v0`
- `h1hand-pole-v0`
- `h1hand-insert_normal-v0`
- `h1hand-insert_small-v0`

To test an environment:

    python -m humanoid_bench.test_env --env h1hand-walk-v0

To test an environment with a pre-trained low-level reaching policy:

    # Define checkpoints of the pre-trained low-level policy and observation normalization
    export POLICY_PATH="data/reach_two_hands/torch_model.pt"
    export MEAN_PATH="data/reach_two_hands/mean.npy"
    export VAR_PATH="data/reach_two_hands/var.npy"

    # Test the environment
    python -m humanoid_bench.test_env --env h1hand-push-v0 --policy_path ${POLICY_PATH} --mean_path ${MEAN_PATH} --var_path ${VAR_PATH} --policy_type "reach_double_relative"
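
The `mean.npy` and `var.npy` files hold observation-normalization statistics for the low-level policy. A common way to apply such statistics is sketched below; the epsilon and the optional clipping are assumptions, so check the repository's policy-loading code for the exact transform before relying on this.

```python
import numpy as np


def normalize_obs(obs, mean, var, eps=1e-8, clip=None):
    """Standardize an observation with stored per-dimension mean and variance.

    eps guards against division by zero; clip (if given) bounds the result to
    [-clip, clip]. Both are illustrative defaults, not values taken from the repo.
    """
    obs = np.asarray(obs, dtype=np.float64)
    normalized = (obs - mean) / np.sqrt(var + eps)
    if clip is not None:
        normalized = np.clip(normalized, -clip, clip)
    return normalized
```

For example, `mean = np.load(os.environ["MEAN_PATH"])` and `var = np.load(os.environ["VAR_PATH"])` would read the statistics from the paths exported above.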

To test the low-level reaching policies trained with MJX:

    # One-hand reaching
    python -m humanoid_bench.mjx.mjx_test --with_full_model

    # Two-hand reaching
    python -m humanoid_bench.mjx.mjx_test --with_full_model --task=reach_two_hands --folder=./data/reach_two_hands

By default, the environment returns a privileged state (e.g., robot state plus environment state). To obtain proprioceptive, visual, and tactile observations instead, set `obs_wrapper=True` and select the required sensors, e.g. `sensors="proprio,image,tactile"`. Tactile sensing requires the `h1touch` robot in place of `h1hand`.

Full test command:

    python -m humanoid_bench.test_env --env h1touch-stand-v0 --obs_wrapper True --sensors "proprio,image,tactile"
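
The rule that tactile sensing needs the `h1touch` robot can be checked before launching a run. The helper below is hypothetical and not part of the repository; it only accepts the three sensor names mentioned above, while the repository may support others.

```python
# Sensor names mentioned in this README; the repository may accept more.
VALID_SENSORS = {"proprio", "image", "tactile"}


def parse_sensors(env_id, sensors):
    """Split a comma-separated sensor string and validate it against env_id.

    Raises ValueError for unknown sensor names, or when tactile sensing is
    requested for a robot other than h1touch.
    """
    requested = [s.strip() for s in sensors.split(",") if s.strip()]
    unknown = set(requested) - VALID_SENSORS
    if unknown:
        raise ValueError(f"unknown sensors: {sorted(unknown)}")
    robot = env_id.split("-", 1)[0]  # e.g. "h1touch" from "h1touch-stand-v0"
    if "tactile" in requested and robot != "h1touch":
        raise ValueError(f"tactile sensing requires h1touch, got {robot!r}")
    return requested
```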

In addition to the main benchmark tasks listed above, you can run the following environments, which feature the robot without hands:

- `h1-walk-v0`
- `h1-reach-v0`
- `h1-hurdle-v0`
- `h1-crawl-v0`
- `h1-maze-v0`
- `h1-push-v0`
- `h1-highbar_simple-v0`
- `h1-door-v0`
- `h1-truck-v0`
- `h1-basketball-v0`
- `h1-package-v0`
- `h1-stand-v0`
- `h1-run-v0`
- `h1-sit_simple-v0`
- `h1-sit_hard-v0`
- `h1-balance_simple-v0`
- `h1-balance_hard-v0`
- `h1-stair-v0`
- `h1-slide-v0`
- `h1-pole-v0`
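
The environment IDs in this README follow a `<robot>-<task>-v<version>` pattern, with the robot variants `h1` (no hands), `h1hand`, `h1strong` (stronger hands), and `h1touch` (tactile sensing). The sketch below splits an ID into those parts; the pattern is inferred from the lists in this README rather than from a documented specification, and `parse_env_id` is a hypothetical helper.

```python
import re
from typing import NamedTuple

# Robot variants that appear in this README's environment IDs.
ROBOTS = ("h1", "h1hand", "h1strong", "h1touch")

_ENV_ID = re.compile(r"^(?P<robot>[a-z0-9]+)-(?P<task>[a-z_]+)-v(?P<version>\d+)$")


class EnvId(NamedTuple):
    robot: str
    task: str
    version: int


def parse_env_id(env_id: str) -> EnvId:
    """Split e.g. 'h1hand-sit_simple-v0' into ('h1hand', 'sit_simple', 0)."""
    match = _ENV_ID.match(env_id)
    if match is None or match["robot"] not in ROBOTS:
        raise ValueError(f"unrecognized environment id: {env_id!r}")
    return EnvId(match["robot"], match["task"], int(match["version"]))
```

This can be used, for instance, to group results by robot variant or to strip the robot prefix when comparing the same task across `h1` and `h1hand`.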

Training the baselines:

    # Define TASK
    export TASK="h1hand-sit_simple-v0"

    # Train TD-MPC2
    python -m tdmpc2.train disable_wandb=False wandb_entity=[WANDB_ENTITY] exp_name=tdmpc task=humanoid_${TASK} seed=0

    # Train DreamerV3
    python -m embodied.agents.dreamerv3.train --configs humanoid_benchmark --run.wandb True --run.wandb_entity [WANDB_ENTITY] --method dreamer --logdir logs --task humanoid_${TASK} --seed 0

    # Train SAC
    python ./jaxrl_m/examples/mujoco/run_mujoco_sac.py --env_name ${TASK} --wandb_entity [WANDB_ENTITY] --max_steps 5000000 --seed 0

    # Train PPO (not using MJX)
    python ./ppo/run_sb3_ppo.py --env_name ${TASK} --wandb_entity [WANDB_ENTITY] --seed 0
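
TD-MPC2 and DreamerV3 take the task name with a `humanoid_` prefix, while the SAC and PPO scripts take `${TASK}` as-is. A small hypothetical launcher that assembles these commands for several seeds could look like this; it only builds argument lists (for example, to pass to `subprocess.run`), and its flags are copied from the commands above. The entity `my-entity` is a placeholder.

```python
def training_commands(task, wandb_entity, seed=0):
    """Return argv lists for the four baseline trainers, mirroring the README commands."""
    return {
        "tdmpc2": [
            "python", "-m", "tdmpc2.train",
            "disable_wandb=False", f"wandb_entity={wandb_entity}",
            "exp_name=tdmpc", f"task=humanoid_{task}", f"seed={seed}",
        ],
        "dreamerv3": [
            "python", "-m", "embodied.agents.dreamerv3.train",
            "--configs", "humanoid_benchmark",
            "--run.wandb", "True", "--run.wandb_entity", wandb_entity,
            "--method", "dreamer", "--logdir", "logs",
            "--task", f"humanoid_{task}", "--seed", str(seed),
        ],
        "sac": [
            "python", "./jaxrl_m/examples/mujoco/run_mujoco_sac.py",
            "--env_name", task, "--wandb_entity", wandb_entity,
            "--max_steps", "5000000", "--seed", str(seed),
        ],
        "ppo": [
            "python", "./ppo/run_sb3_ppo.py",
            "--env_name", task, "--wandb_entity", wandb_entity, "--seed", str(seed),
        ],
    }


if __name__ == "__main__":
    import shlex

    # Print shell-quoted commands for three seeds (placeholder W&B entity).
    for seed in range(3):
        for name, argv in training_commands("h1hand-sit_simple-v0", "my-entity", seed).items():
            print(name, shlex.join(argv))
```

Keeping the prefix logic in one place avoids the easy mistake of passing `humanoid_${TASK}` to the SAC or PPO scripts.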

Training with a pre-trained low-level policy (hierarchical):

    # Define TASK
    export TASK="h1hand-push-v0"

    # Define checkpoints of the pre-trained low-level policy and observation normalization
    export POLICY_PATH="data/reach_one_hand/torch_model.pt"
    export MEAN_PATH="data/reach_one_hand/mean.npy"
    export VAR_PATH="data/reach_one_hand/var.npy"

    # Train TD-MPC2 with pre-trained low-level policy
    python -m tdmpc2.train disable_wandb=False wandb_entity=[WANDB_ENTITY] exp_name=tdmpc task=humanoid_${TASK} seed=0 policy_path=${POLICY_PATH} mean_path=${MEAN_PATH} var_path=${VAR_PATH} policy_type="reach_single"

    # Train DreamerV3 with pre-trained low-level policy
    python -m embodied.agents.dreamerv3.train --configs humanoid_benchmark --run.wandb True --run.wandb_entity [WANDB_ENTITY] --method dreamer_${TASK}_hierarchical --logdir logs --env.humanoid.policy_path ${POLICY_PATH} --env.humanoid.mean_path ${MEAN_PATH} --env.humanoid.var_path ${VAR_PATH} --env.humanoid.policy_type="reach_single" --task humanoid_${TASK} --seed 0
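
The hierarchical commands differ from the plain ones only in the three checkpoint paths and the `policy_type`. This README pairs `data/reach_one_hand` with `reach_single` and `data/reach_two_hands` with `reach_double_relative`; the hypothetical helper below encodes that pairing and checks that the checkpoint files exist before a run is launched. It assumes every checkpoint folder uses the same three file names shown above.

```python
from pathlib import Path

# Folder name -> policy_type, as paired in the commands in this README.
POLICY_TYPES = {
    "reach_one_hand": "reach_single",
    "reach_two_hands": "reach_double_relative",
}


def low_level_policy_args(folder):
    """Return the policy/mean/var paths and policy_type for a checkpoint folder."""
    folder = Path(folder)
    return {
        "policy_path": str(folder / "torch_model.pt"),
        "mean_path": str(folder / "mean.npy"),
        "var_path": str(folder / "var.npy"),
        "policy_type": POLICY_TYPES[folder.name],
    }


def missing_files(args):
    """List the checkpoint files that do not exist on disk."""
    keys = ("policy_path", "mean_path", "var_path")
    return [args[key] for key in keys if not Path(args[key]).is_file()]


if __name__ == "__main__":
    args = low_level_policy_args("data/reach_one_hand")
    print(args)
    print("missing:", missing_files(args))
```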

Please find here JSON files containing all the training curves, so that comparing against our baselines does not require re-running them.

The JSON files follow the key structure `task -> method -> seed_X -> (million_steps or return)`. For example, the return sequence for one seed of the SAC run on the walk task is `data['walk']['SAC']['seed_0']['return']`.
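
As a sketch, reading one curve and summarizing it across seeds only needs the standard library. The path passed to `load_curves` depends on where you save the downloaded file, and the helper names are hypothetical; the key structure follows the description above. The numbers in the demo are placeholders, not results from the paper.

```python
import json
from statistics import mean


def load_curves(path):
    """Load a training-curve file with keys task -> method -> seed_X -> series."""
    with open(path) as f:
        return json.load(f)


def get_curve(data, task, method, seed=0):
    """Return the (million_steps, return) series for one seed of one method on one task."""
    run = data[task][method][f"seed_{seed}"]
    return run["million_steps"], run["return"]


def mean_final_return(data, task, method):
    """Average the last logged return over all seeds of a method."""
    return mean(run["return"][-1] for run in data[task][method].values())


if __name__ == "__main__":
    # Placeholder values for illustration only; they are not results from the paper.
    data = {
        "walk": {
            "SAC": {
                "seed_0": {"million_steps": [0.0, 1.0], "return": [1.0, 3.0]},
                "seed_1": {"million_steps": [0.0, 1.0], "return": [2.0, 5.0]},
            }
        }
    }
    print(get_curve(data, "walk", "SAC", seed=0))  # ([0.0, 1.0], [1.0, 3.0])
    print(mean_final_return(data, "walk", "SAC"))  # 4.0
```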

If you find HumanoidBench useful for your research, please cite this work:

    @article{sferrazza2024humanoidbench,
      title={HumanoidBench: Simulated Humanoid Benchmark for Whole-Body Locomotion and Manipulation},
      author={Carmelo Sferrazza and Dun-Ming Huang and Xingyu Lin and Youngwoon Lee and Pieter Abbeel},
      journal={arXiv Preprint arxiv:2403.10506},
      year={2024}
    }

This codebase contains some files adapted from other sources:

- jaxrl_m: https://github.com/dibyaghosh/jaxrl_m/tree/main

- DreamerV3: https://github.com/danijar/dreamerv3

- TD-MPC2: https://github.com/nicklashansen/tdmpc2

- purejaxrl (JAX-PPO training): https://github.com/luchris429/purejaxrl/tree/main

- Digit models: https://github.com/adubredu/KinodynamicFabrics.jl/tree/sim

- Unitree H1 models: https://github.com/unitreerobotics/unitree_ros/tree/master

- MuJoCo Menagerie (Unitree H1, Shadow Hands, Robotiq 2F-85 models): https://github.com/google-deepmind/mujoco_menagerie

- Robosuite (some texture files): https://github.com/ARISE-Initiative/robosuite