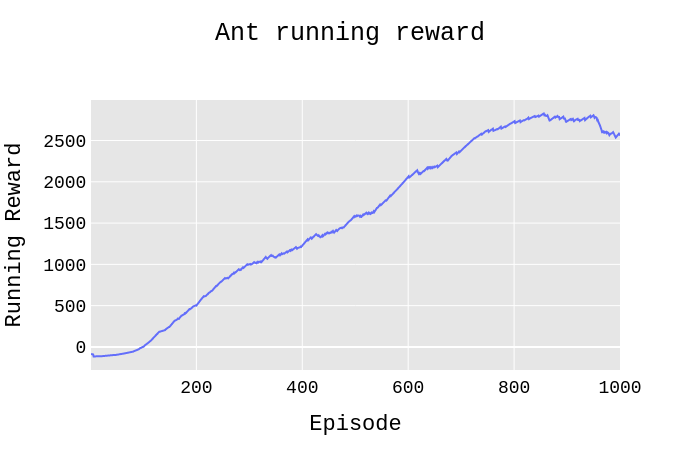

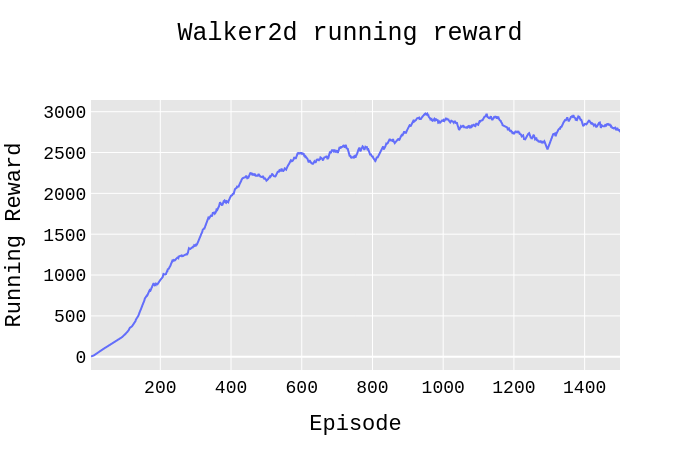

Implementation of Proximal Policy Optimization (PPO) on MuJoCo environments. All hyper-parameters were chosen based on the paper.
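As a rough illustration of the objective at the core of PPO, the clipped surrogate from the paper can be sketched in plain Python. This is not this repository's code: the function name `clipped_surrogate`, its arguments, and the default `clip_eps=0.2` are assumptions made for the example.

```python
import math


def clipped_surrogate(new_log_probs, old_log_probs, advantages, clip_eps=0.2):
    """Mean clipped surrogate objective (a quantity to maximize).

    All three inputs are equal-length sequences of floats, one entry per
    sampled action. clip_eps is an assumed default for this sketch.
    """
    total = 0.0
    for new_lp, old_lp, adv in zip(new_log_probs, old_log_probs, advantages):
        # Probability ratio between the new and the old policy.
        ratio = math.exp(new_lp - old_lp)
        # Keep the ratio inside [1 - clip_eps, 1 + clip_eps].
        clipped = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
        # Take the pessimistic (smaller) of the two surrogate terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)
```

With a positive advantage the objective stops rewarding ratios above `1 + clip_eps`, and with a negative advantage it stops rewarding ratios below `1 - clip_eps`, which is what keeps each policy update close to the old policy.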

For the Atari domain, look at this.

| Ant-v2 | Walker2d-v2 | InvertedDoublePendulum-v2 |
|---|---|---|
| | | |


- gym == 0.17.2

- mujoco-py == 2.0.2.13

- numpy == 1.19.1

- opencv_contrib_python == 3.4.0.12

- torch == 1.4.0

`pip3 install -r requirements.txt`

`python3 main.py`

- You may use the `Train_FLAG` flag to specify whether to train your agent (when it is `True`) or test it (when it is `False`).

- There are some pre-trained weights in the pre-trained models directory. To test the agent with them, put them in the root folder of the project and set the `Train_FLAG` flag to `False`.
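The flag logic described here can be pictured with a small sketch. Only the name `Train_FLAG` comes from this README. The helper `select_mode` and its `weights_path` argument are hypothetical and do not reflect how `main.py` is actually written.

```python
import os

Train_FLAG = False  # flag name from the README; the rest of this sketch is hypothetical


def select_mode(train_flag, weights_path):
    """Return "train" or "test"; test mode requires an existing weights file."""
    if train_flag:
        return "train"
    # Testing only makes sense if pre-trained weights were copied into place.
    if not os.path.isfile(weights_path):
        raise FileNotFoundError(
            f"No pre-trained weights at {weights_path!r}; "
            "copy them to the project root first."
        )
    return "test"
```

Checking for the weights file up front gives a clear error when the flag is `False` but the weights have not yet been copied to the project root.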

- Ant

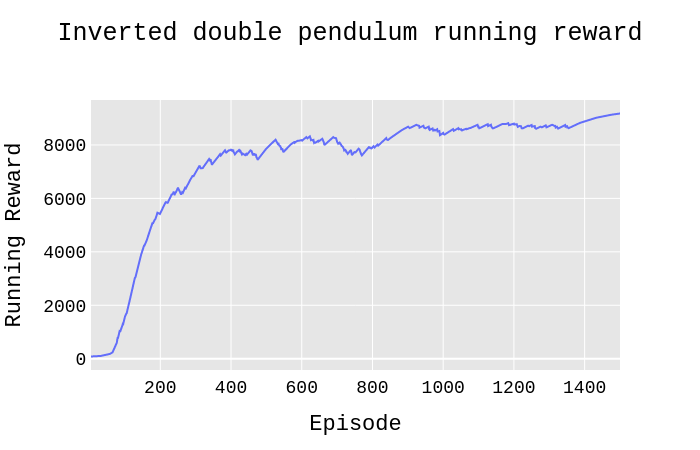

- InvertedDoublePendulum

- Walker2d

- Hopper

- Humanoid

- Swimmer

- HalfCheetah
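The environment names in this list correspond to gym IDs with a version suffix, such as `Ant-v2`. A minimal sketch, assuming the same `-v2` suffix applies to every listed environment and using the hypothetical helpers `env_id` and `make_env`:

```python
ENV_NAMES = [
    "Ant",
    "InvertedDoublePendulum",
    "Walker2d",
    "Hopper",
    "Humanoid",
    "Swimmer",
    "HalfCheetah",
]


def env_id(name, version="v2"):
    """Build a gym environment ID such as "Ant-v2" from a listed name."""
    if name not in ENV_NAMES:
        raise ValueError(f"Unknown environment name: {name!r}")
    return f"{name}-{version}"


def make_env(name):
    """Create the environment; requires gym and mujoco-py to be installed."""
    import gym  # imported lazily so env_id works without gym installed

    return gym.make(env_id(name))
```

For example, `make_env("Hopper")` would call `gym.make("Hopper-v2")`.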

Proximal Policy Optimization Algorithms, Schulman et al., 2017

- @higgsfield for his PPO code.

- @OpenAI for Baselines.

- @Reinforcement Learning KR for their Policy Gradient Algorithms.