The aim of the project is to develop an application that extracts n-grams from text, works with input of unlimited size, and uses the available resources quickly and efficiently.

The foundation of the algorithm is a language model, which computes the probability of a sentence or sequence of words.

For example, the phrase “The cat is sleeping” is more likely to appear than “The cat is dancing” in some writing. The probability of these sentences can be shown as:

P("The cat is sleeping") > P("The cat is dancing")

where the probability, P, of the sentence “The cat is sleeping” is greater than that of the sentence “The cat is dancing”, based on our general knowledge.

Next-word prediction with the n-gram technique, say for n = 3, looks only at the last n - 1 = 2 words: the probability of "sleeping" after "The cat is" is approximated by P("sleeping"|"cat is"), that is, the probability that the phrase "cat is" is followed by the word "sleeping".

The calculation of this probability in our program is done by the following formula:

P("sleeping"|"cat is") = C("cat is sleeping")/C("cat is")

where the count, C, denotes the number of occurrences of the phrase in the text.
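The count-based formula above can be sketched in a few lines of C++. This is a minimal illustration, not the project's code: the function names `tokenize`, `count_ngrams` and `conditional_probability` are hypothetical, tokenisation is assumed to be a plain whitespace split, and n-grams are assumed to be stored as space-joined strings.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <sstream>
#include <string>
#include <vector>

using Counts = std::map<std::string, std::size_t>;

// Split text on whitespace (assumption: no punctuation or case handling).
std::vector<std::string> tokenize(const std::string& text) {
    std::istringstream in(text);
    std::vector<std::string> words;
    for (std::string w; in >> w;) words.push_back(w);
    return words;
}

// Count every run of n consecutive words, keyed by the words joined with spaces.
Counts count_ngrams(const std::vector<std::string>& words, std::size_t n) {
    assert(n > 0);
    Counts counts;
    for (std::size_t i = 0; i + n <= words.size(); ++i) {
        std::string key = words[i];
        for (std::size_t j = 1; j < n; ++j) key += ' ' + words[i + j];
        ++counts[key];
    }
    return counts;
}

// P(word | context) = C(context word) / C(context).
// Returns 0 when the context never occurs in the text.
double conditional_probability(const Counts& ngrams, const Counts& contexts,
                               const std::string& context, const std::string& word) {
    auto ctx = contexts.find(context);
    if (ctx == contexts.end() || ctx->second == 0) return 0.0;
    auto full = ngrams.find(context + ' ' + word);
    std::size_t c = (full == ngrams.end()) ? 0 : full->second;
    return static_cast<double>(c) / static_cast<double>(ctx->second);
}
```

For n = 3, `ngrams` would hold trigram counts and `contexts` bigram counts, so `conditional_probability(ngrams, contexts, "cat is", "sleeping")` evaluates C("cat is sleeping")/C("cat is").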

Thus, the main task of our project is to efficiently extract n-grams from the text, calculate their probabilities, and offer the user some of the most likely next words.

The main steps of the training are as follows:

- reading files — parallel;

- indexing — parallel;

- merging maps — parallel;

- calculating probability;

- writing results to the output files.
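The indexing and merging steps above can be sketched as follows. This is a simplified illustration under stated assumptions: it uses `std::thread` with one private map per worker and a sequential final merge, whereas the project itself lists TBB and bounded queues in its configuration. The names `index_document`, `merge_into` and `count_parallel` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <sstream>
#include <string>
#include <thread>
#include <vector>

using Counts = std::map<std::string, std::size_t>;

// Indexing step: count the n-grams of one document into a local map.
Counts index_document(const std::string& text, std::size_t n) {
    assert(n > 0);
    std::istringstream in(text);
    std::vector<std::string> words;
    for (std::string w; in >> w;) words.push_back(w);
    Counts local;
    for (std::size_t i = 0; i + n <= words.size(); ++i) {
        std::string key = words[i];
        for (std::size_t j = 1; j < n; ++j) key += ' ' + words[i + j];
        ++local[key];
    }
    return local;
}

// Merging step: add every count from `from` into `into`.
void merge_into(Counts& into, const Counts& from) {
    for (const auto& entry : from) into[entry.first] += entry.second;
}

// Index the documents on `workers` threads, then merge the per-thread maps.
Counts count_parallel(const std::vector<std::string>& docs, std::size_t n,
                      std::size_t workers) {
    assert(workers >= 1);
    std::vector<Counts> partial(workers);  // one private map per thread, no locking
    std::vector<std::thread> pool;
    for (std::size_t t = 0; t < workers; ++t) {
        pool.emplace_back([&, t] {
            // Worker t takes documents t, t + workers, t + 2 * workers, ...
            for (std::size_t i = t; i < docs.size(); i += workers)
                merge_into(partial[t], index_document(docs[i], n));
        });
    }
    for (auto& th : pool) th.join();
    Counts total;
    for (const auto& p : partial) merge_into(total, p);
    return total;
}
```

Because each worker writes only to its own map, no synchronisation is needed until the merge. In this sketch n-grams never span two documents.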

The main steps of the prediction are as follows:

- transforming files to the maps — parallel;

- user input processing;

- finding the most probable following words.
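The last two prediction steps can be sketched as below. The model layout (context of n - 1 words mapped to following words and their probabilities) and the names `Model`, `last_words` and `predict` are assumptions made for illustration; the project's actual data structures may differ.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <map>
#include <sstream>
#include <string>
#include <utility>
#include <vector>

// Hypothetical model layout: context (n - 1 words) -> following word -> probability.
using Model = std::map<std::string, std::map<std::string, double>>;

// User-input step: keep only the last `count` words, joined by single spaces.
std::string last_words(const std::string& input, std::size_t count) {
    std::istringstream in(input);
    std::vector<std::string> words;
    for (std::string w; in >> w;) words.push_back(w);
    std::size_t start = words.size() > count ? words.size() - count : 0;
    std::string context;
    for (std::size_t i = start; i < words.size(); ++i) {
        if (!context.empty()) context += ' ';
        context += words[i];
    }
    return context;
}

// Return up to `word_num` following words, most probable first.
std::vector<std::string> predict(const Model& model, const std::string& context,
                                 std::size_t word_num) {
    assert(word_num > 0);
    auto it = model.find(context);
    if (it == model.end()) return {};  // unknown context: nothing to suggest
    std::vector<std::pair<std::string, double>> candidates(it->second.begin(),
                                                           it->second.end());
    std::size_t k = std::min(word_num, candidates.size());
    std::partial_sort(candidates.begin(),
                      candidates.begin() + static_cast<std::ptrdiff_t>(k),
                      candidates.end(),
                      [](const auto& a, const auto& b) {
                          // Higher probability first; ties broken alphabetically.
                          if (a.second != b.second) return a.second > b.second;
                          return a.first < b.first;
                      });
    std::vector<std::string> words;
    for (std::size_t i = 0; i < k; ++i) words.push_back(candidates[i].first);
    return words;
}
```

With n = 3, a call such as `predict(model, last_words(input, 2), word_num)` would return the `word_num` most probable continuations of the last two words typed by the user.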

Dependencies: CMake, Boost, TBB.

indir = "../data" # source directory for files

ngram_par = 3 # parameter n > 1

option = 0 # 0 for counting and 1 for word prediction

word_num = 3 # number of words to predict > 0

out_prob = "../results/out_prob.txt" # file to save result probabilities

out_ngram = "../results/out_words.txt" # file to save result n-gram and following words

index_threads = 2 # threads used for indexing >= 1

merge_threads = 2 # threads used for merging >= 1

prediction_threads = 4 # threads for prediction >= 1

files_queue_s = 1000000 # amount of max file paths in files_queue

strings_queue_s = 1000000000 # max size of queue of strings in bytes

merge_queue_s = 10000 # max amount of elements in merging queue

allowed_ext = .txt .zip # extensions to index (supported only .zip and .txt)
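For readers who want to see how a file in this `key = value # comment` form might be read, here is a minimal sketch. It is not the project's parser: `trim`, `parse_config` and `positive_setting` are hypothetical names, and the sketch assumes that `#` never appears inside a value.

```cpp
#include <cassert>
#include <cstddef>
#include <istream>
#include <map>
#include <sstream>
#include <string>

using Config = std::map<std::string, std::string>;

// Strip leading and trailing whitespace.
std::string trim(const std::string& s) {
    const char* ws = " \t\r\n";
    auto b = s.find_first_not_of(ws);
    if (b == std::string::npos) return "";
    auto e = s.find_last_not_of(ws);
    return s.substr(b, e - b + 1);
}

// Parse `key = value # comment` lines; surrounding double quotes are removed.
Config parse_config(std::istream& in) {
    Config cfg;
    for (std::string line; std::getline(in, line);) {
        line = line.substr(0, line.find('#'));  // drop the trailing comment
        auto eq = line.find('=');
        if (eq == std::string::npos) continue;  // blank or malformed line
        std::string key = trim(line.substr(0, eq));
        std::string value = trim(line.substr(eq + 1));
        if (value.size() >= 2 && value.front() == '"' && value.back() == '"')
            value = value.substr(1, value.size() - 2);
        if (!key.empty()) cfg[key] = value;
    }
    return cfg;
}

// Read a numeric setting that the listing requires to be at least 1,
// such as index_threads or word_num. Throws if the key is missing.
std::size_t positive_setting(const Config& cfg, const std::string& key) {
    unsigned long value = std::stoul(cfg.at(key));
    assert(value >= 1);
    return static_cast<std::size_t>(value);
}
```

Values are kept as strings so that each setting can be converted where it is used, for example `positive_setting(cfg, "index_threads")`.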

-

Clone the repository.

-

Set the desired attributes in

.cfgfile. -

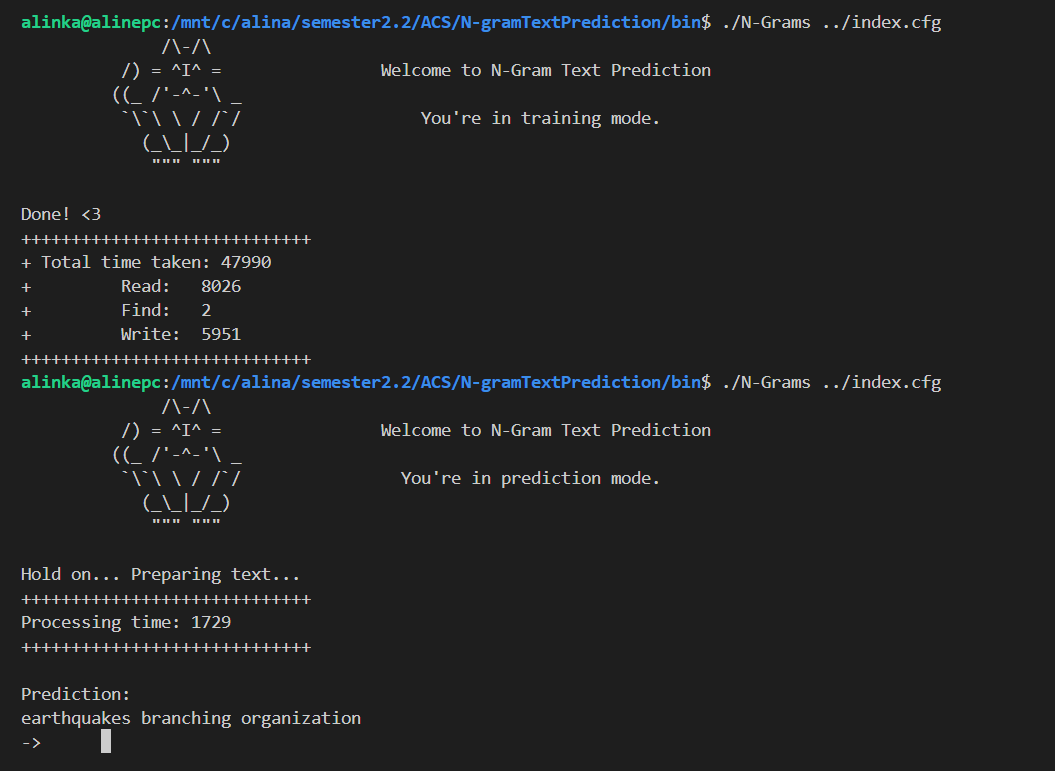

Train the model:

- compile the program with

./compile.sh -O - go to the

bindirectory - run

./N-Grams ../index.cfg

where the argument is the path to

.cfgfile. - compile the program with

-

Test model in the prediction mode.

- change the

optionto 1 in the.cfgfile - run

./N-Grams ../index.cfg- after the preprocessing for prediction is done, the input from user will be requested

- change the

Comparison plots for different indexing settings.

Comparison plots for different prediction settings.

- Implement sequential training algorithm

- Implement sequential prediction algorithm

- Implement parallel training

- Implement parallel processing for prediction

- Add processing of unknown words

- Build comparison plots for different settings