Arcode is a command-line tool that uses LLMs to facilitate holistic software development. It generates feature implementations for a given codebase based on user-defined requirements.

- Provide requirements and follow-up feedback to auto-build changes

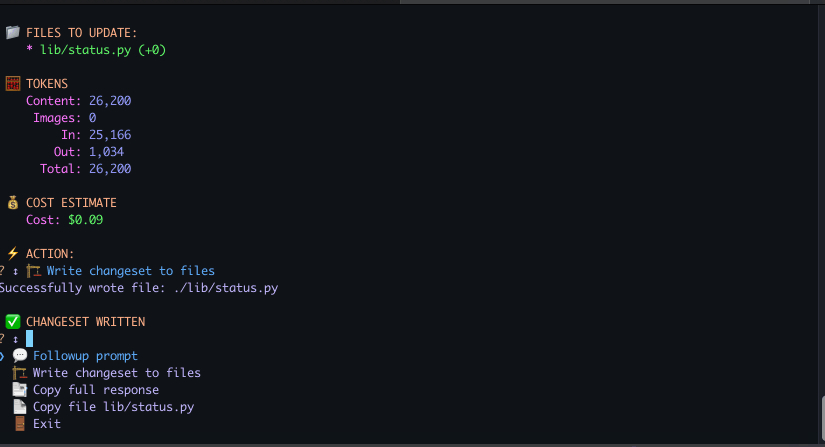

- Single button press confirmation + implementation

- Supplies context from your local files and select remote resources

- Optional limiting of file context based on relevancy to features

- Support for various providers/models via LiteLLM

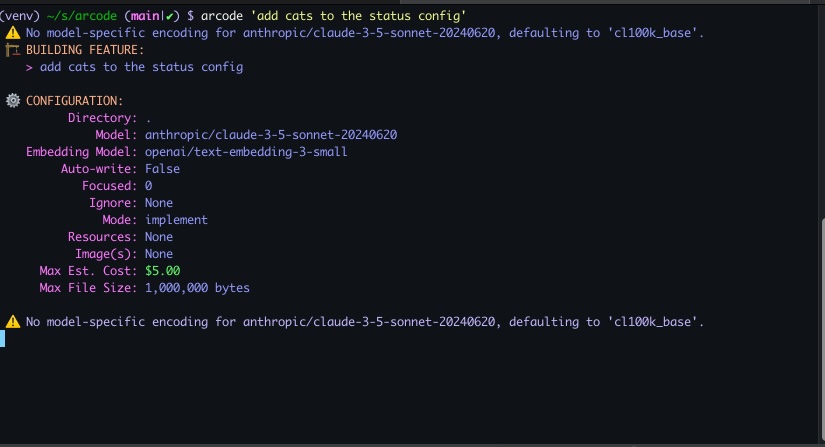
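Supplying context from local files means deciding which files to include. The sketch below is a hypothetical illustration, not Arcode's actual code: it walks a directory, skips paths matching ignore patterns (like the ignore list in arcodeconf.yml or the --ignore flag), and drops files larger than a byte limit (like --max-file-size). The helper name collect_context_files is an assumption, and fnmatch-style matching only approximates .gitignore semantics.

```python
import fnmatch
import os
from typing import Iterable, List


def collect_context_files(
    root: str,
    ignore_patterns: Iterable[str] = (),
    max_file_size: int = 100_000,
) -> List[str]:
    """Hypothetical helper: return relative paths of files to include as context.

    Patterns are matched with fnmatch against both the bare file/directory
    name and the relative path, which only approximates .gitignore rules.
    """
    patterns = list(ignore_patterns)
    selected: List[str] = []
    for dirpath, dirnames, filenames in os.walk(root):
        rel_dir = os.path.relpath(dirpath, root)
        # Prune ignored directories in place so os.walk never descends into them.
        dirnames[:] = [
            d for d in dirnames if not any(fnmatch.fnmatch(d, p) for p in patterns)
        ]
        for name in filenames:
            rel_path = os.path.normpath(os.path.join(rel_dir, name))
            if any(
                fnmatch.fnmatch(name, p) or fnmatch.fnmatch(rel_path, p)
                for p in patterns
            ):
                continue
            # Skip files above the size limit (bytes), mirroring a max-file-size cap.
            if os.path.getsize(os.path.join(dirpath, name)) > max_file_size:
                continue
            selected.append(rel_path)
    return sorted(selected)
```

For example, collect_context_files(".", ["build", "dist", "secrets"], max_file_size=50_000) would return every file under the current directory except those in build/ or dist/, anything named secrets, and anything over 50,000 bytes.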

Generate a plan and optionally write the changes based on requirements:

arcode "Implement authentication"

Use with input from a file:

cat feature_requirements.txt | arcode --dir ./my_codebase

Provide remote resources:

arcode "Follow the latest docs in the provided resources to add a LangChain SQL query chain to retrieve relevant reporting details from the database given user input" --resources="https://api.python.langchain.com/en/latest/chains/langchain.chains.sql_database.query.create_sql_query_chain.html#langchain.chains.sql_database.query.create_sql_query_chain"

Run Arcode and pass it your requirements:

arcode "Build feature X"

Or, if you're running the .py version, run one of the following:

./arcode.py "Build feature X"

python arcode.py "Build feature X"

You can pass configuration via CLI arguments or by creating an arcodeconf.yml file in your ~/.config/ directory (i.e. a global config at ~/.config/arcodeconf.yml) and/or in the root of your project's working directory. An arcodeconf.yml file can set arguments and environment variables for a project.
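The exact precedence between these layers is not spelled out here, so the following is a minimal sketch that assumes global config, then project config, then CLI flags, with later layers winning. The function name resolve_settings is hypothetical, and both YAML files are assumed to be already parsed into dictionaries so the sketch needs only the standard library.

```python
from typing import Any, Dict, Optional


def resolve_settings(
    global_conf: Dict[str, Any],
    project_conf: Dict[str, Any],
    cli_args: Dict[str, Optional[Any]],
) -> Dict[str, Dict[str, Any]]:
    """Hypothetical merge of config layers (assumed order: global < project < CLI)."""
    args: Dict[str, Any] = {}
    env: Dict[str, str] = {}
    for conf in (global_conf, project_conf):
        # A missing file or empty section is treated as an empty layer.
        args.update(conf.get("args") or {})
        env.update({k: str(v) for k, v in (conf.get("env") or {}).items()})
    # Only CLI flags that were actually passed (non-None) override file settings.
    args.update({k: v for k, v in cli_args.items() if v is not None})
    return {"args": args, "env": env}


global_conf = {"args": {"model": "openai/gpt-4o"}, "env": {"OPENAI_API_KEY": "global-key"}}
project_conf = {
    "args": {"model": "anthropic/claude-3-opus-20240229", "ignore": ["build", "dist"]},
    "env": {"ANTHROPIC_API_KEY": "3xampl3"},
}
settings = resolve_settings(global_conf, project_conf, {"model": None, "focused": 10})
print(settings["args"]["model"])  # anthropic/claude-3-opus-20240229
```

This sketch does a shallow merge, so a list such as ignore in the project file replaces the global one rather than being appended to it; whether Arcode concatenates lists is not stated here.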

Sample arcodeconf.yml:

args:
  model: anthropic/claude-3-opus-20240229
  ignore:
    - build
    - dist
    - secrets
  resources:
    - https://example.com/resource1
    - https://example.com/resource2
env:
  ANTHROPIC_API_KEY: 3xampl3

For best results, use one of the following models:

anthropic/claude-3-5-sonnet-20240620

bedrock/anthropic.claude-3-5-sonnet-20240620-v1:0

openai/gpt-4o

azure/gpt-4o

Other popular models include:

anthropic/claude-3-opus-20240229

bedrock/anthropic.claude-3-opus-20240229-v1:0

openai/gpt-4

azure/gpt-4

Popular supported embedding models include:

openai/text-embedding-3-small
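The embedding model is what focused mode (--focused) relies on to keep only the most relevant file chunks. Below is a hedged sketch of the ranking step alone, assuming the query and chunk embeddings were already computed (for example with openai/text-embedding-3-small through LiteLLM). Cosine similarity, the helper names, and the chunk IDs are assumptions for illustration; Arcode's actual scoring may differ.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_chunks(
    query_vec: Sequence[float], chunk_vecs: Dict[str, Sequence[float]], k: int
) -> List[str]:
    """Return the IDs of the k chunks most similar to the query embedding."""
    ranked = sorted(
        chunk_vecs.items(),
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [chunk_id for chunk_id, _ in ranked[:k]]


# Toy 3-dimensional vectors standing in for real embedding output.
chunks = {
    "auth.py#0": [0.9, 0.1, 0.0],
    "db.py#0": [0.1, 0.9, 0.0],
    "auth.py#1": [0.7, 0.3, 0.1],
    "README.md#0": [0.0, 0.1, 0.9],
}
print(top_k_chunks([1.0, 0.0, 0.0], chunks, k=2))  # ['auth.py#0', 'auth.py#1']
```

Here k plays the role of the integer passed to --focused: only the top-ranked chunks would be placed in the prompt.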

Set API keys for the provider(s) you're using. These values should be present in your shell environment, a .env file in the working directory, or the env section of an arcodeconf.yml file located in the working directory.

OPENAI_API_KEY

ANTHROPIC_API_KEY

GEMINI_API_KEY

AZURE_API_KEY

AZURE_API_BASE

AZURE_API_VERSION

AWS_ACCESS_KEY_ID

AWS_SECRET_ACCESS_KEY

AWS_REGION_NAME

usage: arcode [-h] [--dir DIR] [--auto-write AUTO_WRITE] [--focused FOCUSED] [--model MODEL] [--model-embedding MODEL_EMBEDDING]
              [--mode {implement,question}] [--ignore [IGNORE ...]] [--resources [RESOURCES ...]] [--images [IMAGES ...]] [--debug]
              [--models [MODELS]] [--max-estimated-cost MAX_ESTIMATED_COST] [--max-file-size MAX_FILE_SIZE]
              [requirements ...]

positional arguments:
  requirements          Requirements for features to build on the codebase or question to ask about the codebase.

options:
  -h, --help            show this help message and exit
  --dir DIR             The working directory of the codebase, default to current directory.
  --auto-write AUTO_WRITE
                        Whether or not to immediately write the changeset. Useful when piping to arcode, e.g. cat feature.txt |
                        arcode
  --focused FOCUSED     Enable focused mode to limit file context provided based on relevancy using embeddings - accepts an integer
                        containing number of file chunks to limit context to.
  --model MODEL         LLM provider/model to use with LiteLLM, default to openai/gpt-4o.
  --model-embedding MODEL_EMBEDDING
                        LLM provider/model to use for embeddings with LiteLLM, default to openai/text-embedding-3-small.
  --mode {implement,question}
                        Mode for the tool: "implement" for feature building and "question" for asking questions about the codebase.
  --ignore [IGNORE ...]
                        Additional ignore patterns to use when parsing .gitignore
  --resources [RESOURCES ...]
                        List of URLs to fetch and include in the prompt context
  --images [IMAGES ...]
                        List of image file paths to include in the prompt context
  --debug               Enable debug mode for additional output
  --models [MODELS]     List available models. Optionally provide a filter string.
  --max-estimated-cost MAX_ESTIMATED_COST
                        Maximum estimated cost allowed. Actions with a larger estimated cost will not be allowed to execute.
                        (integer or float with up to two decimal places)
  --max-file-size MAX_FILE_SIZE
                        Maximum file size in bytes for files to be included in the prompt.

To install Arcode from the Homebrew tap:

- Ensure Homebrew is installed

- Ensure libmagic is installed (or run brew install libmagic)

- Tap alexdredmon/arcode:

brew tap alexdredmon/arcode

- Install arcode:

brew install arcode

To set up Arcode from source:

- Clone the repository:

git clone https://github.com/alexdredmon/arcode

cd arcode

- Install pyenv (if not already installed):

brew install pyenv

- Install Python 3.9 using pyenv:

pyenv install 3.9

- Set local Python version:

pyenv local 3.9

- Create & activate a virtual environment:

python -m venv venv

source venv/bin/activate

- Install the required dependencies:

pip install -r requirements.txt

- Set your OpenAI API key:

export OPENAI_API_KEY=<your_openai_api_key>

or alternatively set it in a .env file:

OPENAI_API_KEY=<your_openai_api_key>

- Build a standalone executable via:

./scripts/build.sh

- A binary will be created at ./dist/arcode/arcode

- Add the resulting binary's directory to your PATH to run it anywhere:

export PATH="/Users/yourusername/path/to/dist/arcode:$PATH"

To install Arcode via Homebrew from a local build:

- Clone the repository and navigate into the project directory:

git clone https://github.com/alexdredmon/arcode

cd arcode

- Run the build script to generate the standalone executable and Homebrew formula:

./scripts/build.sh

- Copy the generated formula into your local Homebrew formula directory:

mkdir -p $(brew --repository)/Library/Taps/homebrew/homebrew-core/Formula/

cp Formula/arcode.rb $(brew --repository)/Library/Taps/homebrew/homebrew-core/Formula/

- Install the formula:

brew install arcode

To upgrade to the latest version, simply:

brew update

brew upgrade arcode

Run tests via:

./scripts/run_tests.sh

This project is licensed under the MIT License. See the LICENSE file for more details.

Contributions are welcome! Please fork the repository and submit a pull request with your changes.