Please install the latest versions of PyTorch (`torch`), HuggingFace Transformers (`transformers`), HuggingFace Accelerate (`accelerate`), and the OpenAI API package (`openai`). This codebase is tested on `torch==2.1.0.dev20230514+cu118`, `transformers==4.28.1`, `accelerate==0.17.1`, and `openai==0.27.4` with Python 3.9.7.
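The tested setup pins specific versions, including a nightly PyTorch build. If something behaves unexpectedly, it can help to compare your environment against those versions first. The sketch below uses only the standard library's `importlib.metadata`. It is not part of this repository, and the helper names `version_mismatches` and `_installed` are hypothetical.

```python
from importlib import metadata
from typing import Callable, Dict, Optional, Tuple

# The tested configuration listed above.
TESTED_VERSIONS = {
    "torch": "2.1.0.dev20230514+cu118",
    "transformers": "4.28.1",
    "accelerate": "0.17.1",
    "openai": "0.27.4",
}


def _installed(name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None


def version_mismatches(
    tested: Dict[str, str] = TESTED_VERSIONS,
    lookup: Callable[[str], Optional[str]] = _installed,
) -> Dict[str, Tuple[str, Optional[str]]]:
    """Map each package whose installed version differs from the tested one to (tested, installed)."""
    mismatches = {}
    for name, wanted in tested.items():
        found = lookup(name)
        if found != wanted:
            mismatches[name] = (wanted, found)
    return mismatches


if __name__ == "__main__":
    for pkg, (wanted, found) in version_mismatches().items():
        print(f"{pkg}: tested {wanted}, installed {found or 'not installed'}")
```

A mismatch does not mean the code will fail. It only tells you where your setup departs from the tested one.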

- `setup/`: scripts for downloading data and setting environment variables
- `retrieve/`: scripts and files for retrieval and retriever evaluation
  - `configs/`: config files for different retrieval settings
  - `index.py`: indexes datasets before or during the retrieval step
  - `run.py`: runs retrieval; pass a config file
  - `eval.py`: evaluates retrieval results
- `read/`: scripts and files for generation and generation evaluation
  - `configs/`: config files for different generation settings
  - `prompts/`: folder that contains all prompt files
  - `run.py`: runs generation; pass a config file
  - `eval.py`: eval file to evaluate generations
- `tools/`: misc code (generate summaries/snippets, reranking, etc.)

You can download datasets (along with retrieval results) by running the following command:

`bash setup/download_data.sh`

All the data will be stored in `data/`.

Before running any scripts, set up necessary environment variables with:

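`bash setup/set_paths.sh`

This configures where all retrieval and runner outputs are written. By default, everything is written to `data/`, but this can easily be changed. Note that if the script only `export`s variables, running it with `bash` sets them in a child process that exits immediately; in that case, run `source setup/set_paths.sh` instead so the variables persist in your current shell.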
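The variable names that `setup/set_paths.sh` defines are not listed here. The sketch below only illustrates the general pattern of an environment-configured output directory that falls back to `data/`. The variable name `ALCE_OUTPUT_DIR` and the helper `resolve_output_dir` are **hypothetical**; check the script for the real names.

```python
import os
from pathlib import Path
from typing import Mapping, Optional

# HYPOTHETICAL variable name: see setup/set_paths.sh for the ones the repository actually uses.
OUTPUT_ENV_VAR = "ALCE_OUTPUT_DIR"
DEFAULT_OUTPUT_DIR = "data"


def resolve_output_dir(env: Optional[Mapping[str, str]] = None) -> Path:
    """Return the output directory from the environment, falling back to data/ when unset or blank."""
    env = os.environ if env is None else env
    value = env.get(OUTPUT_ENV_VAR, "").strip()
    return Path(value) if value else Path(DEFAULT_OUTPUT_DIR)


if __name__ == "__main__":
    print(resolve_output_dir())
```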

You can run the retrieval step with:

`python retrieve/run.py --config retrieve/configs/{config_name}`
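To run several retrieval settings in one go, a small driver can loop over the config directory. This is a minimal sketch and not part of the repository. `retrieval_commands` and `run_all` are hypothetical helpers. The sketch treats every file in `retrieve/configs/` as a config and assumes it is run from the repository root.

```python
import subprocess
import sys
from pathlib import Path
from typing import List


def retrieval_commands(config_dir: str = "retrieve/configs") -> List[List[str]]:
    """Build one `retrieve/run.py --config <file>` command per file in config_dir, sorted by name."""
    configs = sorted(p for p in Path(config_dir).iterdir() if p.is_file())
    return [[sys.executable, "retrieve/run.py", "--config", str(p)] for p in configs]


def run_all(config_dir: str = "retrieve/configs") -> None:
    """Run every retrieval config in turn, stopping at the first failure."""
    for cmd in retrieval_commands(config_dir):
        print("Running:", " ".join(cmd))
        subprocess.run(cmd, check=True)  # raises CalledProcessError on a non-zero exit code
```

Passing the commands as argument lists rather than shell strings avoids quoting problems with config paths.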

*: ALCE is pronounced /elk/, because ALCE is the Latin word for elk (Europe) or moose (North America).

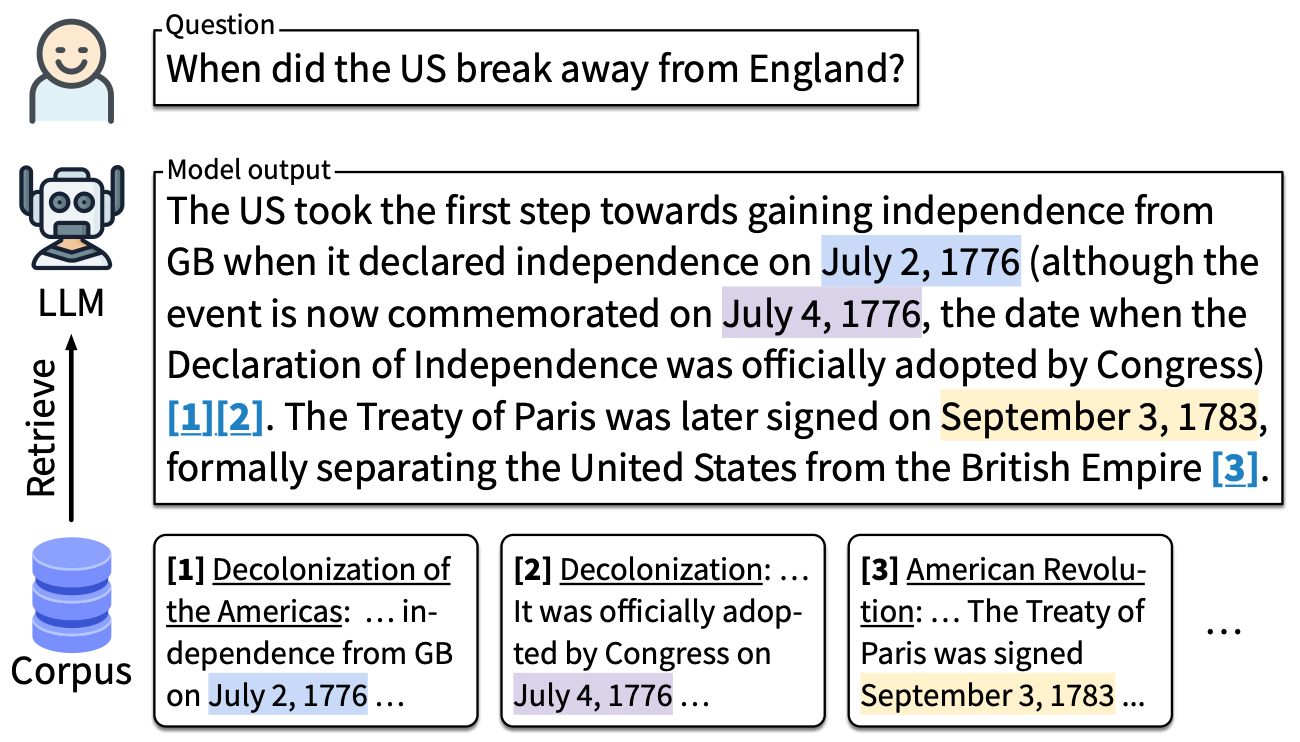

This repository contains the code and data for the paper Enabling Large Language Models to Generate Text with Citations. In this paper, we propose ALCE, a benchmark for Automatic LLMs' Citation Evaluation. ALCE contains three datasets: ASQA, QAMPARI, and ELI5. We provide code for automatically evaluating LLM generations along three dimensions: fluency, correctness, and citation quality. This repository also includes code to reproduce the baselines in our paper.

You can reproduce the baselines from our paper with:

`python run.py --config configs/{config_name}`

You can also override any argument in the config file, or add new arguments, directly from the command line:

`python run.py --config configs/{config_name} --seed 43 --model vicuna-13b`
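This README does not describe how `run.py` merges command-line arguments with the config file. The sketch below only illustrates the general override idea. It assumes the YAML config has already been loaded into a flat dict, for example with PyYAML's `yaml.safe_load`. The names `apply_overrides` and `_coerce` are hypothetical, and this is not the repository's implementation.

```python
from typing import Any, Dict, List


def _coerce(raw: str, current: Any) -> Any:
    """Convert a command-line string to the type of the existing config value."""
    if isinstance(current, bool):  # check bool before int: bool is a subclass of int
        return raw.lower() in {"1", "true", "yes"}
    if isinstance(current, int):
        return int(raw)
    if isinstance(current, float):
        return float(raw)
    return raw  # strings, None, and brand-new keys stay as strings


def apply_overrides(config: Dict[str, Any], argv: List[str]) -> Dict[str, Any]:
    """Return a copy of `config` updated by `--key value` pairs from `argv`.

    Existing keys keep their original type; unknown keys are added as strings.
    """
    merged = dict(config)
    i = 0
    while i < len(argv):
        flag = argv[i]
        if not flag.startswith("--") or i + 1 >= len(argv):
            raise ValueError(f"expected '--key value', got {argv[i:]}")
        key = flag[2:]
        merged[key] = _coerce(argv[i + 1], config.get(key))
        i += 2
    return merged
```

For example, `apply_overrides({"seed": 42, "model": "gpt-3.5-turbo"}, ["--seed", "43", "--model", "vicuna-13b"])` returns a new dict in which `seed` is the integer 43 and `model` is `"vicuna-13b"`. The original dict is left unchanged.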

Config file names follow the pattern `{LLM}_{#demos and #passages}_{retriever}_{method}.yaml`. Method names include:

- `default` corresponds to the Vanilla model in our paper.
- `summary` corresponds to the Summary model.
- `extraction` corresponds to the Snippet model.
- `interact_doc_id` corresponds to the Interact model.
- `interact_search` corresponds to the InlineSearch model.
- `closedbook` corresponds to the ClosedBook model.
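Because method names can contain underscores (for example `interact_search`), splitting a config file name on `_` from the left does not work. The sketch below matches the method suffix first. It is a hypothetical helper, not part of the repository. It assumes the LLM and retriever names contain no underscores and that all four fields are present. It raises `ValueError` for names that do not fit this pattern. The example file names in the tests are made up.

```python
from pathlib import Path
from typing import Dict

# Method suffixes and the corresponding model names in the paper (from the list above).
METHODS = {
    "default": "Vanilla",
    "summary": "Summary",
    "extraction": "Snippet",
    "interact_doc_id": "Interact",
    "interact_search": "InlineSearch",
    "closedbook": "ClosedBook",
}


def parse_config_name(filename: str) -> Dict[str, str]:
    """Split '{LLM}_{#demos and #passages}_{retriever}_{method}.yaml' into its fields."""
    name = Path(filename).name
    if not name.endswith(".yaml"):
        raise ValueError(f"not a .yaml config: {filename}")
    name = name[: -len(".yaml")]
    # Try longer method names first so multi-word methods are matched whole.
    for method in sorted(METHODS, key=len, reverse=True):
        if name.endswith("_" + method):
            rest = name[: -len(method) - 1].split("_")
            break
    else:
        raise ValueError(f"unknown method in {filename}")
    if len(rest) < 3:
        raise ValueError(f"expected LLM, #demos/#passages and retriever in {filename}")
    return {
        "llm": rest[0],
        "demos_passages": "_".join(rest[1:-1]),
        "retriever": rest[-1],
        "method": method,
        "paper_name": METHODS[method],
    }
```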

Our code supports both the OpenAI API and offline HuggingFace models:

- For OpenAI models (for example, ChatGPT), you need to set the environment variables `OPENAI_API_KEY` and `OPENAI_ORG_ID`. If you are using the Azure OpenAI API, you need to set the environment variables `OPENAI_API_KEY` and `OPENAI_API_BASE`, and add the flag `--azure`.
  - Note that in the Azure OpenAI API, ChatGPT's name is different, and you should set it with `--model gpt-35-turbo`.
- For open-source models, set the model name to the input of the HuggingFace models' `.from_pretrained` method. This can be either a local directory (e.g., for the older LLaMA models) or a path on the HuggingFace Hub.
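A missing API variable often only shows up as an error partway through a run. The sketch below performs a quick pre-flight check based on the variables listed above. `missing_openai_env` is a hypothetical helper, not part of the repository.

```python
import os
from typing import List, Mapping, Optional


def missing_openai_env(azure: bool = False, env: Optional[Mapping[str, str]] = None) -> List[str]:
    """List the required OpenAI-related environment variables that are unset or empty."""
    env = os.environ if env is None else env
    if azure:
        required = ["OPENAI_API_KEY", "OPENAI_API_BASE"]  # Azure OpenAI API (used with --azure)
    else:
        required = ["OPENAI_API_KEY", "OPENAI_ORG_ID"]  # standard OpenAI API
    return [name for name in required if not env.get(name)]
```

Calling `missing_openai_env(azure=True)` before launching a run with `--azure` returns an empty list when both Azure variables are set.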

For detailed argument usage, please refer to run.py.

Model outputs, along with the gold answers and run configs, are stored in a JSON file in `result/`.

For closed-book models, you can use `post_hoc_cite.py` to add citations post hoc (using GTR-large). To run post-hoc citation, execute:

`python post_hoc_cite.py --f result/{RESULT JSON FILE NAME} --external_docs data/{CORRESPONDING DATA}`

The output file with post-hoc citations will be stored in `result/` with the suffix `post_hoc_cite.gtr-t5-large-external`.

ALCE evaluation is implemented in `eval.py`.

For ASQA, use the following command:

`python eval.py --f {path/to/result/file} --citations --qa --mauve`

For QAMPARI, use the following command:

`python eval.py --f {path/to/result/file} --citations`

For ELI5, use the following command:

`python eval.py --f {path/to/result/file} --citations --claims_nli --mauve`

The evaluation result will be saved in `result/`, with the same name as the input and the suffix `.score`.
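Each dataset uses a different set of evaluation flags, so it can be convenient to keep the mapping in one place when scripting evaluations. The sketch below encodes the flags from the three commands above. `eval_command` and `score_path` are hypothetical helpers. `score_path` assumes the `.score` suffix is appended to the full input file name (for example, `result/x.json` becomes `result/x.json.score`). Adjust it if your files are named differently.

```python
import sys
from typing import Dict, List

# Evaluation flags for each dataset, taken from the commands above.
EVAL_FLAGS: Dict[str, List[str]] = {
    "asqa": ["--citations", "--qa", "--mauve"],
    "qampari": ["--citations"],
    "eli5": ["--citations", "--claims_nli", "--mauve"],
}


def eval_command(dataset: str, result_file: str) -> List[str]:
    """Build the eval.py command (as an argument list) for one result file of the given dataset."""
    try:
        flags = EVAL_FLAGS[dataset.lower()]
    except KeyError:
        raise ValueError(f"unknown dataset {dataset!r}; expected one of {sorted(EVAL_FLAGS)}") from None
    return [sys.executable, "eval.py", "--f", result_file, *flags]


def score_path(result_file: str) -> str:
    """Expected score file path, ASSUMING '.score' is appended to the full input name."""
    return result_file + ".score"
```

The returned list can be passed directly to `subprocess.run(..., check=True)`.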

The results from our human evaluation (Section 6) are located in the `human_eval` directory. Both the data and the analysis are available; please refer to the directory for details.

If you have any questions related to the code or the paper, feel free to email Tianyu ([email protected]). If you encounter any problems when using the code, or want to report a bug, you can open an issue. Please try to specify the problem with details so we can help you better and quicker!

Please cite our paper if you use ALCE in your work:

@inproceedings{gao2023enabling,

title={Enabling Large Language Models to Generate Text with Citations},

author={Gao, Tianyu and Yen, Howard and Yu, Jiatong and Chen, Danqi},

year={2023},

booktitle={Empirical Methods in Natural Language Processing (EMNLP)},

}