Quilt-LLaVA: Visual Instruction Tuning by Extracting Localized Narratives from Open-Source Histopathology Videos (CVPR24)

We generated spatially grounded visual instruction tuning data from educational YouTube videos to train a large language and vision assistant for histopathology that can localize prominent medical regions and reason toward a diagnosis.

[Paper, Arxiv], [QUILT-LLAVA HF], [QUILT-Instruct], [QUILT-VQA], [QUILT-VQA-RED].

Mehmet Saygin Seyfioglu*, Wisdom Ikezogwo*, Fatemeh Ghezloo*, Ranjay Krishna, Linda Shapiro (*Equal Contribution)

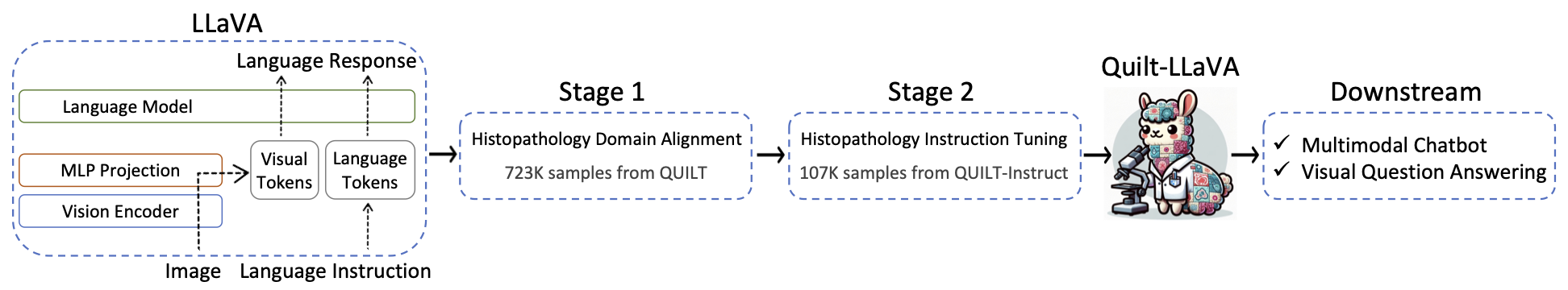

Quilt-LLaVA was initialized with the general-domain LLaVA and then continuously trained in a curriculum learning fashion (first biomedical concept alignment, then full-blown instruction tuning). We evaluated Quilt-LLaVA on standard visual conversation and question answering tasks. We release both the stage 1 (Quilt) and stage 2 (Quilt-Instruct) training sets, as well as our evaluation dataset, Quilt-VQA.

- Quilt-LLaVA is open-sourced under the X release policy, which does not allow any commercial use. Check out the paper.

- Alongside Quilt-LLaVA, we also release Quilt-Instruct, our instruction-tuning data generated from educational videos. It is also protected by the Y license.

- We also release Quilt-VQA, a dataset for evaluating generative multimodal histopathology models.

We have created a grounded image-text dataset from educational histopathology videos on YouTube. The bottom row displays an illustrative example. First, we detect frames that have a stable background. Then we extract the narrators' mouse cursors. Next, we perform spatio-temporal clustering on the mouse pointer locations to obtain dense visual groundings for the narrators' speech. Using this method, we create a grounded image-text dataset, from which we generate Quilt-Instruct to train our visual large language model, Quilt-LLaVA.
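The exact clustering procedure is described in the paper, not here, so the following is only a minimal sketch of the idea under stated assumptions: a greedy pass over cursor samples given as (t, x, y) tuples (seconds, pixels) that starts a new cluster whenever the next sample is too far away in time or too far from the current cluster's centroid. The class and function names and the thresholds max_gap_s and max_dist_px are hypothetical.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class CursorCluster:
    """A group of cursor samples that are close in both time and space."""
    points: list  # (t, x, y) tuples in time order

    @property
    def centroid(self):
        n = len(self.points)
        return (sum(p[1] for p in self.points) / n,
                sum(p[2] for p in self.points) / n)

    @property
    def span(self):
        # (start time, end time) of the cluster
        return (self.points[0][0], self.points[-1][0])


def cluster_cursor_trace(points, max_gap_s=1.0, max_dist_px=50.0):
    """Greedy spatio-temporal clustering of (t, x, y) cursor samples.

    A sample joins the current cluster only if it follows the previous sample
    within max_gap_s seconds AND lies within max_dist_px of the cluster centroid.
    """
    clusters = []
    for t, x, y in sorted(points):
        if clusters:
            current = clusters[-1]
            last_t = current.points[-1][0]
            cx, cy = current.centroid
            if t - last_t <= max_gap_s and hypot(x - cx, y - cy) <= max_dist_px:
                current.points.append((t, x, y))
                continue
        clusters.append(CursorCluster(points=[(t, x, y)]))
    return clusters


# Usage: two bursts of pointing separated by a pause of more than a second.
trace = [(0.0, 100, 100), (0.2, 105, 98), (0.4, 102, 103),
         (3.0, 400, 300), (3.1, 405, 302)]
clusters = cluster_cursor_trace(trace)
for c in clusters:
    print(c.span, c.centroid)
```

Each cluster's centroid and time span could then be paired with the narration spoken during that span. A density-based method such as DBSCAN over scaled (t, x, y) coordinates is a common alternative to this greedy pass.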

| Instruction-Tuning data | Size |
|---|---|
| Quilt-Instruct | 189 MiB |

| Evaluation files | Size |
|---|---|
| Quilt-VQA | 305 MiB |
| Quilt-VQA Red Circle | 95.8 MiB |

| Raw Mouse Cursor Data | Filename | Size |
|---|---|---|
| Cursors | cursor.parquet | 333 MiB |

| Image URLs | Filename | Size |
|---|---|---|
| Images (please request time-limited access and sign a quick Data Use Agreement (DUA)) | quilt_instruct.zip | 25 GiB |

In case you want to generate the instruction tuning data from scratch, please see the quilt-instruct folder.

See the quilt-VQA folder for the prompt and helper code used to generate the Quilt-VQA evaluation data.

If you are using Windows, do NOT proceed; see the instructions here.

- Clone this repository and navigate to the quilt-llava folder

git clone https://github.com/aldraus/quilt-llava.git
cd quilt-llava

- Install the package

conda create -n qllava python=3.10 -y
conda activate qllava
pip install --upgrade pip  # enable PEP 660 support
pip install -e .

- Install additional packages for training

pip install -e ".[train]"
pip install flash-attn --no-build-isolation

Chat about images using Quilt-LLaVA without the Gradio interface. The CLI also supports multiple GPUs and 4-bit and 8-bit quantized inference. With 4-bit quantization, the 7B model uses less than 8GB of VRAM on a single GPU. You can ignore the LlavaLlamaForCausalLM initialization warnings for the vision tower.
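As a rough sanity check on the memory figure above, weight memory scales linearly with the number of bits stored per parameter. This sketch estimates memory for the language model weights alone, assuming a nominal 7 billion parameters; it ignores activations, the KV cache, the vision tower, and quantization overhead, so real usage is higher than the estimate. The helper name is hypothetical.

```python
def weight_memory_gib(num_params: float, bits_per_param: int) -> float:
    """Approximate memory needed to hold the weights alone, in GiB."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 2**30


# Nominal 7B parameters at full (16-bit), 8-bit, and 4-bit precision.
for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_memory_gib(7e9, bits):.2f} GiB")
```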

python -m llava.serve.cli \
    --model-path wisdomik/Quilt-Llava-v1.5-7b \
    --image-file "https://wisdomikezogwo.github.io/images/eval_example_3_.jpg" \
    --load-4bit

For inference on multiple images in a single run, use cli_inference and follow the user prompts:

python -m llava.serve.cli_inference \
    --model-path wisdomik/Quilt-Llava-v1.5-7b \
    --load-8bit

Quilt-LLaVA training consists of two stages: (1) feature alignment stage: use our 723K filtered image-text pairs from QUILT-1M to connect a frozen pretrained vision encoder to a frozen LLM; (2) visual instruction tuning stage: use 107K GPT-generated multimodal instruction-following samples from QUILT-Instruct to teach the model to follow multimodal instructions.

Quilt-LLaVA is trained on 4 A100 GPUs with 80GB memory. To train on fewer GPUs, you can reduce the per_device_train_batch_size and increase the gradient_accumulation_steps accordingly. Always keep the global batch size the same: per_device_train_batch_size x gradient_accumulation_steps x num_gpus.
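A small helper makes this invariant concrete: given a global batch size (256 for pretraining and 128 for finetuning in this README) and your hardware, it solves for gradient_accumulation_steps. The function name and the per-device batch sizes in the examples are hypothetical; the provided scripts set their own values.

```python
def grad_accum_steps(global_batch_size: int, per_device_batch_size: int, num_gpus: int) -> int:
    """Return gradient_accumulation_steps that preserves the global batch size.

    global_batch_size = per_device_train_batch_size * gradient_accumulation_steps * num_gpus
    """
    per_step = per_device_batch_size * num_gpus
    if global_batch_size % per_step != 0:
        raise ValueError(
            f"global batch {global_batch_size} is not divisible by "
            f"{per_device_batch_size} x {num_gpus} GPUs"
        )
    return global_batch_size // per_step


# Finetuning (global batch 128) with a hypothetical per-device batch of 16:
print(grad_accum_steps(128, 16, num_gpus=4))  # 4 GPUs
print(grad_accum_steps(128, 16, num_gpus=2))  # halve the GPUs, double the accumulation
```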

We use hyperparameters similar to Vicuna's for finetuning. The hyperparameters used for both pretraining and finetuning are listed below.

- Pretraining

| Model | Global Batch Size | Learning rate | Epochs | Max length | Weight decay |
|---|---|---|---|---|---|
| Quilt-LLaVA-v1.5-7B | 256 | 1e-3 | 1 | 2048 | 0 |

- Finetuning

| Model | Global Batch Size | Learning rate | Epochs | Max length | Weight decay |
|---|---|---|---|---|---|
| Quilt-LLaVA-v1.5-7B | 128 | 2e-5 | 1 | 2048 | 0 |
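Combining the dataset sizes with the global batch sizes in these tables gives a rough count of optimizer steps per epoch. Both dataset sizes are the approximate figures quoted in this README (723K and 107K), and the count assumes the final partial batch is kept, so treat the results as estimates rather than the exact step counts the training scripts report.

```python
import math


def optimizer_steps(num_samples: int, global_batch_size: int, epochs: int = 1) -> int:
    """Optimizer updates needed to see every sample once per epoch."""
    return math.ceil(num_samples / global_batch_size) * epochs


pretrain_steps = optimizer_steps(723_000, 256)  # ~723K image-text pairs, batch 256, 1 epoch
finetune_steps = optimizer_steps(107_000, 128)  # ~107K instruction samples, batch 128, 1 epoch
print(pretrain_steps, finetune_steps)
```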

Our base model Vicuna v1.5, which is an instruction-tuned chatbot, will be downloaded automatically when you run our provided training scripts. No action is needed.

Please download the 723K filtered subset of image-text pairs from the QUILT-1M dataset, reformatted into the QA style we use in the paper, here.

Pretraining takes around 10 hours for LLaVA-v1.5-7B on 4x A100 (80GB).

Training script with DeepSpeed ZeRO-2: pretrain.sh.

Options to note:

- --mm_projector_type mlp2x_gelu: the two-layer MLP vision-language connector.
- --vision_tower wisdomik/QuiltNet-B-32: CLIP ViT-B/32 224px.
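The mlp2x_gelu connector is a Linear layer, a GELU, and a second Linear layer that map vision-tower features into the language model's embedding space. Below is a minimal NumPy sketch of that forward pass; the initialization and the toy dimensions are illustrative assumptions, not the trained projector. A ViT-B/32 at 224px produces (224/32)^2 = 49 patch tokens; in a full-size setup vision_dim would be the ViT-B width (768) and llm_dim the Vicuna-7B hidden size (4096), but small values keep the example light.

```python
import math

import numpy as np

# Element-wise erf; otypes avoids dtype inference problems on empty inputs.
_erf = np.vectorize(math.erf, otypes=[float])


def gelu(x):
    """Exact (erf-based) GELU, the default form used by torch.nn.GELU."""
    return 0.5 * x * (1.0 + _erf(x / math.sqrt(2.0)))


def init_mlp2x_gelu(vision_dim, llm_dim, seed=0):
    """Illustrative random initialization (hypothetical, not the released weights)."""
    rng = np.random.default_rng(seed)
    return {
        "w1": rng.normal(0.0, 0.02, (vision_dim, llm_dim)),
        "b1": np.zeros(llm_dim),
        "w2": rng.normal(0.0, 0.02, (llm_dim, llm_dim)),
        "b2": np.zeros(llm_dim),
    }


def mlp2x_gelu(features, params):
    """Linear -> GELU -> Linear: (num_tokens, vision_dim) -> (num_tokens, llm_dim)."""
    hidden = gelu(features @ params["w1"] + params["b1"])
    return hidden @ params["w2"] + params["b2"]


# 49 patch tokens from a 224px ViT-B/32, with toy feature sizes.
patch_features = np.ones((49, 8))
params = init_mlp2x_gelu(vision_dim=8, llm_dim=16)
image_tokens = mlp2x_gelu(patch_features, params)
print(image_tokens.shape)
```

The projected tokens are what the language model sees in place of the image; during the feature alignment stage only this connector is trained.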

- Prepare data

Please download the annotations of our instruction tuning data, quilt_instruct_107k.json, and download the images from the Quilt-1M dataset:

- (Rescaled) On Zenodo, you can access the dataset with all images resized to 512x512 px (36 GB).

- (Full) To access the dataset with full-sized images via Google Drive, please request time-limited access through this Google form (110 GB).

After downloading all of them, organize the data in ./playground/data as follows:

├── Quilt-LLaVA-Pretrain
│   └── quilt_1m/
│       └── xxxxxxx.jpg
│       ...
│       └── yyyyyyy.jpg
│   ├── quilt_pretrain.json
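Before launching training, it can help to confirm that every image referenced in the annotation file exists on disk. This sketch assumes the LLaVA-style annotation format: a JSON list of records whose optional "image" key holds a path relative to an image folder, with text-only records omitting that key. The function find_missing_images is hypothetical, and whether the stored paths include the quilt_1m/ prefix should be checked against the downloaded JSON.

```python
import json
from pathlib import Path


def find_missing_images(annotation_file, image_root):
    """Return image paths referenced in the annotation file that do not exist under image_root."""
    records = json.loads(Path(annotation_file).read_text())
    missing = []
    for record in records:
        image = record.get("image")  # text-only records have no "image" key
        if image is not None and not (Path(image_root) / image).is_file():
            missing.append(image)
    return missing
```

For example, calling it on ./playground/data/Quilt-LLaVA-Pretrain/quilt_pretrain.json with image_root set to ./playground/data/Quilt-LLaVA-Pretrain should return an empty list if the layout above matches the paths stored in the JSON.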

- Start training!

You may download our pretrained projectors in Quilt-Llava-v1.5-7b. We do not recommend using legacy projectors: they may have been trained with a different version of the codebase, and if any option differs, the model will not function or train as expected.

Visual instruction tuning takes around 15 hours for LLaVA-v1.5-7B on 4x A100 (80GB).

Training script with DeepSpeed ZeRO-3: finetune.sh.

If you do not have enough GPU memory:

- Use LoRA: finetune_lora.sh. Make sure per_device_train_batch_size * gradient_accumulation_steps is the same as in the provided script for best reproducibility.

- Replace zero3.json with zero3_offload.json, which offloads some parameters to CPU RAM. This slows down training.
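LoRA keeps each pretrained weight matrix W frozen and trains a low-rank update BA, where B is d_out x r and A is r x d_in, so each adapted matrix adds only r(d_out + d_in) trainable parameters. The arithmetic below uses a 4096 x 4096 projection (the hidden size of a 7B LLaMA/Vicuna model) and a hypothetical rank of 128; check finetune_lora.sh for the rank and target modules actually used.

```python
def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one d_out x d_in weight: B (d_out x r) plus A (r x d_in)."""
    return rank * (d_out + d_in)


full = 4096 * 4096                        # parameters in one frozen 4096 x 4096 projection
lora = lora_params(4096, 4096, rank=128)  # rank 128 is an assumed value for illustration
print(f"LoRA trains {lora:,} of {full:,} parameters ({lora / full:.2%}) for this matrix")
```

Because the frozen weights need no gradients or optimizer state, the memory saving during training is larger than the weight count alone suggests.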

If you are interested in finetuning the LLaVA model on your own task/data, please check out Finetune_Custom_Data.md.

New options to note:

- --mm_projector_type mlp2x_gelu: the two-layer MLP vision-language connector.
- --vision_tower openai/clip-vit-large-patch14-336: CLIP ViT-L/14 336px.
- --image_aspect_ratio pad: pads non-square images to square instead of cropping them; this slightly reduces hallucination.
- --group_by_modality_length False: change this to True only when your instruction tuning dataset contains both language-only and multimodal data (e.g. Quilt-LLaVA-Instruct). It makes the training sampler draw each batch from a single modality (either image or language), which we observe speeds up training by ~25% without affecting the final outcome.
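A simplified illustration of what --group_by_modality_length True does: split sample indices by modality, batch each group separately, then shuffle the batches so both modalities still appear throughout training. This is not LLaVA's sampler (which also groups samples by length); the function name and the has_image input are hypothetical, and the sketch only demonstrates the single-modality-per-batch property. One plausible reason for the speedup is that batches then mix fewer sequences of very different lengths, so less padding is processed.

```python
import random


def modality_grouped_batches(has_image, batch_size, seed=0):
    """Return batches of sample indices where each batch holds a single modality.

    has_image[i] is True for multimodal samples and False for language-only ones.
    """
    rng = random.Random(seed)
    groups = {True: [], False: []}
    for idx, flag in enumerate(has_image):
        groups[bool(flag)].append(idx)

    batches = []
    for indices in groups.values():
        rng.shuffle(indices)  # shuffle within a modality
        batches.extend(indices[i:i + batch_size] for i in range(0, len(indices), batch_size))
    rng.shuffle(batches)  # interleave image and language batches across training steps
    return batches


# 7 image-text samples followed by 5 text-only samples, batch size 3.
has_image = [True] * 7 + [False] * 5
for batch in modality_grouped_batches(has_image, batch_size=3):
    print(batch, {has_image[i] for i in batch})
```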

We evaluate models on a diverse set of 4 benchmarks. To ensure reproducibility, we evaluate the models with greedy decoding. We do not use beam search, so that the inference process stays consistent with the real-time outputs of the chat demo.
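Greedy decoding picks the single highest-scoring token at every step, with no sampling and no beams, so rerunning the same prompt yields the same output. The toy sketch below shows the loop; the next_token_logits callable and the toy_model score table are hypothetical stand-ins for a real model. In Hugging Face transformers, the equivalent setting is generate(..., do_sample=False, num_beams=1).

```python
def greedy_decode(next_token_logits, prompt, eos_token, max_new_tokens=32):
    """Append the highest-scoring token at each step until EOS or the length limit."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        logits = next_token_logits(tokens)    # dict mapping candidate token -> score
        best = max(logits, key=logits.get)    # argmax: no sampling, no beam search
        tokens.append(best)
        if best == eos_token:
            break
    return tokens


def toy_model(tokens):
    """Hypothetical next-token scores conditioned only on the last token."""
    table = {
        "<s>": {"tumor": 2.0, "normal": 1.0},
        "tumor": {"cells": 3.0, "</s>": 0.5},
        "cells": {"</s>": 1.0, "cells": 0.2},
    }
    return table[tokens[-1]]


print(greedy_decode(toy_model, ["<s>"], eos_token="</s>"))
```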

See Evaluation.md.

Our GPT-assisted evaluation pipeline for multimodal modeling is provided for a comprehensive understanding of the capabilities of vision-language models. Please see our paper for more details.
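As a hedged sketch of how GPT-assisted reviews are commonly aggregated, assume LLaVA-style scoring in which GPT-4 rates both the reference answer (from answer-file-ref.jsonl) and the model answer (from answer-file-our.jsonl) for each question, and the summary reports the model's mean score relative to the reference's mean score. The function and its input format are hypothetical; see the paper for the exact protocol used by quilt_gpt_summarize.py.

```python
def relative_score(score_pairs):
    """Aggregate review scores as (mean model score / mean reference score) x 100.

    score_pairs: iterable of (reference_score, model_score) tuples, one per question.
    """
    pairs = list(score_pairs)
    if not pairs:
        raise ValueError("no scores to summarize")
    ref_mean = sum(ref for ref, _ in pairs) / len(pairs)
    model_mean = sum(model for _, model in pairs) / len(pairs)
    return round(model_mean / ref_mean * 100, 1)


# Two hypothetical reviews: the reference scored 8 and 10, the model scored 6 and 8.
print(relative_score([(8, 6), (10, 8)]))
```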

- Generate Quilt-LLaVA responses

python model_vqa.py \
    --model-path wisdomik/Quilt-Llava-v1.5-7b \
    --question-file ./playground/data/quilt_gpt/quilt_gpt_questions.jsonl \
    --image-folder ./playground/data/eval/quiltvqa/images \
    --answers-file /path/to/answer-file-our.jsonl

- Evaluate the generated responses. In our case, answer-file-ref.jsonl is the response generated by text-only GPT-4 (0314), with the context captions/boxes provided.

export OPENAI_API_KEY="sk-***********************************"

python llava/eval/quilt_gpt_eval.py \
    --question ./playground/data/quilt_gpt/quilt_gpt_questions.jsonl \
    --context ./playground/data/quilt_gpt/quilt_gpt_captions.jsonl \
    --answer-list \
    /path/to/answer-file-ref.jsonl \
    /path/to/answer-file-our.jsonl \
    --output /path/to/review.json

- Summarize the evaluation results

python llava/eval/quilt_gpt_summarize.py \
    --dir /path/to/review/

If you find Quilt-LLaVA useful for your research and applications, please cite using this BibTeX:

@article{saygin2023quilt,
  title={Quilt-LLaVA: Visual Instruction Tuning by Extracting Localized Narratives from Open-Source Histopathology Videos},
  author={Saygin Seyfioglu, Mehmet and Ikezogwo, Wisdom O and Ghezloo, Fatemeh and Krishna, Ranjay and Shapiro, Linda},
  journal={arXiv e-prints},
  pages={arXiv--2312},
  year={2023}
}

@article{ikezogwo2023quilt,
  title={Quilt-1M: One Million Image-Text Pairs for Histopathology},
  author={Ikezogwo, Wisdom Oluchi and Seyfioglu, Mehmet Saygin and Ghezloo, Fatemeh and Geva, Dylan Stefan Chan and Mohammed, Fatwir Sheikh and Anand, Pavan Kumar and Krishna, Ranjay and Shapiro, Linda},
  journal={arXiv preprint arXiv:2306.11207},
  year={2023}
}

- Our model is based on 🌋 LLaVA: Large Language and Vision Assistant, so the model architecture and training scripts are heavily borrowed from https://github.com/haotian-liu/LLaVA.

- LLaVA-Med: Training a Large Language-and-Vision Assistant for Biomedicine in One Day

Usage and License Notices: The data, code, and model checkpoints are intended and licensed for research use only. They are also subject to additional restrictions dictated by the Terms of Use of QUILT-1M, LLaMA, Vicuna, and GPT-4. The model is made available under the CC BY-NC 3.0 license, and the data and code under CC BY-NC-ND 3.0 with an additional Data Use Agreement (DUA). The data, code, and model checkpoints may be used for non-commercial purposes, and any models trained using the dataset should be used only for research purposes. It is expressly prohibited for models trained on this data to be used in clinical care or for any clinical decision-making purposes.