Guangkai Xu, Yongtao Ge, Mingyu Liu, Chengxiang Fan, Kangyang Xie, Zhiyue Zhao, Hao Chen, Chunhua Shen

Zhejiang University

- 2024.4.30: Release checkpoint weights of surface normal and dichotomous image segmentation.

- 2024.4.7: Add HuggingFace App demo.

- 2024.4.6: Release inference code and depth checkpoint weight of GenPercept in the GitHub repo.

- 2024.3.15: Release arXiv v2 paper, with supplementary material.

- 2024.3.10: Release arXiv v1 paper.

conda create -n genpercept python=3.10

conda activate genpercept

pip install -r requirements.txt

pip install -e .

Download the pre-trained models genpercept_ckpt_v1.zip from BaiduNetDisk (extraction code: g2cm), HuggingFace, or Rec Cloud Disk (to be uploaded). Unzip the package and put the checkpoints under ./weights/v1/.
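Before running inference, it can help to confirm that the checkpoints landed where the scripts expect them. The following is a minimal sketch that assumes only the ./weights/v1/ location stated above. The helper name `find_checkpoint_files` is hypothetical and not part of the GenPercept codebase, and no particular file names inside the archive are assumed.

```python
from pathlib import Path


def find_checkpoint_files(weights_dir="./weights/v1"):
    """Return all files found under weights_dir (hypothetical helper).

    Raises FileNotFoundError if the directory is missing or holds no files,
    which usually means the archive was not unzipped into place.
    """
    root = Path(weights_dir)
    if not root.is_dir():
        raise FileNotFoundError(f"Checkpoint directory not found: {root}")
    # Search recursively, since the archive layout is not assumed here.
    files = sorted(p for p in root.rglob("*") if p.is_file())
    if not files:
        raise FileNotFoundError(f"No checkpoint files under: {root}")
    return files
```

Calling `find_checkpoint_files()` from the repository root either returns the list of files it found or fails early with a message naming the missing directory.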

Then, place images in the ./input/$TASK_TYPE directory and run the following script. The outputs will be saved in ./output/$TASK_TYPE. The $TASK_TYPE can be chosen from depth, normal, and dis.

sh scripts/inference_depth.sh

For surface normal estimation and dichotomous image segmentation, run the following scripts:

bash scripts/inference_normal.sh

bash scripts/inference_dis.sh

Thanks to our one-step perception paradigm, the inference process runs much faster (around 0.4s per image on an A800 GPU).
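The directory convention and the three scripts above can be summarized in a small lookup. This is an illustrative sketch only: the script paths and task names come from the commands above, while `TASK_SCRIPTS` and `plan_inference` are hypothetical names that do not exist in the GenPercept codebase. It also assumes every script can be launched with bash, whereas the depth command above uses sh.

```python
from pathlib import Path

# Script paths are taken from the commands above; the mapping itself is an
# illustrative assumption, not part of the GenPercept codebase.
TASK_SCRIPTS = {
    "depth": "scripts/inference_depth.sh",
    "normal": "scripts/inference_normal.sh",
    "dis": "scripts/inference_dis.sh",
}


def plan_inference(task_type, root="."):
    """Return (input_dir, output_dir, command) for a task type (hypothetical helper)."""
    if task_type not in TASK_SCRIPTS:
        raise ValueError(
            f"Unknown task type {task_type!r}; expected one of {sorted(TASK_SCRIPTS)}"
        )
    base = Path(root)
    input_dir = base / "input" / task_type    # where the images are placed
    output_dir = base / "output" / task_type  # where the results are saved
    command = ["bash", TASK_SCRIPTS[task_type]]
    return input_dir, output_dir, command
```

The returned command can be passed to `subprocess.run(command, check=True)` from the repository root once the input directory contains images.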

GenPercept models can be easily used with torch.hub for quick integration into your Python projects. Here's how to use the models for normal estimation, depth estimation, and segmentation:

import torch

import cv2

import numpy as np

# Load the normal predictor model from torch hub

normal_predictor = torch.hub.load("hugoycj/GenPercept-hub", "GenPercept_Normal", trust_repo=True)

# Load the input image using OpenCV

image = cv2.imread("path/to/your/image.jpg", cv2.IMREAD_COLOR)

# Use the model to infer the normal map from the input image

with torch.inference_mode():

    normal = normal_predictor.infer_cv2(image)

# Save the output normal map to a file

cv2.imwrite("output_normal_map.png", normal)

import torch

import cv2

# Load the depth predictor model from torch hub

depth_predictor = torch.hub.load("hugoycj/GenPercept-hub", "GenPercept_Depth", trust_repo=True)

# Load the input image using OpenCV

image = cv2.imread("path/to/your/image.jpg", cv2.IMREAD_COLOR)

# Use the model to infer the depth map from the input image

with torch.inference_mode():

    depth = depth_predictor.infer_cv2(image)

# Save the output depth map to a file

cv2.imwrite("output_depth_map.png", depth)

import torch

import cv2

# Load the segmentation predictor model from torch hub

seg_predictor = torch.hub.load("hugoycj/GenPercept-hub", "GenPercept_Segmentation", trust_repo=True)

# Load the input image using OpenCV

image = cv2.imread("path/to/your/image.jpg", cv2.IMREAD_COLOR)

# Use the model to infer the segmentation map from the input image

with torch.inference_mode():

    segmentation = seg_predictor.infer_cv2(image)

# Save the output segmentation map to a file

cv2.imwrite("output_segmentation_map.png", segmentation)

- Marigold: Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation. arXiv, GitHub.

- GeoWizard: Unleashing the Diffusion Priors for 3D Geometry Estimation from a Single Image. arXiv, GitHub.

- FrozenRecon: Pose-free 3D Scene Reconstruction with Frozen Depth Models. arXiv, GitHub.

For non-commercial use, this code is released under the LICENSE. For commercial use, please contact Chunhua Shen.

@article{xu2024diffusion,

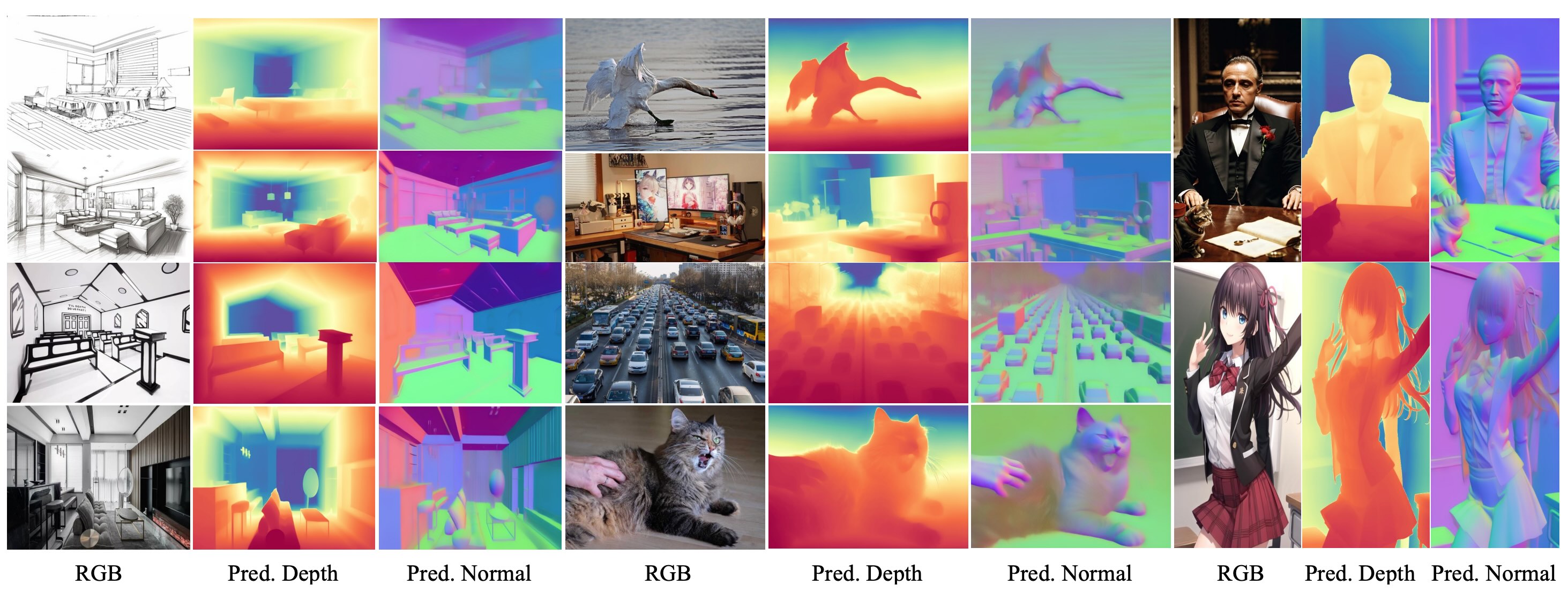

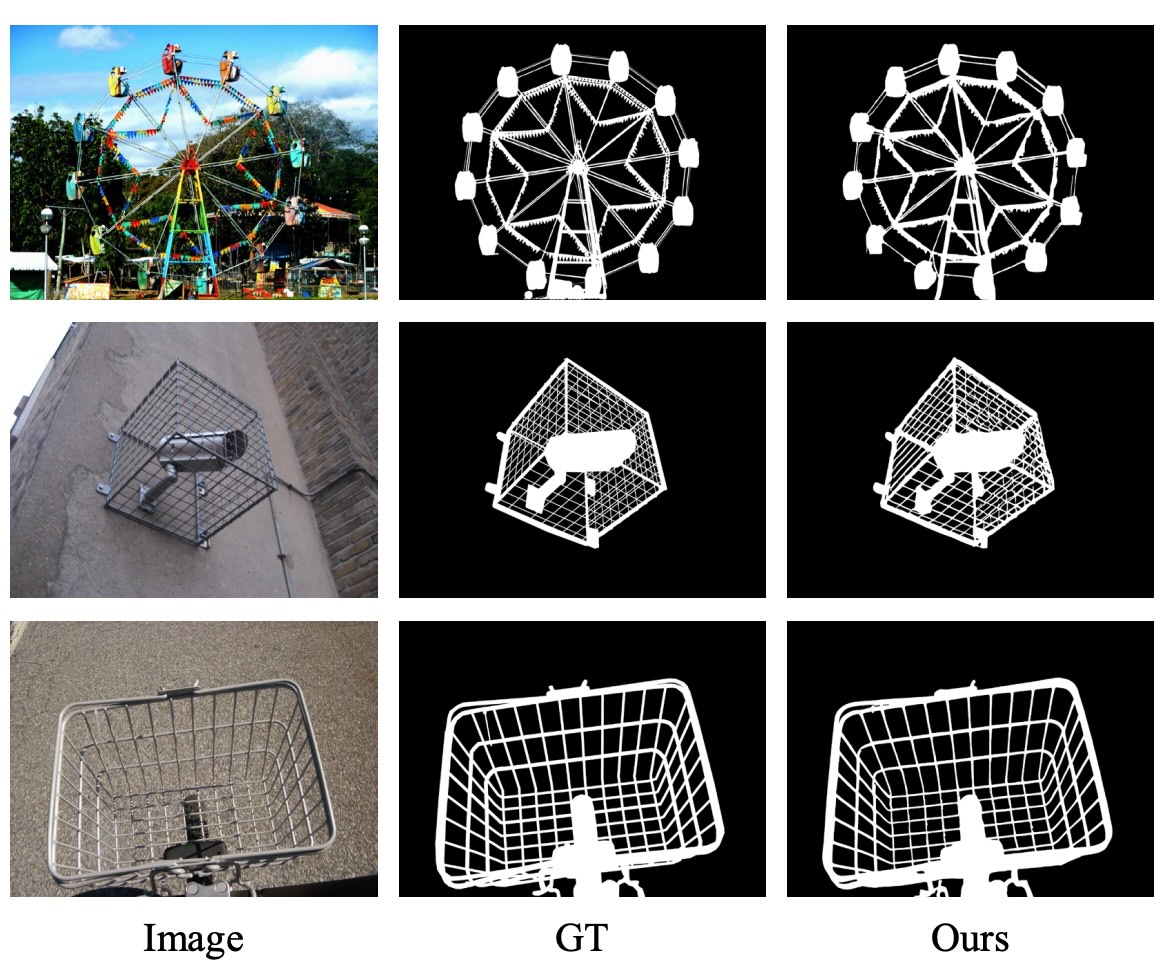

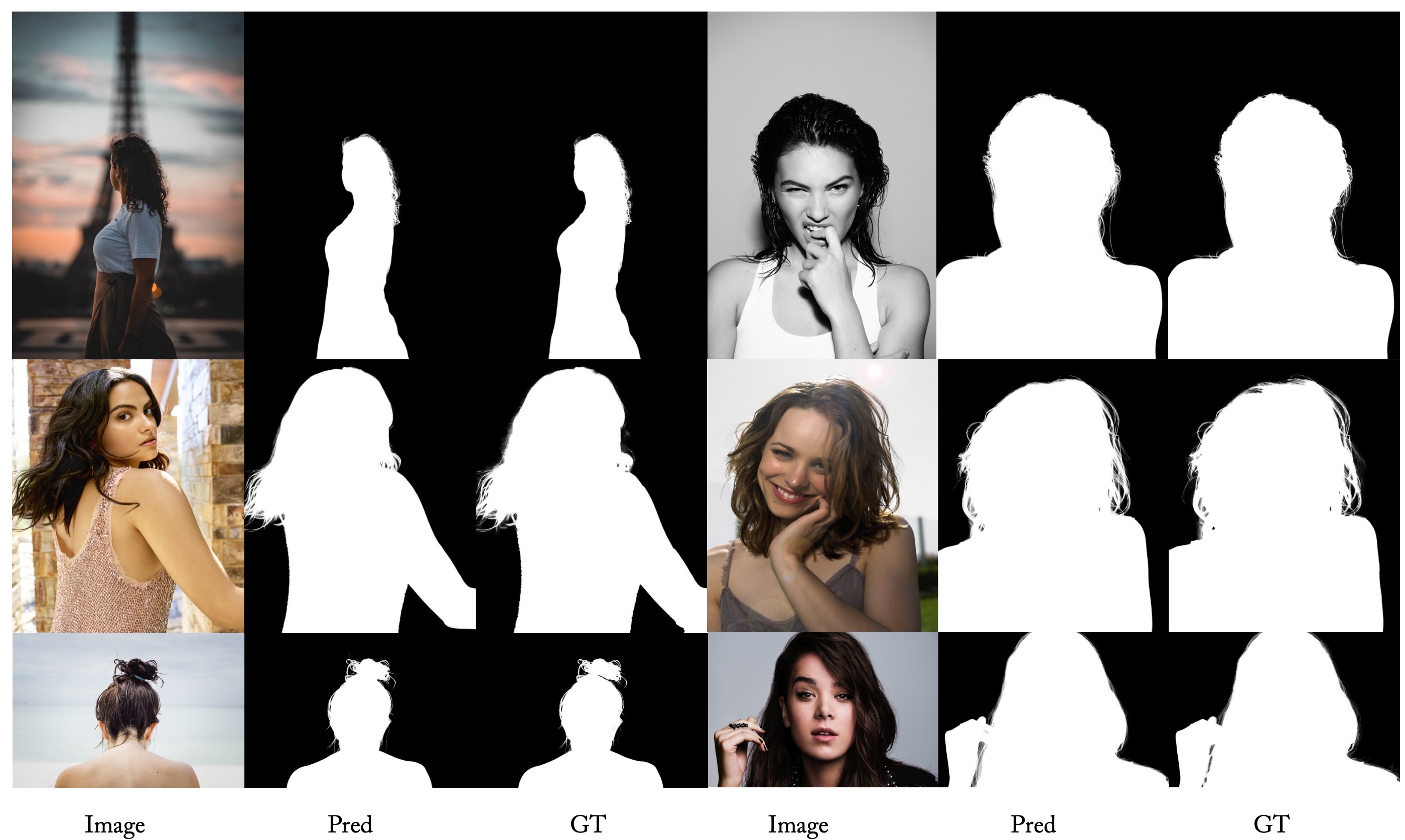

title={Diffusion Models Trained with Large Data Are Transferable Visual Models},

author={Xu, Guangkai and Ge, Yongtao and Liu, Mingyu and Fan, Chengxiang and Xie, Kangyang and Zhao, Zhiyue and Chen, Hao and Shen, Chunhua},

journal={arXiv preprint arXiv:2403.06090},

year={2024}

}